py-smi

Disclaimer: This post has been translated to English using a machine translation model. Please let me know if you find any mistakes.

Want to use nvidia-smi from Python? Here is a library that lets you do it.

Installation

To install it, run:

pip install python-smi

Usage

We import the library

from py_smi import NVML

We create an NVML object, which wraps pynvml (the Python bindings for NVML, the library behind nvidia-smi)

nv = NVML()

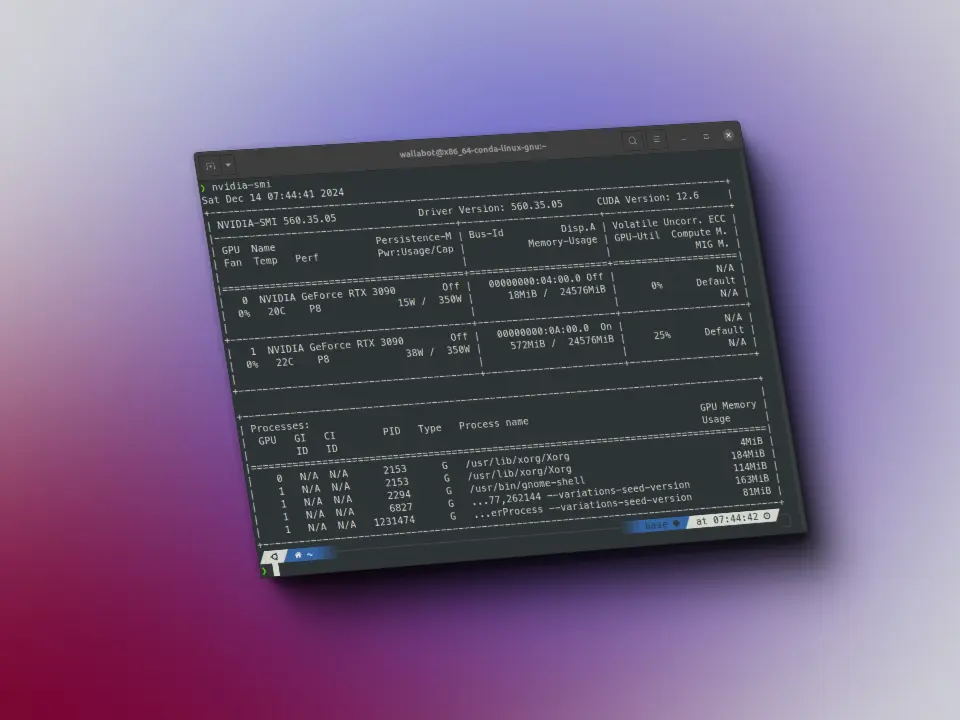

We get the driver and CUDA versions

nv.driver_version, nv.cuda_version

('560.35.03', '12.6')

Since in my case I have two GPUs, I create a variable with the number of GPUs

num_gpus = 2
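Hard-coding the count is fine for a fixed machine. As a minimal sketch, the count could also be queried at runtime through pynvml directly. This assumes the pynvml module is installed; `detect_gpu_count` and its `default` parameter are hypothetical names for illustration, not part of py-smi.

```python
def detect_gpu_count(default=0):
    """Return the number of NVIDIA GPUs that NVML can see, or `default` on failure."""
    try:
        import pynvml  # assumption: the pynvml module is available in this environment

        pynvml.nvmlInit()
        try:
            return int(pynvml.nvmlDeviceGetCount())
        finally:
            # Always release NVML once the count has been read
            pynvml.nvmlShutdown()
    except Exception:
        # pynvml missing, no driver, or no GPU: fall back to the caller's default
        return default


detected = detect_gpu_count(default=0)
print(detected)
```

On a machine without an NVIDIA driver the function simply returns the default, so a script using it does not crash.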

I get the memory of each GPU

[nv.mem(i) for i in range(num_gpus)]

[_Memory(free=24136.6875, total=24576.0, used=439.3125), _Memory(free=23509.0, total=24576.0, used=1067.0)]
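The raw numbers are easier to read as a percentage. The sketch below computes the share of memory in use from readings shaped like the output above. The `Memory` namedtuple is a hypothetical stand-in so the example runs without a GPU; with the library you would pass the objects returned by nv.mem(i), assuming they expose `used` and `total` as the printed output suggests.

```python
from collections import namedtuple

# Hypothetical stand-in for the objects returned by nv.mem(i)
Memory = namedtuple("Memory", ["free", "total", "used"])


def used_percent(mem):
    """Percentage of GPU memory in use, from a reading with .used and .total."""
    return 100.0 * mem.used / mem.total


# Values copied from the output shown above
readings = [
    Memory(free=24136.6875, total=24576.0, used=439.3125),
    Memory(free=23509.0, total=24576.0, used=1067.0),
]
percents = [round(used_percent(m), 2) for m in readings]
print(percents)
```

For these readings that comes to roughly 1.79% and 4.34% of each card's memory.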

The utilization

[nv.utilization(i) for i in range(num_gpus)]

[_Utilization(gpu=0, memory=0, enc=0, dec=0), _Utilization(gpu=0, memory=0, enc=0, dec=0)]

The power used

This one is very useful for me: when I was training a model with both GPUs fully loaded, my computer would sometimes shut down. Looking at the power readings, I see that the second GPU draws a lot, so the cause may be what I already suspected: the power supply.

[nv.power(i) for i in range(num_gpus)]

[_Power(usage=15.382, limit=350.0), _Power(usage=40.573, limit=350.0)]
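To judge whether the power supply is the bottleneck, it helps to look at the combined draw and at how close each card is to its limit. This is a minimal sketch under a few assumptions: `Power` is a hypothetical stand-in for the objects returned by nv.power(i), `power_summary` is an illustrative helper that is not part of the library, and the values are presumably in watts.

```python
from collections import namedtuple

# Hypothetical stand-in for the objects returned by nv.power(i)
Power = namedtuple("Power", ["usage", "limit"])


def power_summary(readings):
    """Return (combined usage, list of usage/limit fractions per GPU)."""
    total = sum(p.usage for p in readings)
    fractions = [p.usage / p.limit for p in readings]
    return total, fractions


# Values copied from the output shown above (idle GPUs)
readings = [Power(usage=15.382, limit=350.0), Power(usage=40.573, limit=350.0)]
total, fractions = power_summary(readings)
print(f"combined draw: {total:.3f}")
print([f"{f:.1%}" for f in fractions])
```

Running the same summary during training, and comparing the combined draw plus the rest of the system against the power supply's rating, would show how much headroom is left.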

The clocks of each GPU

[nv.clocks(i) for i in range(num_gpus)]

[_Clocks(graphics=0, sm=0, mem=405), _Clocks(graphics=540, sm=540, mem=810)]

The PCIe throughput

[nv.pcie_throughput(i) for i in range(num_gpus)]

[_PCIeThroughput(rx=0.0, tx=0.0), _PCIeThroughput(rx=0.1630859375, tx=0.0234375)]

And the processes (I'm not running anything now)

[nv.processes(i) for i in range(num_gpus)]

[[], []]