In this post we will see how to fine-tune small language models, both for text classification and for text generation. First we will do it with the Hugging Face libraries, since Hugging Face has become a major player in the AI ecosystem.

Although the Hugging Face libraries are very useful, it is important to know how training really works and what happens under the hood, so we will then repeat the training for classification and text generation with PyTorch.

Fine-tuning for text classification with Hugging Face

Login

To upload the training result to the Hub we first need to log in, and for that we need a token

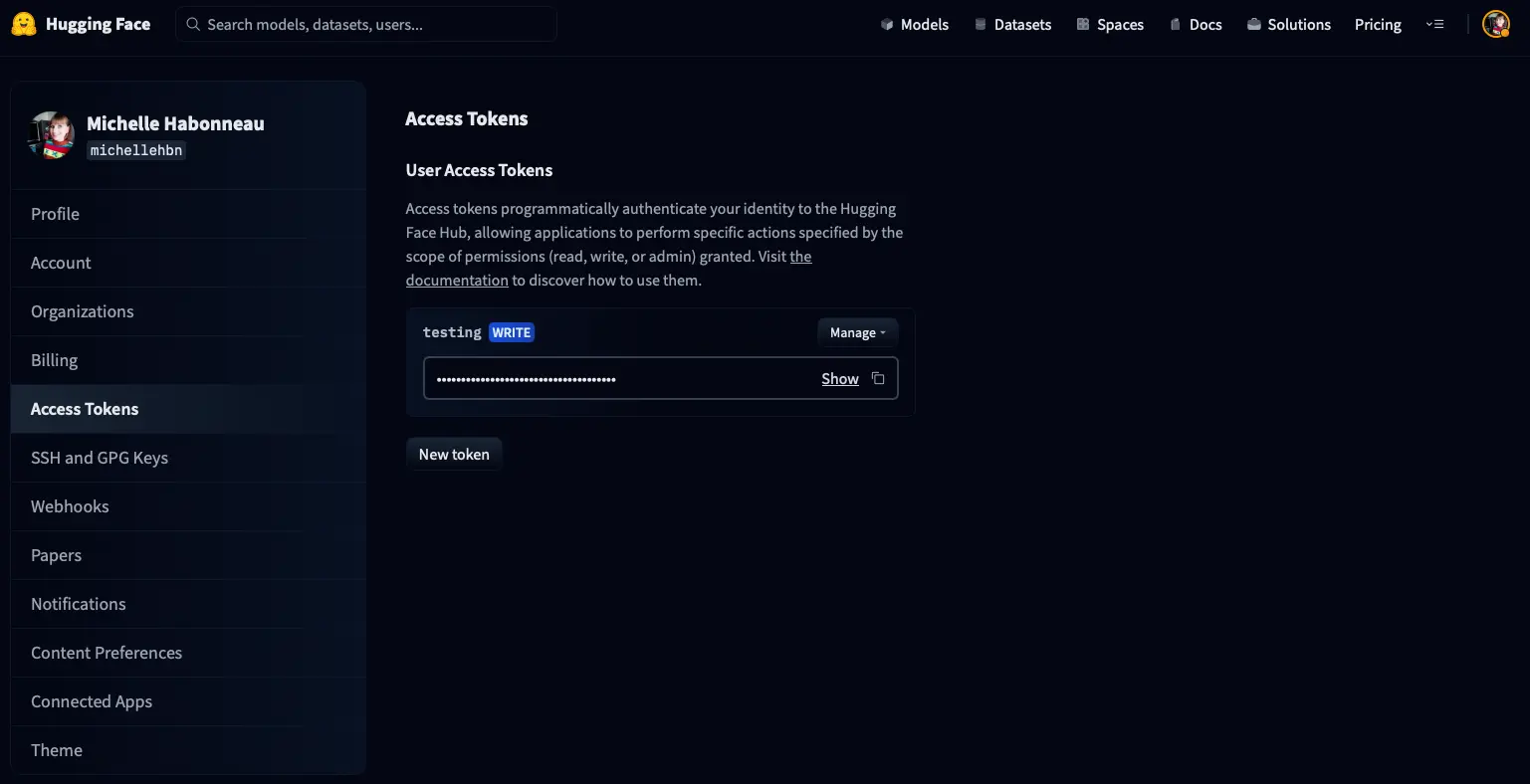

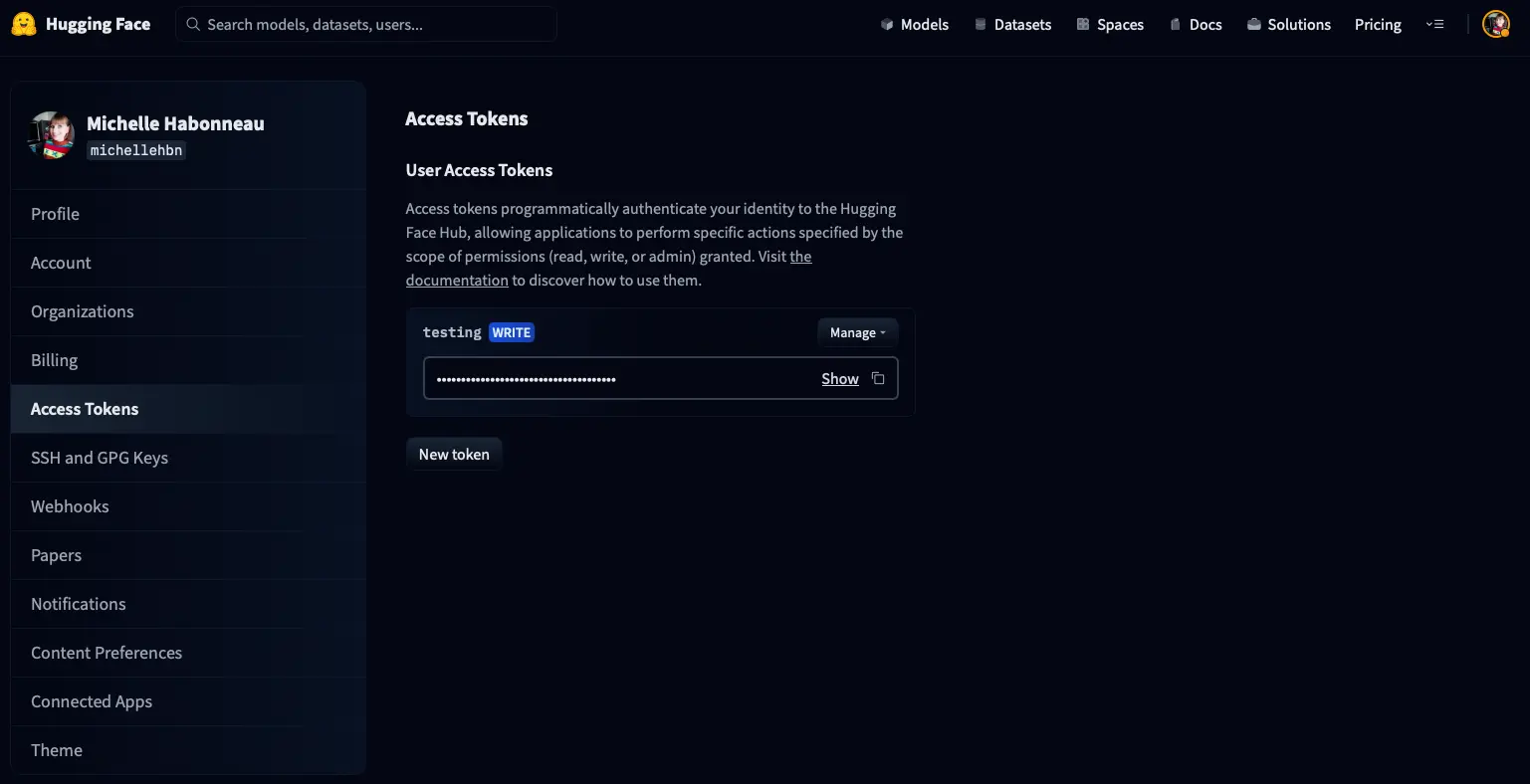

To create a token, go to the settings/tokens page of your account. You will see something like this

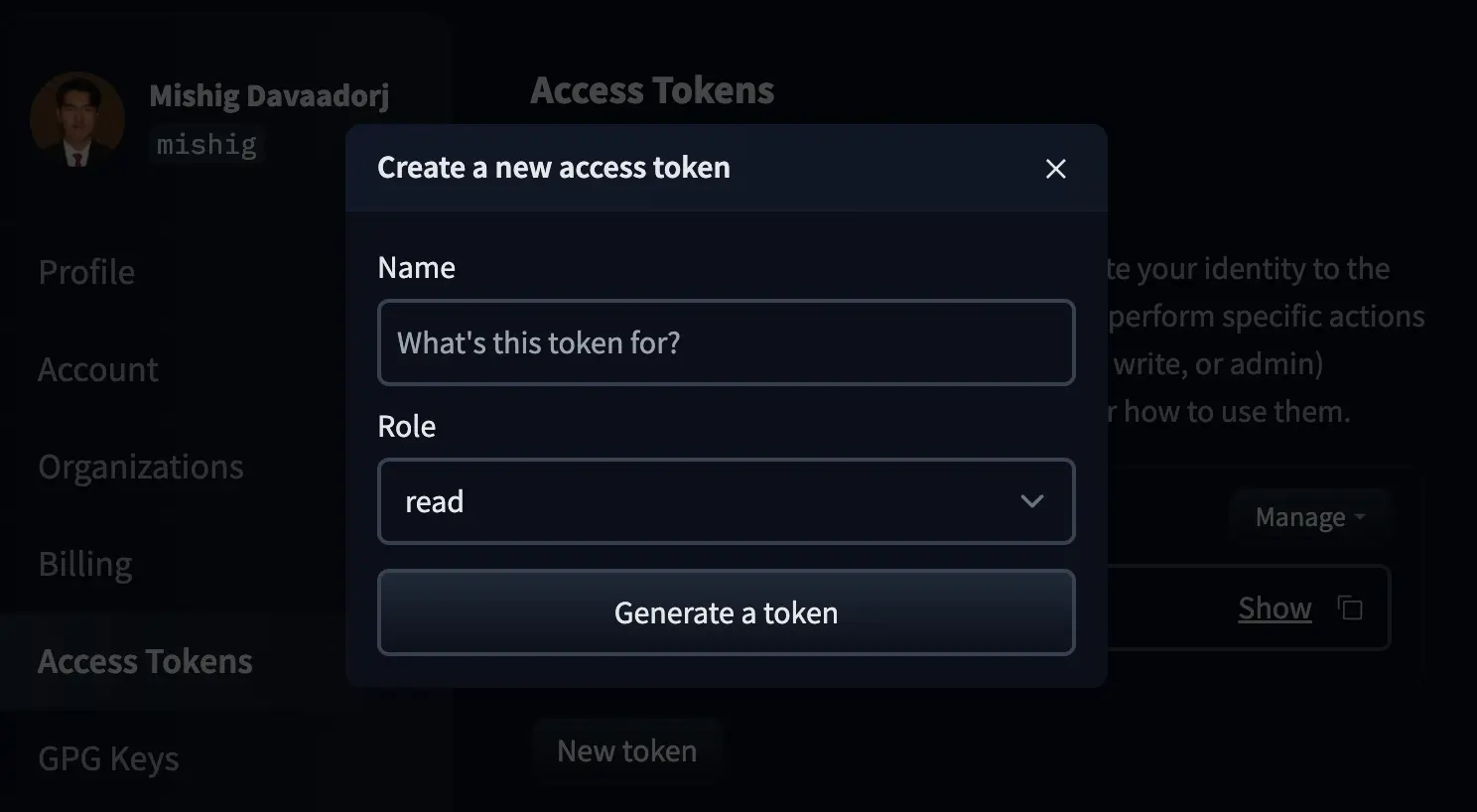

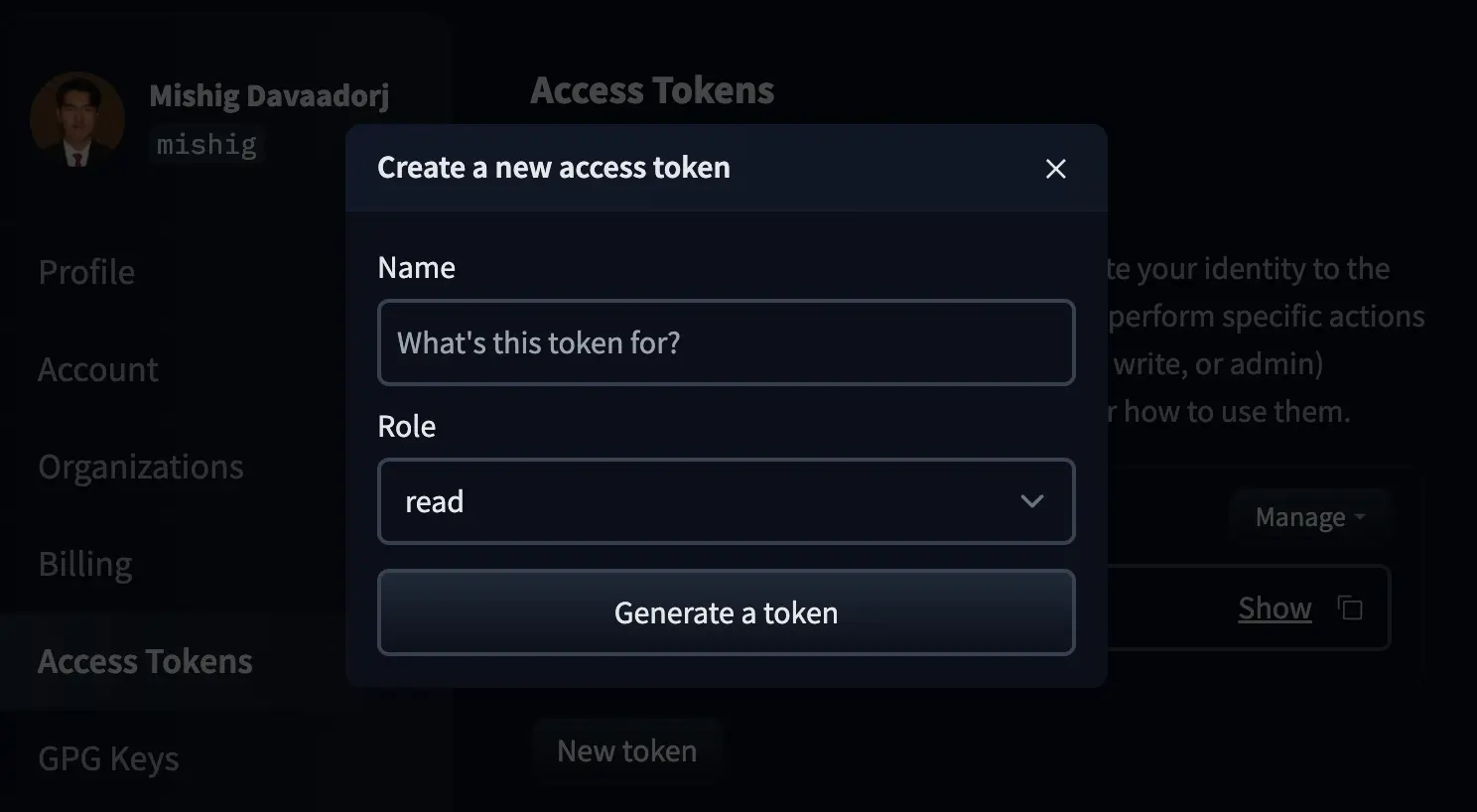

Click New token and a window for creating a new token will appear

Give the token a name and create it with the write role, or with the Fine-grained role, which lets us select exactly which permissions the token will have

Once it is created, copy it and paste it below

    from huggingface_hub import notebook_login

    notebook_login()

Dataset

Now we download a dataset, in this case one of Amazon reviews

    from datasets import load_dataset

    dataset = load_dataset("mteb/amazon_reviews_multi", "en")

Let's take a look at it

    dataset

    DatasetDict({
        train: Dataset({
            features: ['id', 'text', 'label', 'label_text'],
            num_rows: 200000
        })
        validation: Dataset({
            features: ['id', 'text', 'label', 'label_text'],
            num_rows: 5000
        })
        test: Dataset({
            features: ['id', 'text', 'label', 'label_text'],
            num_rows: 5000
        })
    })

We see that it has a training set with 200,000 samples, a validation set with 5,000 samples and a test set with 5,000 samples

Let's look at an example from the training set

    from random import randint

    idx = randint(0, len(dataset['train']) - 1)
    dataset['train'][idx]

    {'id': 'en_0907914',
     'text': 'Mixed with fir it’s passable Not the scent I had hoped for . Love the scent of cedar, but this one missed',
     'label': 3,
     'label_text': '3'}

We see that the review is in the text field and the rating given by the user is in the label field

Since we are going to build a text classification model, we need to know how many classes we have

    num_classes = len(dataset['train'].unique('label'))
    num_classes

    5

We have 5 classes. Now let's look at the minimum value of these classes to find out whether the rating starts at 0 or at 1. For that we use the unique method

    dataset.unique('label')

    {'train': [0, 1, 2, 3, 4],
     'validation': [0, 1, 2, 3, 4],
     'test': [0, 1, 2, 3, 4]}

The minimum value is 0

For training, the labels have to be in a field called labels, whereas in our dataset they are in a field called label, so we create the new field labels with the same value as label

We create a function that does this

    def set_labels(example):
        example['labels'] = example['label']
        return example

We apply the function to the dataset

    dataset = dataset.map(set_labels)

Let's see what the dataset looks like now

    dataset['train'][idx]

    {'id': 'en_0907914',
     'text': 'Mixed with fir it’s passable Not the scent I had hoped for . Love the scent of cedar, but this one missed',
     'label': 3,
     'label_text': '3',
     'labels': 3}

Tokenizer

Since the reviews in the dataset are text, we need to tokenize them so we can feed the tokens to the model

    from transformers import AutoTokenizer

    checkpoint = "openai-community/gpt2"
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)

Now we create a function to tokenize the text. We make every sequence the same length, so the tokenizer truncates when necessary and adds padding tokens when necessary. We also tell it to return PyTorch tensors

We set the length of each sequence to 768 tokens. Note that this is a sequence length, not the 768-dimensional embedding size of the small GPT2 model that we saw in the GPT2 post: the two numbers are independent. The actual limit on sequence length is GPT2's context window of 1024 positions, so 768 tokens fit

    def tokenize_function(examples):
        return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

Let's try tokenizing a text

    tokens = tokenize_function(dataset['train'][idx])

    ---------------------------------------------------------------------------
    ValueError                                Traceback (most recent call last)
    Cell In[11], line 1
    ----> 1 tokens = tokenize_function(dataset['train'][idx])

    Cell In[10], line 2, in tokenize_function(examples)
          1 def tokenize_function(examples):
    ----> 2     return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

    (... frames inside transformers/tokenization_utils_base.py omitted ...)

    ValueError: Asking to pad but the tokenizer does not have a padding token. Please select a token to use as `pad_token` `(tokenizer.pad_token = tokenizer.eos_token e.g.)` or add a new pad token via `tokenizer.add_special_tokens({'pad_token': '[PAD]'})`.

We get an error because the GPT2 tokenizer has no padding token and asks us to assign one. It also suggests doing tokenizer.pad_token = tokenizer.eos_token, so that is what we do

    tokenizer.pad_token = tokenizer.eos_token

We try the tokenization function again

    tokens = tokenize_function(dataset['train'][idx])
    tokens['input_ids'].shape, tokens['attention_mask'].shape

(torch.Size([1, 768]), torch.Size([1, 768]))
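To make the effect of padding="max_length" and truncation=True concrete, here is a minimal plain-Python sketch of what happens to a list of token ids. It is an illustration under simplified assumptions, not the tokenizer's actual implementation: pad_and_truncate is a hypothetical helper and the token ids are made up, except 50256, which is GPT2's end-of-text id and is reused here as the padding id.

```python
EOS_TOKEN_ID = 50256  # GPT2 end-of-text id, reused here as the padding id


def pad_and_truncate(token_ids, max_length, pad_id=EOS_TOKEN_ID):
    """Hypothetical helper: right-pad or truncate to exactly max_length."""
    kept = token_ids[:max_length]                    # truncation
    n_pad = max_length - len(kept)                   # missing positions
    input_ids = kept + [pad_id] * n_pad              # padding on the right
    attention_mask = [1] * len(kept) + [0] * n_pad   # 0 marks padding
    return {"input_ids": input_ids, "attention_mask": attention_mask}


short_seq = pad_and_truncate([11, 22, 33, 44], max_length=6)
long_seq = pad_and_truncate(list(range(10)), max_length=6)

print(short_seq["input_ids"])       # [11, 22, 33, 44, 50256, 50256]
print(short_seq["attention_mask"])  # [1, 1, 1, 1, 0, 0]
print(long_seq["input_ids"])        # [0, 1, 2, 3, 4, 5]
```

The attention mask is what lets the model ignore the padded positions, even though they hold the same id as a real end-of-text token.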

Now that we have checked that the function tokenizes correctly, we apply it to the dataset, and we do it in batches so that it runs faster

We also take the opportunity to remove the columns we are not going to need

    dataset = dataset.map(tokenize_function, batched=True, remove_columns=['text', 'label', 'id', 'label_text'])
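With batched=True, map calls the function with a dictionary of lists (one list per column) instead of a single example, which is why tokenize_function can hand examples["text"] straight to the tokenizer. A rough plain-Python sketch of that calling convention follows. map_batched and count_words are hypothetical stand-ins, and the real map does much more than this.

```python
def map_batched(rows, function, batch_size=2, remove_columns=()):
    """Hypothetical stand-in for Dataset.map(function, batched=True)."""
    output = []
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        # The function receives one dict of lists, not a list of dicts
        batch = {key: [row[key] for row in chunk] for key in chunk[0]}
        batch.update(function(batch))
        for column in remove_columns:
            batch.pop(column, None)
        # Back to one dict per example
        for i in range(len(chunk)):
            output.append({key: values[i] for key, values in batch.items()})
    return output


def count_words(examples):
    # Toy replacement for the tokenizer: one value per text in the batch
    return {"n_words": [len(text.split()) for text in examples["text"]]}


rows = [
    {"text": "great product", "labels": 4},
    {"text": "broke after one day", "labels": 0},
    {"text": "it is fine", "labels": 2},
]
result = map_batched(rows, count_words, remove_columns=["text"])
print(result)
```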

Let's see what the dataset looks like now

    dataset

    DatasetDict({
        train: Dataset({
            features: ['labels', 'input_ids', 'attention_mask'],
            num_rows: 200000
        })
        validation: Dataset({
            features: ['labels', 'input_ids', 'attention_mask'],
            num_rows: 5000
        })
        test: Dataset({
            features: ['labels', 'input_ids', 'attention_mask'],
            num_rows: 5000
        })
    })

We see that we have the fields 'labels', 'input_ids' and 'attention_mask', which is what we need for training

Model

We instantiate a model for sequence classification and tell it how many classes we have

    from transformers import AutoModelForSequenceClassification

    model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=num_classes)

    Some weights of GPT2ForSequenceClassification were not initialized from the model checkpoint at openai-community/gpt2 and are newly initialized: ['score.weight']
    You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

It tells us that the weights of the score layer have been randomly initialized and that we have to train them. Let's see why this happens

The GPT2 model for text generation is this one

    from transformers import AutoModelForCausalLM

    casual_model = AutoModelForCausalLM.from_pretrained(checkpoint)

Let's look at its architecture

    casual_model

    GPT2LMHeadModel(
      (transformer): GPT2Model(
        (wte): Embedding(50257, 768)
        (wpe): Embedding(1024, 768)
        (drop): Dropout(p=0.1, inplace=False)
        (h): ModuleList(
          (0-11): 12 x GPT2Block(
            (ln_1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
            (attn): GPT2Attention(
              (c_attn): Conv1D()
              (c_proj): Conv1D()
              (attn_dropout): Dropout(p=0.1, inplace=False)
              (resid_dropout): Dropout(p=0.1, inplace=False)
            )
            (ln_2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
            (mlp): GPT2MLP(
              (c_fc): Conv1D()
              (c_proj): Conv1D()
              (act): NewGELUActivation()
              (dropout): Dropout(p=0.1, inplace=False)
            )
          )
        )
        (ln_f): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
      )
      (lm_head): Linear(in_features=768, out_features=50257, bias=False)
    )

And now the architecture of the model we are going to use to classify the reviews

    model

    GPT2ForSequenceClassification(
      (transformer): GPT2Model(
        (wte): Embedding(50257, 768)
        (wpe): Embedding(1024, 768)
        (drop): Dropout(p=0.1, inplace=False)
        (h): ModuleList(
          (0-11): 12 x GPT2Block(
            (ln_1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
            (attn): GPT2Attention(
              (c_attn): Conv1D()
              (c_proj): Conv1D()
              (attn_dropout): Dropout(p=0.1, inplace=False)
              (resid_dropout): Dropout(p=0.1, inplace=False)
            )
            (ln_2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
            (mlp): GPT2MLP(
              (c_fc): Conv1D()
              (c_proj): Conv1D()
              (act): NewGELUActivation()
              (dropout): Dropout(p=0.1, inplace=False)
            )
          )
        )
        (ln_f): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
      )
      (score): Linear(in_features=768, out_features=5, bias=False)
    )

There are two things to mention here

- The first is that in both models the first layer has dimensions 50257x768, which corresponds to the 50257 possible tokens of the GPT2 vocabulary and the 768 dimensions of the embedding. The positional embedding wpe has dimensions 1024x768, which means GPT2 accepts sequences of up to 1024 positions, so the 768 tokens we padded the reviews to fit within that limit

- The second is that the causal model (the text-generation one) ends with a Linear layer that outputs 50257 values: it is in charge of predicting the next token and gives a score to each possible token. The classification model instead ends with a Linear layer that outputs only 5 values, one per class, from which we get the probability that the review belongs to each class

That is why we got the message saying that the weights of the score layer had been randomly initialized: the transformers library has removed the 768x50257 Linear layer and added a 768x5 Linear layer, initialized it with random values, and we have to train it for our particular problem
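The size difference between the two heads is easy to quantify from the shapes printed above. A small sketch, using only the dimensions shown in the two architectures (both layers have bias=False, so only the weights count):

```python
hidden_size = 768      # embedding dimension of the small GPT2 model
vocab_size = 50257     # out_features of lm_head
num_classes = 5        # out_features of score

lm_head_weights = hidden_size * vocab_size   # Linear(768 -> 50257), no bias
score_weights = hidden_size * num_classes    # Linear(768 -> 5), no bias

print(lm_head_weights)  # 38597376
print(score_weights)    # 3840
```

Only those 3840 weights start from random values. The rest of the network starts from the pretrained checkpoint.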

We delete the causal model, since we are not going to use it

    del casual_model

Trainer

Now let's configure the training arguments

    from transformers import TrainingArguments

    metric_name = "accuracy"
    model_name = "GPT2-small-finetuned-amazon-reviews-en-classification"
    LR = 2e-5
    BS_TRAIN = 28
    BS_EVAL = 40
    EPOCHS = 3
    WEIGHT_DECAY = 0.01

    training_args = TrainingArguments(
        model_name,
        eval_strategy="epoch",
        save_strategy="epoch",
        learning_rate=LR,
        per_device_train_batch_size=BS_TRAIN,
        per_device_eval_batch_size=BS_EVAL,
        num_train_epochs=EPOCHS,
        weight_decay=WEIGHT_DECAY,
        lr_scheduler_type="cosine",
        warmup_ratio = 0.1,
        fp16=True,
        load_best_model_at_end=True,
        metric_for_best_model=metric_name,
        push_to_hub=True,
    )
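The arguments lr_scheduler_type="cosine" and warmup_ratio=0.1 mean that the learning rate ramps up linearly during the first 10% of the steps and then decays following half a cosine. The sketch below reproduces that shape in plain Python so the numbers can be inspected. It is not the library's scheduler: learning_rate_at is a hypothetical helper, and it assumes a single device and no gradient accumulation, so the steps per epoch are ceil(200000 / 28).

```python
import math

LR = 2e-5
BS_TRAIN = 28
EPOCHS = 3
WARMUP_RATIO = 0.1
NUM_TRAIN_SAMPLES = 200_000

steps_per_epoch = math.ceil(NUM_TRAIN_SAMPLES / BS_TRAIN)
total_steps = steps_per_epoch * EPOCHS
warmup_steps = math.ceil(total_steps * WARMUP_RATIO)


def learning_rate_at(step):
    """Hypothetical helper: linear warmup followed by cosine decay to zero."""
    if step < warmup_steps:
        return LR * step / warmup_steps
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return LR * 0.5 * (1.0 + math.cos(math.pi * progress))


print(total_steps, warmup_steps)  # 21429 2143
for step in (0, warmup_steps, total_steps // 2, total_steps):
    print(step, f"{learning_rate_at(step):.2e}")
```

Under those assumptions the total of 21429 steps agrees with the global_step that trainer.train() reports at the end of training.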

We define a metric for the validation dataloader

    import numpy as np
    from evaluate import load

    metric = load("accuracy")

    def compute_metrics(eval_pred):
        print(eval_pred)
        predictions, labels = eval_pred
        predictions = np.argmax(predictions, axis=1)
        return metric.compute(predictions=predictions, references=labels)
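compute_metrics receives the raw logits for every validation example together with the true labels, takes the argmax of each row and compares it with the label. A plain-Python sketch of the same computation on made-up logits (three classes for brevity), without NumPy or evaluate. accuracy_from_logits is a hypothetical helper.

```python
def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy_from_logits(logits, labels):
    predictions = [argmax(row) for row in logits]
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return {"accuracy": correct / len(labels)}


# Made-up logits for 4 examples and 3 classes
logits = [
    [2.0, 0.1, -1.0],   # predicted class 0
    [0.3, 0.2, 1.5],    # predicted class 2
    [-0.5, 0.9, 0.0],   # predicted class 1
    [1.2, 1.1, 0.4],    # predicted class 0
]
labels = [0, 2, 1, 1]

print(accuracy_from_logits(logits, labels))  # {'accuracy': 0.75}
```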

Now we define the trainer

    from transformers import Trainer

    trainer = Trainer(
        model,
        training_args,
        train_dataset=dataset['train'],
        eval_dataset=dataset['validation'],
        tokenizer=tokenizer,
        compute_metrics=compute_metrics,
    )

We train

    trainer.train()

0%| | 0/600000 [00:00<?, ?it/s]

    ---------------------------------------------------------------------------
    ValueError                                Traceback (most recent call last)
    Cell In[21], line 1
    ----> 1 trainer.train()

    (... frames inside transformers/trainer.py, accelerate/data_loader.py, torch/utils/data, transformers/data/data_collator.py and transformers/tokenization_utils_base.py omitted ...)

    ValueError: You should supply an encoding or a list of encodings to this method that includes input_ids, but you provided ['label', 'labels']

Training fails again. The model also needs a padding token (GPT2ForSequenceClassification cannot handle batches larger than 1 without one), so we assign it just as we did with the tokenizer

    model.config.pad_token_id = model.config.eos_token_id
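The classification model cares about pad_token_id because GPT2ForSequenceClassification classifies from the hidden state of the last non-padding token of each sequence, so it has to know which id is padding. A simplified plain-Python sketch of that selection follows. last_real_token_index is a hypothetical helper, not the library's code, and the token ids are made up except for the padding id.

```python
PAD_TOKEN_ID = 50256  # GPT2 end-of-text id, used as padding in this post


def last_real_token_index(input_ids, pad_token_id=PAD_TOKEN_ID):
    """Hypothetical helper: position of the last token that is not padding."""
    for position in range(len(input_ids) - 1, -1, -1):
        if input_ids[position] != pad_token_id:
            return position
    return 0  # degenerate case: the row contains only padding


batch = [
    [11, 22, 33, 50256, 50256],   # 3 real tokens followed by padding
    [44, 55, 66, 77, 88],         # no padding
]
print([last_real_token_index(row) for row in batch])  # [2, 4]
```

The hidden state at that position is what the score layer turns into the 5 class logits.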

We recreate the training arguments and the trainer with the model, which now has a padding token, and train again

    training_args = TrainingArguments(
        model_name,
        eval_strategy="epoch",
        save_strategy="epoch",
        learning_rate=LR,
        per_device_train_batch_size=BS_TRAIN,
        per_device_eval_batch_size=BS_EVAL,
        num_train_epochs=EPOCHS,
        weight_decay=WEIGHT_DECAY,
        lr_scheduler_type="cosine",
        warmup_ratio = 0.1,
        fp16=True,
        load_best_model_at_end=True,
        metric_for_best_model=metric_name,
        push_to_hub=True,
        logging_dir="./runs",
    )

    trainer = Trainer(
        model,
        training_args,
        train_dataset=dataset['train'],
        eval_dataset=dataset['validation'],
        tokenizer=tokenizer,
        compute_metrics=compute_metrics,
    )

Now that we have seen that everything is fine, we can train

    trainer.train()

    <transformers.trainer_utils.EvalPrediction object at 0x782767ea1450>
    <transformers.trainer_utils.EvalPrediction object at 0x782767eeefe0>
    <transformers.trainer_utils.EvalPrediction object at 0x782767eecfd0>

TrainOutput(global_step=21429, training_loss=0.7846888848762739, metrics={'train_runtime': 26367.7801, 'train_samples_per_second': 22.755, 'train_steps_per_second': 0.813, 'total_flos': 2.35173445632e+17, 'train_loss': 0.7846888848762739, 'epoch': 3.0})

Evaluation

Once trained, we evaluate on the test dataset

    trainer.evaluate(eval_dataset=dataset['test'])

<transformers.trainer_utils.EvalPrediction object at 0x7826ddfded40>

{'eval_loss': 0.7973636984825134,'eval_accuracy': 0.6626,'eval_runtime': 76.3016,'eval_samples_per_second': 65.529,'eval_steps_per_second': 1.638,'epoch': 3.0}

Publishing the model

Our model is trained and we can share it with the world, so first we create a **model card**

    trainer.create_model_card()

And now we can publish it. Since the first thing we did was log in to the Hugging Face Hub, we can upload it to our Hub without any problem

    trainer.push_to_hub()

Using the model

We free up as much memory as possible

    import torch
    import gc

    def clear_hardwares():
        torch.clear_autocast_cache()
        torch.cuda.ipc_collect()
        torch.cuda.empty_cache()
        gc.collect()

    clear_hardwares()
    clear_hardwares()

Since we have uploaded the model to our Hub, we can download it and use it

    from transformers import pipeline

    user = "maximofn"
    checkpoints = f"{user}/{model_name}"
    task = "text-classification"
    classifier = pipeline(task, model=checkpoints, tokenizer=checkpoints)

Now, if we want it to return the probability of every class, we simply call the classifier we have just instantiated with the parameter top_k=None

    labels = classifier("I love this product", top_k=None)
    labels

    [{'label': 'LABEL_4', 'score': 0.8253807425498962},
     {'label': 'LABEL_3', 'score': 0.15411493182182312},
     {'label': 'LABEL_2', 'score': 0.013907806016504765},
     {'label': 'LABEL_0', 'score': 0.003939222544431686},
     {'label': 'LABEL_1', 'score': 0.0026572425849735737}]

If we only want the class with the highest probability, we do the same with the parameter top_k=1

    label = classifier("I love this product", top_k=1)
    label

[{'label': 'LABEL_4', 'score': 0.8253807425498962}]

And if we want n classes, we do the same with the parameter top_k=n

    two_labels = classifier("I love this product", top_k=2)
    two_labels

[{'label': 'LABEL_4', 'score': 0.8253807425498962},{'label': 'LABEL_3', 'score': 0.15411493182182312}]
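The scores returned by the pipeline behave like a softmax over the five logits of the score layer, sorted from highest to lowest and cut to top_k entries. A plain-Python sketch with made-up logits follows. top_k_labels is a hypothetical helper, and the LABEL_i names simply mimic the pipeline output shown above.

```python
import math


def softmax(logits):
    largest = max(logits)
    exps = [math.exp(x - largest) for x in logits]   # shifted for stability
    total = sum(exps)
    return [e / total for e in exps]


def top_k_labels(logits, top_k=None):
    """Hypothetical helper: sorted label/score pairs, like the pipeline output."""
    scores = softmax(logits)
    ranked = sorted(
        ({"label": f"LABEL_{i}", "score": s} for i, s in enumerate(scores)),
        key=lambda item: item["score"],
        reverse=True,
    )
    return ranked if top_k is None else ranked[:top_k]


logits = [-2.0, -2.5, -0.5, 1.5, 3.0]   # made-up values, one per class
print([item["label"] for item in top_k_labels(logits)])
print(top_k_labels(logits, top_k=1)[0]["label"])
```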

We can also try the model with AutoModelForSequenceClassification and AutoTokenizer

    from transformers import AutoTokenizer, AutoModelForSequenceClassification
    import torch

    model_name = "GPT2-small-finetuned-amazon-reviews-en-classification"
    user = "maximofn"
    checkpoint = f"{user}/{model_name}"
    num_classes = 5

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=num_classes).half().eval().to("cuda")

    tokens = tokenizer.encode("I love this product", return_tensors="pt").to(model.device)
    with torch.no_grad():
        output = model(tokens)
    logits = output.logits
    probabilities = torch.softmax(logits, dim=1).cpu().numpy().tolist()
    probabilities[0]

    [0.003963470458984375,
     0.0026721954345703125,
     0.01397705078125,
     0.154541015625,
     0.82470703125]

If you want to try the model further, you can find it at Maximofn/GPT2-small-finetuned-amazon-reviews-en-classification

Fine-tuning for text generation with Hugging Face

To make sure I don't run into VRAM problems, I restart the notebook

Login

To upload the training result to the Hub we first need to log in, and for that we need a token

To create a token, go to the settings/tokens page of your account. You will see something like this

Click New token and a window for creating a new token will appear

Give the token a name and create it with the write role, or with the Fine-grained role, which lets us select exactly which permissions the token will have

Once it is created, copy it and paste it below

    from huggingface_hub import notebook_login

    notebook_login()

Dataset

We are going to use a dataset of jokes in English

    from datasets import load_dataset

    jokes = load_dataset("Maximofn/short-jokes-dataset")
    jokes

    DatasetDict({
        train: Dataset({
            features: ['ID', 'Joke'],
            num_rows: 231657
        })
    })

We see that it is a single training set with more than 200 thousand jokes, so later we will have to split it into training and evaluation sets

Let's look at a sample

    from random import randint

    idx = randint(0, len(jokes['train']) - 1)
    jokes['train'][idx]

    {'ID': 198387,
     'Joke': 'My hot dislexic co-worker said she had an important massage to give me in her office... When I got there, she told me it can wait until I put on some clothes.'}

We see that it has the ID of the joke, which we have no use for, and the joke itself

In case you have little GPU memory, I am going to take a subset of the dataset. Choose the percentage of jokes you want to use

    percent_of_train_dataset = 1    # If you want 50% of the dataset, set this to 0.5

    subset_dataset = jokes["train"].select(range(int(len(jokes["train"]) * percent_of_train_dataset)))
    subset_dataset

    Dataset({
        features: ['ID', 'Joke'],
        num_rows: 231657
    })

Now we split the subset into a training set, a validation set and a test set

    percent_of_train_dataset = 0.90

    split_dataset = subset_dataset.train_test_split(train_size=int(subset_dataset.num_rows * percent_of_train_dataset), seed=19, shuffle=False)
    train_dataset = split_dataset["train"]
    validation_test_dataset = split_dataset["test"]

    split_dataset = validation_test_dataset.train_test_split(train_size=int(validation_test_dataset.num_rows * 0.5), seed=19, shuffle=False)
    validation_dataset = split_dataset["train"]
    test_dataset = split_dataset["test"]

    print(f"Size of the train set: {len(train_dataset)}. Size of the validation set: {len(validation_dataset)}. Size of the test set: {len(test_dataset)}")

Size of the train set: 208491. Size of the validation set: 11583. Size of the test set: 11583
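The split sizes follow directly from the integer arithmetic in the two train_test_split calls. A quick check in plain Python, using only the 231657 rows reported above:

```python
num_rows = 231657

train_size = int(num_rows * 0.90)          # first split: 90% for training
remaining = num_rows - train_size          # the other ~10%
validation_size = int(remaining * 0.5)     # second split: half for validation
test_size = remaining - validation_size    # and the rest for test

print(train_size, validation_size, test_size)  # 208491 11583 11583
```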

Tokenizer

We instantiate the tokenizer and set its padding token so that we don't get the error we saw before

    from transformers import AutoTokenizer

    checkpoints = "openai-community/gpt2"
    tokenizer = AutoTokenizer.from_pretrained(checkpoints)
    tokenizer.pad_token = tokenizer.eos_token
    tokenizer.padding_side = "right"

We are going to add two new tokens, start of joke and end of joke, to have more control

    new_tokens = ['<SJ>', '<EJ>']   # Start and end of joke tokens

    num_added_tokens = tokenizer.add_tokens(new_tokens)
    print(f"Added {num_added_tokens} tokens")

    Added 2 tokens

We create a function to add the new tokens to the sentences

    joke_column = "Joke"

    def format_joke(example):
        example[joke_column] = '<SJ> ' + example['Joke'] + ' <EJ>'
        return example

We select the columns we don't need

    remove_columns = [column for column in train_dataset.column_names if column != joke_column]
    remove_columns

    ['ID']

We format the dataset and remove the columns we don't need

    train_dataset = train_dataset.map(format_joke, remove_columns=remove_columns)
    validation_dataset = validation_dataset.map(format_joke, remove_columns=remove_columns)
    test_dataset = test_dataset.map(format_joke, remove_columns=remove_columns)
    train_dataset, validation_dataset, test_dataset

    (Dataset({
         features: ['Joke'],
         num_rows: 208491
     }),
     Dataset({
         features: ['Joke'],
         num_rows: 11583
     }),
     Dataset({
         features: ['Joke'],
         num_rows: 11583
     }))

Now we create a function to tokenize the jokes

    def tokenize_function(examples):
        return tokenizer(examples[joke_column], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

We tokenize the dataset and remove the column with the text

    train_dataset = train_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
    validation_dataset = validation_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
    test_dataset = test_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
    train_dataset, validation_dataset, test_dataset

    (Dataset({
         features: ['input_ids', 'attention_mask'],
         num_rows: 208491
     }),
     Dataset({
         features: ['input_ids', 'attention_mask'],
         num_rows: 11583
     }),
     Dataset({
         features: ['input_ids', 'attention_mask'],
         num_rows: 11583
     }))

Model

Now we instantiate the model for text generation and assign the end-of-sequence (EOS) token as its padding token

    from transformers import AutoModelForCausalLM

    model = AutoModelForCausalLM.from_pretrained(checkpoints)
    model.config.pad_token_id = model.config.eos_token_id

Let's look at the size of the model's vocabulary

    vocab_size = model.config.vocab_size
    vocab_size

    50257

It has 50257 tokens, which is the size of the GPT2 vocabulary. But since we said we were going to create two new tokens for the start and the end of a joke, we add them to the model

    model.resize_token_embeddings(len(tokenizer))

    new_vocab_size = model.config.vocab_size
    print(f"Old vocab size: {vocab_size}. New vocab size: {new_vocab_size}. Added {new_vocab_size - vocab_size} tokens")

Old vocab size: 50257. New vocab size: 50259. Added 2 tokens
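Conceptually, adding tokens assigns them the next free ids in the vocabulary, and resize_token_embeddings adds one embedding row per new id so the model can look them up. A toy plain-Python sketch with a 4-token vocabulary and 3-dimensional embeddings follows. The helper names and the zero initialization are illustrative assumptions, and the real library chooses its own initialization for the new rows.

```python
vocab = {"hello": 0, "world": 1, "!": 2, "<|endoftext|>": 3}
embeddings = [[0.1, 0.2, 0.3] for _ in vocab]   # one row per token id


def add_tokens(vocab, new_tokens):
    """Give each unseen token the next free id; return how many were added."""
    added = 0
    for token in new_tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
            added += 1
    return added


def resize_embeddings(embeddings, new_size):
    """Append rows until the matrix has new_size rows (zeros as placeholder)."""
    dim = len(embeddings[0])
    while len(embeddings) < new_size:
        embeddings.append([0.0] * dim)
    return embeddings


num_added = add_tokens(vocab, ["<SJ>", "<EJ>"])
resize_embeddings(embeddings, len(vocab))
print(num_added, vocab["<SJ>"], vocab["<EJ>"], len(embeddings))  # 2 4 5 6
```

The same logic at full scale places the two new tokens right after the original 50257 ids, which run from 0 to 50256.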

The two new tokens have been added

Training

We configure the training parameters

    from transformers import TrainingArguments

    metric_name = "accuracy"
    model_name = "GPT2-small-finetuned-Maximofn-short-jokes-dataset-casualLM"
    output_dir = f"./training_results"
    LR = 2e-5
    BS_TRAIN = 28
    BS_EVAL = 32
    EPOCHS = 3
    WEIGHT_DECAY = 0.01
    WARMUP_STEPS = 100

    training_args = TrainingArguments(
        model_name,
        eval_strategy="epoch",
        save_strategy="epoch",
        learning_rate=LR,
        per_device_train_batch_size=BS_TRAIN,
        per_device_eval_batch_size=BS_EVAL,
        warmup_steps=WARMUP_STEPS,      # when > 0, takes precedence over warmup_ratio
        num_train_epochs=EPOCHS,
        weight_decay=WEIGHT_DECAY,
        lr_scheduler_type="cosine",
        warmup_ratio = 0.1,
        fp16=True,
        load_best_model_at_end=True,
        # metric_for_best_model=metric_name,
        push_to_hub=True,
    )

This time we don't set metric_for_best_model. We explain why after defining the trainer

We define the trainer

    from transformers import Trainer

    trainer = Trainer(
        model,
        training_args,
        train_dataset=train_dataset,
        eval_dataset=validation_dataset,
        tokenizer=tokenizer,
        # compute_metrics=compute_metrics,
    )

In this case we don't pass a compute_metrics function. If none is passed, the loss is used to evaluate the model during evaluation. That is why we did not set metric_for_best_model when defining the arguments: we are not going to evaluate the model with a metric, but with the loss

We train

    trainer.train()

0%| | 0/625473 [00:00<?, ?it/s]

    ---------------------------------------------------------------------------
    ValueError                                Traceback (most recent call last)
    Cell In[19], line 1
    ----> 1 trainer.train()

    (... frames inside transformers/trainer.py omitted ...)

    ValueError: The model did not return a loss from the inputs, only the following keys: logits,past_key_values. For reference, the inputs it received are input_ids,attention_mask.

As we can see, we get an error: the model does not return a loss value, which is essential for training. Let's see why.

First, let's look at a sample from the dataset

from random import randint

idx = randint(0, len(train_dataset) - 1)
sample = train_dataset[idx]
sample

{'input_ids': [50257,4162,750,262,18757,6451,2245,2491,30,4362,340,373,734,10032,13,220,50258,50256,50256,...,50256,50256,50256],'attention_mask': [1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,0,...,0,0,0]}

As we can see, we have a dictionary with the input_ids and the attention_mask. If we pass it to the model we get this

import torch

output = model(
    input_ids=torch.Tensor(sample["input_ids"]).long().unsqueeze(0).to(model.device),
    attention_mask=torch.Tensor(sample["attention_mask"]).long().unsqueeze(0).to(model.device),
)
print(output.loss)

None

As we can see, it does not return a loss because it expects a value for labels, which we have not passed. In the previous example, where we fine-tuned for text classification, we said that the labels had to be stored in a dataset field called labels, but in this case the dataset has no such field

If we now pass the input_ids as the labels and check the loss again

import torch

output = model(
    input_ids=torch.Tensor(sample["input_ids"]).long().unsqueeze(0).to(model.device),
    attention_mask=torch.Tensor(sample["attention_mask"]).long().unsqueeze(0).to(model.device),
    labels=torch.Tensor(sample["input_ids"]).long().unsqueeze(0).to(model.device),
)
print(output.loss)

tensor(102.1873, device='cuda:0', grad_fn=<NllLossBackward0>)

Now we do get a loss
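
To make it clearer why passing input_ids as labels produces a loss: in causal language modeling the prediction made at position t is scored against the token at position t + 1. The following is a minimal pure-Python sketch of that idea, not the transformers implementation. The function names `log_softmax` and `causal_lm_loss` and the toy logits are assumptions made for illustration.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]

def causal_lm_loss(logits, labels, ignore_index=-100):
    """Mean next-token cross-entropy for one sequence.

    logits: list of per-position score lists (seq_len x vocab_size)
    labels: list of token ids (seq_len); positions equal to ignore_index are skipped
    """
    losses = []
    # The prediction at position t is compared with the label at position t + 1
    for position_logits, target in zip(logits[:-1], labels[1:]):
        if target == ignore_index:
            continue
        losses.append(-log_softmax(position_logits)[target])
    return sum(losses) / len(losses) if losses else float("nan")

# Toy example (hypothetical values): vocabulary of 3 tokens, sequence of 3 positions
toy_logits = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]
toy_input_ids = [1, 0, 1]
loss = causal_lm_loss(toy_logits, labels=toy_input_ids)
print(f"loss: {loss:.4f}")
```

Note that the last position's logits are never scored, because there is no following token to compare them with.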

So we have two options: add a labels field to the dataset with the values of input_ids, or use a data collator from the transformers library. In this case we will use DataCollatorForLanguageModeling. Let's look at it

from transformers import DataCollatorForLanguageModeling

my_data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

We pass the sample through this data collator

collated_sample = my_data_collator([sample]).to(model.device)

Let's see what the output looks like

for key, value in collated_sample.items():
    print(f"{key} ({value.shape}): {value}")

input_ids (torch.Size([1, 768])): tensor([[50257, 4162, 750, 262, 18757, 6451, 2245, 2491, 30, 4362, 340, 373, 734, 10032, 13, 220, 50258, 50256, ..., 50256, 50256]], device='cuda:0')
attention_mask (torch.Size([1, 768])): tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, ..., 0, 0]], device='cuda:0')
labels (torch.Size([1, 768])): tensor([[50257, 4162, 750, 262, 18757, 6451, 2245, 2491, 30, 4362, 340, 373, 734, 10032, 13, 220, 50258, -100, ..., -100, -100]], device='cuda:0')

As you can see, the data collator has created a labels field and filled it with the values of input_ids, except that the padding positions have been set to -100, the value that the loss function ignores. Because we created the collator with mlm=False, we are doing causal language modeling rather than masked language modeling, so none of the original tokens is masked
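
The label construction described above can be sketched in a few lines of plain Python. This is only an illustration of the behaviour seen in the output, not the collator's actual code. The helper name `make_causal_lm_labels` and the shortened list of token ids are assumptions.

```python
def make_causal_lm_labels(input_ids, pad_token_id, ignore_index=-100):
    """Copy input_ids into labels, replacing padding positions with ignore_index."""
    return [ignore_index if token == pad_token_id else token for token in input_ids]

# Shortened, hypothetical version of the sample: <SJ>, a few words, <EJ>, then padding
input_ids = [50257, 4162, 750, 262, 220, 50258, 50256, 50256, 50256]
labels = make_causal_lm_labels(input_ids, pad_token_id=50256)
print(labels)
```

One consequence worth keeping in mind: since the padding token here is the same id as the end-of-text token (50256), any genuine end-of-text token in a sequence would be masked in the same way.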

Let's check whether we now get a loss with this data collator

output = model(**collated_sample)
output.loss

tensor(102.7181, device='cuda:0', grad_fn=<NllLossBackward0>)

So we define the trainer again, this time with the data collator, and train again

from transformers import DataCollatorForLanguageModeling

trainer = Trainer(
    model,
    training_args,
    train_dataset=train_dataset,
    eval_dataset=validation_dataset,
    tokenizer=tokenizer,
    data_collator=DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False),
)

trainer.train()

There were missing keys in the checkpoint model loaded: ['lm_head.weight'].

TrainOutput(global_step=22341, training_loss=3.505178199598342, metrics={'train_runtime': 9209.5353, 'train_samples_per_second': 67.916, 'train_steps_per_second': 2.426, 'total_flos': 2.45146666696704e+17, 'train_loss': 3.505178199598342, 'epoch': 3.0})

Evaluation

Once the model is trained, we evaluate it on the test dataset

trainer.evaluate(eval_dataset=test_dataset)

{'eval_loss': 3.201305866241455,'eval_runtime': 65.0033,'eval_samples_per_second': 178.191,'eval_steps_per_second': 5.569,'epoch': 3.0}
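
A loss value on its own is hard to interpret, so it can help to convert it into perplexity, the exponential of the mean cross-entropy. The sketch below assumes that eval_loss is the mean per-token cross-entropy in nats, which is how causal language modeling losses are usually reported. It only uses the number printed above.

```python
import math

eval_loss = 3.201305866241455  # 'eval_loss' reported by trainer.evaluate above
perplexity = math.exp(eval_loss)  # assumes eval_loss is a mean cross-entropy in nats
print(f"perplexity: {perplexity:.2f}")
```

Roughly speaking, a perplexity of N means the model is as uncertain as if it were choosing uniformly among N tokens at each step.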

Publish the model

We create the model card

trainer.create_model_card()

We publish it

trainer.push_to_hub()

events.out.tfevents.1720875425.8de3af1b431d.6946.1: 0%| | 0.00/364 [00:00<?, ?B/s]

CommitInfo(commit_url='https://huggingface.co/Maximofn/GPT2-small-finetuned-Maximofn-short-jokes-dataset-casualLM/commit/d107b3bb0e02076483238f9975697761015ec390', commit_message='End of training', commit_description='', oid='d107b3bb0e02076483238f9975697761015ec390', pr_url=None, pr_revision=None, pr_num=None)

Using the model

We free as much memory as possible

import torch
import gc

def clear_hardwares():
    torch.clear_autocast_cache()
    torch.cuda.ipc_collect()
    torch.cuda.empty_cache()
    gc.collect()

clear_hardwares()
clear_hardwares()

We download the model and the tokenizer

from transformers import AutoTokenizer, AutoModelForCausalLM

user = "maximofn"
checkpoints = f"{user}/{model_name}"

tokenizer = AutoTokenizer.from_pretrained(checkpoints)
tokenizer.pad_token = tokenizer.eos_token
tokenizer.padding_side = "right"

model = AutoModelForCausalLM.from_pretrained(checkpoints)
model.config.pad_token_id = model.config.eos_token_id

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

We check that the tokenizer and the model have the 2 extra tokens we added

tokenizer_vocab = tokenizer.get_vocab()
model_vocab = model.config.vocab_size
print(f"tokenizer_vocab: {len(tokenizer_vocab)}. model_vocab: {model_vocab}")

tokenizer_vocab: 50259. model_vocab: 50259

We see that both have 50259 tokens, that is, the 50257 tokens of GPT2 plus the 2 we added

We create a function to generate jokes

def generate_joke(prompt_text):
    text = f"<SJ> {prompt_text}"
    tokens = tokenizer(text, return_tensors="pt").to(model.device)
    with torch.no_grad():
        # Generation stops when the end-of-joke token <EJ> is produced
        output = model.generate(**tokens, max_new_tokens=256, eos_token_id=tokenizer.encode("<EJ>")[-1])
    return tokenizer.decode(output[0], skip_special_tokens=False)

We generate a joke

generate_joke("Why didn't the frog cross the road?")

Setting `pad_token_id` to `eos_token_id`:50258 for open-end generation.

"<SJ> Why didn't the frog cross the road? Because he was frog-in-the-face. <EJ>"

If you want to try the model further, you can find it at Maximofn/GPT2-small-finetuned-Maximofn-short-jokes-dataset-casualLM

Fine tuning for text classification with PyTorch

We repeat the training with PyTorch

We restart the notebook to make sure we start from a clean state

Dataset

We download the same dataset as when we trained with the Hugging Face libraries

from datasets import load_dataset

dataset = load_dataset("mteb/amazon_reviews_multi", "en")

We create a variable with the number of classes

num_classes = len(dataset['train'].unique('label'))
num_classes

5

Earlier we processed the whole dataset to create a field called labels, but now that is not necessary: since we are writing everything ourselves, we adapt to the dataset as it is

Tokenizer

We create the tokenizer and assign it the padding token so that we do not get an error as before

from transformers import AutoTokenizer

checkpoint = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
tokenizer.pad_token = tokenizer.eos_token

We create a function to tokenize the dataset

def tokenize_function(examples):
    return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

We tokenize it. We remove the columns we do not need, but this time we keep the text column

dataset = dataset.map(tokenize_function, batched=True, remove_columns=['id', 'label_text'])

dataset

DatasetDict({train: Dataset({features: ['text', 'label', 'input_ids', 'attention_mask'],num_rows: 200000})validation: Dataset({features: ['text', 'label', 'input_ids', 'attention_mask'],num_rows: 5000})test: Dataset({features: ['text', 'label', 'input_ids', 'attention_mask'],num_rows: 5000})})

percentage = 1  # fraction of each split to keep
subset_train = dataset['train'].select(range(int(len(dataset['train']) * percentage)))
subset_validation = dataset['validation'].select(range(int(len(dataset['validation']) * percentage)))
subset_test = dataset['test'].select(range(int(len(dataset['test']) * percentage)))

print(f"len subset_train: {len(subset_train)}, len subset_validation: {len(subset_validation)}, len subset_test: {len(subset_test)}")

len subset_train: 200000, len subset_validation: 5000, len subset_test: 5000

Model

We load the weights and assign the padding token

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=num_classes)
model.config.pad_token_id = model.config.eos_token_id

Some weights of GPT2ForSequenceClassification were not initialized from the model checkpoint at openai-community/gpt2 and are newly initialized: ['score.weight']
You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

Device

We create the device on which everything will run

import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

We also move the model to the device and convert it to FP16 so that it takes up less memory

model.half().to(device)
print()
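
Converting to FP16 halves the memory used by the weights, but it also narrows the range and precision of the numbers the model can hold. The sketch below uses only the standard library to illustrate both effects. The parameter count is an approximate figure for GPT-2 small, and the update size is a hypothetical value. Limited precision is one possible contributor to unstable training when the weights themselves are kept in FP16, although this sketch does not prove that this is what happens in this post.

```python
import struct

def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

# Memory: 2 bytes per parameter in FP16 versus 4 bytes in FP32
num_parameters = 124_000_000  # approximate size of GPT-2 small (assumption)
print(f"FP32: {num_parameters * 4 / 1e6:.0f} MB, FP16: {num_parameters * 2 / 1e6:.0f} MB")

# Precision: near 1.0, FP16 cannot represent a change as small as 1e-5
weight = to_fp16(1.0)
updated = to_fp16(weight - 1e-5)  # hypothetical small optimizer update
print(f"update lost: {updated == weight}")

# Range: the largest finite FP16 value is 65504; struct refuses larger values,
# whereas tensor libraries typically store them as inf
try:
    struct.pack("<e", 70000.0)
    overflowed = False
except OverflowError:
    overflowed = True
print(f"70000 overflows FP16: {overflowed}")
```

Mixed-precision training is the usual way to keep the memory savings while avoiding these problems.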

PyTorch Dataset

We create a PyTorch dataset

from torch.utils.data import Dataset

class ReviewsDataset(Dataset):
    def __init__(self, huggingface_dataset):
        self.dataset = huggingface_dataset

    def __getitem__(self, idx):
        sample = self.dataset[idx]  # read the row once instead of three times
        label = sample['label']
        input_ids = torch.tensor(sample['input_ids'])
        attention_mask = torch.tensor(sample['attention_mask'])
        return input_ids, attention_mask, label

    def __len__(self):
        return len(self.dataset)

We instantiate the datasets

train_dataset = ReviewsDataset(subset_train)
validation_dataset = ReviewsDataset(subset_validation)
test_dataset = ReviewsDataset(subset_test)

Let's look at a sample

input_ids, at_mask, label = train_dataset[0]
input_ids.shape, at_mask.shape, label

(torch.Size([768]), torch.Size([768]), 0)

PyTorch Dataloader

We now create a PyTorch DataLoader for each split

from torch.utils.data import DataLoader

BS = 12

train_loader = DataLoader(train_dataset, batch_size=BS, shuffle=True)
validation_loader = DataLoader(validation_dataset, batch_size=BS)
test_loader = DataLoader(test_dataset, batch_size=BS)

Let's look at a batch

input_ids, at_mask, labels = next(iter(train_loader))
input_ids.shape, at_mask.shape, labels

(torch.Size([12, 768]),torch.Size([12, 768]),tensor([2, 1, 2, 0, 3, 3, 0, 4, 3, 3, 4, 2]))
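
With a batch size of 12, the number of batches per epoch follows directly from the dataset sizes. The short calculation below assumes that the last, smaller batch is kept, which is the default DataLoader behaviour. The helper name `batches_per_epoch` is just for illustration. The results should match the totals shown in the training progress bars further down.

```python
import math

def batches_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batches a DataLoader yields when the last partial batch is kept."""
    return math.ceil(num_samples / batch_size)

train_batches = batches_per_epoch(200_000, 12)
validation_batches = batches_per_epoch(5_000, 12)
# Size of the final validation batch, which is smaller than the rest
last_validation_batch = 5_000 - (validation_batches - 1) * 12
print(train_batches, validation_batches, last_validation_batch)
```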

To check that everything works, we pass the batch through the model. First we move the tensors to the device.

input_ids = input_ids.to(device)
at_mask = at_mask.to(device)
labels = labels.to(device)

Now we pass them to the model

output = model(input_ids=input_ids, attention_mask=at_mask, labels=labels)
output.keys()

odict_keys(['loss', 'logits', 'past_key_values'])

As we can see, it returns the loss and the logits

output['loss']

tensor(5.9414, device='cuda:0', dtype=torch.float16, grad_fn=<NllLossBackward0>)

output['logits']

tensor([[ 6.1953e+00, -1.2275e+00, -2.4824e+00, 5.8867e+00, -1.4734e+01],[ 5.4062e+00, -8.4570e-01, -2.3203e+00, 5.1055e+00, -1.1555e+01],[ 6.1641e+00, -9.3066e-01, -2.5664e+00, 6.0039e+00, -1.4570e+01],[ 5.2266e+00, -4.2358e-01, -2.0801e+00, 4.7461e+00, -1.1570e+01],[ 3.8184e+00, -2.3460e-03, -1.7666e+00, 3.4160e+00, -7.7969e+00],[ 4.1641e+00, -4.8169e-01, -1.6914e+00, 3.9941e+00, -8.7734e+00],[ 4.6758e+00, -3.0298e-01, -2.1641e+00, 4.1055e+00, -9.3359e+00],[ 4.1953e+00, -3.2471e-01, -2.1875e+00, 3.9375e+00, -8.3438e+00],[-1.1650e+00, 1.3564e+00, -6.2158e-01, -6.8115e-01, 4.8672e+00],[ 4.4961e+00, -8.7891e-02, -2.2793e+00, 4.2812e+00, -9.3359e+00],[ 4.9336e+00, -2.6627e-03, -2.1543e+00, 4.3711e+00, -1.0742e+01],[ 5.9727e+00, -4.3152e-02, -1.4551e+00, 4.3438e+00, -1.2117e+01]],device='cuda:0', dtype=torch.float16, grad_fn=<IndexBackward0>)

Metric

We create a function to compute the metric, which in this case is accuracy

def predicted_labels(logits):
    percent = torch.softmax(logits, dim=1)
    predictions = torch.argmax(percent, dim=1)
    return predictions

def compute_accuracy(logits, labels):
    predictions = predicted_labels(logits)
    correct = (predictions == labels).float()
    return correct.mean()

Let's check that it computes it correctly

compute_accuracy(output['logits'], labels).item()

0.1666666716337204
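
This value can be reproduced by hand from the logits and labels printed above. The sketch below copies those numbers into plain Python lists and takes the argmax of each row. It skips the softmax, since softmax preserves the ordering of the logits and therefore does not change which class is largest. The helper name `argmax` is just for illustration.

```python
# Logits and labels copied from the outputs printed above
logits = [
    [6.1953, -1.2275, -2.4824, 5.8867, -14.734],
    [5.4062, -0.84570, -2.3203, 5.1055, -11.555],
    [6.1641, -0.93066, -2.5664, 6.0039, -14.570],
    [5.2266, -0.42358, -2.0801, 4.7461, -11.570],
    [3.8184, -0.0023460, -1.7666, 3.4160, -7.7969],
    [4.1641, -0.48169, -1.6914, 3.9941, -8.7734],
    [4.6758, -0.30298, -2.1641, 4.1055, -9.3359],
    [4.1953, -0.32471, -2.1875, 3.9375, -8.3438],
    [-1.1650, 1.3564, -0.62158, -0.68115, 4.8672],
    [4.4961, -0.087891, -2.2793, 4.2812, -9.3359],
    [4.9336, -0.0026627, -2.1543, 4.3711, -10.742],
    [5.9727, -0.043152, -1.4551, 4.3438, -12.117],
]
labels = [2, 1, 2, 0, 3, 3, 0, 4, 3, 3, 4, 2]

def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])

predictions = [argmax(row) for row in logits]
accuracy = sum(p == l for p, l in zip(predictions, labels)) / len(labels)
print(predictions)
print(accuracy)
```

Only the two samples labelled 0 are predicted correctly, which gives 2 out of 12 and matches the value returned by compute_accuracy.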

Optimizer

Since we will need an optimizer, we create one

from transformers import AdamW  # deprecated, as the warning below says; torch.optim.AdamW is the suggested replacement

LR = 2e-5
optimizer = AdamW(model.parameters(), lr=LR)

/usr/local/lib/python3.10/dist-packages/transformers/optimization.py:588: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning
  warnings.warn(

Training

We create the training loop

from tqdm import tqdm

EPOCHS = 3

for epoch in range(EPOCHS):
    # Training
    model.train()
    train_loss = 0
    progresbar = tqdm(train_loader, total=len(train_loader), desc=f'Epoch {epoch + 1}')
    for input_ids, at_mask, labels in progresbar:
        input_ids = input_ids.to(device)
        at_mask = at_mask.to(device)
        labels = labels.to(device)

        output = model(input_ids=input_ids, attention_mask=at_mask, labels=labels)
        loss = output['loss']
        train_loss += loss.item()

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        progresbar.set_postfix({'train_loss': loss.item()})
    train_loss /= len(train_loader)
    progresbar.set_postfix({'train_loss': train_loss})

    # Validation
    model.eval()
    valid_loss = 0
    accuracy = 0  # reset every epoch so the previous epoch's average is not carried over
    progresbar = tqdm(validation_loader, total=len(validation_loader), desc=f'Epoch {epoch + 1}')
    with torch.no_grad():  # gradients are not needed for validation
        for input_ids, at_mask, labels in progresbar:
            input_ids = input_ids.to(device)
            at_mask = at_mask.to(device)
            labels = labels.to(device)

            output = model(input_ids=input_ids, attention_mask=at_mask, labels=labels)
            loss = output['loss']
            valid_loss += loss.item()

            step_accuracy = compute_accuracy(output['logits'], labels)
            accuracy += step_accuracy.item()

            progresbar.set_postfix({'valid_loss': loss.item(), 'accuracy': step_accuracy.item()})
    valid_loss /= len(validation_loader)
    accuracy /= len(validation_loader)
    progresbar.set_postfix({'valid_loss': valid_loss, 'accuracy': accuracy})

Epoch 1: 100%|██████████| 16667/16667 [44:13<00:00, 6.28it/s, train_loss=nan]
Epoch 1: 100%|██████████| 417/417 [00:32<00:00, 12.72it/s, valid_loss=nan, accuracy=0]
Epoch 2: 100%|██████████| 16667/16667 [44:06<00:00, 6.30it/s, train_loss=nan]
Epoch 2: 100%|██████████| 417/417 [00:32<00:00, 12.77it/s, valid_loss=nan, accuracy=0]
Epoch 3: 100%|██████████| 16667/16667 [44:03<00:00, 6.30it/s, train_loss=nan]
Epoch 3: 100%|██████████| 417/417 [00:32<00:00, 12.86it/s, valid_loss=nan, accuracy=0]
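
The validation loop above averages the accuracy of each batch. Because the last validation batch is smaller than the others, that average is not exactly the fraction of correctly classified samples. A minimal sketch of the alternative, counting correct predictions over the whole epoch, could look like this. The function name `epoch_accuracy` and the two toy batches are hypothetical.

```python
def epoch_accuracy(batches):
    """Accuracy over one epoch from (predictions, labels) pairs, counting samples."""
    correct = 0
    total = 0
    for predictions, labels in batches:
        correct += sum(int(p == l) for p, l in zip(predictions, labels))
        total += len(labels)
    return correct / total

# Two hypothetical validation batches of different sizes
batches = [
    ([0, 1, 2, 3], [0, 1, 2, 0]),  # 3 of 4 correct
    ([4, 4], [4, 0]),              # 1 of 2 correct
]
sample_level = epoch_accuracy(batches)
batch_level = (3 / 4 + 1 / 2) / 2  # mean of the per-batch accuracies, for comparison
print(sample_level, batch_level)
```

The counters live inside the function, so every call starts from zero, which is the same as resetting them at the start of each epoch.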

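Using the model

Let's try the model we have trained. Keep in mind that the training and validation losses reported above are nan, so the outputs that follow come from a model that did not train correctly

First we tokenize a text

input_tokens = tokenize_function({"text": "I love this product. It is amazing."})
input_tokens['input_ids'].shape, input_tokens['attention_mask'].shape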

(torch.Size([1, 768]), torch.Size([1, 768]))

Now we pass it to the model

output = model(input_ids=input_tokens['input_ids'].to(device), attention_mask=input_tokens['attention_mask'].to(device))
output['logits']

tensor([[nan, nan, nan, nan, nan]], device='cuda:0', dtype=torch.float16,grad_fn=<IndexBackward0>)

We look at the prediction for those logits. Because every logit is nan, the class shown below is not a meaningful prediction

predicted = predicted_labels(output['logits'])
predicted

tensor([0], device='cuda:0')

Fine tuning for text generation with PyTorch

We repeat the training with PyTorch

We restart the notebook to make sure we start from a clean state

Dataset

We download the jokes dataset again

from datasets import load_dataset

jokes = load_dataset("Maximofn/short-jokes-dataset")
jokes

DatasetDict({train: Dataset({features: ['ID', 'Joke'],num_rows: 231657})})

We create a subset in case memory is limited

percent_of_train_dataset = 1  # If you want 50% of the dataset, set this to 0.5

subset_dataset = jokes["train"].select(range(int(len(jokes["train"]) * percent_of_train_dataset)))
subset_dataset

Dataset({features: ['ID', 'Joke'],num_rows: 231657})

We split the dataset into training, validation and test subsets

percent_of_train_dataset = 0.90

split_dataset = subset_dataset.train_test_split(train_size=int(subset_dataset.num_rows * percent_of_train_dataset), seed=19, shuffle=False)
train_dataset = split_dataset["train"]
validation_test_dataset = split_dataset["test"]

split_dataset = validation_test_dataset.train_test_split(train_size=int(validation_test_dataset.num_rows * 0.5), seed=19, shuffle=False)
validation_dataset = split_dataset["train"]
test_dataset = split_dataset["test"]

print(f"Size of the train set: {len(train_dataset)}. Size of the validation set: {len(validation_dataset)}. Size of the test set: {len(test_dataset)}")

Size of the train set: 208491. Size of the validation set: 11583. Size of the test set: 11583
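
These sizes follow from the two-step split: 90% of the rows go to training and the remaining rows are divided in half. The arithmetic can be checked with a few lines of plain Python. The variable names are only for illustration.

```python
total_rows = 231_657  # rows in the jokes dataset, as printed above

train_rows = int(total_rows * 0.90)          # first split: 90% for training
remaining_rows = total_rows - train_rows     # rows left for validation and test
validation_rows = int(remaining_rows * 0.5)  # second split: half for validation
test_rows = remaining_rows - validation_rows

print(train_rows, validation_rows, test_rows)
```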

Tokenizer

We initialise the tokenizer and use the end-of-sequence token as the padding token

from transformers import AutoTokenizer

checkpoints = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
tokenizer.pad_token = tokenizer.eos_token
tokenizer.padding_side = "right"

We add the special start-of-joke and end-of-joke tokens

new_tokens = ['<SJ>', '<EJ>']  # Start and end of joke tokens

num_added_tokens = tokenizer.add_tokens(new_tokens)
print(f"Added {num_added_tokens} tokens")

Added 2 tokens

We add them to every joke in the dataset

joke_column = "Joke"

def format_joke(example):
    example[joke_column] = '<SJ> ' + example[joke_column] + ' <EJ>'
    return example

remove_columns = [column for column in train_dataset.column_names if column != joke_column]

train_dataset = train_dataset.map(format_joke, remove_columns=remove_columns)
validation_dataset = validation_dataset.map(format_joke, remove_columns=remove_columns)
test_dataset = test_dataset.map(format_joke, remove_columns=remove_columns)
train_dataset, validation_dataset, test_dataset

(Dataset({features: ['Joke'],num_rows: 208491}),Dataset({features: ['Joke'],num_rows: 11583}),Dataset({features: ['Joke'],num_rows: 11583}))

We tokenize the dataset

def tokenize_function(examples):
    return tokenizer(examples[joke_column], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

train_dataset = train_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
validation_dataset = validation_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
test_dataset = test_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
train_dataset, validation_dataset, test_dataset

(Dataset({features: ['input_ids', 'attention_mask'],num_rows: 208491}),Dataset({features: ['input_ids', 'attention_mask'],num_rows: 11583}),Dataset({features: ['input_ids', 'attention_mask'],num_rows: 11583}))

Model

We instantiate the model, assign the padding token, and resize the embeddings to make room for the new start-of-joke and end-of-joke tokens

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(checkpoints)
model.config.pad_token_id = model.config.eos_token_id
model.resize_token_embeddings(len(tokenizer))

Embedding(50259, 768)
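
The embedding table now has 50259 rows, one per token, each of size 768. Conceptually, resizing keeps the existing rows and appends new ones for the added tokens, which then have to be learned during fine tuning. The toy sketch below illustrates that idea with plain lists. The function name `resize_embeddings` and the random initialisation are assumptions made for illustration and do not reproduce how transformers initialises the new rows.

```python
import random

def resize_embeddings(embeddings, new_vocab_size, seed=0):
    """Return a copy of the embedding table grown to new_vocab_size rows.

    Existing rows are kept; new rows get small random values (toy initialisation).
    """
    rng = random.Random(seed)
    hidden_size = len(embeddings[0])
    resized = [row[:] for row in embeddings]
    while len(resized) < new_vocab_size:
        resized.append([rng.gauss(0.0, 0.02) for _ in range(hidden_size)])
    return resized

# Toy table: 5 tokens with 4-dimensional embeddings, grown by 2 new tokens
old_table = [[float(i)] * 4 for i in range(5)]
new_table = resize_embeddings(old_table, new_vocab_size=7)
print(len(new_table), len(new_table[0]))
print(new_table[:5] == old_table)
```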

Device

We create the device and move the model to it, converting it to FP16

import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.half().to(device)
print()

PyTorch Dataset

We create a PyTorch dataset

from torch.utils.data import Dataset

class JokesDataset(Dataset):
    def __init__(self, huggingface_dataset):
        self.dataset = huggingface_dataset

    def __getitem__(self, idx):
        sample = self.dataset[idx]  # read the row once instead of twice
        input_ids = torch.tensor(sample['input_ids'])
        attention_mask = torch.tensor(sample['attention_mask'])
        return input_ids, attention_mask

    def __len__(self):
        return len(self.dataset)

We instantiate the training, validation and test datasets

train_pytorch_dataset = JokesDataset(train_dataset)
validation_pytorch_dataset = JokesDataset(validation_dataset)
test_pytorch_dataset = JokesDataset(test_dataset)

Let's look at a sample

input_ids, attention_mask = train_pytorch_dataset[0]
input_ids.shape, attention_mask.shape

(torch.Size([768]), torch.Size([768]))

PyTorch Dataloader

We create the dataloaders

from torch.utils.data import DataLoader

BS = 28

train_loader = DataLoader(train_pytorch_dataset, batch_size=BS, shuffle=True)
validation_loader = DataLoader(validation_pytorch_dataset, batch_size=BS)
test_loader = DataLoader(test_pytorch_dataset, batch_size=BS)

We look at a batch

input_ids, attention_mask = next(iter(train_loader))
input_ids.shape, attention_mask.shape

(torch.Size([28, 768]), torch.Size([28, 768]))

We pass it to the model

output = model(input_ids.to(device), attention_mask=attention_mask.to(device))
output.keys()

odict_keys(['logits', 'past_key_values'])

As we can see, there is no loss value. As we saw before, we have to pass labels as well as input_ids.

output = model(input_ids.to(device), attention_mask=attention_mask.to(device), labels=input_ids.to(device))
output.keys()

odict_keys(['loss', 'logits', 'past_key_values'])

Now we do have a loss

output['loss'].item()

80.5625

Optimizer

We create an optimizer

from transformers import AdamW  # deprecated, as the warning below says; torch.optim.AdamW is the suggested replacement

LR = 5e-5  # the learning rate actually passed to the optimizer
optimizer = AdamW(model.parameters(), lr=LR)

/usr/local/lib/python3.10/dist-packages/transformers/optimization.py:588: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning
  warnings.warn(

Training

We create the training loop

from tqdm import tqdm

EPOCHS = 3

for epoch in range(EPOCHS):
    model.train()
    train_loss = 0
    progresbar = tqdm(train_loader, total=len(train_loader), desc=f'Epoch {epoch + 1}')
    for input_ids, at_mask in progresbar:
        input_ids = input_ids.to(device)
        at_mask = at_mask.to(device)

        # labels=input_ids also counts the padding positions in the loss;
        # the data collator used with the Trainer sets them to -100 instead
        output = model(input_ids=input_ids, attention_mask=at_mask, labels=input_ids)
        loss = output['loss']
        train_loss += loss.item()

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        progresbar.set_postfix({'train_loss': loss.item()})
    train_loss /= len(train_loader)
    progresbar.set_postfix({'train_loss': train_loss})

Epoch 1: 100%|██████████| 7447/7447 [51:07<00:00, 2.43it/s, train_loss=nan]
Epoch 2: 100%|██████████| 7447/7447 [51:06<00:00, 2.43it/s, train_loss=nan]
Epoch 3: 100%|██████████| 7447/7447 [51:07<00:00, 2.43it/s, train_loss=nan]

Using the model

We test the model

def generate_text(decoded_joke, max_new_tokens=100, stop_token='<EJ>'):
    # Greedy decoding: append the most likely next token until the stop token appears
    for _ in range(max_new_tokens):
        input_tokens = tokenize_function({'Joke': decoded_joke})
        input_ids = input_tokens['input_ids'].to(device)
        attention_mask = input_tokens['attention_mask'].to(device)
        with torch.no_grad():
            output = model(input_ids, attention_mask=attention_mask)
        # The input is right-padded to max_length, so we read the logits at the last real token
        last_position = attention_mask[0].sum().item() - 1
        next_token = torch.argmax(output['logits'][0, last_position, :], dim=-1).item()
        next_token_decoded = tokenizer.decode(next_token)
        if next_token_decoded == stop_token:
            break
        decoded_joke = decoded_joke + next_token_decoded
    return decoded_joke
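
The function above generates text greedily: at every step it picks the single most likely next token and stops when it meets the end-of-joke token or reaches the token limit. The same control flow can be shown without a neural network. In the sketch below, `greedy_decode`, `toy_model` and the transition table are hypothetical stand-ins. The table plays the role of the language model by assigning scores to the possible next tokens.

```python
def greedy_decode(next_token_scores, prompt, stop_token, max_new_tokens=10):
    """Repeatedly append the highest-scoring next token until stop_token or the limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        next_token = max(scores, key=scores.get)
        if next_token == stop_token:
            break
        tokens.append(next_token)
    return tokens

# Hypothetical stand-in for a language model: scores depend only on the last token
TOY_TRANSITIONS = {
    "<SJ>": {"knock": 0.9, "<EJ>": 0.1},
    "knock": {"who": 0.6, "knock": 0.3, "<EJ>": 0.1},
    "who": {"<EJ>": 0.8, "knock": 0.2},
}

def toy_model(tokens):
    return TOY_TRANSITIONS[tokens[-1]]

result = greedy_decode(toy_model, ["<SJ>"], stop_token="<EJ>")
print(result)
```

Greedy decoding is deterministic, so the same prompt always yields the same text. Sampling strategies such as top-k or temperature trade that determinism for variety.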

generated_text = generate_text("<SJ> Why didn't the frog cross the road")
generated_text

"<SJ> Why didn't the frog cross the road!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"