Disclaimer: This post has been translated to English using a machine translation model. Please, let me know if you find any mistakes.

In this post, we are going to see how to fine-tune small language models, both for text classification and for text generation. First, we will do it with the Hugging Face libraries, since Hugging Face has become a key player in the AI ecosystem.

Useful as the Hugging Face libraries are, it is also important to know how the training actually works and what is happening underneath, so we will then repeat the training for classification and text generation with PyTorch.

Fine-tuning for text classification with Hugging Face

Login

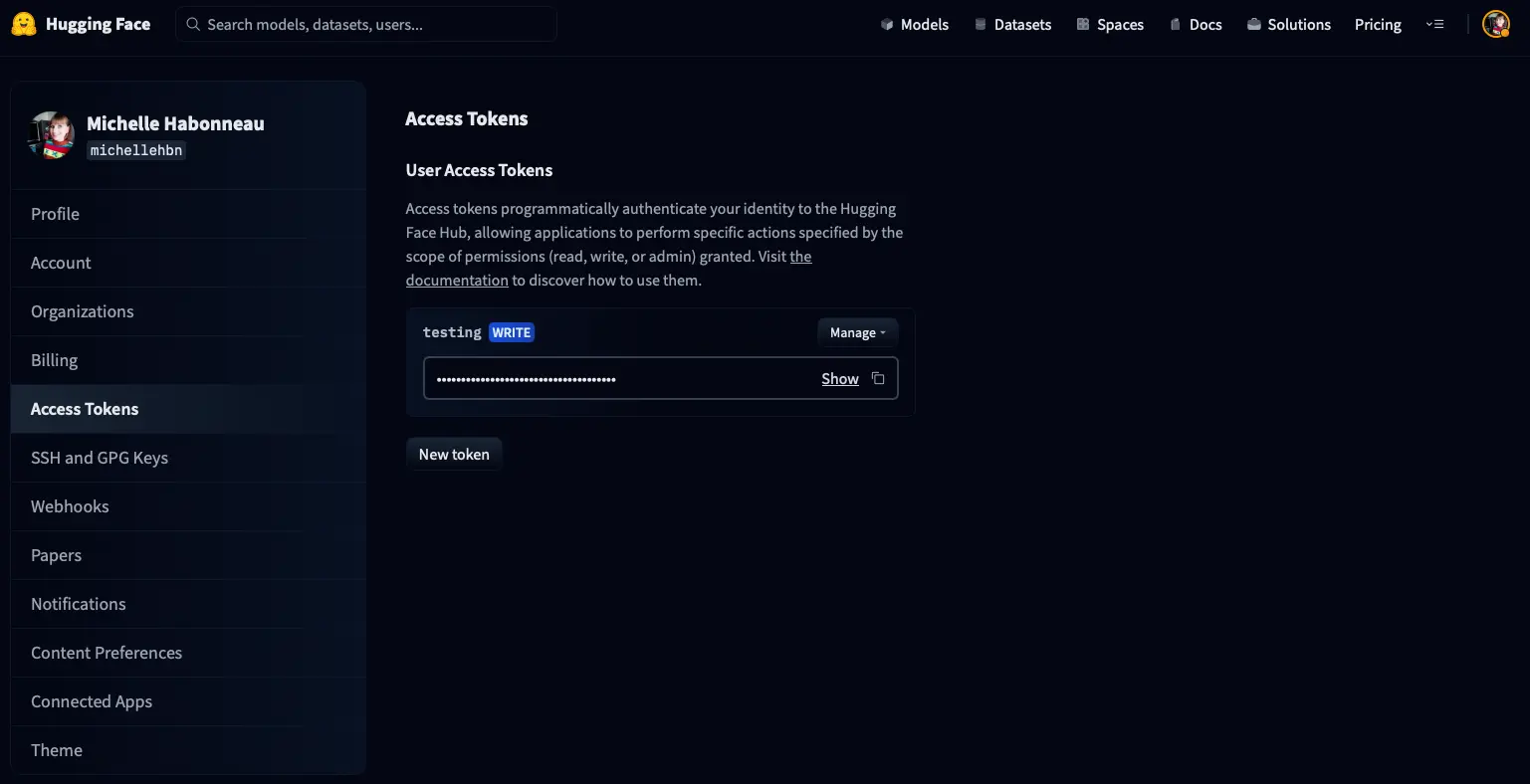

To upload the training results to the Hub, we first need to log in, and for that we need a token.

To create a token, go to the settings/tokens page of your account, where you will see something like this:

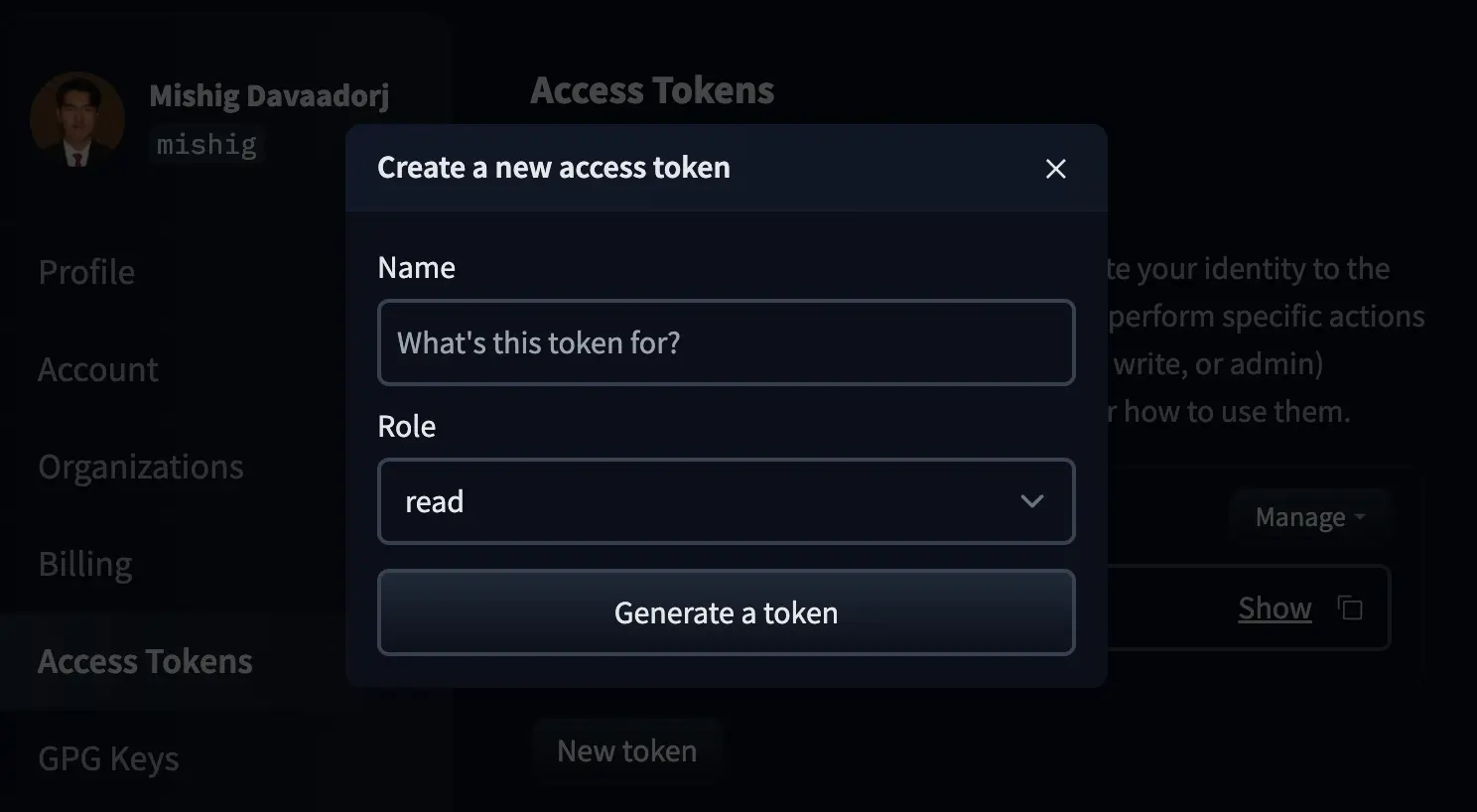

We click on New token and a window will appear to create a new token

We give the token a name and create it with the write role, or with the Fine-grained role, which allows us to select exactly which permissions the token will have.

Once created, we copy and paste it below.

```python
from huggingface_hub import notebook_login

notebook_login()
```

Dataset

Now we download a dataset, in this case a dataset of Amazon reviews

```python
from datasets import load_dataset

dataset = load_dataset("mteb/amazon_reviews_multi", "en")
```

Let's take a quick look at it

```python
dataset
```

```
DatasetDict({
    train: Dataset({
        features: ['id', 'text', 'label', 'label_text'],
        num_rows: 200000
    })
    validation: Dataset({
        features: ['id', 'text', 'label', 'label_text'],
        num_rows: 5000
    })
    test: Dataset({
        features: ['id', 'text', 'label', 'label_text'],
        num_rows: 5000
    })
})
```

We see that it has a training set with 200,000 samples, a validation set with 5,000 samples, and a test set with 5,000 samples.

Let's take a look at an example from the training set

```python
from random import randint

idx = randint(0, len(dataset['train']) - 1)
dataset['train'][idx]
```

```
{'id': 'en_0907914',
 'text': 'Mixed with fir it’s passable Not the scent I had hoped for . Love the scent of cedar, but this one missed',
 'label': 3,
 'label_text': '3'}
```

We see that the review is in the text field and the rating given by the user is in the label field.

As we are going to build a text classification model, we need to know how many classes we will have.

```python
num_classes = len(dataset['train'].unique('label'))
num_classes
```

```
5
```

We have 5 classes. Now let's check the minimum value of these classes to know whether the labels start at 0 or at 1. For this, we use the unique method.

```python
dataset.unique('label')
```

```
{'train': [0, 1, 2, 3, 4],
 'validation': [0, 1, 2, 3, 4],
 'test': [0, 1, 2, 3, 4]}
```

The minimum value is 0, so the labels go from 0 to 4.

To train, the labels need to be in a field called labels, while in our dataset they are in a field called label, so we create a new field labels with the same value as label.

We create a function that does what we want

```python
def set_labels(example):
    example['labels'] = example['label']
    return example
```

We apply the function to the dataset

```python
dataset = dataset.map(set_labels)
```

Let's see what the dataset looks like now

```python
dataset['train'][idx]
```

```
{'id': 'en_0907914',
 'text': 'Mixed with fir it’s passable Not the scent I had hoped for . Love the scent of cedar, but this one missed',
 'label': 3,
 'label_text': '3',
 'labels': 3}
```

Tokenizer

Since we have the reviews in text form in the dataset, we need to tokenize them so that we can feed the tokens into the model.

```python
from transformers import AutoTokenizer

checkpoint = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
```

Now we create a function to tokenize the text. We want all sequences to have the same length, so the tokenizer truncates when necessary and adds padding tokens when necessary. Additionally, we specify that it should return PyTorch tensors.

We pad or truncate every review to 768 tokens. This length is a choice, not a requirement of the model: the small GPT2 model has an embedding dimension of 768, as we saw in the GPT2 post, but that is the size of the vector that represents each token, not a number of tokens. The actual limit on the sequence length is GPT2's context window of 1024 tokens, so 768 fits.

```python
def tokenize_function(examples):
    return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=768, return_tensors="pt")
```
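To make the effect of `padding="max_length"` and `truncation=True` concrete, here is a minimal pure-Python sketch of what happens to a single sequence of token IDs. The helper `pad_or_truncate`, the toy IDs, and the padding ID `0` are hypothetical and only for illustration; the real tokenizer does this internally.

```python
def pad_or_truncate(token_ids, max_length, pad_id):
    """Return (input_ids, attention_mask), both exactly max_length long."""
    kept = token_ids[:max_length]                    # truncation: drop tokens past max_length
    n_pad = max_length - len(kept)                   # how many padding tokens are needed
    input_ids = kept + [pad_id] * n_pad              # padding on the right
    attention_mask = [1] * len(kept) + [0] * n_pad   # 1 = real token, 0 = padding
    return input_ids, attention_mask

# A sequence shorter than max_length gets padded
short_ids, short_mask = pad_or_truncate([10, 11, 12], max_length=5, pad_id=0)
# A sequence longer than max_length gets truncated
long_ids, long_mask = pad_or_truncate(list(range(8)), max_length=5, pad_id=0)

print(short_ids, short_mask)
print(long_ids, long_mask)
```

The attention mask is what lets the model ignore the padded positions, which is why the tokenizer returns it together with the input IDs.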

Let's try to tokenize a text

```python
tokens = tokenize_function(dataset['train'][idx])
```

```
---------------------------------------------------------------------------
ValueError                                Traceback (most recent call last)
Cell In[11], line 1
----> 1 tokens = tokenize_function(dataset['train'][idx])

Cell In[10], line 2, in tokenize_function(examples)
      1 def tokenize_function(examples):
----> 2     return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2883, in PreTrainedTokenizerBase.__call__(...)
-> 2883     encodings = self._call_one(text=text, text_pair=text_pair, **all_kwargs)

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2989, in PreTrainedTokenizerBase._call_one(...)
-> 2989     return self.encode_plus(

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:3053, in PreTrainedTokenizerBase.encode_plus(...)
-> 3053     padding_strategy, truncation_strategy, max_length, kwargs = self._get_padding_truncation_strategies(

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2788, in PreTrainedTokenizerBase._get_padding_truncation_strategies(...)
   2787 if padding_strategy != PaddingStrategy.DO_NOT_PAD and (self.pad_token is None or self.pad_token_id < 0):
-> 2788     raise ValueError(
   2789         "Asking to pad but the tokenizer does not have a padding token. "
   2790         "Please select a token to use as `pad_token` `(tokenizer.pad_token = tokenizer.eos_token e.g.)` "
   2791         "or add a new pad token via `tokenizer.add_special_tokens({'pad_token': '[PAD]'})`."
   2792     )

ValueError: Asking to pad but the tokenizer does not have a padding token. Please select a token to use as `pad_token` `(tokenizer.pad_token = tokenizer.eos_token e.g.)` or add a new pad token via `tokenizer.add_special_tokens({'pad_token': '[PAD]'})`.
```

We get an error because the GPT2 tokenizer has no padding token and asks us to assign one. It also suggests doing tokenizer.pad_token = tokenizer.eos_token, so we do that.

```python
tokenizer.pad_token = tokenizer.eos_token
```

We test the tokenization function again

```python
tokens = tokenize_function(dataset['train'][idx])
tokens['input_ids'].shape, tokens['attention_mask'].shape
```

```
(torch.Size([1, 768]), torch.Size([1, 768]))
```

Now that we have checked that the function tokenizes correctly, we apply it to the dataset, in batches so that it runs faster.

We also take the opportunity to remove the columns that we won't need.

```python
dataset = dataset.map(tokenize_function, batched=True, remove_columns=['text', 'label', 'id', 'label_text'])
```
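With `batched=True`, the mapped function no longer receives one example at a time: it receives a dictionary with one list per column, and returns new columns in the same shape. The sketch below imitates that calling convention in plain Python. Both `map_batched` and `toy_tokenize` are hypothetical stand-ins, not the datasets library or the real tokenizer, and word counting is used only to keep the example small.

```python
def toy_tokenize(batch):
    """Receives a dict of lists (one list per column) and returns new columns."""
    return {"n_words": [len(text.split()) for text in batch["text"]]}

def map_batched(rows, fn, batch_size):
    """Hypothetical stand-in for a batched map: call fn once per batch of rows."""
    out = []
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        # Turn a list of rows into a dict of columns, which is what fn expects
        batch = {key: [row[key] for row in chunk] for key in chunk[0]}
        result = fn(batch)
        # Attach the new column values back to each row
        for i, row in enumerate(chunk):
            out.append({**row, **{key: values[i] for key, values in result.items()}})
    return out

rows = [{"text": "great product"}, {"text": "did not work at all"}, {"text": "ok"}]
mapped = map_batched(rows, toy_tokenize, batch_size=2)
print(mapped)
```

Calling the function once per batch instead of once per row is where the speed-up comes from, since tokenizers process lists of texts more efficiently than single texts.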

We now see how the dataset looks.

```python
dataset
```

```
DatasetDict({
    train: Dataset({
        features: ['id', 'text', 'label', 'label_text', 'labels'],
        num_rows: 200000
    })
    validation: Dataset({
        features: ['id', 'text', 'label', 'label_text', 'labels'],
        num_rows: 5000
    })
    test: Dataset({
        features: ['id', 'text', 'label', 'label_text', 'labels'],
        num_rows: 5000
    })
})
```

We see that we have the fields 'labels', 'input_ids', and 'attention_mask', which is what we are interested in for training.

Model

We instantiate a model for sequence classification and specify the number of classes we have

```python
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=num_classes)
```

```
Some weights of GPT2ForSequenceClassification were not initialized from the model checkpoint at openai-community/gpt2 and are newly initialized: ['score.weight']
You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.
```

It tells us that the weights of the score layer have been initialized randomly and that we need to train them. Let's see why this happens.

For comparison, we load the GPT2 model for generating text

```python
from transformers import AutoModelForCausalLM

casual_model = AutoModelForCausalLM.from_pretrained(checkpoint)
```

Let's see its architecture

```python
casual_model
```

```
GPT2LMHeadModel(
  (transformer): GPT2Model(
    (wte): Embedding(50257, 768)
    (wpe): Embedding(1024, 768)
    (drop): Dropout(p=0.1, inplace=False)
    (h): ModuleList(
      (0-11): 12 x GPT2Block(
        (ln_1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        (attn): GPT2Attention(
          (c_attn): Conv1D()
          (c_proj): Conv1D()
          (attn_dropout): Dropout(p=0.1, inplace=False)
          (resid_dropout): Dropout(p=0.1, inplace=False)
        )
        (ln_2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        (mlp): GPT2MLP(
          (c_fc): Conv1D()
          (c_proj): Conv1D()
          (act): NewGELUActivation()
          (dropout): Dropout(p=0.1, inplace=False)
        )
      )
    )
    (ln_f): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
  )
  (lm_head): Linear(in_features=768, out_features=50257, bias=False)
)
```

And now the architecture of the model we are going to use for classifying the reviews

```python
model
```

```
GPT2ForSequenceClassification(
  (transformer): GPT2Model(
    (wte): Embedding(50257, 768)
    (wpe): Embedding(1024, 768)
    (drop): Dropout(p=0.1, inplace=False)
    (h): ModuleList(
      (0-11): 12 x GPT2Block(
        (ln_1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        (attn): GPT2Attention(
          (c_attn): Conv1D()
          (c_proj): Conv1D()
          (attn_dropout): Dropout(p=0.1, inplace=False)
          (resid_dropout): Dropout(p=0.1, inplace=False)
        )
        (ln_2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        (mlp): GPT2MLP(
          (c_fc): Conv1D()
          (c_proj): Conv1D()
          (act): NewGELUActivation()
          (dropout): Dropout(p=0.1, inplace=False)
        )
      )
    )
    (ln_f): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
  )
  (score): Linear(in_features=768, out_features=5, bias=False)
)
```

There are two things to mention here.

- The first is that in both models the first layer has dimensions 50257x768, which corresponds to the 50257 possible tokens of the GPT-2 vocabulary and the 768 dimensions of the embedding. The next layer, the positional embedding, has dimensions 1024x768, so the model accepts sequences of up to 1024 tokens and our sequences of 768 tokens fit.

- The second is that casual_model (the text generation one) ends with a Linear layer that generates 50257 values, that is, it predicts the next token by assigning a value to each possible token. The classification model, on the other hand, ends with a Linear layer that generates only 5 values, one for each class, which after a softmax give us the probability that the review belongs to each class.

That's why we got the message that the weights of the score layer had been initialized randomly: the transformers library has removed the 768x50257 Linear layer and added a 768x5 Linear layer initialized with random values, which we need to train for our specific problem.
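The 5 values produced by the score layer are raw scores (logits), and a softmax is what turns them into class probabilities. As a minimal sketch in pure Python, with made-up logits rather than real model outputs:

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)
    exps = [math.exp(x - highest) for x in logits]   # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

logits = [-2.0, -2.5, -1.0, 1.5, 3.0]   # made-up outputs of a 5-way score layer
probs = softmax(logits)
predicted_class = probs.index(max(probs))   # index of the most probable class

print(probs)
print(predicted_class)
```

Since the softmax preserves the ordering of the logits, the predicted class can also be read directly from the largest logit, which is what an argmax over the model output does.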

We delete casual_model because we are not going to use it.

```python
del casual_model
```

Trainer

Let's now configure the training arguments

```python
from transformers import TrainingArguments

metric_name = "accuracy"
model_name = "GPT2-small-finetuned-amazon-reviews-en-classification"
LR = 2e-5
BS_TRAIN = 28
BS_EVAL = 40
EPOCHS = 3
WEIGHT_DECAY = 0.01

training_args = TrainingArguments(
    model_name,
    eval_strategy="epoch",
    save_strategy="epoch",
    learning_rate=LR,
    per_device_train_batch_size=BS_TRAIN,
    per_device_eval_batch_size=BS_EVAL,
    num_train_epochs=EPOCHS,
    weight_decay=WEIGHT_DECAY,
    lr_scheduler_type="cosine",
    warmup_ratio = 0.1,
    fp16=True,
    load_best_model_at_end=True,
    metric_for_best_model=metric_name,
    push_to_hub=True,
)
```
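To get a feeling for what `lr_scheduler_type="cosine"` together with `warmup_ratio = 0.1` means, the following sketch computes the learning rate at a few steps: a linear warmup from 0 to `LR` during the first 10% of the steps, followed by a cosine decay to 0. This is a simplified re-implementation for illustration, assuming training on a single device; `lr_at_step` is a hypothetical helper and the rounding of the warmup steps may differ slightly from what the library does.

```python
import math

def lr_at_step(step, total_steps, base_lr, warmup_ratio):
    """Linear warmup followed by cosine decay to zero (simplified sketch)."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)          # linear warmup
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))  # cosine decay

steps_per_epoch = math.ceil(200_000 / 28)   # training samples / BS_TRAIN
total_steps = steps_per_epoch * 3           # times EPOCHS

for step in (0, total_steps // 10, total_steps // 2, total_steps):
    print(step, lr_at_step(step, total_steps, base_lr=2e-5, warmup_ratio=0.1))
```

The learning rate starts at 0, reaches its peak of 2e-5 when the warmup ends, and decays smoothly to 0 at the last step.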

We define a metric to evaluate the model on the validation set

```python
import numpy as np
from evaluate import load

metric = load("accuracy")

def compute_metrics(eval_pred):
    print(eval_pred)
    predictions, labels = eval_pred
    predictions = np.argmax(predictions, axis=1)    # class with the highest logit
    return metric.compute(predictions=predictions, references=labels)
```
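What this metric computes can be reproduced in a few lines of pure Python: take the class with the highest logit for each sample and count how often it matches the label. The helper `accuracy_from_logits` and the toy logits below are hypothetical, just to show the arithmetic.

```python
def accuracy_from_logits(logits, labels):
    """Fraction of rows whose highest-scoring class equals the label."""
    predictions = [row.index(max(row)) for row in logits]     # argmax per row
    correct = sum(int(pred == label) for pred, label in zip(predictions, labels))
    return correct / len(labels)

toy_logits = [
    [0.1, 2.0, 0.3],   # predicted class 1
    [1.5, 0.2, 0.1],   # predicted class 0
    [0.0, 0.1, 0.9],   # predicted class 2
    [0.4, 0.3, 0.2],   # predicted class 0
]
toy_labels = [1, 0, 1, 0]

print(accuracy_from_logits(toy_logits, toy_labels))
```

Three of the four predictions match their label, so the accuracy of this toy example is 0.75.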

We now define the trainer

```python
from transformers import Trainer

trainer = Trainer(
    model,
    training_args,
    train_dataset=dataset['train'],
    eval_dataset=dataset['validation'],
    tokenizer=tokenizer,
    compute_metrics=compute_metrics,
)
```

We train

```python
trainer.train()
```

```
  0%|          | 0/600000 [00:00<?, ?it/s]
```

```
---------------------------------------------------------------------------
ValueError                                Traceback (most recent call last)
Cell In[21], line 1
----> 1 trainer.train()

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/trainer.py:1876, in Trainer.train(self, resume_from_checkpoint, trial, ignore_keys_for_eval, **kwargs)
-> 1876     return inner_training_loop(

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/trainer.py:2178, in Trainer._inner_training_loop(self, batch_size, args, resume_from_checkpoint, trial, ignore_keys_for_eval)
-> 2178 for step, inputs in enumerate(epoch_iterator):

(...)

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/data/data_collator.py:271, in DataCollatorWithPadding.__call__(self, features)
--> 271 batch = pad_without_fast_tokenizer_warning(

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/data/data_collator.py:66, in pad_without_fast_tokenizer_warning(tokenizer, *pad_args, **pad_kwargs)
---> 66     padded = tokenizer.pad(*pad_args, **pad_kwargs)

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:3299, in PreTrainedTokenizerBase.pad(self, encoded_inputs, padding, max_length, pad_to_multiple_of, return_attention_mask, return_tensors, verbose)
   3298 if self.model_input_names[0] not in encoded_inputs:
-> 3299     raise ValueError(
   3300         "You should supply an encoding or a list of encodings to this method "
   3301         f"that includes {self.model_input_names[0]}, but you provided {list(encoded_inputs.keys())}"
   3302     )

ValueError: You should supply an encoding or a list of encodings to this method that includes input_ids, but you provided ['label', 'labels']
```

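We get an error again. Just like the tokenizer, the model has no padding token assigned, and GPT2ForSequenceClassification needs one to locate the last non-padding token of each sequence, so we assign it in the same way.

```python
model.config.pad_token_id = model.config.eos_token_id
```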

We recreate the training arguments and the trainer with the model, which now has a padding token.

```python
training_args = TrainingArguments(
    model_name,
    eval_strategy="epoch",
    save_strategy="epoch",
    learning_rate=LR,
    per_device_train_batch_size=BS_TRAIN,
    per_device_eval_batch_size=BS_EVAL,
    num_train_epochs=EPOCHS,
    weight_decay=WEIGHT_DECAY,
    lr_scheduler_type="cosine",
    warmup_ratio = 0.1,
    fp16=True,
    load_best_model_at_end=True,
    metric_for_best_model=metric_name,
    push_to_hub=True,
    logging_dir="./runs",
)

trainer = Trainer(
    model,
    training_args,
    train_dataset=dataset['train'],
    eval_dataset=dataset['validation'],
    tokenizer=tokenizer,
    compute_metrics=compute_metrics,
)
```

Now that we've seen everything is in order, we can train

```python
trainer.train()
```

```
<transformers.trainer_utils.EvalPrediction object at 0x782767ea1450>
<transformers.trainer_utils.EvalPrediction object at 0x782767eeefe0>
<transformers.trainer_utils.EvalPrediction object at 0x782767eecfd0>
```

TrainOutput(global_step=21429, training_loss=0.7846888848762739, metrics={'train_runtime': 26367.7801, 'train_samples_per_second': 22.755, 'train_steps_per_second': 0.813, 'total_flos': 2.35173445632e+17, 'train_loss': 0.7846888848762739, 'epoch': 3.0})

Evaluation

Once trained, we evaluate on the test dataset

```python
trainer.evaluate(eval_dataset=dataset['test'])
```

```
<transformers.trainer_utils.EvalPrediction object at 0x7826ddfded40>
```

```
{'eval_loss': 0.7973636984825134,
 'eval_accuracy': 0.6626,
 'eval_runtime': 76.3016,
 'eval_samples_per_second': 65.529,
 'eval_steps_per_second': 1.638,
 'epoch': 3.0}
```

Publish the model

We already have our model trained, so we can share it with the world. First, we create a **model card**.

```python
trainer.create_model_card()
```

And we can publish it now. Since the first thing we did was log in to the Hugging Face Hub, we can upload it to our hub without any issues.

```python
trainer.push_to_hub()
```

Usage of the model

We free as much memory as possible

```python
import torch
import gc

def clear_hardwares():
    torch.clear_autocast_cache()
    torch.cuda.ipc_collect()
    torch.cuda.empty_cache()
    gc.collect()

clear_hardwares()
clear_hardwares()
```

Since we have uploaded the model to our hub, we can download and use it

```python
from transformers import pipeline

user = "maximofn"
checkpoints = f"{user}/{model_name}"
task = "text-classification"
classifier = pipeline(task, model=checkpoints, tokenizer=checkpoints)
```

If we want it to return the probability of all classes, we simply use the classifier we just instantiated, with the parameter top_k=None

```python
labels = classifier("I love this product", top_k=None)
labels
```

```
[{'label': 'LABEL_4', 'score': 0.8253807425498962},
 {'label': 'LABEL_3', 'score': 0.15411493182182312},
 {'label': 'LABEL_2', 'score': 0.013907806016504765},
 {'label': 'LABEL_0', 'score': 0.003939222544431686},
 {'label': 'LABEL_1', 'score': 0.0026572425849735737}]
```

If we only want the class with the highest probability, we do the same but with the parameter top_k=1

```python
label = classifier("I love this product", top_k=1)
label
```

```
[{'label': 'LABEL_4', 'score': 0.8253807425498962}]
```

And if we want n classes, we do the same but with the parameter top_k=n

```python
two_labels = classifier("I love this product", top_k=2)
two_labels
```

```
[{'label': 'LABEL_4', 'score': 0.8253807425498962},
 {'label': 'LABEL_3', 'score': 0.15411493182182312}]
```
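The behaviour of `top_k` seen in these three calls can be summarized as "sort the classes by score and keep the first k, or all of them if k is None". A minimal sketch of that logic, with a hypothetical helper `top_k_labels` and the scores above rounded to four decimals:

```python
def top_k_labels(scores, top_k=None):
    """Sort label scores from highest to lowest and keep the first top_k (all if None)."""
    ranked = sorted(scores, key=lambda item: item["score"], reverse=True)
    return ranked if top_k is None else ranked[:top_k]

scores = [
    {"label": "LABEL_0", "score": 0.0039},
    {"label": "LABEL_1", "score": 0.0027},
    {"label": "LABEL_2", "score": 0.0139},
    {"label": "LABEL_3", "score": 0.1541},
    {"label": "LABEL_4", "score": 0.8254},
]

print(top_k_labels(scores, top_k=2))
```

This only mirrors the ordering of the results shown above; it is not how the pipeline is implemented internally.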

We can also test the model with AutoModelForSequenceClassification and AutoTokenizer

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import torch

model_name = "GPT2-small-finetuned-amazon-reviews-en-classification"
user = "maximofn"
checkpoint = f"{user}/{model_name}"
num_classes = 5

tokenizer = AutoTokenizer.from_pretrained(checkpoint)
model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=num_classes).half().eval().to("cuda")
```

```python
tokens = tokenizer.encode("I love this product", return_tensors="pt").to(model.device)

with torch.no_grad():
    output = model(tokens)

logits = output.logits
labels = torch.softmax(logits, dim=1).cpu().numpy().tolist()   # logits -> probabilities
labels[0]
```

```
[0.003963470458984375,
 0.0026721954345703125,
 0.01397705078125,
 0.154541015625,
 0.82470703125]
```

If you want to try the model further, you can check it out at Maximofn/GPT2-small-finetuned-amazon-reviews-en-classification

Fine-tuning for Text Generation with Hugging Face

To make sure I don't run out of VRAM, I restart the notebook

Login

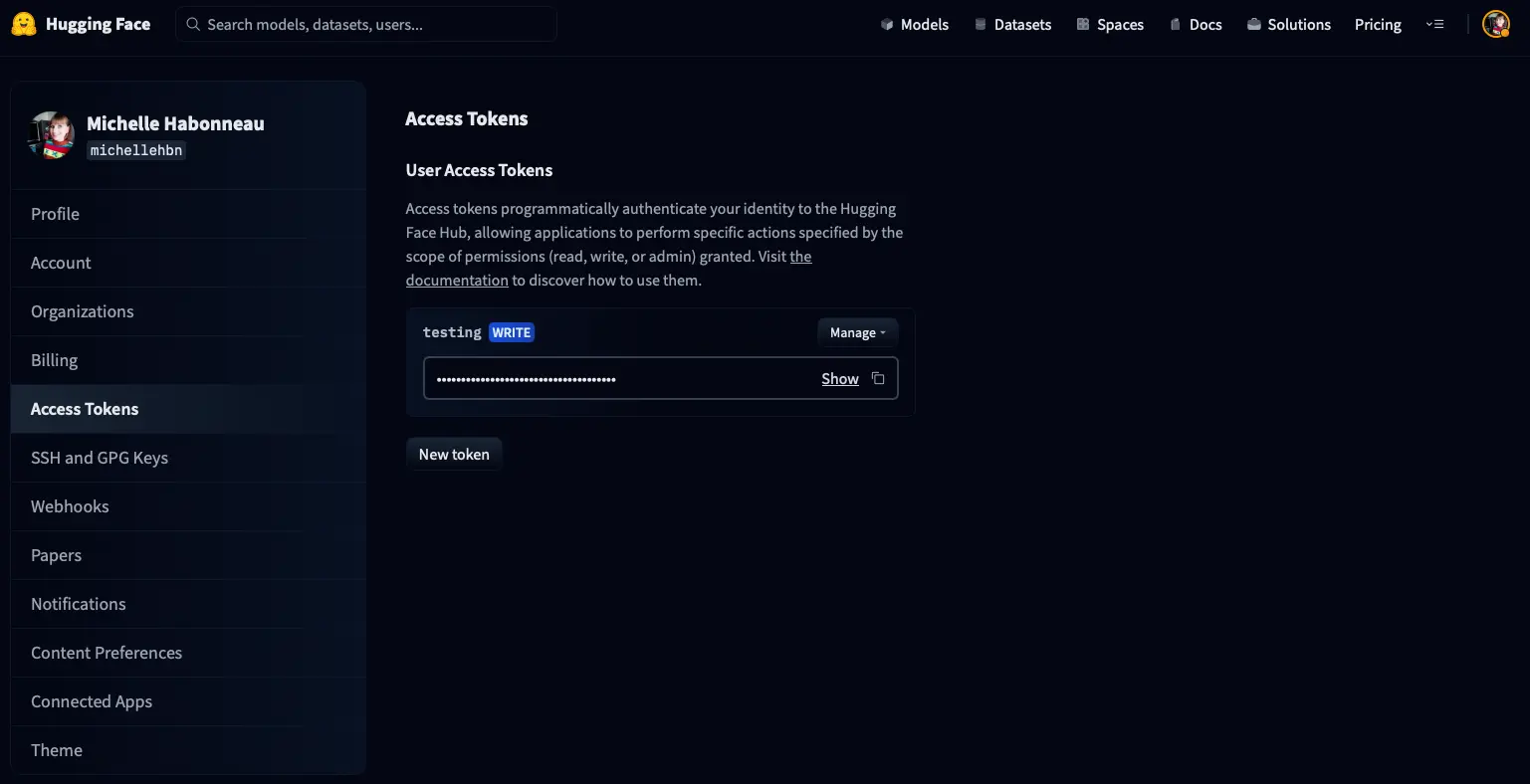

To upload the training results to the Hub, we first need to log in, and for that we need a token.

To create a token, go to the settings/tokens page of your account, where you will see something like this:

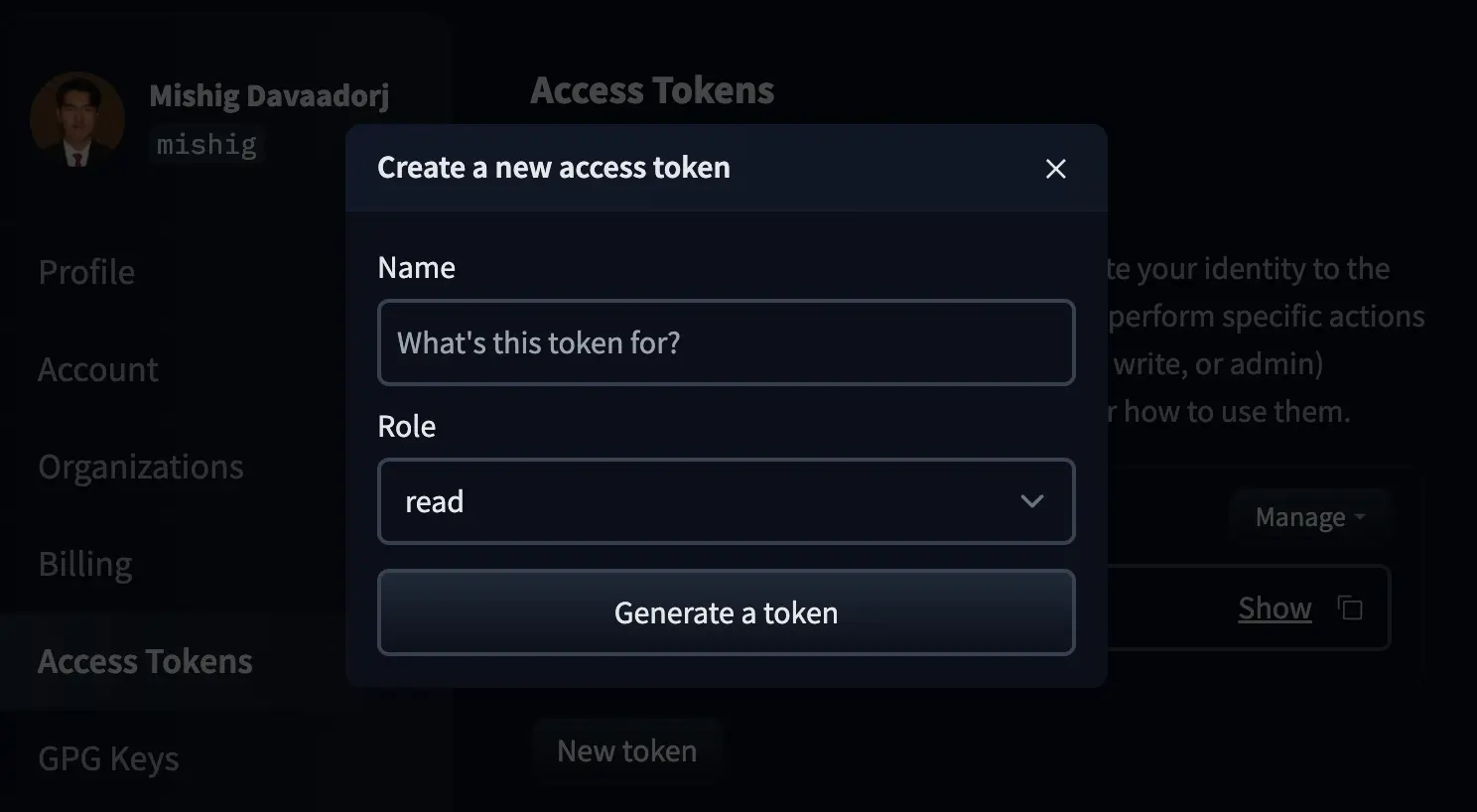

We click on New token and a window will appear to create a new token

We give the token a name and create it with the write role, or with the Fine-grained role, which allows us to select exactly which permissions the token will have.

Once created, we copy and paste it below.

```python
from huggingface_hub import notebook_login

notebook_login()
```

Dataset

We are going to use an English jokes dataset

```python
from datasets import load_dataset

jokes = load_dataset("Maximofn/short-jokes-dataset")
jokes
```

```
DatasetDict({
    train: Dataset({
        features: ['ID', 'Joke'],
        num_rows: 231657
    })
})
```

We see that it is a single training set of more than 200 thousand jokes. So later we will have to split it into train and evaluation.

Let's see a sample

```python
from random import randint

idx = randint(0, len(jokes['train']) - 1)
jokes['train'][idx]
```

```
{'ID': 198387,
 'Joke': 'My hot dislexic co-worker said she had an important massage to give me in her office... When I got there, she told me it can wait until I put on some clothes.'}
```

We see that it has a joke ID that we are not interested in at all and the joke itself

In case you have limited GPU memory, we create a subset of the dataset. Choose the percentage of jokes you want to use.

```python
percent_of_train_dataset = 1    # If you want 50% of the dataset, set this to 0.5

subset_dataset = jokes["train"].select(range(int(len(jokes["train"]) * percent_of_train_dataset)))
subset_dataset
```

```
Dataset({
    features: ['ID', 'Joke'],
    num_rows: 231657
})
```

Now we divide the subset into a training set, a validation set, and a test set

```python
percent_of_train_dataset = 0.90

# First split: 90% for training, 10% held out
split_dataset = subset_dataset.train_test_split(train_size=int(subset_dataset.num_rows * percent_of_train_dataset), seed=19, shuffle=False)
train_dataset = split_dataset["train"]
validation_test_dataset = split_dataset["test"]

# Second split: the held-out part is divided in half into validation and test
split_dataset = validation_test_dataset.train_test_split(train_size=int(validation_test_dataset.num_rows * 0.5), seed=19, shuffle=False)
validation_dataset = split_dataset["train"]
test_dataset = split_dataset["test"]

print(f"Size of the train set: {len(train_dataset)}. Size of the validation set: {len(validation_dataset)}. Size of the test set: {len(test_dataset)}")
```

```
Size of the train set: 208491. Size of the validation set: 11583. Size of the test set: 11583
```
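The three sizes follow directly from the two consecutive splits: 90% of the rows go to training, and the remaining rows are divided in half. The arithmetic can be checked without loading the dataset:

```python
total_rows = 231_657                          # rows in the jokes dataset

train_rows = int(total_rows * 0.90)           # first split: 90% for training
held_out_rows = total_rows - train_rows       # rows left for validation and test
validation_rows = int(held_out_rows * 0.5)    # second split: half for validation
test_rows = held_out_rows - validation_rows   # the rest for test

print(train_rows, validation_rows, test_rows)
```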

Tokenizer

We instantiate the tokenizer and assign its padding token so that we don't get an error as before.

```python
from transformers import AutoTokenizer

checkpoints = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
tokenizer.pad_token = tokenizer.eos_token
tokenizer.padding_side = "right"
```

Let's add two new tokens, one for the start and one for the end of a joke, to have more control

```python
new_tokens = ['<SJ>', '<EJ>']   # Start and end of joke tokens

num_added_tokens = tokenizer.add_tokens(new_tokens)
print(f"Added {num_added_tokens} tokens")
```

```
Added 2 tokens
```

We create a function to add the new tokens to the sentences

```python
joke_column = "Joke"

def format_joke(example):
    example[joke_column] = '<SJ> ' + example['Joke'] + ' <EJ>'
    return example
```

We select the columns that we don't need

```python
remove_columns = [column for column in train_dataset.column_names if column != joke_column]
remove_columns
```

```
['ID']
```

We format the dataset and remove the columns we don't need

```python
train_dataset = train_dataset.map(format_joke, remove_columns=remove_columns)
validation_dataset = validation_dataset.map(format_joke, remove_columns=remove_columns)
test_dataset = test_dataset.map(format_joke, remove_columns=remove_columns)
train_dataset, validation_dataset, test_dataset
```

```
(Dataset({
     features: ['Joke'],
     num_rows: 208491
 }),
 Dataset({
     features: ['Joke'],
     num_rows: 11583
 }),
 Dataset({
     features: ['Joke'],
     num_rows: 11583
 }))
```

Now we create a function to tokenize the jokes

```python
def tokenize_function(examples):
    return tokenizer(examples[joke_column], padding="max_length", truncation=True, max_length=768, return_tensors="pt")
```

We tokenize the dataset and remove the column with the text

```python
train_dataset = train_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
validation_dataset = validation_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
test_dataset = test_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
train_dataset, validation_dataset, test_dataset
```

```
(Dataset({
     features: ['input_ids', 'attention_mask'],
     num_rows: 208491
 }),
 Dataset({
     features: ['input_ids', 'attention_mask'],
     num_rows: 11583
 }),
 Dataset({
     features: ['input_ids', 'attention_mask'],
     num_rows: 11583
 }))
```

Model

Now we instantiate the model for text generation and set its padding token to the end-of-sequence token.

```python
from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(checkpoints)
model.config.pad_token_id = model.config.eos_token_id
```

We see the size of the model's vocabulary

```python
vocab_size = model.config.vocab_size
vocab_size
```

50257

It has 50257 tokens, which is the size of the GPT2 vocabulary. But since we added two new tokens to the tokenizer for the start and the end of a joke, we also have to add them to the model.

```python
model.resize_token_embeddings(len(tokenizer))

new_vocab_size = model.config.vocab_size
print(f"Old vocab size: {vocab_size}. New vocab size: {new_vocab_size}. Added {new_vocab_size - vocab_size} tokens")
```

```
Old vocab size: 50257. New vocab size: 50259. Added 2 tokens
```

The two new tokens have been added.
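Conceptually, resizing the token embeddings means appending one new row to the embedding matrix for each new token, while the rows of the existing tokens keep their trained values. The sketch below shows this idea on a tiny matrix made of Python lists. The helper `resize_embeddings` is hypothetical, and the random initialization with a standard deviation of 0.02 is an assumption for the example; the library may initialize the new rows differently.

```python
import random

def resize_embeddings(matrix, new_vocab_size, dim, seed=0):
    """Append randomly initialized rows until the matrix has new_vocab_size rows."""
    rng = random.Random(seed)
    resized = [row[:] for row in matrix]     # the trained rows are kept as they are
    while len(resized) < new_vocab_size:
        # New, untrained row for a newly added token (assumed initialization)
        resized.append([rng.gauss(0.0, 0.02) for _ in range(dim)])
    return resized

old = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]   # toy vocabulary of 3 tokens, dimension 2
new = resize_embeddings(old, new_vocab_size=5, dim=2)

print(len(old), len(new))
```

Because the new rows start untrained, the model only learns what `<SJ>` and `<EJ>` mean during the fine-tuning.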

Training

We set the training parameters

```python
from transformers import TrainingArguments

metric_name = "accuracy"
model_name = "GPT2-small-finetuned-Maximofn-short-jokes-dataset-casualLM"
output_dir = f"./training_results"
LR = 2e-5
BS_TRAIN = 28
BS_EVAL = 32
EPOCHS = 3
WEIGHT_DECAY = 0.01
WARMUP_STEPS = 100

training_args = TrainingArguments(
    model_name,
    eval_strategy="epoch",
    save_strategy="epoch",
    learning_rate=LR,
    per_device_train_batch_size=BS_TRAIN,
    per_device_eval_batch_size=BS_EVAL,
    warmup_steps=WARMUP_STEPS,
    num_train_epochs=EPOCHS,
    weight_decay=WEIGHT_DECAY,
    lr_scheduler_type="cosine",
    warmup_ratio = 0.1,
    fp16=True,
    load_best_model_at_end=True,
    # metric_for_best_model=metric_name,
    push_to_hub=True,
)
```

This time we don't use metric_for_best_model; we explain why after defining the trainer.

We define the trainer

```python
from transformers import Trainer

trainer = Trainer(
    model,
    training_args,
    train_dataset=train_dataset,
    eval_dataset=validation_dataset,
    tokenizer=tokenizer,
    # compute_metrics=compute_metrics,
)
```

In this case, we don't pass a compute_metrics function; instead, during evaluation, the loss will be used to evaluate the model. That's why when defining the arguments, we don't define metric_for_best_model, because we won't be using a metric to evaluate the model, but rather the loss.
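If a more readable number than the raw loss is wanted, a common option for language models is the perplexity, which is simply the exponential of the mean cross-entropy loss per token. The post does not compute it, so the following is only an optional sketch, with made-up loss values:

```python
import math

def perplexity(mean_cross_entropy):
    """Perplexity is the exponential of the mean per-token cross-entropy (in nats)."""
    return math.exp(mean_cross_entropy)

for loss in (4.0, 3.0, 2.0):     # made-up evaluation losses
    print(loss, round(perplexity(loss), 2))
```

A lower loss always gives a lower perplexity, so selecting the best checkpoint by loss or by perplexity leads to the same choice.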

We train

```python
trainer.train()
```

```
  0%|          | 0/625473 [00:00<?, ?it/s]
```

```
---------------------------------------------------------------------------
ValueError                                Traceback (most recent call last)
Cell In[19], line 1
----> 1 trainer.train()

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/trainer.py:1885, in Trainer.train(self, resume_from_checkpoint, trial, ignore_keys_for_eval, **kwargs)
-> 1885     return inner_training_loop(

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/trainer.py:2216, in Trainer._inner_training_loop(self, batch_size, args, resume_from_checkpoint, trial, ignore_keys_for_eval)
-> 2216     tr_loss_step = self.training_step(model, inputs)

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/trainer.py:3238, in Trainer.training_step(self, model, inputs)
-> 3238     loss = self.compute_loss(model, inputs)

File ~/miniconda3/envs/nlp_/lib/python3.11/site-packages/transformers/trainer.py:3282, in Trainer.compute_loss(self, model, inputs, return_outputs)
   3281 if isinstance(outputs, dict) and "loss" not in outputs:
-> 3282     raise ValueError(
   3283         "The model did not return a loss from the inputs, only the following keys: "
   3284         f"{','.join(outputs.keys())}. For reference, the inputs it received are {','.join(inputs.keys())}."
   3285     )

ValueError: The model did not return a loss from the inputs, only the following keys: logits,past_key_values. For reference, the inputs it received are input_ids,attention_mask.
```

As we can see, it gives us an error, telling us that the model does not return the loss value, which is key to being able to train. Let's see why.

Let's first see what an example from the dataset looks like

idx = randint(0, len(train_dataset) - 1)
sample = train_dataset[idx]
sample

{'input_ids': [50257,4162,750,262,18757,6451,2245,2491,30,4362,340,373,734,10032,13,220,50258,50256,50256,...,50256,50256,50256],'attention_mask': [1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,0,...,0,0,0]}

As we can see, we have a dictionary with the input_ids and the attention_mask. If we pass it to the model, we get this

import torch

output = model(
    input_ids=torch.Tensor(sample["input_ids"]).long().unsqueeze(0).to(model.device),
    attention_mask=torch.Tensor(sample["attention_mask"]).long().unsqueeze(0).to(model.device),
)
print(output.loss)

None

As we can see, it does not return a loss because the model expects a labels argument, which we have not provided. In the previous example, where we fine-tuned for text classification, we said that the labels had to be in a dataset field called labels, but this dataset has no such field.

If we now pass the input_ids as labels and look at the loss again

import torch

output = model(
    input_ids=torch.Tensor(sample["input_ids"]).long().unsqueeze(0).to(model.device),
    attention_mask=torch.Tensor(sample["attention_mask"]).long().unsqueeze(0).to(model.device),
    labels=torch.Tensor(sample["input_ids"]).long().unsqueeze(0).to(model.device),
)
print(output.loss)

tensor(102.1873, device='cuda:0', grad_fn=<NllLossBackward0>)

Now we get a loss
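Passing the input_ids as labels works because a causal language model is trained to predict the next token: the model shifts the labels internally, so the prediction at position i is compared with the token at position i + 1. A minimal sketch of that pairing in plain Python (the helper name next_token_pairs is hypothetical, it is not part of transformers):

```python
def next_token_pairs(input_ids):
    """Pair every input token with the token the model has to predict next."""
    return list(zip(input_ids[:-1], input_ids[1:]))


# First tokens of the sample shown above
pairs = next_token_pairs([50257, 4162, 750, 262])
for current_token, target_token in pairs:
    print(f"after {current_token} predict {target_token}")
```

The last token has no target, so a sequence of N tokens yields N - 1 training pairs.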

Therefore, we have two options: add a labels field to the dataset with the values of input_ids, or use a data collator from the transformers library. In this case we will use DataCollatorForLanguageModeling. Let's take a look at it.

from transformers import DataCollatorForLanguageModeling

my_data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

We pass the sample through this data_collator

collated_sample = my_data_collator([sample]).to(model.device)

We see what the output is

for key, value in collated_sample.items():
    print(f"{key} ({value.shape}): {value}")

input_ids (torch.Size([1, 768])): tensor([[50257, 4162, 750, 262, 18757, 6451, 2245, 2491, 30, 4362, 340, 373, 734, 10032, 13, 220, 50258, 50256, ..., 50256, 50256]], device='cuda:0')
attention_mask (torch.Size([1, 768])): tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, ..., 0, 0]], device='cuda:0')
labels (torch.Size([1, 768])): tensor([[50257, 4162, 750, 262, 18757, 6451, 2245, 2491, 30, 4362, 340, 373, 734, 10032, 13, 220, 50258, -100, ..., -100, -100]], device='cuda:0')

As can be seen, the data_collator has created a labels field with the values of input_ids, except at the padding positions, which have been set to -100. The value -100 is the index that the loss function ignores, so the padding does not contribute to the loss. Since we passed mlm=False when we defined the data_collator, we are doing causal language modeling rather than masked language modeling, so none of the original tokens is masked.
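What happens to the labels can be sketched in plain Python. This is only an illustration under stated assumptions: build_labels and masked_mean are hypothetical helpers, not the collator's code, and the per-token loss values are made up.

```python
IGNORE_INDEX = -100  # label value that PyTorch's cross-entropy loss skips by default


def build_labels(input_ids, pad_token_id):
    """Copy input_ids and replace every padding token with IGNORE_INDEX."""
    return [IGNORE_INDEX if token == pad_token_id else token for token in input_ids]


def masked_mean(per_token_losses, labels):
    """Average the per-token losses, skipping positions labelled IGNORE_INDEX."""
    kept = [loss for loss, label in zip(per_token_losses, labels) if label != IGNORE_INDEX]
    return sum(kept) / len(kept)


# Shortened version of the sample: three real tokens followed by two padding tokens
labels = build_labels([50257, 4162, 50258, 50256, 50256], pad_token_id=50256)
print(labels)

# Made-up per-token losses: the two large values at padding positions are ignored
print(masked_mean([2.0, 4.0, 3.0, 90.0, 90.0], labels))
```

Because the padding token here is the same as the end-of-sequence token, any real end-of-sequence token in the text would be ignored in the same way.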

Let's see if we get a loss with this data_collator

output = model(**collated_sample)
output.loss

tensor(102.7181, device='cuda:0', grad_fn=<NllLossBackward0>)

So we redefine the trainer with the data_collator and train again.

from transformers import DataCollatorForLanguageModeling

trainer = Trainer(
    model,
    training_args,
    train_dataset=train_dataset,
    eval_dataset=validation_dataset,
    tokenizer=tokenizer,
    data_collator=DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False),
)

trainer.train()


There were missing keys in the checkpoint model loaded: ['lm_head.weight'].

TrainOutput(global_step=22341, training_loss=3.505178199598342, metrics={'train_runtime': 9209.5353, 'train_samples_per_second': 67.916, 'train_steps_per_second': 2.426, 'total_flos': 2.45146666696704e+17, 'train_loss': 3.505178199598342, 'epoch': 3.0})

Evaluation

Once trained, we evaluate the model on the test dataset

trainer.evaluate(eval_dataset=test_dataset)


{'eval_loss': 3.201305866241455,'eval_runtime': 65.0033,'eval_samples_per_second': 178.191,'eval_steps_per_second': 5.569,'epoch': 3.0}

Publish the model

We create the model card

trainer.create_model_card()

We publish it

trainer.push_to_hub()

events.out.tfevents.1720875425.8de3af1b431d.6946.1: 0%| | 0.00/364 [00:00<?, ?B/s]

CommitInfo(commit_url='https://huggingface.co/Maximofn/GPT2-small-finetuned-Maximofn-short-jokes-dataset-casualLM/commit/d107b3bb0e02076483238f9975697761015ec390', commit_message='End of training', commit_description='', oid='d107b3bb0e02076483238f9975697761015ec390', pr_url=None, pr_revision=None, pr_num=None)

Usage of the model

We free as much memory as possible

import torch
import gc

def clear_hardwares():
    torch.clear_autocast_cache()
    torch.cuda.ipc_collect()
    torch.cuda.empty_cache()
    gc.collect()

clear_hardwares()
clear_hardwares()

We download the model and the tokenizer

from transformers import AutoTokenizer, AutoModelForCausalLM

user = "maximofn"
checkpoints = f"{user}/{model_name}"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
tokenizer.pad_token = tokenizer.eos_token
tokenizer.padding_side = "right"
model = AutoModelForCausalLM.from_pretrained(checkpoints)
model.config.pad_token_id = model.config.eos_token_id

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

We check that the tokenizer and the model have the 2 extra tokens we added

tokenizer_vocab = tokenizer.get_vocab()
model_vocab = model.config.vocab_size
print(f"tokenizer_vocab: {len(tokenizer_vocab)}. model_vocab: {model_vocab}")

tokenizer_vocab: 50259. model_vocab: 50259

We see that they have 50259 tokens, that is, the 50257 tokens of GPT2 plus the 2 that we have added.

We create a function to generate jokes

def generate_joke(prompt_text):
    text = f"<SJ> {prompt_text}"
    tokens = tokenizer(text, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output = model.generate(**tokens, max_new_tokens=256, eos_token_id=tokenizer.encode("<EJ>")[-1])
    return tokenizer.decode(output[0], skip_special_tokens=False)
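Conceptually, generation is a loop: ask the model for the next token, append it, and stop when the end token appears or the max_new_tokens budget is used up. A minimal sketch, assuming greedy decoding; greedy_generate and toy_model are hypothetical stand-ins (toy_model replaces the language model and the token ids are toy values):

```python
def greedy_generate(next_token_fn, prompt_ids, stop_id, max_new_tokens=256):
    """Append one token at a time until stop_id is produced or the budget runs out."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        token = next_token_fn(ids)
        ids.append(token)
        if token == stop_id:
            break
    return ids


def toy_model(ids):
    """Toy stand-in for a language model: always predicts the last id plus one."""
    return ids[-1] + 1


generated = greedy_generate(toy_model, prompt_ids=[1, 2], stop_id=5)
print(generated)
```

In the function above, the id of the `<EJ>` token plays the role of stop_id, so generation ends as soon as the model closes the joke.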

We generate a joke

generate_joke("Why didn't the frog cross the road?")

Setting `pad_token_id` to `eos_token_id`:50258 for open-end generation.

"<SJ> Why didn't the frog cross the road? Because he was frog-in-the-face. <EJ>"

If you want to try the model further, you can check it out at Maximofn/GPT2-small-finetuned-Maximofn-short-jokes-dataset-casualLM

Fine tuning for text classification with Pytorch

We repeat the training with Pytorch

We restart the notebook to make sure we start from a clean state

Dataset

We download the same dataset that we used when training with the Hugging Face libraries

from datasets import load_dataset

dataset = load_dataset("mteb/amazon_reviews_multi", "en")

We create a variable with the number of classes

num_classes = len(dataset['train'].unique('label'))
num_classes

5

Previously we processed the whole dataset to create a field called labels. That is no longer necessary: since we are going to write the training loop ourselves, we can work with the dataset's own label column.

Tokenizer

We create the tokenizer. We assign the padding token so that it doesn't give us an error like before.

from transformers import AutoTokenizer

checkpoint = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
tokenizer.pad_token = tokenizer.eos_token

We create a function to tokenize the dataset

def tokenize_function(examples):
    return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

We tokenize it. We remove columns that we don't need, but now we keep the text column.

dataset = dataset.map(tokenize_function, batched=True, remove_columns=['id', 'label_text'])

dataset

DatasetDict({train: Dataset({features: ['text', 'label', 'input_ids', 'attention_mask'],num_rows: 200000})validation: Dataset({features: ['text', 'label', 'input_ids', 'attention_mask'],num_rows: 5000})test: Dataset({features: ['text', 'label', 'input_ids', 'attention_mask'],num_rows: 5000})})

percentage = 1  # Fraction of each split to use (1 = the whole split)
subset_train = dataset['train'].select(range(int(len(dataset['train']) * percentage)))
subset_validation = dataset['validation'].select(range(int(len(dataset['validation']) * percentage)))
subset_test = dataset['test'].select(range(int(len(dataset['test']) * percentage)))
print(f"len subset_train: {len(subset_train)}, len subset_validation: {len(subset_validation)}, len subset_test: {len(subset_test)}")

len subset_train: 200000, len subset_validation: 5000, len subset_test: 5000

Model

We import the weights and assign the padding token

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=num_classes)
model.config.pad_token_id = model.config.eos_token_id

Some weights of GPT2ForSequenceClassification were not initialized from the model checkpoint at openai-community/gpt2 and are newly initialized: ['score.weight']You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

Device

We create the device where everything will be executed

import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

We move the model to the device and, while we're at it, convert it to FP16 to use less memory.

model.half().to(device)
print()
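Converting the weights to FP16 halves the memory they use, but FP16 has a much narrower range and lower precision than FP32, which can matter when training entirely in half precision. A minimal sketch using only the standard library (the helper to_fp16 is hypothetical); it rounds a few values to half precision to show a small update being lost, a tiny value underflowing, and a large value not fitting:

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack('e', struct.pack('e', value))[0]


print(to_fp16(1.0 + 1e-4))  # a small update next to 1.0
print(to_fp16(1e-8))        # a very small value

try:
    print(to_fp16(70000.0))  # larger than the FP16 maximum (65504)
except OverflowError as error:
    print(f"overflow: {error}")
```

Effects like these are a common reason why training with weights stored purely in FP16 can become numerically unstable.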

Pytorch Dataset

We create a PyTorch dataset

from torch.utils.data import Dataset

class ReviewsDataset(Dataset):
    def __init__(self, huggingface_dataset):
        self.dataset = huggingface_dataset

    def __getitem__(self, idx):
        label = self.dataset[idx]['label']
        input_ids = torch.tensor(self.dataset[idx]['input_ids'])
        attention_mask = torch.tensor(self.dataset[idx]['attention_mask'])
        return input_ids, attention_mask, label

    def __len__(self):
        return len(self.dataset)

We instantiate the datasets

train_dataset = ReviewsDataset(subset_train)
validatation_dataset = ReviewsDataset(subset_validation)
test_dataset = ReviewsDataset(subset_test)

Let's see a sample

input_ids, at_mask, label = train_dataset[0]
input_ids.shape, at_mask.shape, label

(torch.Size([768]), torch.Size([768]), 0)

Pytorch Dataloader

We now create a DataLoader from PyTorch

from torch.utils.data import DataLoader

BS = 12

train_loader = DataLoader(train_dataset, batch_size=BS, shuffle=True)
validation_loader = DataLoader(validatation_dataset, batch_size=BS)
test_loader = DataLoader(test_dataset, batch_size=BS)

Let's see a sample

input_ids, at_mask, labels = next(iter(train_loader))
input_ids.shape, at_mask.shape, labels

(torch.Size([12, 768]),torch.Size([12, 768]),tensor([2, 1, 2, 0, 3, 3, 0, 4, 3, 3, 4, 2]))

To make sure everything is fine, we pass the sample to the model to see that everything works well. First, we pass the tokens to the device.

input_ids = input_ids.to(device)
at_mask = at_mask.to(device)
labels = labels.to(device)

Now we pass it to the model

output = model(input_ids=input_ids, attention_mask=at_mask, labels=labels)
output.keys()

odict_keys(['loss', 'logits', 'past_key_values'])

As we can see, it gives us the loss and the logits

output['loss']

tensor(5.9414, device='cuda:0', dtype=torch.float16,grad_fn=<NllLossBackward0>)

output['logits']

tensor([[ 6.1953e+00, -1.2275e+00, -2.4824e+00, 5.8867e+00, -1.4734e+01],[ 5.4062e+00, -8.4570e-01, -2.3203e+00, 5.1055e+00, -1.1555e+01],[ 6.1641e+00, -9.3066e-01, -2.5664e+00, 6.0039e+00, -1.4570e+01],[ 5.2266e+00, -4.2358e-01, -2.0801e+00, 4.7461e+00, -1.1570e+01],[ 3.8184e+00, -2.3460e-03, -1.7666e+00, 3.4160e+00, -7.7969e+00],[ 4.1641e+00, -4.8169e-01, -1.6914e+00, 3.9941e+00, -8.7734e+00],[ 4.6758e+00, -3.0298e-01, -2.1641e+00, 4.1055e+00, -9.3359e+00],[ 4.1953e+00, -3.2471e-01, -2.1875e+00, 3.9375e+00, -8.3438e+00],[-1.1650e+00, 1.3564e+00, -6.2158e-01, -6.8115e-01, 4.8672e+00],[ 4.4961e+00, -8.7891e-02, -2.2793e+00, 4.2812e+00, -9.3359e+00],[ 4.9336e+00, -2.6627e-03, -2.1543e+00, 4.3711e+00, -1.0742e+01],[ 5.9727e+00, -4.3152e-02, -1.4551e+00, 4.3438e+00, -1.2117e+01]],device='cuda:0', dtype=torch.float16, grad_fn=<IndexBackward0>)
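For single-label classification, the loss returned by the model is the cross-entropy between the logits and the labels, averaged over the batch. A minimal sketch for a single sample in plain Python (cross_entropy is a hypothetical helper, not the library implementation). A model that is completely undecided between the 5 classes gives ln(5), about 1.61, which is a useful reference for the value above:

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy of one sample: log-sum-exp of the logits minus the logit of the true class."""
    max_logit = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[label]


# Equal logits for the 5 classes: the model is undecided
uniform_loss = cross_entropy([0.0, 0.0, 0.0, 0.0, 0.0], label=2)
print(uniform_loss)
print(math.log(5))
```

A loss well above that reference, as here, means the untrained classification head is confidently wrong on several samples of the batch.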

Metric

Let's create a function to get the metric, which in this case will be the accuracy

def predicted_labels(logits):
    percent = torch.softmax(logits, dim=1)
    predictions = torch.argmax(percent, dim=1)
    return predictions

def compute_accuracy(logits, labels):
    predictions = predicted_labels(logits)
    correct = (predictions == labels).float()
    return correct.mean()
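The same metric can be sketched without PyTorch to make the computation explicit. Softmax preserves the order of the logits, so taking the argmax of the logits directly gives the same predictions. The helpers argmax and accuracy and the toy values below are hypothetical, chosen only for illustration:

```python
def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(batch_logits, labels):
    """Fraction of samples whose highest logit is at the true label."""
    predictions = [argmax(row) for row in batch_logits]
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return correct / len(labels)


# Toy batch: 4 samples, 3 classes
toy_logits = [[2.0, 0.1, -1.0], [0.3, 0.2, 1.5], [0.0, 3.0, 1.0], [1.0, 0.5, 0.2]]
toy_labels = [0, 2, 2, 1]
print(accuracy(toy_logits, toy_labels))
```

Here the first two samples are predicted correctly and the last two are not, so the accuracy is one half.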

Let's see if it calculates it correctly

compute_accuracy(output['logits'], labels).item()

0.1666666716337204

Optimizer

Since we are going to need an optimizer, we create one

from transformers import AdamW

LR = 2e-5
optimizer = AdamW(model.parameters(), lr=LR)

/usr/local/lib/python3.10/dist-packages/transformers/optimization.py:588: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warningwarnings.warn(

Training

We create the training loop

from tqdm import tqdm

EPOCHS = 3

for epoch in range(EPOCHS):
    # Training
    model.train()
    train_loss = 0
    progresbar = tqdm(train_loader, total=len(train_loader), desc=f'Epoch {epoch + 1}')
    for input_ids, at_mask, labels in progresbar:
        input_ids = input_ids.to(device)
        at_mask = at_mask.to(device)
        labels = labels.to(device)

        output = model(input_ids=input_ids, attention_mask=at_mask, labels=labels)
        loss = output['loss']
        train_loss += loss.item()

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        progresbar.set_postfix({'train_loss': loss.item()})
    train_loss /= len(train_loader)
    progresbar.set_postfix({'train_loss': train_loss})

    # Validation: gradients are not needed and the metrics are reset every epoch
    model.eval()
    valid_loss = 0
    accuracy = 0
    progresbar = tqdm(validation_loader, total=len(validation_loader), desc=f'Epoch {epoch + 1}')
    with torch.no_grad():
        for input_ids, at_mask, labels in progresbar:
            input_ids = input_ids.to(device)
            at_mask = at_mask.to(device)
            labels = labels.to(device)

            output = model(input_ids=input_ids, attention_mask=at_mask, labels=labels)
            loss = output['loss']
            valid_loss += loss.item()

            step_accuracy = compute_accuracy(output['logits'], labels).item()
            accuracy += step_accuracy
            progresbar.set_postfix({'valid_loss': loss.item(), 'accuracy': step_accuracy})
    valid_loss /= len(validation_loader)
    accuracy /= len(validation_loader)
    progresbar.set_postfix({'valid_loss': valid_loss, 'accuracy': accuracy})

Epoch 1: 100%|██████████| 16667/16667 [44:13<00:00, 6.28it/s, train_loss=nan]
Epoch 1: 100%|██████████| 417/417 [00:32<00:00, 12.72it/s, valid_loss=nan, accuracy=0]
Epoch 2: 100%|██████████| 16667/16667 [44:06<00:00, 6.30it/s, train_loss=nan]
Epoch 2: 100%|██████████| 417/417 [00:32<00:00, 12.77it/s, valid_loss=nan, accuracy=0]
Epoch 3: 100%|██████████| 16667/16667 [44:03<00:00, 6.30it/s, train_loss=nan]
Epoch 3: 100%|██████████| 417/417 [00:32<00:00, 12.86it/s, valid_loss=nan, accuracy=0]
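The number of iterations shown in the progress bars follows from the dataset sizes and the batch size: a DataLoader that keeps the last incomplete batch yields the number of samples divided by the batch size, rounded up. A minimal sketch (steps_per_epoch is a hypothetical helper):

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches a DataLoader yields per epoch when the last partial batch is kept."""
    return math.ceil(num_samples / batch_size)


print(steps_per_epoch(200_000, 12))  # training set
print(steps_per_epoch(5_000, 12))    # validation set
```

With 200,000 training samples and 5,000 validation samples in batches of 12, this gives the 16667 and 417 steps of the logs.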

Usage of the model

Let's test the model we have trained

First we tokenize a text

input_tokens = tokenize_function({"text": "I love this product. It is amazing."})
input_tokens['input_ids'].shape, input_tokens['attention_mask'].shape

(torch.Size([1, 768]), torch.Size([1, 768]))

Now we pass it to the model

output = model(input_ids=input_tokens['input_ids'].to(device), attention_mask=input_tokens['attention_mask'].to(device))
output['logits']

tensor([[nan, nan, nan, nan, nan]], device='cuda:0', dtype=torch.float16,grad_fn=<IndexBackward0>)

We see the predictions of those logits

predicted = predicted_labels(output['logits'])
predicted

tensor([0], device='cuda:0')

Fine tuning for text generation with Pytorch

We repeat the training with Pytorch

We restart the notebook to make sure we start from a clean state

Dataset

We download the jokes dataset again

from datasets import load_dataset

jokes = load_dataset("Maximofn/short-jokes-dataset")
jokes

DatasetDict({train: Dataset({features: ['ID', 'Joke'],num_rows: 231657})})

We create a subset in case there is limited memory

percent_of_train_dataset = 1  # If you want 50% of the dataset, set this to 0.5
subset_dataset = jokes["train"].select(range(int(len(jokes["train"]) * percent_of_train_dataset)))
subset_dataset

Dataset({features: ['ID', 'Joke'],num_rows: 231657})

We divide the dataset into training, validation, and test subsets.

percent_of_train_dataset = 0.90

split_dataset = subset_dataset.train_test_split(train_size=int(subset_dataset.num_rows * percent_of_train_dataset), seed=19, shuffle=False)
train_dataset = split_dataset["train"]
validation_test_dataset = split_dataset["test"]

split_dataset = validation_test_dataset.train_test_split(train_size=int(validation_test_dataset.num_rows * 0.5), seed=19, shuffle=False)
validation_dataset = split_dataset["train"]
test_dataset = split_dataset["test"]

print(f"Size of the train set: {len(train_dataset)}. Size of the validation set: {len(validation_dataset)}. Size of the test set: {len(test_dataset)}")

Size of the train set: 208491. Size of the validation set: 11583. Size of the test set: 11583
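The sizes printed above follow from the two consecutive splits: 90% of the rows go to training, and the remaining rows are divided in half between validation and test. A minimal sketch of that arithmetic (split_sizes is a hypothetical helper that only reproduces the integer rounding, it does not split any data):

```python
def split_sizes(num_rows, train_fraction=0.90):
    """Sizes of the train, validation and test subsets produced by the two splits."""
    train_size = int(num_rows * train_fraction)
    remaining = num_rows - train_size
    validation_size = int(remaining * 0.5)
    test_size = remaining - validation_size
    return train_size, validation_size, test_size


print(split_sizes(231_657))
```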

Tokenizer

We initialize the tokenizer and use the end-of-sequence token as the padding token

from transformers import AutoTokenizer

checkpoints = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
tokenizer.pad_token = tokenizer.eos_token
tokenizer.padding_side = "right"

We add the special start of joke and end of joke tokens

new_tokens = ['<SJ>', '<EJ>']  # Start and end of joke tokens
num_added_tokens = tokenizer.add_tokens(new_tokens)
print(f"Added {num_added_tokens} tokens")

Added 2 tokens

We add them to the dataset

joke_column = "Joke"

def format_joke(example):
    example[joke_column] = '<SJ> ' + example['Joke'] + ' <EJ>'
    return example

remove_columns = [column for column in train_dataset.column_names if column != joke_column]
train_dataset = train_dataset.map(format_joke, remove_columns=remove_columns)
validation_dataset = validation_dataset.map(format_joke, remove_columns=remove_columns)
test_dataset = test_dataset.map(format_joke, remove_columns=remove_columns)
train_dataset, validation_dataset, test_dataset

(Dataset({features: ['Joke'],num_rows: 208491}),Dataset({features: ['Joke'],num_rows: 11583}),Dataset({features: ['Joke'],num_rows: 11583}))

We tokenize the dataset

def tokenize_function(examples):
    return tokenizer(examples[joke_column], padding="max_length", truncation=True, max_length=768, return_tensors="pt")

train_dataset = train_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
validation_dataset = validation_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
test_dataset = test_dataset.map(tokenize_function, batched=True, remove_columns=[joke_column])
train_dataset, validation_dataset, test_dataset

(Dataset({features: ['input_ids', 'attention_mask'],num_rows: 208491}),Dataset({features: ['input_ids', 'attention_mask'],num_rows: 11583}),Dataset({features: ['input_ids', 'attention_mask'],num_rows: 11583}))

Model

We instantiate the model, assign the padding token, and add the new joke start and end tokens.

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(checkpoints)
model.config.pad_token_id = model.config.eos_token_id
model.resize_token_embeddings(len(tokenizer))

Embedding(50259, 768)

Device

We create the device and pass the model to the device

import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.half().to(device)
print()

Pytorch Dataset

We create a PyTorch dataset

from torch.utils.data import Dataset

class JokesDataset(Dataset):
    def __init__(self, huggingface_dataset):
        self.dataset = huggingface_dataset

    def __getitem__(self, idx):
        input_ids = torch.tensor(self.dataset[idx]['input_ids'])
        attention_mask = torch.tensor(self.dataset[idx]['attention_mask'])
        return input_ids, attention_mask

    def __len__(self):
        return len(self.dataset)

We instantiate the training, validation, and test datasets.

train_pytorch_dataset = JokesDataset(train_dataset)
validation_pytorch_dataset = JokesDataset(validation_dataset)
test_pytorch_dataset = JokesDataset(test_dataset)

Let's see a sample

input_ids, attention_mask = train_pytorch_dataset[0]
input_ids.shape, attention_mask.shape

(torch.Size([768]), torch.Size([768]))

Pytorch Dataloader

We create the dataloaders

from torch.utils.data import DataLoader

BS = 28

train_loader = DataLoader(train_pytorch_dataset, batch_size=BS, shuffle=True)
validation_loader = DataLoader(validation_pytorch_dataset, batch_size=BS)
test_loader = DataLoader(test_pytorch_dataset, batch_size=BS)

We see a sample

input_ids, attention_mask = next(iter(train_loader))
input_ids.shape, attention_mask.shape

(torch.Size([28, 768]), torch.Size([28, 768]))

We pass it to the model

output = model(input_ids.to(device), attention_mask=attention_mask.to(device))
output.keys()

odict_keys(['logits', 'past_key_values'])

As we can see, we don't have a loss value. As we've seen, we need to pass it the input_ids and the labels.

output = model(input_ids.to(device), attention_mask=attention_mask.to(device), labels=input_ids.to(device))
output.keys()

odict_keys(['loss', 'logits', 'past_key_values'])

Now we have loss

output['loss'].item()

80.5625

Optimizer

We create an optimizer

from transformers import AdamW

LR = 5e-5
optimizer = AdamW(model.parameters(), lr=LR)

/usr/local/lib/python3.10/dist-packages/transformers/optimization.py:588: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warningwarnings.warn(

Training

We create the training loop

from tqdm import tqdm

EPOCHS = 3

for epoch in range(EPOCHS):
    model.train()
    train_loss = 0
    progresbar = tqdm(train_loader, total=len(train_loader), desc=f'Epoch {epoch + 1}')
    for input_ids, at_mask in progresbar:
        input_ids = input_ids.to(device)
        at_mask = at_mask.to(device)

        output = model(input_ids=input_ids, attention_mask=at_mask, labels=input_ids)
        loss = output['loss']
        train_loss += loss.item()

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        progresbar.set_postfix({'train_loss': loss.item()})
    train_loss /= len(train_loader)
    progresbar.set_postfix({'train_loss': train_loss})

Epoch 1: 100%|██████████| 7447/7447 [51:07<00:00, 2.43it/s, train_loss=nan]
Epoch 2: 100%|██████████| 7447/7447 [51:06<00:00, 2.43it/s, train_loss=nan]
Epoch 3: 100%|██████████| 7447/7447 [51:07<00:00, 2.43it/s, train_loss=nan]

Usage of the model

We test the model

def generate_text(decoded_joke, max_new_tokens=100, stop_token='<EJ>', top_k=0, temperature=1.0):
    # top_k and temperature are accepted but not used: decoding is greedy
    for _ in range(max_new_tokens):
        # Tokenize without padding so that position -1 is the last real token
        input_tokens = tokenizer(decoded_joke, return_tensors="pt")
        with torch.no_grad():
            output = model(input_tokens['input_ids'].to(device), attention_mask=input_tokens['attention_mask'].to(device))
        # Greedy decoding: take the most likely next token
        nex_token = torch.argmax(output['logits'][:, -1, :], dim=-1).item()
        nex_token_decoded = tokenizer.decode(nex_token)
        if nex_token_decoded == stop_token:
            break
        decoded_joke = decoded_joke + nex_token_decoded
    return decoded_joke
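One detail matters when picking the next token from the logits: if the input is right-padded to a fixed length, position -1 is a padding position, and the logits of the last real token sit at the index given by the attention mask. A minimal sketch (last_real_token_index is a hypothetical helper and the mask is a toy value):

```python
def last_real_token_index(attention_mask):
    """Index of the last non-padding position in a right-padded sequence."""
    return sum(attention_mask) - 1


attention_mask = [1, 1, 1, 1, 0, 0, 0, 0]  # 4 real tokens followed by 4 padding tokens
print(last_real_token_index(attention_mask))  # position of the last real token
print(len(attention_mask) - 1)                # position -1, which is padding here
```

Reading the next-token prediction from the first index rather than the second is what makes the generated text continue the prompt.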

generated_text = generate_text("<SJ> Why didn't the frog cross the road")
generated_text

"<SJ> Why didn't the frog cross the road!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"