Disclaimer: This post has been translated to English using a machine translation model. Please, let me know if you find any mistakes.

Accelerate is a Hugging Face library that allows running the same PyTorch code in any distributed setup by adding only four lines of code.

Installation

To install accelerate with pip, simply run:

pip install accelerate

And with conda:

conda install -c conda-forge accelerate

Configuration

In each environment where accelerate is installed, the first thing to do is to configure it. To do this, run in a terminal:

`accelerate config`

!accelerate config

--------------------------------------------------------------------------------
In which compute environment are you running?
This machine
--------------------------------------------------------------------------------
multi-GPU
How many different machines will you use (use more than 1 for multi-node training)? [1]: 1
Should distributed operations be checked while running for errors? This can avoid timeout issues but will be slower. [yes/NO]: no
Do you wish to optimize your script with torch dynamo?[yes/NO]:no
Do you want to use DeepSpeed? [yes/NO]: no
Do you want to use FullyShardedDataParallel? [yes/NO]: no
Do you want to use Megatron-LM ? [yes/NO]: no
How many GPU(s) should be used for distributed training? [1]:2
What GPU(s) (by id) should be used for training on this machine as a comma-seperated list? [all]:0,1
--------------------------------------------------------------------------------
Do you wish to use FP16 or BF16 (mixed precision)?
no
accelerate configuration saved at ~/.cache/huggingface/accelerate/default_config.yaml

In my case, the answers were:

- In which compute environment are you running?

- [x] "This machine"

- [_] "AWS (Amazon SageMaker)"

I want to set it up on my computer

- What type of machine are you using?

- [_] multi-CPU

- [_] multi-XPU

- [x] multi-GPU

- [_] multi-NPU

- [_] TPU

Since I have 2 GPUs and want to run distributed code on them, I choose `multi-GPU`.

- How many different machines will you use (use more than 1 for multi-node training)? [1]:

- 1

I choose `1` because I'm only going to run it on my computer.

- Should distributed operations be checked while running for errors? This can avoid timeout issues but will be slower. [yes/NO]:

- no

With this option, you can choose to have `accelerate` check for errors during execution, but that would make it slower, so I choose `no`, and if there are any errors I change it to `yes`.

- Do you wish to optimize your script with torch dynamo? [yes/NO]:

- no

- Do you want to use FullyShardedDataParallel? [yes/NO]:

- no

- Do you want to use Megatron-LM? [yes/NO]:

- no

- How many GPU(s) should be used for distributed training? [1]:

- 2

I choose `2` because I have 2 GPUs.

- What GPU(s) (by id) should be used for training on this machine as a comma-separated list? [all]:

- 0,1

I choose `0,1` because I want to use both GPUs.

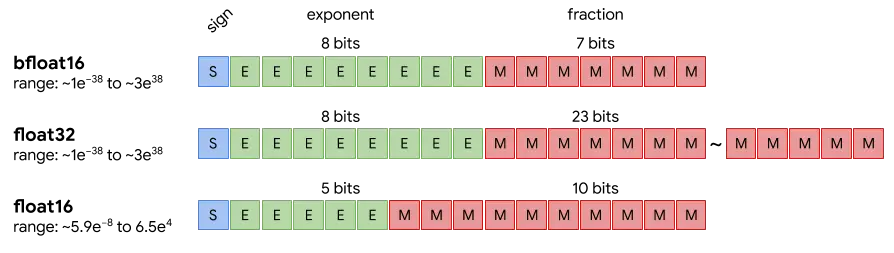

- Do you wish to use FP16 or BF16 (mixed precision)?

- [x] no

- [_] fp16

- [_] bf16

- [_] fp8

For now I choose `no`, because to simplify the code when not using `accelerate` we are going to train in fp32, but ideally we would use fp16.

The configuration will be saved in ~/.cache/huggingface/accelerate/default_config.yaml and can be modified at any time. Let's see what's inside.

!cat ~/.cache/huggingface/accelerate/default_config.yaml

compute_environment: LOCAL_MACHINE
debug: false
distributed_type: MULTI_GPU
downcast_bf16: 'no'
gpu_ids: 0,1
machine_rank: 0
main_training_function: main
mixed_precision: fp16
num_machines: 1
num_processes: 2
rdzv_backend: static
same_network: true
tpu_env: []
tpu_use_cluster: false
tpu_use_sudo: false
use_cpu: false
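A quick sanity check on this file is that the number of processes matches the number of GPU ids, since we asked for one process per GPU. The following is only a minimal sketch: `CONFIG_TEXT` is a hand-copied subset of the file above, and `parse_flat_config` is a hypothetical helper that stands in for a real YAML parser.

```python
# Hand-copied subset of the default_config.yaml shown above (illustrative only).
CONFIG_TEXT = """\
distributed_type: MULTI_GPU
gpu_ids: 0,1
num_machines: 1
num_processes: 2
"""


def parse_flat_config(text):
    """Parse simple 'key: value' lines; a stand-in for a real YAML parser."""
    config = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            config[key.strip()] = value.strip()
    return config


config = parse_flat_config(CONFIG_TEXT)
gpu_ids = config["gpu_ids"].split(",")

# One process per GPU is what the answers given during configuration imply.
processes_match_gpus = int(config["num_processes"]) == len(gpu_ids)
print(processes_match_gpus)
```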

Another way to view the configuration we have is by running in a terminal:

`accelerate env`

!accelerate env

Copy-and-paste the text below in your GitHub issue

- `Accelerate` version: 0.28.0
- Platform: Linux-5.15.0-105-generic-x86_64-with-glibc2.31
- Python version: 3.11.8
- Numpy version: 1.26.4
- PyTorch version (GPU?): 2.2.1+cu121 (True)
- PyTorch XPU available: False
- PyTorch NPU available: False
- System RAM: 31.24 GB
- GPU type: NVIDIA GeForce RTX 3090
- `Accelerate` default config:
    - compute_environment: LOCAL_MACHINE
    - distributed_type: MULTI_GPU
    - mixed_precision: fp16
    - use_cpu: False
    - debug: False
    - num_processes: 2
    - machine_rank: 0
    - num_machines: 1
    - gpu_ids: 0,1
    - rdzv_backend: static
    - same_network: True
    - main_training_function: main
    - downcast_bf16: no
    - tpu_use_cluster: False
    - tpu_use_sudo: False
    - tpu_env: []

Once we have configured accelerate we can test if we have done it correctly by running in a terminal:

`accelerate test`

!accelerate test

Running: accelerate-launch ~/miniconda3/envs/nlp/lib/python3.11/site-packages/accelerate/test_utils/scripts/test_script.py
stdout: **Initialization**
stdout: Testing, testing. 1, 2, 3.
stdout: Distributed environment: DistributedType.MULTI_GPU Backend: nccl
stdout: Num processes: 2
stdout: Process index: 0
stdout: Local process index: 0
stdout: Device: cuda:0
stdout:
stdout: Mixed precision type: fp16
stdout:
stdout: Distributed environment: DistributedType.MULTI_GPU Backend: nccl
stdout: Num processes: 2
stdout: Process index: 1
stdout: Local process index: 1
stdout: Device: cuda:1
stdout:
stdout: Mixed precision type: fp16
stdout:
stdout:
stdout: **Test process execution**
stdout:
stdout: **Test split between processes as a list**
stdout:
stdout: **Test split between processes as a dict**
stdout:
stdout: **Test split between processes as a tensor**
stdout:
stdout: **Test random number generator synchronization**
stdout: All rng are properly synched.
stdout:
stdout: **DataLoader integration test**
stdout: 0 1 tensor([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17,
stdout: 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35,
stdout: 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53,
stdout: 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], device='cuda:1') <class 'accelerate.data_loader.DataLoaderShard'>
stdout: tensor([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17,
stdout: 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35,
stdout: 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53,
stdout: 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], device='cuda:0') <class 'accelerate.data_loader.DataLoaderShard'>
stdout: Non-shuffled dataloader passing.
stdout: Shuffled dataloader passing.
stdout: Non-shuffled central dataloader passing.
stdout: Shuffled central dataloader passing.
stdout:
stdout: **Training integration test**
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Training yielded the same results on one CPU or distributed setup with no batch split.
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Training yielded the same results on one CPU or distributes setup with batch split.
stdout: FP16 training check.
stdout: FP16 training check.
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Keep fp32 wrapper check.
stdout: Keep fp32 wrapper check.
stdout: BF16 training check.
stdout: BF16 training check.
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout:
stdout: Training yielded the same results on one CPU or distributed setup with no batch split.
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: FP16 training check.
stdout: Training yielded the same results on one CPU or distributes setup with batch split.
stdout: FP16 training check.
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Keep fp32 wrapper check.
stdout: Keep fp32 wrapper check.
stdout: BF16 training check.
stdout: BF16 training check.
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32
stdout:
stdout: **Breakpoint trigger test**
Test is a success! You are ready for your distributed training!

We see that it ends by saying Test is a success! You are ready for your distributed training! so everything is correct.

Training

Optimization of Training

Base code

Let's start by creating a basic training code and then we'll optimize it to see how it's done and how it improves.

First, let's find a dataset. In my case, I will use the tweet_eval dataset, which is a tweet classification dataset. Specifically, I will download the emoji subset that classifies tweets with emojis.

from datasets import load_dataset

dataset = load_dataset("tweet_eval", "emoji")
dataset

DatasetDict({
    train: Dataset({
        features: ['text', 'label'],
        num_rows: 45000
    })
    test: Dataset({
        features: ['text', 'label'],
        num_rows: 50000
    })
    validation: Dataset({
        features: ['text', 'label'],
        num_rows: 5000
    })
})

dataset["train"].info

DatasetInfo(description='', citation='', homepage='', license='', features={'text': Value(dtype='string', id=None), 'label': ClassLabel(names=['❤', '😍', '😂', '💕', '🔥', '😊', '😎', '✨', '💙', '😘', '📷', '🇺🇸', '☀', '💜', '😉', '💯', '😁', '🎄', '📸', '😜'], id=None)}, post_processed=None, supervised_keys=None, task_templates=None, builder_name='parquet', dataset_name='tweet_eval', config_name='emoji', version=0.0.0, splits={'train': SplitInfo(name='train', num_bytes=3808792, num_examples=45000, shard_lengths=None, dataset_name='tweet_eval'), 'test': SplitInfo(name='test', num_bytes=4262151, num_examples=50000, shard_lengths=None, dataset_name='tweet_eval'), 'validation': SplitInfo(name='validation', num_bytes=396704, num_examples=5000, shard_lengths=None, dataset_name='tweet_eval')}, download_checksums={'hf://datasets/tweet_eval@b3a375baf0f409c77e6bc7aa35102b7b3534f8be/emoji/train-00000-of-00001.parquet': {'num_bytes': 2609973, 'checksum': None}, 'hf://datasets/tweet_eval@b3a375baf0f409c77e6bc7aa35102b7b3534f8be/emoji/test-00000-of-00001.parquet': {'num_bytes': 3047341, 'checksum': None}, 'hf://datasets/tweet_eval@b3a375baf0f409c77e6bc7aa35102b7b3534f8be/emoji/validation-00000-of-00001.parquet': {'num_bytes': 281994, 'checksum': None}}, download_size=5939308, post_processing_size=None, dataset_size=8467647, size_in_bytes=14406955)

Let's see the classes

print(dataset["train"].info.features["label"].names)

['❤', '😍', '😂', '💕', '🔥', '😊', '😎', '✨', '💙', '😘', '📷', '🇺🇸', '☀', '💜', '😉', '💯', '😁', '🎄', '📸', '😜']

And the number of classes

num_classes = len(dataset["train"].info.features["label"].names)
num_classes

20

We see that the dataset has 20 classes

Let's look at the maximum sequence length, in characters, of each split

max_len_train = 0
max_len_val = 0
max_len_test = 0

split = "train"
for i in range(len(dataset[split])):
    len_i = len(dataset[split][i]["text"])
    if len_i > max_len_train:
        max_len_train = len_i

split = "validation"
for i in range(len(dataset[split])):
    len_i = len(dataset[split][i]["text"])
    if len_i > max_len_val:
        max_len_val = len_i

split = "test"
for i in range(len(dataset[split])):
    len_i = len(dataset[split][i]["text"])
    if len_i > max_len_test:
        max_len_test = len_i

max_len_train, max_len_val, max_len_test

(142, 139, 167)

These lengths are measured in characters, not tokens, so we set a general maximum sequence length of 130 tokens for tokenization

max_len = 130
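The three loops above all do the same thing, so they can be written more compactly. Here is a minimal sketch of that idea; `toy_dataset` is a hypothetical stand-in for the real splits so that the snippet runs without downloading anything, and like the loops above it counts characters, not tokens.

```python
# Toy stand-in for the tweet_eval splits (hypothetical texts).
toy_dataset = {
    "train": [{"text": "hello world"}, {"text": "hi"}],
    "validation": [{"text": "good morning everyone"}],
    "test": [{"text": "ok"}, {"text": "see you"}],
}


def max_text_length(split):
    """Return the length, in characters, of the longest text in a split."""
    return max(len(example["text"]) for example in split)


max_lengths = {name: max_text_length(split) for name, split in toy_dataset.items()}
print(max_lengths)
```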

We are interested in the tokenized dataset, not the raw sequences, so we create a tokenizer

from transformers import AutoTokenizer

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

We create a tokenization function

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
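To get a feel for what `padding="max_length"` and `truncation=True` do to each sequence, here is a rough sketch in plain Python. It is not the tokenizer's implementation: `pad_or_truncate` is a hypothetical helper, the token ids are made up, and I am assuming a padding id of 1. The real tokenizer also keeps the closing special token when it truncates, which this sketch ignores.

```python
PAD_TOKEN_ID = 1  # assumed padding id, used here only for illustration


def pad_or_truncate(token_ids, max_length, pad_id=PAD_TOKEN_ID):
    """Cut token_ids to max_length, or right-pad them, and build the mask."""
    ids = token_ids[:max_length]
    attention_mask = [1] * len(ids)  # 1 marks a real token
    padding = max_length - len(ids)
    return ids + [pad_id] * padding, attention_mask + [0] * padding


# A sequence shorter than max_length gets padded...
short_ids, short_mask = pad_or_truncate([0, 713, 16, 2], max_length=6)
# ...and a longer one gets cut.
long_ids, long_mask = pad_or_truncate([0, 10, 11, 12, 13, 14, 15, 2], max_length=6)

print(short_ids, short_mask)
print(long_ids, long_mask)
```

Every sequence therefore ends up with exactly `max_length` positions, which is what allows them to be stacked into a single tensor per batch.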

And now we tokenize the dataset

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}

Map: 0%| | 0/45000 [00:00<?, ? examples/s]

Map: 0%| | 0/5000 [00:00<?, ? examples/s]

Map: 0%| | 0/50000 [00:00<?, ? examples/s]

As we can see, now we have the tokens (input_ids) and the attention masks (attention_mask), but let's take a look at what kind of data we have.

type(tokenized_dataset["train"][0]["input_ids"]), type(tokenized_dataset["train"][0]["attention_mask"]), type(tokenized_dataset["train"][0]["label"])

(list, list, int)

They are Python lists and integers, so we convert them to PyTorch tensors

tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

type(tokenized_dataset["train"][0]["label"]), type(tokenized_dataset["train"][0]["input_ids"]), type(tokenized_dataset["train"][0]["attention_mask"])

(torch.Tensor, torch.Tensor, torch.Tensor)

We create a DataLoader

import torch
from torch.utils.data import DataLoader

BS = 64

dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
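With this batch size we can work out how many batches each split produces. This is just arithmetic on the split sizes shown earlier, assuming the DataLoader keeps the final, smaller batch, which is its default behaviour.

```python
import math

BS = 64
split_sizes = {"train": 45000, "validation": 5000, "test": 50000}

# The last batch of each split may be smaller than BS, hence the ceiling.
batches_per_split = {name: math.ceil(n / BS) for name, n in split_sizes.items()}

# Size of that last batch (BS itself when the split divides evenly).
last_batch_size = {name: n % BS or BS for name, n in split_sizes.items()}

print(batches_per_split)
print(last_batch_size)
```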

We load the model

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)

Let's see what the model looks like.

model

RobertaForSequenceClassification(
  (roberta): RobertaModel(
    (embeddings): RobertaEmbeddings(
      (word_embeddings): Embedding(50265, 768, padding_idx=1)
      (position_embeddings): Embedding(514, 768, padding_idx=1)
      (token_type_embeddings): Embedding(1, 768)
      (LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
      (dropout): Dropout(p=0.1, inplace=False)
    )
    (encoder): RobertaEncoder(
      (layer): ModuleList(
        (0-11): 12 x RobertaLayer(
          (attention): RobertaAttention(
            (self): RobertaSelfAttention(
              (query): Linear(in_features=768, out_features=768, bias=True)
              (key): Linear(in_features=768, out_features=768, bias=True)
              (value): Linear(in_features=768, out_features=768, bias=True)
              (dropout): Dropout(p=0.1, inplace=False)
            )
            (output): RobertaSelfOutput(
              (dense): Linear(in_features=768, out_features=768, bias=True)
              (LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
              (dropout): Dropout(p=0.1, inplace=False)
            )
          )
          (intermediate): RobertaIntermediate(
            (dense): Linear(in_features=768, out_features=3072, bias=True)
            (intermediate_act_fn): GELUActivation()
          )
          (output): RobertaOutput(
            (dense): Linear(in_features=3072, out_features=768, bias=True)
            (LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
            (dropout): Dropout(p=0.1, inplace=False)
          )
        )
      )
    )
  )
  (classifier): RobertaClassificationHead(
    (dense): Linear(in_features=768, out_features=768, bias=True)
    (dropout): Dropout(p=0.1, inplace=False)
    (out_proj): Linear(in_features=768, out_features=2, bias=True)
  )
)

Let's take a look at its last layer

model.classifier.out_proj

Linear(in_features=768, out_features=2, bias=True)

model.classifier.out_proj.in_features, model.classifier.out_proj.out_features

(768, 2)

We have seen that our dataset has 20 classes, but this model is trained for 2 classes, so we need to modify the last layer.

model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
model.classifier.out_proj

Linear(in_features=768, out_features=20, bias=True)

Now the output layer matches the number of classes
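To put the change in perspective, we can count the parameters of the old and new output layers. This is plain arithmetic on the layer shapes shown above; `linear_param_count` is a hypothetical helper, not a PyTorch function. Bear in mind that the new layer is freshly initialized, so it has to be learned from scratch during training.

```python
def linear_param_count(in_features, out_features, bias=True):
    """Number of trainable parameters in a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)


old_head = linear_param_count(768, 2)   # original 2-class output layer
new_head = linear_param_count(768, 20)  # replacement 20-class output layer

print(old_head, new_head)
```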

Now we create a loss function

loss_function = torch.nn.CrossEntropyLoss()

An optimizer

from torch.optim import Adam

optimizer = Adam(model.parameters(), lr=5e-4)

And lastly, a metric

import evaluate

metric = evaluate.load("accuracy")

Let's check that everything is fine with a sample.

sample = next(iter(dataloader["train"]))

sample["input_ids"].shape, sample["attention_mask"].shape

(torch.Size([64, 130]), torch.Size([64, 130]))

Now we feed that sample to the model

model.to("cuda")
outputs = model(input_ids=sample["input_ids"].to("cuda"), attention_mask=sample["attention_mask"].to("cuda"))
outputs.logits.shape

torch.Size([64, 20])

We see that the model returns 64 outputs, one per sample in the batch, which is correct because we set BS = 64. Each output has 20 values, which is also correct because we modified the model to have an output of 20 values.

We obtain the one with the highest value

predictions = torch.argmax(outputs.logits, axis=-1)
predictions.shape

torch.Size([64])

We obtain the loss

loss = loss_function(outputs.logits, sample["label"].to("cuda"))
loss.item()

2.9990389347076416
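This value is about what we would expect from a classifier that has not learned anything yet. If the model spread its probability evenly over the 20 classes, the cross-entropy would be ln(20), as this small check shows; the uniform-prediction assumption is mine, for illustration.

```python
import math

num_classes = 20

# Cross-entropy of a uniform prediction: -log(1 / num_classes) = log(num_classes)
uniform_loss = math.log(num_classes)

observed_loss = 2.9990389347076416  # the loss value printed above

print(round(uniform_loss, 4))
print(round(observed_loss - uniform_loss, 4))
```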

And the accuracy

accuracy = metric.compute(predictions=predictions, references=sample["label"])["accuracy"]
accuracy

0.015625
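An accuracy of 0.015625 is exactly 1/64, that is, a single correct prediction in the batch of 64. The sketch below reproduces the number in plain Python; `accuracy_score` is a hypothetical helper that mirrors what an accuracy metric computes, and the labels are made up.

```python
def accuracy_score(predictions, references):
    """Fraction of predictions that are equal to their reference label."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


# Hypothetical batch of 64 labels in which only the first prediction is right.
references = [i % 20 for i in range(64)]
predictions = [references[0]] + [(r + 1) % 20 for r in references[1:]]

toy_accuracy = accuracy_score(predictions, references)
print(toy_accuracy)
```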

We can now create a small training loop

from fastprogress.fastprogress import master_bar, progress_bar

epochs = 1
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)

master_progress_bar = master_bar(range(epochs))
for i in master_progress_bar:
    model.train()
    progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    for batch in progress_bar_train:
        optimizer.zero_grad()

        input_ids = batch["input_ids"].to(device)
        attention_mask = batch["attention_mask"].to(device)
        labels = batch["label"].to(device)

        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        master_progress_bar.child.comment = f'loss: {loss}'

        loss.backward()
        optimizer.step()

    model.eval()
    progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"].to(device)
        attention_mask = batch["attention_mask"].to(device)
        labels = batch["label"].to(device)

        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)

        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

    master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "

Script with the base code

Most of the accelerate documentation explains how to use it with scripts, so for now we will do it this way, and at the end we will explain how to do it with a notebook.

First, we are going to create a folder where we will save the scripts

!mkdir accelerate_scripts

Now we write the base code in a script

%%writefile accelerate_scripts/01_code_base.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
from fastprogress.fastprogress import master_bar, progress_bar

dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

BS = 64
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)

loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")

EPOCHS = 1
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)

master_progress_bar = master_bar(range(EPOCHS))
for i in master_progress_bar:
    model.train()
    progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    for batch in progress_bar_train:
        optimizer.zero_grad()

        input_ids = batch["input_ids"].to(device)
        attention_mask = batch["attention_mask"].to(device)
        labels = batch["label"].to(device)

        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        master_progress_bar.child.comment = f'loss: {loss}'

        loss.backward()
        optimizer.step()

    model.eval()
    progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"].to(device)
        attention_mask = batch["attention_mask"].to(device)
        labels = batch["label"].to(device)

        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)

        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

    master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "

print(f"Accuracy = {accuracy['accuracy']}")

Overwriting accelerate_scripts/01_code_base.py

And now we run it

%%time
!python accelerate_scripts/01_code_base.py

Accuracy = 0.2112
CPU times: user 2.12 s, sys: 391 ms, total: 2.51 s
Wall time: 3min 36s

We see that on my computer it took about 3 and a half minutes

Code with accelerate

Now we replace some things

- First, we import `Accelerator` and initialize it

from accelerate import Accelerator

accelerator = Accelerator()

- We no longer create the device in the typical way

``` python

torch.device("cuda" if torch.cuda.is_available() else "cpu")

```

- Instead, we let `accelerate` choose the device.

device = accelerator.device

- We pass the relevant elements for training through the `prepare` method and no longer do `model.to(device)`

model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])

- We no longer send the data and model to the GPU with `.to(device)` since `accelerate` has taken care of it with the `prepare` method.

- Instead of performing backpropagation with `loss.backward()`, we let `accelerate` handle it with

accelerator.backward(loss)

- When calculating the metric in the validation loop, we need to gather the values from all processes, especially if we are doing distributed training. For this we do

predictions = accelerator.gather_for_metrics(predictions)

%%writefile accelerate_scripts/02_accelerate_base_code.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
from fastprogress.fastprogress import master_bar, progress_bar

# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()

dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

BS = 64
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)

loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")

EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device

# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])

master_progress_bar = master_bar(range(EPOCHS))
for i in master_progress_bar:
    model.train()
    progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    for batch in progress_bar_train:
        optimizer.zero_grad()

        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)

        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        master_progress_bar.child.comment = f'loss: {loss}'

        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()

    print(f"End of training epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}")

    model.eval()
    progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)

        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)

        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)

        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

    print(f"End of validation epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}")
    master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "

print(f"Accuracy = {accuracy['accuracy']}")

Overwriting accelerate_scripts/02_accelerate_base_code.py

If you look closely, I've added two lines: `print(f"End of training epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}")` and `print(f"End of validation epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}")`. I added them on purpose because they will reveal something very important.

Now let's run it. To execute accelerate scripts, use the command `accelerate launch`

accelerate launch script.py

%%time
!accelerate launch accelerate_scripts/02_accelerate_base_code.py

End of training epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([64])
End of training epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([64])
End of validation epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([8])
Accuracy = 0.206
End of validation epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([8])
Accuracy = 0.206
CPU times: user 1.6 s, sys: 272 ms, total: 1.88 s
Wall time: 2min 37s

We see that before it took about 3 and a half minutes, and now it takes around 2 and a half minutes. Quite an improvement. Additionally, if we look at the prints, we can see that they have been printed twice.

And how can this be? Well, because accelerate has parallelized the training across the two GPUs I have, so it was much faster.
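The prints also show that in the last validation step the logits still have 64 rows while the gathered labels have only 8. The arithmetic below is one way to account for that. It assumes that each process keeps receiving batches of `BS` samples, so every step consumes `NUM_PROCESSES * BS` samples, and that `gather_for_metrics` drops the samples that were repeated to fill the final batch.

```python
NUM_PROCESSES = 2          # one process per GPU
BS = 64                    # batch size seen by each process
VALIDATION_SAMPLES = 5000  # size of the validation split

# Samples consumed per step across all processes.
global_batch = NUM_PROCESSES * BS

# Full steps, and the samples left over for the final step.
full_steps, remainder = divmod(VALIDATION_SAMPLES, global_batch)
steps_per_process = full_steps + (1 if remainder else 0)

print(global_batch, full_steps, remainder, steps_per_process)
```

The remainder of 8 matches the `labels.shape` printed at the end of validation.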

Moreover, when I ran the first script, that is, when I didn't use accelerate, the GPU was almost full, while when I ran the second one, that is, the one that uses accelerate, both GPUs were barely utilized, so we can increase the batch size to try to fill both. Let's do it!

%%writefile accelerate_scripts/03_accelerate_base_code_more_bs.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
from fastprogress.fastprogress import master_bar, progress_bar

# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()

dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)

loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")

EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device

# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])

master_progress_bar = master_bar(range(EPOCHS))
for i in master_progress_bar:
    model.train()
    progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    for batch in progress_bar_train:
        optimizer.zero_grad()

        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)

        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        master_progress_bar.child.comment = f'loss: {loss}'

        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()

    model.eval()
    progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)

        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)

        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)

        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

    master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "

print(f"Accuracy = {accuracy['accuracy']}")

Overwriting accelerate_scripts/03_accelerate_base_code_more_bs.py

I have removed the extra prints, as we have already seen that the code is running on both GPUs, and I increased the batch size from 64 to 128. Let's run it and see.

%%time
!accelerate launch accelerate_scripts/03_accelerate_base_code_more_bs.py

Accuracy = 0.1052
Accuracy = 0.1052
CPU times: user 1.41 s, sys: 180 ms, total: 1.59 s
Wall time: 2min 22s

Increasing the batch size has reduced the execution time by a few seconds.
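To see why a larger batch size can shorten the run, it helps to count the steps per epoch. The following is a minimal sketch, assuming that batch_size is applied per process (Accelerate's default behaviour when batches are not split across devices), so the effective batch is the batch size times the number of processes. The helper name steps_per_epoch is hypothetical; the 45,000 training and 5,000 validation examples are the sizes reported in the Map logs of the runs in this post.

```python
import math


def steps_per_epoch(num_examples, per_process_batch_size, num_processes):
    """Number of steps each process performs in one epoch.

    Assumes every process consumes per_process_batch_size examples per step,
    so one step covers per_process_batch_size * num_processes examples.
    """
    effective_batch_size = per_process_batch_size * num_processes
    return math.ceil(num_examples / effective_batch_size)


# Training split (45,000 examples) on 2 GPUs
print(steps_per_epoch(45_000, 64, 2))   # 352 steps with BS = 64
print(steps_per_epoch(45_000, 128, 2))  # 176 steps with BS = 128

# Validation split (5,000 examples) on 2 GPUs
print(steps_per_epoch(5_000, 128, 2))   # 20 steps with BS = 128
```

The values for BS = 128 match the 176 and 20 iterations shown by the tqdm progress bars in the later runs. Halving the number of steps does not halve the run time, because each step processes twice as much data.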

Execution of processes

Execution of code in a single process

We saw earlier that the prints appeared twice. This is because accelerate creates as many processes as there are devices on which the code runs; in my case it creates two processes because I have two GPUs.

However, not all code should run in every process. For example, prints slow the code down, especially if they are executed multiple times, and if checkpoints are saved, they would be saved twice.

To run part of the code in a single process, it has to be wrapped in a function decorated with accelerator.on_local_main_process, which runs the function only in the main process of each machine. For example, in the following code you will see that I have created the following function

@accelerator.on_local_main_process
def print_something(something):
    print(something)

Another option is to put the code inside an if accelerator.is_local_main_process block, as in the following code

if accelerator.is_local_main_process:
    print("Something")

%%writefile accelerate_scripts/04_accelerate_base_code_some_code_in_one_process.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
from fastprogress.fastprogress import master_bar, progress_bar
# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()
dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130
checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")
EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])
# Runs only in the local main process
@accelerator.on_local_main_process
def print_something(something):
    print(something)
master_progress_bar = master_bar(range(EPOCHS))
for i in master_progress_bar:
    model.train()
    progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        master_progress_bar.child.comment = f'loss: {loss}'
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()
    model.eval()
    progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()
    master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "
# print(f"Accuracy = {accuracy['accuracy']}")
print_something(f"Accuracy = {accuracy['accuracy']}")
if accelerator.is_local_main_process:
    print(f"End of script with {accuracy['accuracy']} accuracy")

Overwriting accelerate_scripts/04_accelerate_base_code_some_code_in_one_process.py

Let's run it and see

%%time
!accelerate launch accelerate_scripts/04_accelerate_base_code_some_code_in_one_process.py

Accuracy = 0.2098
End of script with 0.2098 accuracy
CPU times: user 1.38 s, sys: 197 ms, total: 1.58 s
Wall time: 2min 22s

Now the print has only been executed once
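The gating behind a decorator such as accelerator.on_local_main_process can be pictured with a small pure-Python sketch. This is not Accelerate's implementation: on_rank and simulate are hypothetical names, and a plain loop stands in for the separate processes that accelerate launch would create. It only illustrates the idea that every process calls the function, but the body runs in just one of them.

```python
import functools


def on_rank(current_rank, target_rank=0):
    """Hypothetical decorator factory: the wrapped function runs only when
    current_rank equals target_rank; in any other rank the call does nothing."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if current_rank == target_rank:
                return func(*args, **kwargs)
            return None
        return wrapper
    return decorator


def simulate(num_processes):
    """Run the same 'script' once per simulated process and collect its output."""
    printed = []
    for rank in range(num_processes):
        @on_rank(rank)
        def print_something(something):
            printed.append(f"[rank {rank}] {something}")

        # Every simulated process makes the call, but only rank 0 executes it
        print_something("Accuracy = 0.2098")
    return printed


output = simulate(num_processes=2)
print(output)  # ['[rank 0] Accuracy = 0.2098']
```

The same reasoning applies to the if accelerator.is_local_main_process form: the condition is evaluated in every process and is true in only one of them per machine.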

However, although it is barely noticeable, the progress bars still run in every process.

I haven't found a way to avoid this with the progress bars from fastprogress, but I have with those from tqdm. So I'm going to replace the fastprogress progress bars with tqdm ones; to show them in a single process only, you need to add the argument disable=not accelerator.is_local_main_process

%%writefile accelerate_scripts/05_accelerate_base_code_some_code_in_one_process.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm
# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()
dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130
checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")
EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])
@accelerator.on_local_main_process
def print_something(something):
    print(something)
for i in range(EPOCHS):
    model.train()
    # progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    # Show the progress bar only in the local main process
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # master_progress_bar.child.comment = f'loss: {loss}'
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()
    model.eval()
    # progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()
# print(f"Accuracy = {accuracy['accuracy']}")
print_something(f"Accuracy = {accuracy['accuracy']}")
if accelerator.is_local_main_process:
    print(f"End of script with {accuracy['accuracy']} accuracy")

Overwriting accelerate_scripts/05_accelerate_base_code_some_code_in_one_process.py

%%time
!accelerate launch accelerate_scripts/05_accelerate_base_code_some_code_in_one_process.py

100%|█████████████████████████████████████████| 176/176 [02:01<00:00, 1.45it/s]
100%|███████████████████████████████████████████| 20/20 [00:06<00:00, 3.30it/s]
Accuracy = 0.2166
End of script with 0.2166 accuracy
CPU times: user 1.33 s, sys: 195 ms, total: 1.52 s
Wall time: 2min 22s

We have shown an example of how to print in a single process, which is one way to run code in a single process. But if all you want is to print in a single process, the print method from accelerate can be used. Let's see the same example as before with this method.

%%writefile accelerate_scripts/06_accelerate_base_code_print_one_process.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm
# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()
dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130
checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")
EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])
for i in range(EPOCHS):
    model.train()
    # progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # master_progress_bar.child.comment = f'loss: {loss}'
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()
    model.eval()
    # progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()
# print(f"Accuracy = {accuracy['accuracy']}")
accelerator.print(f"Accuracy = {accuracy['accuracy']}")
if accelerator.is_local_main_process:
    print(f"End of script with {accuracy['accuracy']} accuracy")

Writing accelerate_scripts/06_accelerate_base_code_print_one_process.py

We run it

%%time
!accelerate launch accelerate_scripts/06_accelerate_base_code_print_one_process.py

Map: 100%|██████████████████████| 45000/45000 [00:02<00:00, 15433.52 examples/s]
Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 11406.61 examples/s]
Map: 100%|██████████████████████| 45000/45000 [00:02<00:00, 15036.87 examples/s]
Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14932.76 examples/s]
Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14956.60 examples/s]
100%|█████████████████████████████████████████| 176/176 [02:00<00:00, 1.46it/s]
100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.33it/s]
Accuracy = 0.2134
End of script with 0.2134 accuracy
CPU times: user 1.4 s, sys: 189 ms, total: 1.59 s
Wall time: 2min 27s

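Code Execution Once Across All Machines

However, some code must run only once across all machines rather than once per machine, for example, uploading the checkpoints to the hub. accelerator.on_local_main_process runs the code in the main process of each machine, whereas accelerator.on_main_process runs it only in the global main process; on a single machine, like mine, both behave the same. So here we have two options: wrap the code in a function and decorate it with accelerator.on_main_process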

@accelerator.on_main_process
def do_my_thing():
    "Something done once across all servers"
    do_thing_once()

or put the code inside an if accelerator.is_main_process

if accelerator.is_main_process:
    repo.push_to_hub()

Since we are only training to showcase the accelerate library and the model we are training is not good, it doesn't make sense to upload the checkpoints to the hub right now, so I will do an example with prints.

%%writefile accelerate_scripts/07_accelerate_base_code_some_code_in_all_process.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm
# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()
dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130
checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")
EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])
# Runs in the main process of each machine
@accelerator.on_local_main_process
def print_in_one_process(something):
    print(something)
# Despite its name, this runs only in the global main process (once across all machines)
@accelerator.on_main_process
def print_in_all_processes(something):
    print(something)
for i in range(EPOCHS):
    model.train()
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()
    model.eval()
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()
print_in_one_process(f"Accuracy = {accuracy['accuracy']}")
if accelerator.is_local_main_process:
    print(f"End of script with {accuracy['accuracy']} accuracy")
print_in_all_processes(f"All process: Accuracy = {accuracy['accuracy']}")
if accelerator.is_main_process:
    print(f"All process: End of script with {accuracy['accuracy']} accuracy")

Overwriting accelerate_scripts/06_accelerate_base_code_some_code_in_all_process.py

Let's run it to see

%%time
!accelerate launch accelerate_scripts/07_accelerate_base_code_some_code_in_all_process.py

Map: 100%|██████████████████████| 45000/45000 [00:03<00:00, 14518.44 examples/s]
Map: 100%|██████████████████████| 45000/45000 [00:03<00:00, 14368.77 examples/s]
Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 16466.33 examples/s]
Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 14806.14 examples/s]
Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14253.33 examples/s]
Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14337.07 examples/s]
100%|█████████████████████████████████████████| 176/176 [02:00<00:00, 1.46it/s]
100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.34it/s]
Accuracy = 0.2092
End of script with 0.2092 accuracy
All process: Accuracy = 0.2092
All process: End of script with 0.2092 accuracy
CPU times: user 1.42 s, sys: 216 ms, total: 1.64 s
Wall time: 2min 27s
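On a single machine the two kinds of prints look the same, so the difference between the local main process and the main process only shows up with several machines. The following is a minimal sketch that tabulates both flags for a hypothetical setup of 2 machines with 2 GPUs each. It does not use accelerate: process_flags is a hypothetical helper, and it assumes the usual layout in which the global index is machine * gpus_per_machine + local_index.

```python
def process_flags(num_machines, gpus_per_machine):
    """List, for every simulated process, whether it would be the local main
    process (local index 0 on its machine) and the main process (global index 0)."""
    rows = []
    for machine in range(num_machines):
        for local_index in range(gpus_per_machine):
            # Assumed layout: processes are numbered machine by machine
            global_index = machine * gpus_per_machine + local_index
            rows.append({
                "machine": machine,
                "local_index": local_index,
                "global_index": global_index,
                "is_local_main_process": local_index == 0,
                "is_main_process": global_index == 0,
            })
    return rows


for row in process_flags(num_machines=2, gpus_per_machine=2):
    print(row)
```

In this layout two processes are local main processes (one per machine), but only one is the main process. With a single machine, as in this post, both flags are true for the same process, which is why each message above is printed once.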

Code Execution in Process X

Finally, we can specify in which process we want to execute code. To do this, we create a function and decorate it with @accelerator.on_process(process_index=0)

@accelerator.on_process(process_index=0)
def do_my_thing():
    "Something done on process index 0"
    do_thing_on_index_zero()

or decorate it with @accelerator.on_local_process(local_process_index=0)

@accelerator.on_local_process(local_process_index=0)
def do_my_thing():
    "Something done on process index 0 on each server"
    do_thing_on_index_zero_on_each_server()

Here I have used process 0, but any process index can be used

%%writefile accelerate_scripts/08_accelerate_base_code_some_code_in_some_process.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm
# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()
dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130
checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")
EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])
@accelerator.on_local_main_process
def print_in_one_process(something):
    print(something)
@accelerator.on_main_process
def print_in_all_processes(something):
    print(something)
# Runs only in the process with global index 0
@accelerator.on_process(process_index=0)
def print_in_process_0(something):
    print("Process 0: " + something)
# Runs only in the process with local index 1 on each machine
@accelerator.on_local_process(local_process_index=1)
def print_in_process_1(something):
    print("Process 1: " + something)
for i in range(EPOCHS):
    model.train()
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()
    model.eval()
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()
print_in_one_process(f"Accuracy = {accuracy['accuracy']}")
if accelerator.is_local_main_process:
    print(f"End of script with {accuracy['accuracy']} accuracy")
print_in_all_processes(f"All process: Accuracy = {accuracy['accuracy']}")
if accelerator.is_main_process:
    print(f"All process: End of script with {accuracy['accuracy']} accuracy")
print_in_process_0("End of process 0")
print_in_process_1("End of process 1")

Overwriting accelerate_scripts/07_accelerate_base_code_some_code_in_some_process.py

We run it

%%time
!accelerate launch accelerate_scripts/08_accelerate_base_code_some_code_in_some_process.py

Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 15735.58 examples/s]
Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14906.20 examples/s]
100%|█████████████████████████████████████████| 176/176 [02:02<00:00, 1.44it/s]
100%|███████████████████████████████████████████| 20/20 [00:06<00:00, 3.27it/s]
Process 1: End of process 1
Accuracy = 0.2128
End of script with 0.2128 accuracy
All process: Accuracy = 0.2128
All process: End of script with 0.2128 accuracy
Process 0: End of process 0
CPU times: user 1.42 s, sys: 295 ms, total: 1.71 s
Wall time: 2min 37s

Synchronizing Processes

When code runs in several processes, it is often useful to wait until every process has finished it before moving on to another task. For this we use accelerator.wait_for_everyone()

To see it, we are going to introduce a delay in one of the print functions in a process.

I've also added a break in the training loop so that it doesn't train for too long, which isn't what we're interested in right now.

%%writefile accelerate_scripts/09_accelerate_base_code_sync_all_process.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm
import time
# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()
dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130
checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)
def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")
tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}
model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)
loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")
EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])
@accelerator.on_local_main_process
def print_in_one_process(something):
    print(something)
@accelerator.on_main_process
def print_in_all_processes(something):
    print(something)
@accelerator.on_process(process_index=0)
def print_in_process_0(something):
    # Artificial delay so that process 0 finishes later than process 1
    time.sleep(2)
    print("Process 0: " + something)
@accelerator.on_local_process(local_process_index=1)
def print_in_process_1(something):
    print("Process 1: " + something)
for i in range(EPOCHS):
    model.train()
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()
        # Stop after the first batch so that the example runs quickly
        break
    model.eval()
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]  # .to(device)
        attention_mask = batch["attention_mask"]  # .to(device)
        labels = batch["label"]  # .to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()
print_in_one_process(f"Accuracy = {accuracy['accuracy']}")
if accelerator.is_local_main_process:
    print(f"End of script with {accuracy['accuracy']} accuracy")
print_in_all_processes(f"All process: Accuracy = {accuracy['accuracy']}")
if accelerator.is_main_process:
    print(f"All process: End of script with {accuracy['accuracy']} accuracy")
print_in_one_process("Printing with delay in process 0")
print_in_process_0("End of process 0")
print_in_process_1("End of process 1")
# Block until every process has reached this point
accelerator.wait_for_everyone()
print_in_one_process("End of script")

Overwriting accelerate_scripts/08_accelerate_base_code_sync_all_process.py

We run it

!accelerate launch accelerate_scripts/09_accelerate_base_code_sync_all_process.py

Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 14218.23 examples/s]
Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 14666.25 examples/s]
0%| | 0/176 [00:00<?, ?it/s]
100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.58it/s]
Process 1: End of process 1
Accuracy = 0.212
End of script with 0.212 accuracy
All process: Accuracy = 0.212
All process: End of script with 0.212 accuracy
Printing with delay in process 0
Process 0: End of process 0
End of script

As can be seen, Process 1: End of process 1 is printed first, followed by the rest. All the remaining prints come from process 0, and print_in_process_0 waits 2 seconds before printing. Because wait_for_everyone() blocks each process until all of them have reached that point, the final End of script message is not printed until the 2-second delay is over.
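The synchronization idea can be illustrated without any GPU. The following is only an analogy built on the Python standard library, not how Accelerate is implemented: two threads play the role of the two processes, and a threading.Barrier stands in for accelerator.wait_for_everyone(). The names worker, run and NUM_PROCESSES are hypothetical, and the delay is shortened to 0.2 seconds.

```python
import threading
import time

NUM_PROCESSES = 2  # hypothetical: mirrors the 2-GPU setup used in this post
barrier = threading.Barrier(NUM_PROCESSES)  # stand-in for accelerator.wait_for_everyone()
events = []  # messages in the order they were emitted
events_lock = threading.Lock()


def log(message):
    # Record a message instead of printing it, so the order can be inspected
    with events_lock:
        events.append(message)


def worker(rank):
    if rank == 0:
        time.sleep(0.2)  # shortened version of the 2-second delay
        log("Process 0: End of process 0")
    if rank == 1:
        log("Process 1: End of process 1")
    barrier.wait()  # no worker continues until every worker has arrived
    if rank == 0:
        log("End of script")


def run():
    events.clear()
    threads = [threading.Thread(target=worker, args=(rank,)) for rank in range(NUM_PROCESSES)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return list(events)


if __name__ == "__main__":
    for line in run():
        print(line)
```

Worker 1 reaches the barrier almost immediately, but the final message only appears once worker 0 has finished its delay, which is the same ordering seen in the output above.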

Save and load the state dict

During training, it is often useful to save the state so that training can be resumed later.

To save the state and load it again, we use the save_state() and load_state() methods.

%%writefile accelerate_scripts/10_accelerate_save_and_load_checkpoints.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm

# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()

dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)

loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")

@accelerator.on_local_main_process
def print_something(something):
    print(something)

EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])

for i in range(EPOCHS):
    model.train()
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()

    model.eval()
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

    # Save the state (weights, optimizer, etc.)
    accelerator.save_state("accelerate_scripts/checkpoints")

print_something(f"Accuracy = {accuracy['accuracy']}")

# Load the state
accelerator.load_state("accelerate_scripts/checkpoints")

Overwriting accelerate_scripts/10_accelerate_save_and_load_checkpoints.py

We run it

!accelerate launch accelerate_scripts/10_accelerate_save_and_load_checkpoints.py

100%|█████████████████████████████████████████| 176/176 [01:58<00:00, 1.48it/s]
100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.40it/s]
Accuracy = 0.2142

Save the model

When the prepare method was used, the model was wrapped so that it can run on the required devices. Therefore, to save it we use the save_model method, which first unwraps the model and then saves it. Additionally, if we pass safe_serialization=True, the weights are saved in the safetensors format.

%%writefile accelerate_scripts/11_accelerate_save_model.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm

# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()

dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)

loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")

@accelerator.on_local_main_process
def print_something(something):
    print(something)

EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])

for i in range(EPOCHS):
    model.train()
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()

    model.eval()
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

# Save the model
accelerator.wait_for_everyone()
accelerator.save_model(model, "accelerate_scripts/model", safe_serialization=True)

print_something(f"Accuracy = {accuracy['accuracy']}")

Writing accelerate_scripts/11_accelerate_save_model.py

We run it

!accelerate launch accelerate_scripts/11_accelerate_save_model.py

100%|█████████████████████████████████████████| 176/176 [01:58<00:00, 1.48it/s]
100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.35it/s]
Accuracy = 0.214

Save the pretrained model

For models from the transformers library, we must save the model with the save_pretrained method so that it can later be loaded with the from_pretrained method. Before saving, the model has to be unwrapped with the unwrap_model method.

%%writefile accelerate_scripts/12_accelerate_save_pretrained.py
import torch
from torch.utils.data import DataLoader
from torch.optim import Adam
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import evaluate
import tqdm

# Import and initialize Accelerator
from accelerate import Accelerator
accelerator = Accelerator()

dataset = load_dataset("tweet_eval", "emoji")
num_classes = len(dataset["train"].info.features["label"].names)
max_len = 130

checkpoints = "cardiffnlp/twitter-roberta-base-irony"
tokenizer = AutoTokenizer.from_pretrained(checkpoints)

def tokenize_function(dataset):
    return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")

tokenized_dataset = {
    "train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),
    "test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),
}
tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])
tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])

BS = 128
dataloader = {
    "train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),
    "validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),
    "test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),
}

model = AutoModelForSequenceClassification.from_pretrained(checkpoints)
model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)

loss_function = torch.nn.CrossEntropyLoss()
optimizer = Adam(model.parameters(), lr=5e-4)
metric = evaluate.load("accuracy")

@accelerator.on_local_main_process
def print_something(something):
    print(something)

EPOCHS = 1
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device = accelerator.device
# model.to(device)
model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])

for i in range(EPOCHS):
    model.train()
    progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_train:
        optimizer.zero_grad()
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = loss_function(outputs['logits'], labels)
        # loss.backward()
        accelerator.backward(loss)
        optimizer.step()

    model.eval()
    progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)
    for batch in progress_bar_validation:
        input_ids = batch["input_ids"]#.to(device)
        attention_mask = batch["attention_mask"]#.to(device)
        labels = batch["label"]#.to(device)
        with torch.no_grad():
            outputs = model(input_ids=input_ids, attention_mask=attention_mask)
        predictions = torch.argmax(outputs['logits'], axis=-1)
        # Gather the predictions from all devices
        predictions = accelerator.gather_for_metrics(predictions)
        labels = accelerator.gather_for_metrics(labels)
        accuracy = metric.add_batch(predictions=predictions, references=labels)
    accuracy = metric.compute()

# Save the pretrained model
unwrapped_model = accelerator.unwrap_model(model)
unwrapped_model.save_pretrained(
    "accelerate_scripts/model_pretrained",
    is_main_process=accelerator.is_main_process,
    save_function=accelerator.save,
)

print_something(f"Accuracy = {accuracy['accuracy']}")

Writing accelerate_scripts/12_accelerate_save_pretrained.py

We run it

!accelerate launch accelerate_scripts/12_accelerate_save_pretrained.py