Declaring neural networks clearly

Disclaimer: This post has been translated to English using a machine translation model. Please let me know if you find any mistakes.

When a neural network is created in PyTorch as a list of layers

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        # Layers are stored in order and identified only by their position
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1)
        ])
```

Then iterating over it in the `forward` method is not very clear, because the loop does not say which layer is being applied at each step

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1)
        ])

    def forward(self, x):
        # Apply every layer in sequence
        for layer in self.layers:
            x = layer(x)
        return x
```
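One way to check the list-based version, and to see why its layers are hard to tell apart, is to run a dummy batch through it and list the parameter names PyTorch registers. This is a minimal sketch; the batch of 4 samples is an arbitrary choice for illustration.

```python
import torch
import torch.nn as nn


class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        # Layers are stored in order and identified only by their position
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1),
        ])

    def forward(self, x):
        # Apply every layer in sequence
        for layer in self.layers:
            x = layer(x)
        return x


net = Network()
x = torch.randn(4, 1)  # a batch of 4 samples with 1 feature each
y = net(x)
print(y.shape)  # torch.Size([4, 1])

# Parameter names only contain numeric indices (ReLU has no parameters)
param_names = [name for name, _ in net.named_parameters()]
print(param_names)
# ['layers.0.weight', 'layers.0.bias', 'layers.2.weight', 'layers.2.bias']
```

Names such as `layers.2.weight` are also the keys that appear in `state_dict()`, so in a larger model you have to count positions to know which layer they refer to.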

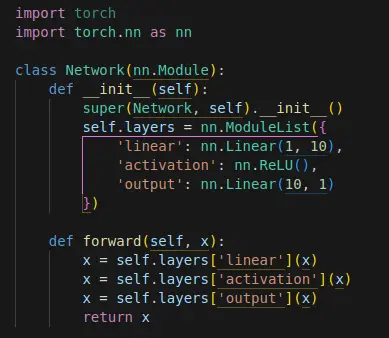

However, when the neural network is created as a dictionary of layers (using `nn.ModuleDict`)

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        # nn.ModuleDict registers each layer under a descriptive key
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1)
        })
```
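With `nn.ModuleDict`, each layer can be retrieved by its key, much like a regular Python dictionary. The sketch below uses the same three layers and simply lists the keys and fetches a layer by name.

```python
import torch.nn as nn


class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        # Each layer is registered under a descriptive key (insertion order is kept)
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1),
        })


net = Network()
keys = list(net.layers.keys())
print(keys)  # ['linear', 'activation', 'output']

# Layers are looked up by name instead of by position
first = net.layers['linear']
print(first.weight.shape)  # torch.Size([10, 1]), i.e. (out_features, in_features)
print('output' in net.layers)  # True
```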

Then the `forward` method is clearer, because each layer is called explicitly by its name

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1)
        })

    def forward(self, x):
        # Each line states which layer is being applied
        x = self.layers['linear'](x)
        x = self.layers['activation'](x)
        x = self.layers['output'](x)
        return x
```
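To check the dictionary version, one can run a dummy batch through it and list the parameter names PyTorch registers; they now carry the layer keys rather than positions. As before, this is a minimal sketch with an arbitrary batch of 4 samples.

```python
import torch
import torch.nn as nn


class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1),
        })

    def forward(self, x):
        # Each step names the layer it applies
        x = self.layers['linear'](x)
        x = self.layers['activation'](x)
        x = self.layers['output'](x)
        return x


net = Network()
y = net(torch.randn(4, 1))  # a batch of 4 samples with 1 feature each
print(y.shape)  # torch.Size([4, 1])

# Parameter names now include the layer keys instead of indices
param_names = [name for name, _ in net.named_parameters()]
print(param_names)
# ['layers.linear.weight', 'layers.linear.bias', 'layers.output.weight', 'layers.output.bias']
```

The same descriptive names show up in `state_dict()`, which makes saved checkpoints easier to inspect.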