Declare neural networks clearly

When you create a neural network in PyTorch as a list of layers,

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        # Layers stored as a list: they are only identified by position
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1)
        ])
```

then iterating over it in the `forward` method does not make clear what each layer does:

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1)
        ])

    def forward(self, x):
        # Apply every layer in order; the loop hides each layer's role
        for layer in self.layers:
            x = layer(x)
        return x
```
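One way to see why the list version is opaque is to look at how PyTorch names its layers. The minimal sketch below builds the same three layers directly in an `nn.ModuleList` (outside the class, to keep it short) and prints the registered names, which are just positions. The random input batch is a hypothetical example.

```python
import torch
import torch.nn as nn

# The same three layers as in the list-based Network above
layers = nn.ModuleList([nn.Linear(1, 10), nn.ReLU(), nn.Linear(10, 1)])

# PyTorch registers list entries under their positions, not their roles
names = [name for name, _ in layers.named_children()]
print(names)  # ['0', '1', '2']

# Parameter names carry those indices too (ReLU has no parameters)
param_names = [name for name, _ in layers.named_parameters()]
print(param_names)  # ['0.weight', '0.bias', '2.weight', '2.bias']

# A forward pass is just the loop from forward()
x = torch.randn(4, 1)  # hypothetical batch: 4 samples, 1 feature each
for layer in layers:
    x = layer(x)
print(x.shape)  # torch.Size([4, 1])
```

These index-based names also show up in `state_dict()` keys (for example `layers.0.weight`), so checkpoints and error messages say nothing about what each layer is for.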

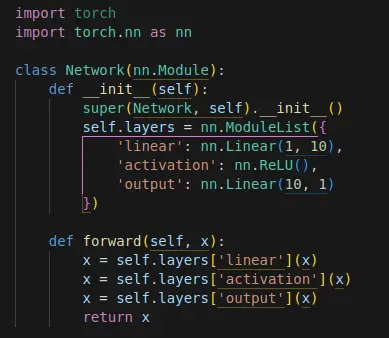

However, when you create the neural network as a dictionary of layers,

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        # A dict of layers must be wrapped in nn.ModuleDict (not nn.ModuleList)
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1)
        })
```

then the `forward` method is clearer, because each layer is called by name:

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1)
        })

    def forward(self, x):
        # Each step names the layer it uses
        x = self.layers['linear'](x)
        x = self.layers['activation'](x)
        x = self.layers['output'](x)
        return x
```
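For comparison, the sketch below builds the same layers in an `nn.ModuleDict` and prints the registered names, which are now the dictionary keys. It assumes a recent PyTorch version, where a plain `dict` passed to `nn.ModuleDict` keeps its insertion order (some older releases sorted the keys instead). The input batch is hypothetical, and the last part illustrates why the dictionary has to go into `nn.ModuleDict` rather than `nn.ModuleList`.

```python
import torch
import torch.nn as nn

# The same three layers as in the dictionary-based Network above, keyed by role
layers = nn.ModuleDict({
    "linear": nn.Linear(1, 10),
    "activation": nn.ReLU(),
    "output": nn.Linear(10, 1),
})

# The keys become the registered submodule names
names = list(layers.keys())
print(names)  # ['linear', 'activation', 'output']

param_names = [name for name, _ in layers.named_parameters()]
print(param_names)  # ['linear.weight', 'linear.bias', 'output.weight', 'output.bias']

# The forward pass reads like a description of the architecture
x = torch.randn(4, 1)  # hypothetical batch: 4 samples, 1 feature each
x = layers["linear"](x)
x = layers["activation"](x)
x = layers["output"](x)
print(x.shape)  # torch.Size([4, 1])

# A plain dict must go into nn.ModuleDict: nn.ModuleList iterates over the
# dict's keys, which are strings, and raises TypeError because they are not modules
try:
    nn.ModuleList({"linear": nn.Linear(1, 10)})
    error_raised = False
except TypeError as err:
    error_raised = True
    print("TypeError:", err)
```

The names also carry over to `state_dict()` keys (for example `layers.linear.weight`), which makes saved checkpoints easier to inspect.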