LLM quantization

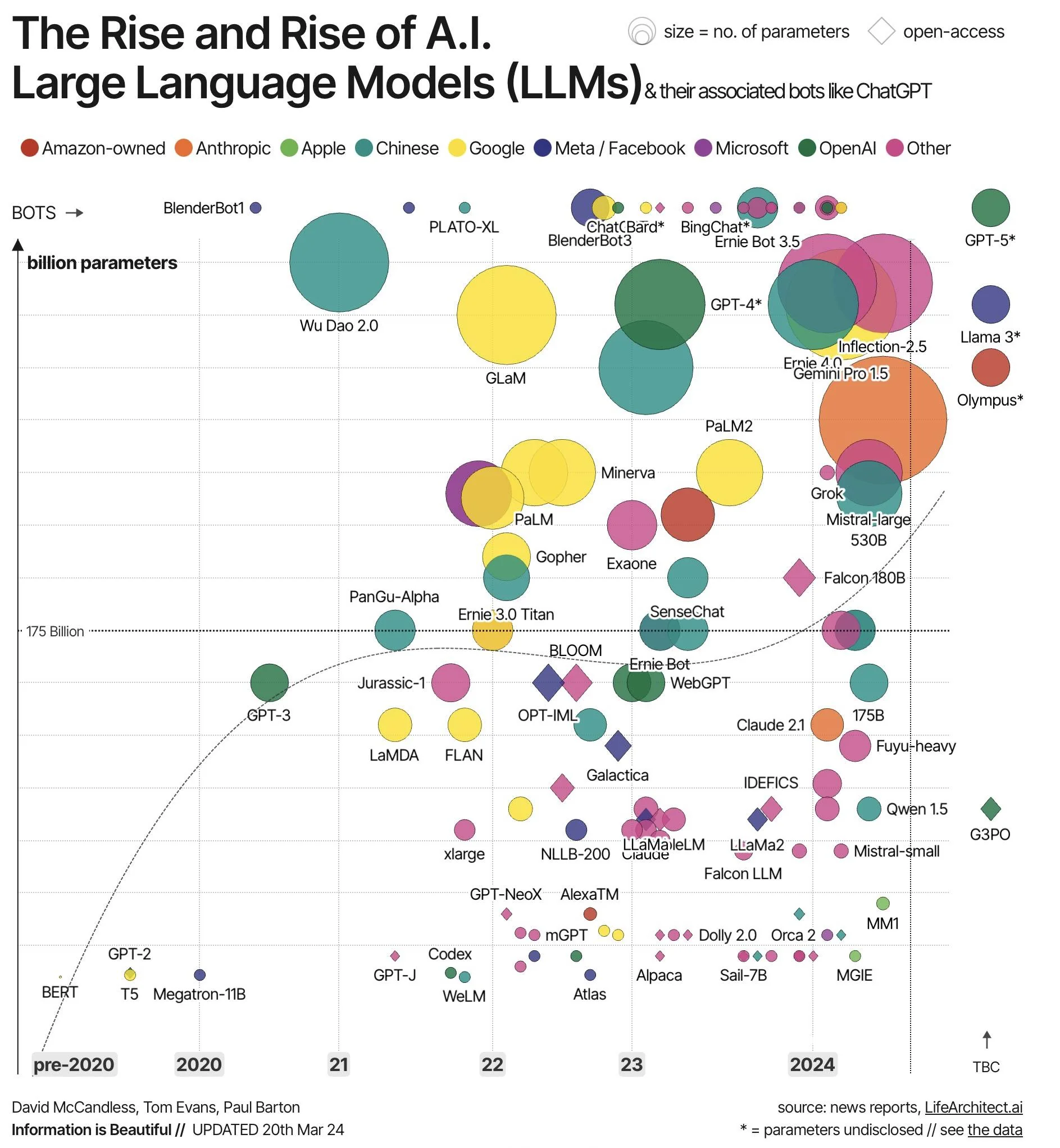

Language models are getting larger and larger, which makes them increasingly expensive and time-consuming to run.

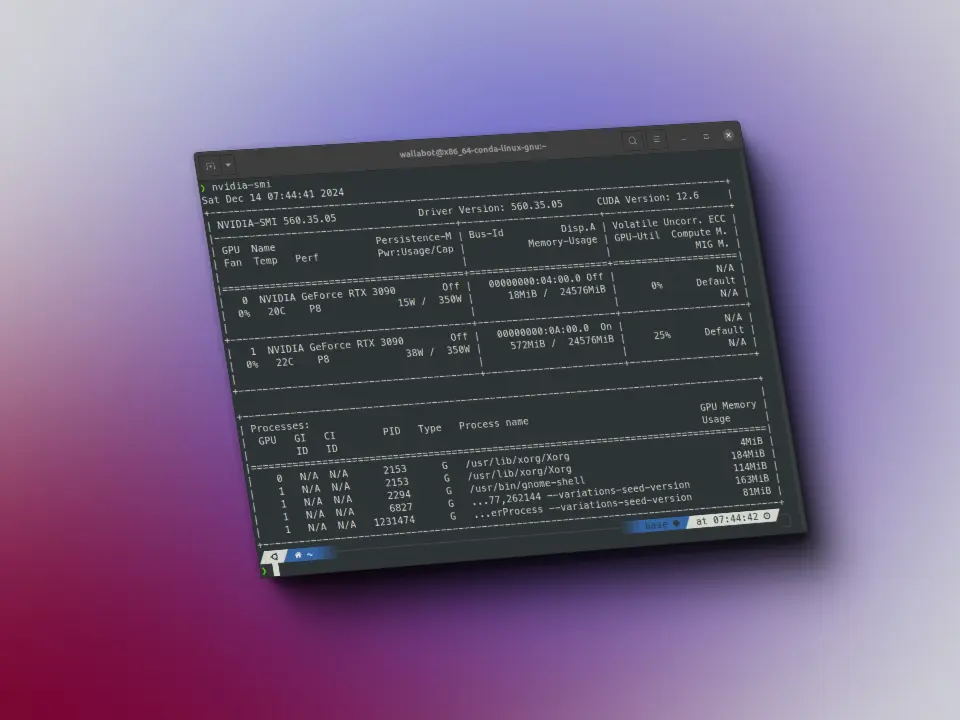

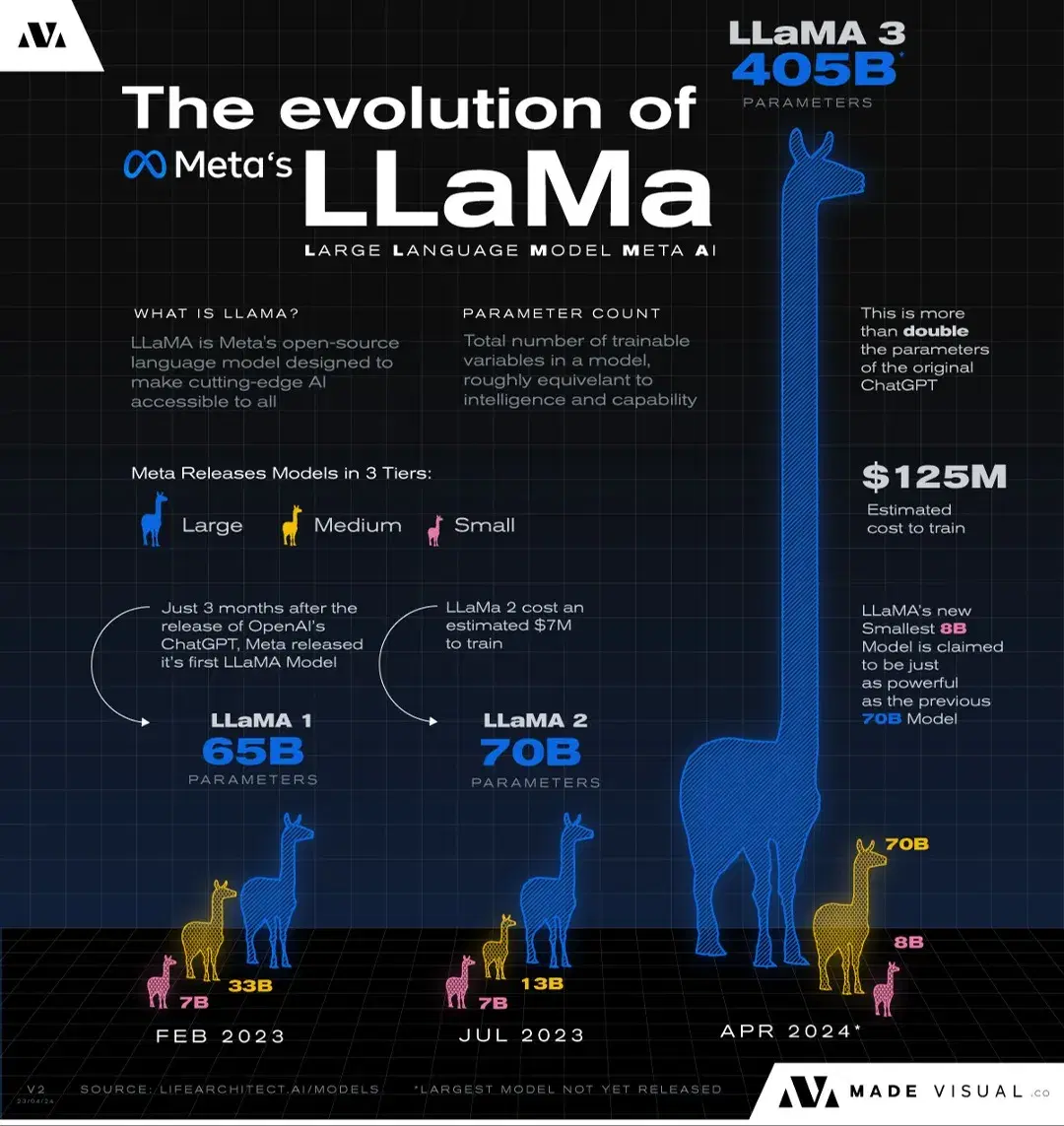

For example, take Llama 3 400B. If its parameters are stored in FP32 format, each parameter occupies 4 bytes, so just storing the model takes 400 × 10^9 × 4 bytes = 1.6 TB of VRAM. That means 20 GPUs with 80 GB of VRAM each, which are not cheap.

But if we leave aside giant models and look at more common sizes, for example 70B parameters, just storing the model takes 70 × 10^9 × 4 bytes = 280 GB of VRAM, which means 4 GPUs with 80 GB of VRAM each.

This is because we store the weights in FP32 format, that is, each parameter occupies 4 bytes. But what happens if we make each parameter occupy fewer bytes? This is called quantization.

For example, if we take a 70B-parameter model and make each parameter occupy half a byte (4 bits), we would only need 70 × 10^9 × 0.5 bytes = 35 GB of VRAM, which fits in 2 GPUs with 24 GB of VRAM each. Those can already be considered consumer GPUs.
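The arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the function names `model_memory_gb` and `gpus_needed` are made up for illustration, 1 GB is taken as 10^9 bytes, and only the weights are counted (activations and other runtime memory are ignored).

```python
import math

def model_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 10^9 bytes)."""
    return n_params * bytes_per_param / 1e9

def gpus_needed(memory_gb: float, gpu_vram_gb: float) -> int:
    """Smallest number of GPUs whose combined VRAM can hold the weights."""
    return math.ceil(memory_gb / gpu_vram_gb)

# The three cases discussed in the text
fp32_400b = model_memory_gb(400e9, 4)    # 400B parameters, 4 bytes each
fp32_70b = model_memory_gb(70e9, 4)      # 70B parameters, 4 bytes each
half_byte_70b = model_memory_gb(70e9, 0.5)  # 70B parameters, half a byte each

print(fp32_400b, "GB ->", gpus_needed(fp32_400b, 80), "GPUs of 80 GB")
print(fp32_70b, "GB ->", gpus_needed(fp32_70b, 80), "GPUs of 80 GB")
print(half_byte_70b, "GB ->", gpus_needed(half_byte_70b, 24), "GPUs of 24 GB")
```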


We therefore need ways to reduce the size of these models. There are three ways to do that: distillation, pruning and quantization.

Distillation consists of training a smaller model on the outputs of the larger one. The same input is given to both models, the output of the large model is treated as the correct one, and the small model is trained to reproduce it. But this requires having the large model loaded in memory, which is exactly what we do not want or cannot do.
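As a rough illustration of "the output of the large model is the target", the sketch below compares the output distributions of a teacher and a student with a KL divergence. Using KL divergence is an assumption here (it is a common choice, but the text does not name a loss), and the logits are invented numbers.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits):
    """KL(teacher || student): zero when the student matches the teacher."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical logits over three tokens
teacher = [2.0, 0.5, -1.0]
matching_student = [2.0, 0.5, -1.0]
poor_student = [-1.0, 0.5, 2.0]

loss_matching = distillation_loss(matching_student, teacher)
loss_poor = distillation_loss(poor_student, teacher)
print(loss_matching, loss_poor)
```

Training the small model would mean adjusting its weights to push this loss down, which is why the large model has to be available to produce the targets.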

Pruning consists of removing parameters from the model to make it smaller. This method is based on the idea that current language models are oversized and that only a fraction of their parameters really carry information. If we manage to remove the parameters that do not, we get a smaller model. But this is not easy today, because there is no reliable way of knowing which parameters are important and which are not.
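To make the idea concrete, here is a minimal sketch of one simple heuristic, magnitude pruning, which assumes that weights with a small absolute value matter least. That assumption is mine for the sake of the example, not a claim from this post, and as noted above it is far from guaranteed to hold. The function name `magnitude_prune` is hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]

weights = [1.2, -0.5, -4.3, 1.2, -3.1, 0.8, 2.4, 5.4]
pruned = magnitude_prune(weights, 0.5)
print(pruned)
```

The zeroed weights only save memory if the model is then stored in a sparse format or the corresponding rows and columns are actually removed.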

Quantization, on the other hand, consists of reducing the size of each of the model's parameters. This is what we are going to explain in this post.

Parameter format

The weights can be stored in several formats:

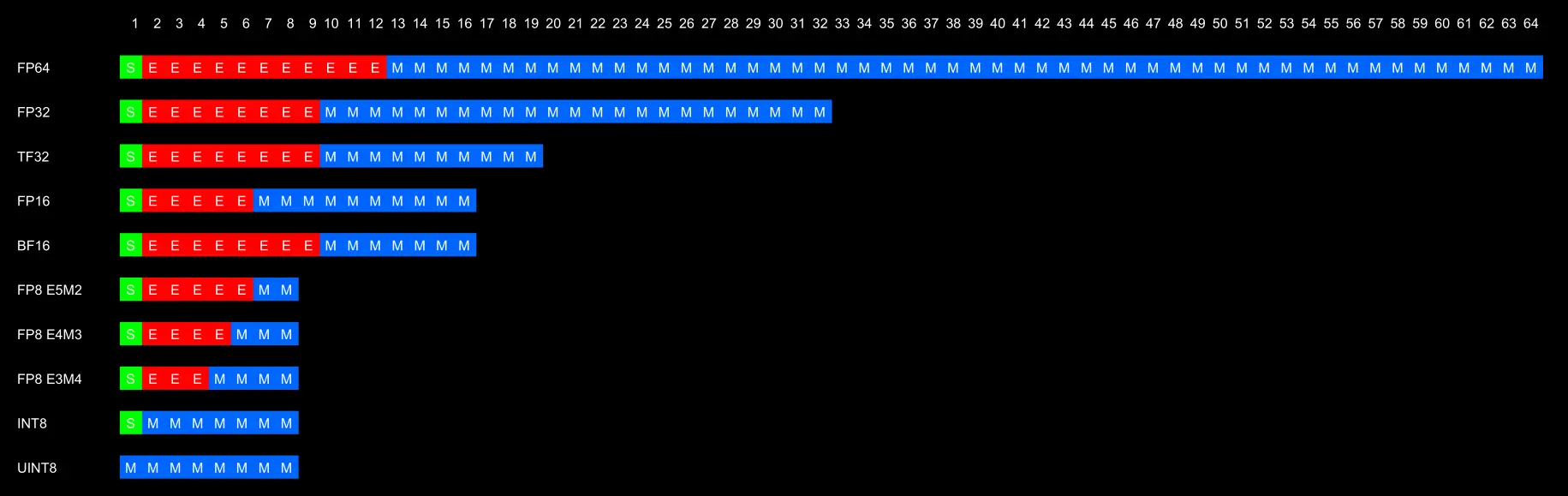

Originally FP32 was used to store the parameters, but as we started to run out of memory to store the models, we started to move to FP16, which did not give bad results.

However, the problem with FP16 is that it cannot reach values as high as FP32, so overflow can occur: when performing internal calculations in the network, a result can be too large to be represented in FP16, which produces errors. This happens because the model was trained in FP32, where all of its internal computations fit, but when it is converted to FP16 for inference, some of those computations may overflow.
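The overflow can be seen with nothing more than Python's `struct` module, whose `e` format packs a number as FP16 and `f` as FP32. The sketch below is only an illustration with invented values: two numbers that each fit in FP16 have a product that does not. The helper name `fits_in_fp16` is hypothetical.

```python
import struct

def fits_in_fp16(x: float) -> bool:
    """True if x can be packed as an IEEE 754 half-precision float."""
    try:
        struct.pack("<e", x)
        return True
    except (OverflowError, struct.error):
        return False

def fits_in_fp32(x: float) -> bool:
    """True if x can be packed as an IEEE 754 single-precision float."""
    try:
        struct.pack("<f", x)
        return True
    except (OverflowError, struct.error):
        return False

a, b = 300.0, 300.0   # both fit comfortably in FP16
product = a * b       # 90000.0, above the FP16 maximum of 65504

print(fits_in_fp16(a), fits_in_fp16(product), fits_in_fp32(product))
```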

Because of these overflow errors, TF32 and BF16 were created. Both have the same number of exponent bits as FP32, so they can reach values just as high, but they use fewer bits overall. The price is fewer mantissa bits, so they cannot represent numbers as accurately as FP32. This can cause rounding errors, but at least the network will not fail with an overflow. TF32 has a total of 19 bits, while BF16 has 16 bits. BF16 is used more often because it saves more memory.
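Since BF16 has the same sign and exponent layout as FP32 with a shorter mantissa, it can be approximated by keeping only the top 16 bits of the FP32 encoding. The sketch below does this by truncation, which is a simplification (real hardware typically rounds to nearest); `to_bf16` is a hypothetical helper.

```python
import struct

def to_bf16(x: float) -> float:
    """Keep the top 16 bits of the FP32 encoding: sign, 8 exponent bits, 7 mantissa bits."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    bits &= 0xFFFF0000  # drop the 16 low mantissa bits (truncation, not rounding)
    (value,) = struct.unpack("<f", struct.pack("<I", bits))
    return value

large = to_bf16(90000.0)  # too large for FP16, but representable in BF16
small = to_bf16(0.3)      # representable, but with visible rounding error

print(large)
print(small, abs(small - 0.3))
```

The value 90000.0 survives, but only approximately: with 7 mantissa bits the result is off by a few hundred, which is the trade of range for precision described above.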

There are also the long-established INT8 and UINT8 formats, which can represent numbers from -128 to 127 and from 0 to 255 respectively. They are good formats for saving memory, since each parameter occupies 1 byte compared to the 4 bytes of FP32. Their problem is that they can only represent a small range of numbers, and only integers, so both problems seen before can occur: overflow and lack of precision.

To solve the problem that INT8 and UINT8 only represent integers, the FP8 and FP4 formats have been created, but they are not yet well established, and there is no single widely adopted definition of them.

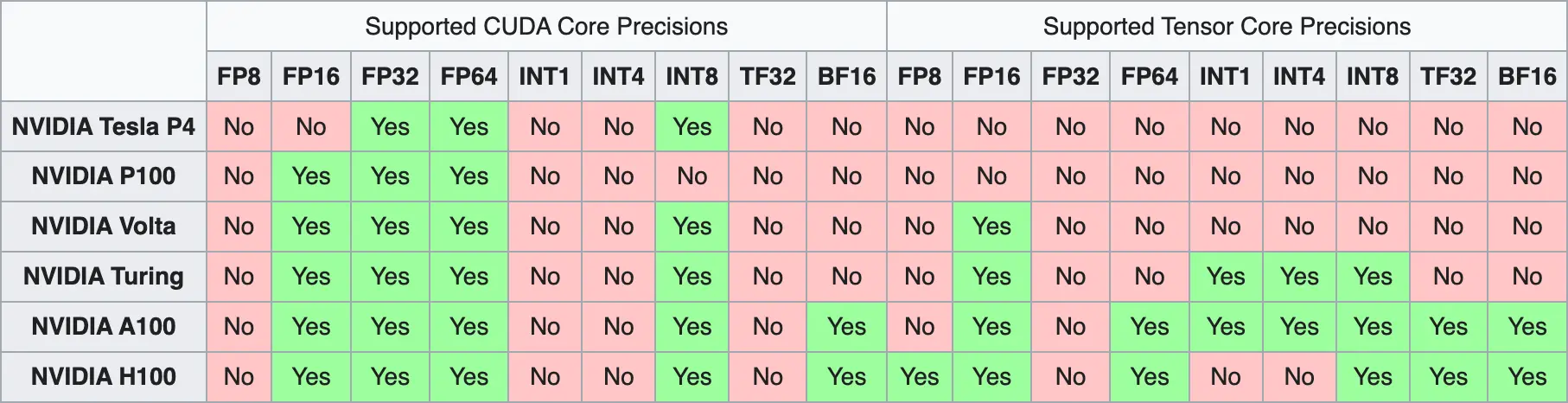

Even if we can store model parameters in smaller formats, and even if we manage to solve the overflow and rounding problems, there is another problem: not all GPUs support all formats. These memory problems are relatively new, so older GPUs were not designed with them in mind and lack support for some of the newer formats.

As a final curiosity, models are usually trained with what is called mixed precision. The model weights are stored in FP32, but the forward pass and backward pass are performed in FP16 to make them faster. The gradients resulting from the backward pass are stored in FP16 and are used to update the FP32 values of the weights.
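One reason for keeping the weights in FP32 can be shown with a toy experiment. This explanation and the numbers are my own illustration, not something measured on a real model: a small update added to a weight stored in FP16 is rounded away, while the same update accumulates in an FP32 weight. The helpers `to_fp16` and `to_fp32` simulate each format by packing and unpacking with `struct`.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round x to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

update = to_fp16(1e-4)  # a small update, itself representable in FP16

w_fp16 = to_fp16(1.0)   # weight kept in FP16
w_fp32 = to_fp32(1.0)   # weight kept in FP32, as in mixed precision

for _ in range(100):
    w_fp16 = to_fp16(w_fp16 + update)  # rounds back to 1.0 every time
    w_fp32 = to_fp32(w_fp32 + update)  # the small steps accumulate

print(w_fp16, w_fp32)
```

Near 1.0 the gap between consecutive FP16 values is about 0.001, so an update of 0.0001 is lost on every step.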

Types of quantization

Zero point quantization

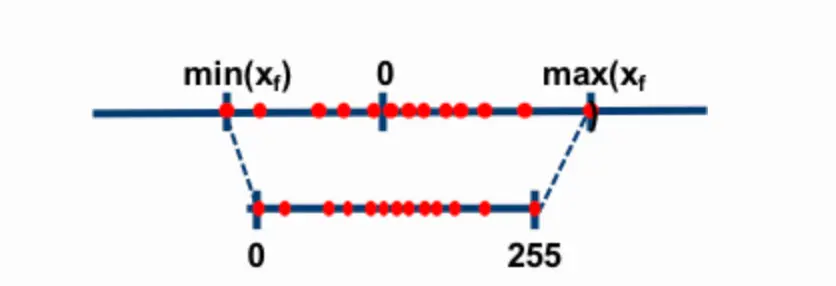

This is the simplest type of quantization. It consists of reducing the range of values linearly: the minimum value of FP32 maps to the minimum value of the new format, the zero of FP32 maps to the zero of the new format, and the maximum value of FP32 maps to the maximum value of the new format.

For example, suppose we want to store numbers between -1 and 1 in INT8 format, taking -127 and 127 as the limits of the INT8 range. To represent the value 0.3, we multiply 0.3 by 127, which gives 38.1, and round it to 38, which is the value that would be stored in INT8.

To do the opposite step and convert 38 back to the range between -1 and 1, we divide 38 by 127, which gives 0.2992. That is approximately 0.3, with an error of about 0.0008.
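The round trip for 0.3 can be written as two small functions. This is a sketch under the same assumptions as the example (values between -1 and 1, integer range from -127 to 127); the names `quantize` and `dequantize` are just illustrative.

```python
INT8_MAX = 127  # symmetric integer range used in the example: -127..127

def quantize(x: float, max_abs: float = 1.0) -> int:
    """Map a float in [-max_abs, max_abs] to an integer in [-127, 127]."""
    return round(x / max_abs * INT8_MAX)

def dequantize(q: int, max_abs: float = 1.0) -> float:
    """Map the stored integer back to an approximate float."""
    return q / INT8_MAX * max_abs

q = quantize(0.3)
x_hat = dequantize(q)
error = abs(0.3 - x_hat)

print(q, x_hat, error)
```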

Affine quantization

In this type of quantization, if you have an array of values in one format and you want to convert it to another, you first divide the whole array by its maximum absolute value and then multiply the whole array by the maximum value of the new format.

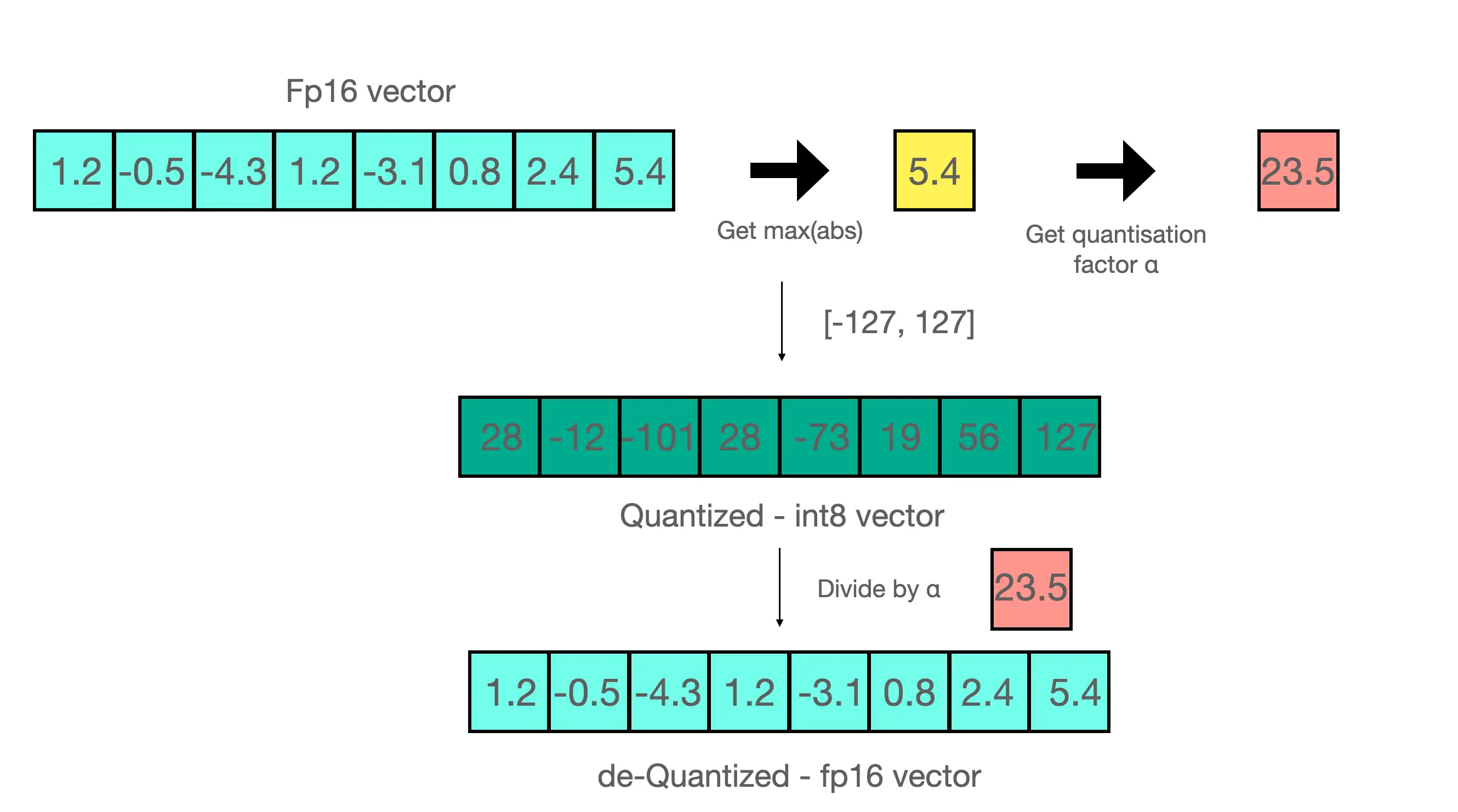

For example, in the image above we have the array

[1.2, -0.5, -4.3, 1.2, -3.1, 0.8, 2.4, 5.4]

As its maximum value is 5.4, we divide the array by that value and get

[0.2222222222, -0.09259259259, -0.7962962963, 0.2222222222, -0.5740740741, 0.1481481481, 0.4444444444, 1]

If we now multiply all the values by 127, which is the maximum value of INT8, we get

[28.22222222, -11.75925926, -101.1296296, 28.22222222, -72.90740741, 18.81481481, 56.44444444, 127]

Which, rounded off, would be

[28, -12, -101, 28, -73, 19, 56, 127]

If we now wanted to perform the inverse step, we would have to divide the resulting array by 127, which would give

[0.2204724409, -0.09448818898, -0.7952755906, 0.2204724409, -0.5748031496, 0.1496062992, 0.4409448819, 1]

And multiply again by 5.4, which would give us

[1.190551181, -0.5102362205, -4.294488189, 1.190551181, -3.103937008, 0.8078740157, 2.381102362, 5.4]

If we compare it with the original array, we see that most values come back with a small error (for example, 2.4 becomes roughly 2.381).
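The same steps can be sketched in Python for the example array. One assumption differs slightly from the wording above: the code divides by the maximum absolute value, so that it would also work when the largest magnitude in the array is negative. For this array both give 5.4. The function names are illustrative.

```python
INT8_MAX = 127  # maximum of the symmetric integer range used above

def quantize_array(values):
    """Scale by the largest magnitude, then round to integers in [-127, 127]."""
    scale = max(abs(v) for v in values) / INT8_MAX
    return [round(v / scale) for v in values], scale

def dequantize_array(quantized, scale):
    """Inverse step: map the integers back to approximate floats."""
    return [q * scale for q in quantized]

original = [1.2, -0.5, -4.3, 1.2, -3.1, 0.8, 2.4, 5.4]
quantized, scale = quantize_array(original)
restored = dequantize_array(quantized, scale)
max_error = max(abs(o - r) for o, r in zip(original, restored))

print(quantized)
print([round(r, 4) for r in restored])
print(max_error)
```

Because each value is rounded to the nearest integer step, the error per value can never exceed half a step, that is, `scale / 2`.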

When to quantize

Post-training quantization

As the name implies, quantization occurs after training. The model is trained in FP32 and then quantized to another format. This method is the simplest, but the quantization error it introduces can reduce the accuracy of the model.

Quantization during training

During training, the forward pass is performed on both the original model and a quantized copy, and the errors introduced by quantization are measured so that they can be mitigated. This makes training more expensive, because both the original and the quantized model have to be kept in memory, and slower, because the forward pass has to be run on two models.
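A toy version of that comparison is sketched below for a single linear neuron. Everything in it is an assumption for illustration: the weights are reused from the earlier example, the inputs are invented, and the quantized copy is simulated by quantizing and immediately dequantizing the weights (often called fake quantization). A real training loop would feed the measured error back into the weight updates, which this sketch does not do.

```python
INT8_MAX = 127

def fake_quantize(weights):
    """Quantize to the range [-127, 127] and immediately convert back to floats."""
    scale = max(abs(w) for w in weights) / INT8_MAX
    return [round(w / scale) * scale for w in weights]

def forward(weights, inputs):
    """A single linear neuron: dot product of weights and inputs."""
    return sum(w * x for w, x in zip(weights, inputs))

weights = [1.2, -0.5, -4.3, 1.2, -3.1, 0.8, 2.4, 5.4]
inputs = [0.5, -1.0, 0.25, 2.0, -0.5, 1.0, 0.0, 0.1]  # hypothetical inputs

y_original = forward(weights, inputs)                   # pass on the original model
y_quantized = forward(fake_quantize(weights), inputs)   # pass on the quantized copy
quantization_error = abs(y_original - y_quantized)

print(y_original, y_quantized, quantization_error)
```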

Quantization methods

Below are the links to the posts where I explain each of the methods so that this post does not become too long.

- LLM.int8()

- GPTQ

- QLoRA

- AWQ

- QuIP

- GGUF

- HQQ

- AQLM

- FBGEMM FP8