Disclaimer: This post has been translated to English using a machine translation model. Please, let me know if you find any mistakes.

Install the OpenAI library

To use the OpenAI API, we first need to install the OpenAI library. To do this, we run the following command:

%pip install --upgrade openai

Import the OpenAI library

Once the library is installed, we import it to use it in our code.

import openai

Get an API Key

To use the OpenAI API, it is necessary to obtain an API Key. To do this, we go to the OpenAI page and register. Once registered, we navigate to the API Keys section and create a new API Key.

Once we have it, we tell the OpenAI API what our API Key is.

api_key = "Put your API key here"
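Writing the key directly in the code makes it easy to leak it by accident. As an alternative, here is a minimal sketch that reads it from an environment variable. It assumes the key has been exported as OPENAI_API_KEY (the variable the openai library itself looks for when no api_key is passed); the helper name load_api_key is hypothetical.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


# Usage (uncomment once the variable is set):
# api_key = load_api_key()
```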

We create our first chatbot

With the OpenAI API, it's very easy to create a simple chatbot, to which we will pass a prompt, and it will return a response.

First, we need to choose which model we are going to use. In my case, I will use the gpt-3.5-turbo-1106 model, which is good enough for this post as of today, since what we are going to do does not require the best model. OpenAI has a list with all their models and a page with the prices.

model = "gpt-3.5-turbo-1106"

Now we need to create a client that will communicate with the OpenAI API.

client = openai.OpenAI(api_key=api_key, organization=None)

As we can see, we have passed our API Key. Additionally, you can pass the organization, but in our case, it is not necessary.

We create the prompt

promtp = "Cuál es el mejor lenguaje de programación para aprender?"

And now we can ask OpenAI for a response.

response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
)

Let's look at the response.

type(response), response

(openai.types.chat.chat_completion.ChatCompletion,ChatCompletion(id='chatcmpl-8RaHCm9KalLxj2PPbLh6f8A4djG8Y', choices=[Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content='No hay un "mejor" lenguaje de programación para aprender, ya que depende de tus intereses, objetivos y el tipo de desarrollo que te interese. Algunos lenguajes populares para empezar a aprender a programar incluyen Python, JavaScript, Java, C# y Ruby. Estos lenguajes son conocidos por su sintaxis clara y su versatilidad, lo que los hace buenos candidatos para principiantes. También es útil investigar qué lenguajes son populares en la industria en la que te gustaría trabajar, ya que el conocimiento de un lenguaje en demanda puede abrirte más oportunidades laborales. En resumen, la elección del lenguaje de programación para aprender dependerá de tus preferencias personales y de tus metas profesionales.', role='assistant', function_call=None, tool_calls=None))], created=1701584994, model='gpt-3.5-turbo-1106', object='chat.completion', system_fingerprint='fp_eeff13170a', usage=CompletionUsage(completion_tokens=181, prompt_tokens=21, total_tokens=202)))

print(f"response.id = {response.id}")
print(f"response.choices = {response.choices}")
for i in range(len(response.choices)):
    print(f"response.choices[{i}] = {response.choices[i]}")
    print(f" response.choices[{i}].finish_reason = {response.choices[i].finish_reason}")
    print(f" response.choices[{i}].index = {response.choices[i].index}")
    print(f" response.choices[{i}].message = {response.choices[i].message}")
    # Collapse blank lines so the printed content is more compact
    content = response.choices[i].message.content.replace('\n\n', '\n')
    print(f" response.choices[{i}].message.content = {content}")
    print(f" response.choices[{i}].message.role = {response.choices[i].message.role}")
    print(f" response.choices[{i}].message.function_call = {response.choices[i].message.function_call}")
    print(f" response.choices[{i}].message.tool_calls = {response.choices[i].message.tool_calls}")
print(f"response.created = {response.created}")
print(f"response.model = {response.model}")
print(f"response.object = {response.object}")
print(f"response.system_fingerprint = {response.system_fingerprint}")
print(f"response.usage = {response.usage}")
print(f" response.usage.completion_tokens = {response.usage.completion_tokens}")
print(f" response.usage.prompt_tokens = {response.usage.prompt_tokens}")
print(f" response.usage.total_tokens = {response.usage.total_tokens}")

response.id = chatcmpl-8RaHCm9KalLxj2PPbLh6f8A4djG8Y
response.choices = [Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content='No hay un "mejor" lenguaje de programación para aprender, ya que depende de tus intereses, objetivos y el tipo de desarrollo que te interese. Algunos lenguajes populares para empezar a aprender a programar incluyen Python, JavaScript, Java, C# y Ruby. Estos lenguajes son conocidos por su sintaxis clara y su versatilidad, lo que los hace buenos candidatos para principiantes. También es útil investigar qué lenguajes son populares en la industria en la que te gustaría trabajar, ya que el conocimiento de un lenguaje en demanda puede abrirte más oportunidades laborales. En resumen, la elección del lenguaje de programación para aprender dependerá de tus preferencias personales y de tus metas profesionales.', role='assistant', function_call=None, tool_calls=None))]
response.choices[0] = Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content='No hay un "mejor" lenguaje de programación para aprender, ya que depende de tus intereses, objetivos y el tipo de desarrollo que te interese. Algunos lenguajes populares para empezar a aprender a programar incluyen Python, JavaScript, Java, C# y Ruby. Estos lenguajes son conocidos por su sintaxis clara y su versatilidad, lo que los hace buenos candidatos para principiantes. También es útil investigar qué lenguajes son populares en la industria en la que te gustaría trabajar, ya que el conocimiento de un lenguaje en demanda puede abrirte más oportunidades laborales. En resumen, la elección del lenguaje de programación para aprender dependerá de tus preferencias personales y de tus metas profesionales.', role='assistant', function_call=None, tool_calls=None))
 response.choices[0].finish_reason = stop
 response.choices[0].index = 0
 response.choices[0].message = ChatCompletionMessage(content='No hay un "mejor" lenguaje de programación para aprender, ya que depende de tus intereses, objetivos y el tipo de desarrollo que te interese. Algunos lenguajes populares para empezar a aprender a programar incluyen Python, JavaScript, Java, C# y Ruby. Estos lenguajes son conocidos por su sintaxis clara y su versatilidad, lo que los hace buenos candidatos para principiantes. También es útil investigar qué lenguajes son populares en la industria en la que te gustaría trabajar, ya que el conocimiento de un lenguaje en demanda puede abrirte más oportunidades laborales. En resumen, la elección del lenguaje de programación para aprender dependerá de tus preferencias personales y de tus metas profesionales.', role='assistant', function_call=None, tool_calls=None)
 response.choices[0].message.content = No hay un "mejor" lenguaje de programación para aprender, ya que depende de tus intereses, objetivos y el tipo de desarrollo que te interese. Algunos lenguajes populares para empezar a aprender a programar incluyen Python, JavaScript, Java, C# y Ruby. Estos lenguajes son conocidos por su sintaxis clara y su versatilidad, lo que los hace buenos candidatos para principiantes. También es útil investigar qué lenguajes son populares en la industria en la que te gustaría trabajar, ya que el conocimiento de un lenguaje en demanda puede abrirte más oportunidades laborales.
En resumen, la elección del lenguaje de programación para aprender dependerá de tus preferencias personales y de tus metas profesionales.
 response.choices[0].message.role = assistant
 response.choices[0].message.function_call = None
 response.choices[0].message.tool_calls = None
response.created = 1701584994
response.model = gpt-3.5-turbo-1106
response.object = chat.completion
response.system_fingerprint = fp_eeff13170a
response.usage = CompletionUsage(completion_tokens=181, prompt_tokens=21, total_tokens=202)
 response.usage.completion_tokens = 181
 response.usage.prompt_tokens = 21
 response.usage.total_tokens = 202

As we can see, it returns a lot of information.

For example, response.choices[0].finish_reason = stop means that the model stopped generating text because it reached a natural end of the message (or one of the stop sequences we passed). This is very useful for debugging, since the possible values are:

- stop: the API returned the complete message
- length: the model's output is incomplete because it reached max_tokens or the model's token limit
- function_call: the model decided to call a function
- content_filter: content was omitted due to OpenAI's content filters
- null: the API response is still in progress or incomplete
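To make these values easier to act on, here is a small sketch that maps each finish_reason to a short explanation and flags truncated answers. The helper names describe_finish_reason and is_truncated are hypothetical, not part of the OpenAI library.

```python
def describe_finish_reason(finish_reason):
    """Map a finish_reason value to a short human-readable explanation."""
    explanations = {
        "stop": "complete message (natural end or a stop sequence was reached)",
        "length": "truncated by max_tokens or the model's token limit",
        "function_call": "the model decided to call a function",
        "content_filter": "content omitted by the content filter",
        None: "response still in progress or incomplete",
    }
    return explanations.get(finish_reason, f"unknown finish_reason: {finish_reason!r}")


def is_truncated(finish_reason):
    """True when the answer was cut off and may need a larger max_tokens."""
    return finish_reason == "length"


print(describe_finish_reason("stop"))
```

With a real response, it could be called as describe_finish_reason(response.choices[0].finish_reason).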

It also provides us with token information to keep track of the money spent.
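As a rough illustration of how the usage numbers translate into money, the sketch below estimates the cost of the request above. The two prices are placeholders I made up for the example, not OpenAI's real prices, so they should be replaced with the values from the pricing page.

```python
def estimate_cost(prompt_tokens, completion_tokens, input_price_per_1k, output_price_per_1k):
    """Estimate the cost of one request in dollars from its token usage."""
    input_cost = prompt_tokens / 1000 * input_price_per_1k
    output_cost = completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


# Placeholder prices in dollars per 1,000 tokens (assumptions, check the pricing page)
INPUT_PRICE_PER_1K = 0.001
OUTPUT_PRICE_PER_1K = 0.002

# Token counts taken from response.usage in the example above
cost = estimate_cost(21, 181, INPUT_PRICE_PER_1K, OUTPUT_PRICE_PER_1K)
print(f"Estimated cost: ${cost:.6f}")
```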

Parameters

When requesting a response from OpenAI, we can pass it a series of parameters to get a more suitable response to what we want. Let's see which parameters we can pass it.

- messages: List of messages that have been sent to the chatbot
- model: Model we want to use
- frequency_penalty: Frequency penalty. The higher the value, the more the model is penalized for reusing tokens in proportion to how often they have already appeared, so it is less likely to repeat the same text
- max_tokens: Maximum number of tokens that the model can return
- n: Number of responses we want the model to return
- presence_penalty: Presence penalty. The higher the value, the more the model is penalized for reusing any token that has already appeared, so it is more likely to move on to new topics
- seed: Seed for text generation, to make the responses as reproducible as possible
- stop: List of sequences that make the model stop generating text
- stream: If True, the API returns the response in chunks as the model generates tokens. If False, the API returns the response once the model has generated all tokens
- temperature: The higher the value, the more random (creative) the output will be
- top_p: The model samples only from the most likely tokens whose probabilities add up to top_p. The higher the value, the more varied the output will be
- user: User ID of the person talking to the chatbot
- timeout: Maximum time we want to wait for the API to return a response

Let's look at some of them.

Messages

We can pass the API a list of messages that have been sent to the chatbot. This is useful for passing the conversation history, so the chatbot can generate a more appropriate response, and for conditioning its response on what was said previously.

Additionally, we can pass a system message to indicate how it should behave.

Conversation History

Let's see an example of the conversation history. First, we introduce ourselves and ask how it is doing:

promtp = "Hola, soy MaximoFN, ¿Cómo estás?"
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

Hola MaximoFN, soy un modelo de inteligencia artificial diseñado para conversar y ayudar en lo que necesites. ¿En qué puedo ayudarte hoy?

It has replied that it is an AI model designed to chat and help, and asked how it can help us. However, each request is independent, so if I now ask it what my name is, it won't be able to answer.

promtp = "¿Me puedes decir cómo me llamo?"
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

Lo siento, no tengo esa información. Pero puedes decírmelo tú.

To solve this, we pass the conversation history

promtp = "¿Me puedes decir cómo me llamo?"
response = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "user", "content": "Hola, soy MaximoFN, ¿Cómo estás?"},
        {"role": "assistant", "content": "Hola MaximoFN, soy un modelo de inteligencia artificial diseñado para conversar y ayudar en lo que necesites. ¿En qué puedo ayudarte hoy?"},
        {"role": "user", "content": f"{promtp}"},
    ],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

Tu nombre es MaximoFN.
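Building the history by hand quickly becomes tedious. A minimal sketch of a helper that stores every turn and resends the whole history on each request could look like this. The names Conversation and make_openai_sender are hypothetical; the only API call used is the client.chat.completions.create call shown above.

```python
class Conversation:
    """Keep the message history so every request includes the previous turns."""

    def __init__(self, system_message=None):
        self.messages = []
        if system_message:
            self.messages.append({"role": "system", "content": system_message})

    def ask(self, prompt, send):
        """Add the user prompt, get the reply via `send`, and store both."""
        self.messages.append({"role": "user", "content": prompt})
        reply = send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def make_openai_sender(client, model):
    """Build a `send` callable from the client and model created earlier."""
    def send(messages):
        response = client.chat.completions.create(model=model, messages=messages)
        return response.choices[0].message.content
    return send


# Usage with the real API (not executed here):
# chat = Conversation()
# send = make_openai_sender(client, model)
# chat.ask("Hola, soy MaximoFN, ¿Cómo estás?", send)
# print(chat.ask("¿Me puedes decir cómo me llamo?", send))
```

Passing the sender as a callable keeps the history logic independent of the API, so it can be tried out with a fake sender without spending tokens.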

Conditioning through examples

Now let's look at an example of how to condition the chatbot's response with previous messages. First, we ask it how to list the files of a directory in the terminal, without giving it any examples.

promtp = "¿Cómo puedo listar los archivos de un directorio en la terminal?"
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

En la terminal de un sistema operativo Unix o Linux, puedes listar los archivos de un directorio utilizando el comando `ls`. Por ejemplo, si quieres listar los archivos del directorio actual, simplemente escribe `ls` y presiona Enter. Si deseas listar los archivos de un directorio específico, puedes proporcionar la ruta del directorio después del comando `ls`, por ejemplo `ls /ruta/del/directorio`. Si deseas ver más detalles sobre los archivos, puedes usar la opción `-l` para obtener una lista detallada o `-a` para mostrar también los archivos ocultos.

Let's see what it answers if we condition it now with examples of short responses.

promtp = "¿Cómo puedo listar los archivos de un directorio en la terminal?"
response = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "user", "content": "Obtener las 10 primeras líneas de un archivo"},
        {"role": "assistant", "content": "head -n 10"},
        {"role": "user", "content": "Encontrar todos los archivos con extensión .txt"},
        {"role": "assistant", "content": "find . -name '*.txt'"},
        {"role": "user", "content": "Dividir un archivo en varias páginas"},
        {"role": "assistant", "content": "split -l 1000"},
        {"role": "user", "content": "Buscar la dirección IP 12.34.56.78"},
        {"role": "assistant", "content": "nslookup 12.34.56.78"},
        {"role": "user", "content": "Obtener las 5 últimas líneas de foo.txt"},
        {"role": "assistant", "content": "tail -n 5 foo.txt"},
        {"role": "user", "content": "Convertir ejemplo.png en JPEG"},
        {"role": "assistant", "content": "convert example.png example.jpg"},
        {"role": "user", "content": "Create a git branch named 'new-feature'"},
        {"role": "assistant", "content": "git branch new-feature"},
        {"role": "user", "content": f"{promtp}"},
    ],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

Puede usar el comando `ls` en la terminal para listar los archivos de un directorio. Por ejemplo:```ls```Muestra los archivos y directorios en el directorio actual.

We have managed to get a shorter response.
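Writing every example as a pair of dictionaries is repetitive. As a sketch, the alternating user/assistant messages can be generated from a list of (question, answer) pairs. The function name build_few_shot_messages is hypothetical, and only two of the examples above are reused here to keep it short.

```python
def build_few_shot_messages(examples, prompt, system_message=None):
    """Turn (question, answer) pairs into alternating user/assistant messages."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    for question, answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    # The real question always goes last
    messages.append({"role": "user", "content": prompt})
    return messages


examples = [
    ("Obtener las 10 primeras líneas de un archivo", "head -n 10"),
    ("Obtener las 5 últimas líneas de foo.txt", "tail -n 5 foo.txt"),
]
messages = build_few_shot_messages(
    examples, "¿Cómo puedo listar los archivos de un directorio en la terminal?"
)
print(len(messages))
```

The resulting list can be passed directly as the messages argument of client.chat.completions.create.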

Conditioning with system message

We can pass a system message to indicate how it should behave.

promtp = "¿Cómo puedo listar los archivos de un directorio en la terminal?"
response = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "system", "content": "Eres un experto asistente de terminal de ubuntu que responde solo con comandos de terminal"},
        {"role": "user", "content": f"{promtp}"},
    ],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

Puedes listar los archivos de un directorio en la terminal usando el comando `ls`. Por ejemplo, para listar los archivos del directorio actual, simplemente escribe `ls` y presiona Enter. Si quieres listar los archivos de un directorio específico, puedes utilizar `ls` seguido de la ruta del directorio. Por ejemplo, `ls /ruta/del/directorio`.

promtp = "¿Cómo puedo listar los archivos de un directorio en la terminal?"
response = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "system", "content": "Eres un experto asistente de terminal de ubuntu que responde solo con comandos de terminal"},
        {"role": "user", "content": "Obtener las 10 primeras líneas de un archivo"},
        {"role": "assistant", "content": "head -n 10"},
        {"role": "user", "content": "Encontrar todos los archivos con extensión .txt"},
        {"role": "assistant", "content": "find . -name '*.txt'"},
        {"role": "user", "content": "Dividir un archivo en varias páginas"},
        {"role": "assistant", "content": "split -l 1000"},
        {"role": "user", "content": "Buscar la dirección IP 12.34.56.78"},
        {"role": "assistant", "content": "nslookup 12.34.56.78"},
        {"role": "user", "content": "Obtener las 5 últimas líneas de foo.txt"},
        {"role": "assistant", "content": "tail -n 5 foo.txt"},
        {"role": "user", "content": "Convertir ejemplo.png en JPEG"},
        {"role": "assistant", "content": "convert example.png example.jpg"},
        {"role": "user", "content": "Create a git branch named 'new-feature'"},
        {"role": "assistant", "content": "git branch new-feature"},
        {"role": "user", "content": f"{promtp}"},
    ],
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)

Puedes listar los archivos de un directorio en la terminal utilizando el comando "ls". Por ejemplo, para listar los archivos en el directorio actual, puedes ejecutar el comando "ls". Si deseas listar los archivos de otro directorio, simplemente especifica el directorio después del comando "ls", por ejemplo "ls /ruta/al/directorio".

Maximum number of response tokens

We can limit the number of tokens that the model can return. This is useful to keep responses from being longer than we want.

promtp = "¿Cuál es el mejor lenguaje de programación para aprender?"
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
    max_tokens=50,
)
# Collapse blank lines so the printed content is more compact
content = response.choices[0].message.content.replace('\n\n', '\n')
print(content)
# Index 0 is used explicitly instead of relying on a loop variable from an earlier cell
print(f" response.choices[0].finish_reason = {response.choices[0].finish_reason}")

La respuesta a esta pregunta puede variar dependiendo de los intereses y objetivos individuales, ya que cada lenguaje de programación tiene sus propias ventajas y desventajas. Sin embargo, algunos de los lenguajes más
 response.choices[0].finish_reason = length

As we can see, the response is cut off mid-sentence because it would exceed the token limit. Additionally, the stopping reason is now length instead of stop.

Model Creativity through Temperature

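We can make the model more creative through the temperature parameter, which the API accepts in the range 0 to 2. The higher the value, the more random (and therefore more creative) the output will be. With 0, the model almost always picks the most likely token, so the responses are nearly deterministic.

promtp = "¿Cuál es el mejor lenguaje de programación para aprender?"
temperature = 0
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
    temperature=temperature,
)
# Collapse blank lines so the printed content is more compact
content_0 = response.choices[0].message.content.replace('\n\n', '\n')
print(content_0)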
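To get an intuition for what temperature does, here is an illustrative sketch of temperature-scaled softmax, the usual way this parameter is described. It is not OpenAI's actual implementation, and the scores in logits are hypothetical values for three candidate tokens. In this sketch, a temperature of 0 is not allowed because it would divide by zero; it corresponds to the limit where the highest score always wins.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - max_scaled) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With a low temperature almost all the probability goes to the first token, while with a high temperature the three probabilities get closer to each other, so less likely tokens are sampled more often.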

No hay un "mejor" lenguaje de programación para aprender, ya que la elección depende de los intereses y objetivos individuales. Algunos lenguajes populares para principiantes incluyen Python, JavaScript, Java y C#. Cada uno tiene sus propias ventajas y desventajas, por lo que es importante investigar y considerar qué tipo de desarrollo de software te interesa antes de elegir un lenguaje para aprender.

promtp = "¿Cuál es el mejor lenguaje de programación para aprender?"
temperature = 1
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
    temperature=temperature,
)
# Collapse blank lines so the printed content is more compact
content_1 = response.choices[0].message.content.replace('\n\n', '\n')
print(content_1)

No hay un "mejor" lenguaje de programación para aprender, ya que la elección depende de los objetivos y preferencias individuales del programador. Sin embargo, algunos lenguajes populares para principiantes incluyen Python, JavaScript, Java y C++. Estos lenguajes son relativamente fáciles de aprender y tienen una amplia gama de aplicaciones en la industria de la tecnología. Es importante considerar qué tipo de proyectos o campos de interés te gustaría explorar al momento de elegir un lenguaje de programación para aprender.

print(content_0)
print(content_1)

No hay un "mejor" lenguaje de programación para aprender, ya que la elección depende de los intereses y objetivos individuales. Algunos lenguajes populares para principiantes incluyen Python, JavaScript, Java y C#. Cada uno tiene sus propias ventajas y desventajas, por lo que es importante investigar y considerar qué tipo de desarrollo de software te interesa antes de elegir un lenguaje para aprender.
No hay un "mejor" lenguaje de programación para aprender, ya que la elección depende de los objetivos y preferencias individuales del programador. Sin embargo, algunos lenguajes populares para principiantes incluyen Python, JavaScript, Java y C++. Estos lenguajes son relativamente fáciles de aprender y tienen una amplia gama de aplicaciones en la industria de la tecnología. Es importante considerar qué tipo de proyectos o campos de interés te gustaría explorar al momento de elegir un lenguaje de programación para aprender.

Model Creativity through top_p

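We can also make the model more creative using the top_p parameter (nucleus sampling), which takes values between 0 and 1. The model only samples from the most likely tokens whose probabilities add up to top_p, so the higher the value, the more varied the output will be.

promtp = "¿Cuál es el mejor lenguaje de programación para aprender?"
top_p = 0
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
    top_p=top_p,
)
# Collapse blank lines so the printed content is more compact
content_0 = response.choices[0].message.content.replace('\n\n', '\n')
print(content_0)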
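The idea behind top_p can be illustrated with a small sketch of nucleus filtering: the tokens are sorted by probability and kept until their cumulative probability reaches top_p. This is only an illustration of the technique, not OpenAI's implementation, and the probabilities in probs are hypothetical.

```python
def nucleus_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for token, p in ranked:
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    # Renormalise so the kept probabilities sum to 1 again
    return {token: p / total for token, p in kept.items()}


probs = {"Python": 0.5, "JavaScript": 0.3, "Java": 0.15, "Ruby": 0.05}  # hypothetical
print(nucleus_filter(probs, 0.1))  # only the most likely token survives
print(nucleus_filter(probs, 0.9))  # the three most likely tokens survive
```

With a very small top_p only the most likely token can be chosen, which is why the responses become nearly deterministic, while a top_p of 1 leaves every token available.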

No hay un "mejor" lenguaje de programación para aprender, ya que la elección depende de los intereses y objetivos individuales. Algunos lenguajes populares para principiantes incluyen Python, JavaScript, Java y C#. Cada uno tiene sus propias ventajas y desventajas, por lo que es importante investigar y considerar qué tipo de desarrollo de software te interesa antes de elegir un lenguaje para aprender.

promtp = "¿Cuál es el mejor lenguaje de programación para aprender?"
top_p = 1
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
    top_p=top_p,
)
# Collapse blank lines so the printed content is more compact
content_1 = response.choices[0].message.content.replace('\n\n', '\n')
print(content_1)

El mejor lenguaje de programación para aprender depende de los objetivos del aprendizaje y del tipo de programación que se quiera realizar. Algunos lenguajes de programación populares para principiantes incluyen Python, Java, JavaScript y Ruby. Sin embargo, cada lenguaje tiene sus propias ventajas y desventajas, por lo que es importante considerar qué tipo de proyectos o aplicaciones se quieren desarrollar antes de elegir un lenguaje de programación para aprender. Python es a menudo recomendado por su facilidad de uso y versatilidad, mientras que JavaScript es ideal para la programación web.

print(content_0)
print(content_1)

No hay un "mejor" lenguaje de programación para aprender, ya que la elección depende de los intereses y objetivos individuales. Algunos lenguajes populares para principiantes incluyen Python, JavaScript, Java y C#. Cada uno tiene sus propias ventajas y desventajas, por lo que es importante investigar y considerar qué tipo de desarrollo de software te interesa antes de elegir un lenguaje para aprender.
El mejor lenguaje de programación para aprender depende de los objetivos del aprendizaje y del tipo de programación que se quiera realizar. Algunos lenguajes de programación populares para principiantes incluyen Python, Java, JavaScript y Ruby. Sin embargo, cada lenguaje tiene sus propias ventajas y desventajas, por lo que es importante considerar qué tipo de proyectos o aplicaciones se quieren desarrollar antes de elegir un lenguaje de programación para aprender. Python es a menudo recomendado por su facilidad de uso y versatilidad, mientras que JavaScript es ideal para la programación web.

Number of responses

We can ask the API to return more than one response. This is useful for getting multiple responses from the model so we can choose the one we like best. For this, we will set the temperature and top_p parameters to 1 to make the model more creative.

promtp = "¿Cuál es el mejor lenguaje de programación para aprender?"
temperature = 1
top_p = 1
response = client.chat.completions.create(
    model=model,
    messages=[{"role": "user", "content": f"{promtp}"}],
    temperature=temperature,
    top_p=top_p,
    n=4,
)
# Print the four responses, collapsing blank lines to keep the output compact
for choice in response.choices:
    content = choice.message.content.replace('\n\n', '\n')
    print(content)

El mejor lenguaje de programación para aprender depende de tus objetivos y del tipo de aplicaciones que te interese desarrollar. Algunos de los lenguajes más populares para aprender son:
1. Python: Es un lenguaje de programación versátil, fácil de aprender y con una amplia comunidad de desarrolladores. Es ideal para principiantes y se utiliza en una gran variedad de aplicaciones, desde desarrollo web hasta inteligencia artificial.
2. JavaScript: Es el lenguaje de programación más utilizado en el desarrollo web. Es imprescindible para aquellos que quieren trabajar en el ámbito del desarrollo frontend y backend.
3. Java: Es un lenguaje de programación muy popular en el ámbito empresarial, por lo que aprender Java puede abrirte muchas puertas laborales. Además, es un lenguaje estructurado que te enseñará conceptos importantes de la programación orientada a objetos.
4. C#: Es un lenguaje de programación desarrollado por Microsoft que se utiliza especialmente en el desarrollo de aplicaciones para Windows. Es ideal para aquellos que quieran enfocarse en el desarrollo de aplicaciones de escritorio.
En resumen, el mejor lenguaje de programación para aprender depende de tus intereses y objetivos personales. Es importante investigar y considerar qué tipos de aplicaciones te gustaría desarrollar para elegir el lenguaje que más se adapte a tus necesidades.
El mejor lenguaje de programación para aprender depende de los objetivos y necesidades individuales. Algunos de los lenguajes de programación más populares y ampliamente utilizados incluyen Python, JavaScript, Java, C++, Ruby y muchos otros. Python es a menudo recomendado para principiantes debido a su sintaxis simple y legible, mientras que JavaScript es esencial para el desarrollo web. Java es ampliamente utilizado en el desarrollo de aplicaciones empresariales y Android, y C++ es comúnmente utilizado en sistemas embebidos y juegos.
En última instancia, el mejor lenguaje de programación para aprender dependerá de lo que quiera lograr con su habilidades de programación.
El mejor lenguaje de programación para aprender depende de los intereses y objetivos individuales de cada persona. Algunos de los lenguajes más populares y bien documentados para principiantes incluyen Python, JavaScript, Java y C#. Python es conocido por su simplicidad y versatilidad, mientras que JavaScript es esencial para el desarrollo web. Java y C# son lenguajes ampliamente utilizados en la industria y proporcionan una base sólida para aprender otros lenguajes. En última instancia, la elección del lenguaje dependerá de las metas personales y la aplicación deseada.
El mejor lenguaje de programación para aprender depende de los intereses y objetivos de cada persona. Algunos lenguajes populares para principiantes incluyen Python, Java, JavaScript, C++ y Ruby. Python es frecuentemente recomendado para aprender a programar debido a su sintaxis sencilla y legible, mientras que Java es utilizado en aplicaciones empresariales y Android. JavaScript es fundamental para el desarrollo web, y C++ es comúnmente utilizado en aplicaciones de alto rendimiento. Ruby es conocido por su facilidad de uso y flexibilidad. En última instancia, la elección del lenguaje dependerá de qué tipo de desarrollo te interesa y qué tipo de proyectos deseas realizar.

Retrain OpenAI Model

OpenAI offers the possibility of retraining (fine-tuning) its API models to get better results with our own data. This has the following advantages:

- Higher quality results are obtained for our data

- In a prompt, we can give it examples to make it behave as we want, but only a few. By retraining it, we can provide many more.

- Token savings due to shorter instructions. Since we have already trained it for our use case, we can give it fewer instructions to solve our tasks.

- Lower latency requests. When calling our own models, we will have less latency.

Data Preparation

The OpenAI API asks us to provide the data in a jsonl file, with one example per line, in the following format:

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}
{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}
{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "Around 238,900 miles. Give or take a few, like that really matters."}]}

Each example can have a maximum of 4096 tokens.
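Since each line of a jsonl file is a complete JSON object, the safest way to produce one is to build a dictionary and serialise it with the standard json module instead of concatenating strings by hand. A minimal sketch, where the function name to_jsonl_line is hypothetical:

```python
import json


def to_jsonl_line(system_message, question, answer):
    """Serialise one training example as a single JSON line."""
    example = {
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }
    # ensure_ascii=False keeps accented characters readable in the file
    return json.dumps(example, ensure_ascii=False)


line = to_jsonl_line(
    "Marv is a factual chatbot that is also sarcastic.",
    "Who wrote 'Romeo and Juliet'?",
    "Oh, just some guy named William Shakespeare. Ever heard of him?",
)
print(line)
```

Because json.dumps escapes quotes and other special characters, questions or answers that contain double quotes still produce valid lines.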

Data Validation

To save myself some work, I have passed all my posts to ChatGPT one by one and asked it to generate 10 FAQs for each in CSV format, because I was not sure it would be able to generate the jsonl format requested above. It generated a CSV with the following format for each post:

prompt,completion
What does the Introduction to Python cover in the provided material?,"The Introduction to Python covers topics such as data types, operators, use of functions and classes, handling iterable objects, and using modules. [More information](https://www.maximofn.com/python/)"
What are the basic data types in Python?,Python has 7 basic data types: text (`str`), numeric (`int`, `float`, `complex`), sequences (`list`, `tuple`, `range`), mapping (`dict`), sets (`set`, `frozenset`), booleans (`bool`), and binary (`bytes`, `bytearray`, `memoryview`). [More information](https://www.maximofn.com/python/)
What are and how are operators used in Python?,"Operators in Python are special symbols used to perform operations such as addition, subtraction, multiplication, and division between variables and values. They also include logical operators for comparisons. [More information](https://www.maximofn.com/python/)"
How is a function defined and used in Python?,In Python, a function is defined using the `def` keyword, followed by the function name and parentheses. Functions can have parameters and return values. They are used to encapsulate logic that can be reused throughout the code. [More information](https://www.maximofn.com/python/)
What are classes in Python and how are they used?,"Classes in Python are the foundation of object-oriented programming. They allow you to create objects that encapsulate data and functionalities. Classes are defined using the keyword `class`, followed by the class name. [More information](https://www.maximofn.com/python/)"
...

Each CSV has 10 FAQs.

I'm going to write some code that takes each CSV and generates two new jsonl files, one for training and another for validation.

import json
import os

CSVs_path = "openai/faqs_posts"
percent_train = 0.8
percent_validation = 0.2
jsonl_train = os.path.join(CSVs_path, "train.jsonl")
jsonl_validation = os.path.join(CSVs_path, "validation.jsonl")
system_message = "Eres un amable asistente dispuesto a responder."

# Opening with 'w' creates (or empties) the train.jsonl and validation.jsonl files
with open(jsonl_train, 'w', encoding='utf-8') as f_train, \
     open(jsonl_validation, 'w', encoding='utf-8') as f_validation:
    for file in os.listdir(CSVs_path):  # Get all files in the directory
        if not file.endswith(".csv"):  # Skip anything that is not a csv
            continue
        csv_path = os.path.join(CSVs_path, file)  # Get the path to the csv file
        with open(csv_path, 'r', encoding='utf-8') as f_csv:
            # Skip the header line and any blank lines
            csv_content = [line for line in f_csv if line.strip() and not line.startswith('prompt')]
        number_of_lines = len(csv_content)
        number_of_train = int(number_of_lines * percent_train)  # Lines for train.jsonl
        number_of_validation = int(number_of_lines * percent_validation)  # Lines for validation.jsonl
        for i, line in enumerate(csv_content):
            # The prompt is everything before the first comma, the rest is the completion
            prompt, _, completion = line.partition(',')
            completion = completion.replace('\n', '').replace('"', '')
            example = {"messages": [
                {"role": "system", "content": system_message},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": completion},
            ]}
            # json.dumps escapes special characters, so every line is valid JSON
            json_line = json.dumps(example, ensure_ascii=False) + '\n'
            if i < number_of_train:  # First 80% of the lines go to train.jsonl
                f_train.write(json_line)
            elif i < number_of_train + number_of_validation:  # The rest go to validation.jsonl
                f_validation.write(json_line)

Once I have the two JSONL files, I run a script provided by OpenAI to check their format.

First, we validate the training file

from collections import defaultdict
import json

# Format error checks
format_errors = defaultdict(int)

with open(jsonl_train, 'r', encoding='utf-8') as f:
    dataset = [json.loads(line) for line in f]

for ex in dataset:
    if not isinstance(ex, dict):
        format_errors["data_type"] += 1
        continue

    messages = ex.get("messages", None)
    if not messages:
        format_errors["missing_messages_list"] += 1
        continue

    for message in messages:
        if "role" not in message or "content" not in message:
            format_errors["message_missing_key"] += 1
        if any(k not in ("role", "content", "name", "function_call") for k in message):
            format_errors["message_unrecognized_key"] += 1
        if message.get("role", None) not in ("system", "user", "assistant", "function"):
            format_errors["unrecognized_role"] += 1
        content = message.get("content", None)
        function_call = message.get("function_call", None)
        if (not content and not function_call) or not isinstance(content, str):
            format_errors["missing_content"] += 1

    if not any(message.get("role", None) == "assistant" for message in messages):
        format_errors["example_missing_assistant_message"] += 1

if format_errors:
    print("Found errors:")
    for k, v in format_errors.items():
        print(f"{k}: {v}")
else:
    print("No errors found")

No errors found

And now the validation file

# Format error checks
format_errors = defaultdict(int)

with open(jsonl_validation, 'r', encoding='utf-8') as f:
    dataset = [json.loads(line) for line in f]

for ex in dataset:
    if not isinstance(ex, dict):
        format_errors["data_type"] += 1
        continue

    messages = ex.get("messages", None)
    if not messages:
        format_errors["missing_messages_list"] += 1
        continue

    for message in messages:
        if "role" not in message or "content" not in message:
            format_errors["message_missing_key"] += 1
        if any(k not in ("role", "content", "name", "function_call") for k in message):
            format_errors["message_unrecognized_key"] += 1
        if message.get("role", None) not in ("system", "user", "assistant", "function"):
            format_errors["unrecognized_role"] += 1
        content = message.get("content", None)
        function_call = message.get("function_call", None)
        if (not content and not function_call) or not isinstance(content, str):
            format_errors["missing_content"] += 1

    if not any(message.get("role", None) == "assistant" for message in messages):
        format_errors["example_missing_assistant_message"] += 1

if format_errors:
    print("Found errors:")
    for k, v in format_errors.items():
        print(f"{k}: {v}")
else:
    print("No errors found")

No errors found
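Since the same check runs once per file, it could be wrapped in a function and called twice. The following is a sketch under that assumption: `check_jsonl_format` is a hypothetical helper name, it applies the same rules as the checks above, and the two demo examples are invented to show one passing and one failing case.

```python
import json
import os
import tempfile
from collections import defaultdict

ALLOWED_KEYS = ("role", "content", "name", "function_call")
ALLOWED_ROLES = ("system", "user", "assistant", "function")


def check_jsonl_format(path):
    """Return a dict mapping error name -> count for a chat-format JSONL file."""
    errors = defaultdict(int)
    with open(path, "r", encoding="utf-8") as f:
        # Unlike the script above, blank lines are skipped instead of failing
        dataset = [json.loads(line) for line in f if line.strip()]

    for ex in dataset:
        if not isinstance(ex, dict):
            errors["data_type"] += 1
            continue
        messages = ex.get("messages")
        if not messages:
            errors["missing_messages_list"] += 1
            continue
        for message in messages:
            if "role" not in message or "content" not in message:
                errors["message_missing_key"] += 1
            if any(k not in ALLOWED_KEYS for k in message):
                errors["message_unrecognized_key"] += 1
            if message.get("role") not in ALLOWED_ROLES:
                errors["unrecognized_role"] += 1
            content = message.get("content")
            function_call = message.get("function_call")
            if (not content and not function_call) or not isinstance(content, str):
                errors["missing_content"] += 1
        if not any(m.get("role") == "assistant" for m in messages):
            errors["example_missing_assistant_message"] += 1
    return dict(errors)


# Demo data (made up): one valid example and one without an assistant reply
good = {"messages": [{"role": "user", "content": "Hola"},
                     {"role": "assistant", "content": "Hola, ¿en qué puedo ayudarte?"}]}
bad = {"messages": [{"role": "user", "content": "Hola"}]}

with tempfile.NamedTemporaryFile("w", suffix=".jsonl", delete=False, encoding="utf-8") as tmp:
    tmp.write(json.dumps(good) + "\n" + json.dumps(bad) + "\n")

demo_errors = check_jsonl_format(tmp.name)
os.remove(tmp.name)
print(demo_errors or "No errors found")
```

With the real files, the calls would be `check_jsonl_format(jsonl_train)` and `check_jsonl_format(jsonl_validation)`.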

Token Calculation

Each example can contain at most 4096 tokens; for longer examples, only the first 4096 tokens are used. We are therefore going to count the tokens in each JSONL file, which also tells us how much it will cost to retrain the model.

First, we need to install the tiktoken library, the tokenizer used by OpenAI, which lets us count how many tokens each JSONL file contains.

To install it, run the following command

pip install tiktoken

We create a few helper functions

import tiktoken
import numpy as np

encoding = tiktoken.get_encoding("cl100k_base")


def num_tokens_from_messages(messages, tokens_per_message=3, tokens_per_name=1):
    # Count the tokens of a whole conversation, including per-message overhead
    num_tokens = 0
    for message in messages:
        num_tokens += tokens_per_message
        for key, value in message.items():
            num_tokens += len(encoding.encode(value))
            if key == "name":
                num_tokens += tokens_per_name
    num_tokens += 3
    return num_tokens


def num_assistant_tokens_from_messages(messages):
    # Count only the tokens in the assistant messages
    num_tokens = 0
    for message in messages:
        if message["role"] == "assistant":
            num_tokens += len(encoding.encode(message["content"]))
    return num_tokens


def print_distribution(values, name):
    print(f"\n#### Distribution of {name}:")
    print(f"min:{min(values)}, max: {max(values)}")
    print(f"mean: {np.mean(values)}, median: {np.median(values)}")
    # Note: the labels say p5/p95, but the quantiles computed are 0.1 and 0.9
    print(f"p5: {np.quantile(values, 0.1)}, p95: {np.quantile(values, 0.9)}")

# Warnings and tokens counts
n_missing_system = 0
n_missing_user = 0
n_messages = []
convo_lens = []
assistant_message_lens = []

with open(jsonl_train, 'r', encoding='utf-8') as f:
    dataset = [json.loads(line) for line in f]

for ex in dataset:
    messages = ex["messages"]
    if not any(message["role"] == "system" for message in messages):
        n_missing_system += 1
    if not any(message["role"] == "user" for message in messages):
        n_missing_user += 1
    n_messages.append(len(messages))
    convo_lens.append(num_tokens_from_messages(messages))
    assistant_message_lens.append(num_assistant_tokens_from_messages(messages))

print("Num examples missing system message:", n_missing_system)
print("Num examples missing user message:", n_missing_user)
print_distribution(n_messages, "num_messages_per_example")
print_distribution(convo_lens, "num_total_tokens_per_example")
print_distribution(assistant_message_lens, "num_assistant_tokens_per_example")
n_too_long = sum(l > 4096 for l in convo_lens)
print(f"\n{n_too_long} examples may be over the 4096 token limit, they will be truncated during fine-tuning")

Num examples missing system message: 0
Num examples missing user message: 0
#### Distribution of num_messages_per_example:
min:3, max: 3
mean: 3.0, median: 3.0
p5: 3.0, p95: 3.0
#### Distribution of num_total_tokens_per_example:
min:67, max: 132
mean: 90.13793103448276, median: 90.0
p5: 81.5, p95: 99.5
#### Distribution of num_assistant_tokens_per_example:
min:33, max: 90
mean: 48.66379310344828, median: 48.5
p5: 41.0, p95: 55.5
0 examples may be over the 4096 token limit, they will be truncated during fine-tuning

As we can see, no example in the training set exceeds 4096 tokens

# Warnings and tokens counts
n_missing_system = 0
n_missing_user = 0
n_messages = []
convo_lens = []
assistant_message_lens = []

with open(jsonl_validation, 'r', encoding='utf-8') as f:
    dataset = [json.loads(line) for line in f]

for ex in dataset:
    messages = ex["messages"]
    if not any(message["role"] == "system" for message in messages):
        n_missing_system += 1
    if not any(message["role"] == "user" for message in messages):
        n_missing_user += 1
    n_messages.append(len(messages))
    convo_lens.append(num_tokens_from_messages(messages))
    assistant_message_lens.append(num_assistant_tokens_from_messages(messages))

print("Num examples missing system message:", n_missing_system)
print("Num examples missing user message:", n_missing_user)
print_distribution(n_messages, "num_messages_per_example")
print_distribution(convo_lens, "num_total_tokens_per_example")
print_distribution(assistant_message_lens, "num_assistant_tokens_per_example")
n_too_long = sum(l > 4096 for l in convo_lens)
print(f"\n{n_too_long} examples may be over the 4096 token limit, they will be truncated during fine-tuning")

Num examples missing system message: 0
Num examples missing user message: 0
#### Distribution of num_messages_per_example:
min:3, max: 3
mean: 3.0, median: 3.0
p5: 3.0, p95: 3.0
#### Distribution of num_total_tokens_per_example:
min:80, max: 102
mean: 89.93333333333334, median: 91.0
p5: 82.2, p95: 96.8
#### Distribution of num_assistant_tokens_per_example:
min:41, max: 57
mean: 48.2, median: 49.0
p5: 42.8, p95: 51.6
0 examples may be over the 4096 token limit, they will be truncated during fine-tuning

No example in the validation set exceeds 4096 tokens either.

Calculation of the Cost

Another very important thing is to know how much it will cost us to do this fine-tuning.

# Pricing and default n_epochs estimate
MAX_TOKENS_PER_EXAMPLE = 4096
TARGET_EPOCHS = 3
MIN_TARGET_EXAMPLES = 100
MAX_TARGET_EXAMPLES = 25000
MIN_DEFAULT_EPOCHS = 1
MAX_DEFAULT_EPOCHS = 25

with open(jsonl_train, 'r', encoding='utf-8') as f:
    dataset = [json.loads(line) for line in f]

convo_lens = []
for ex in dataset:
    messages = ex["messages"]
    convo_lens.append(num_tokens_from_messages(messages))

n_epochs = TARGET_EPOCHS
n_train_examples = len(dataset)
if n_train_examples * TARGET_EPOCHS < MIN_TARGET_EXAMPLES:
    n_epochs = min(MAX_DEFAULT_EPOCHS, MIN_TARGET_EXAMPLES // n_train_examples)
elif n_train_examples * TARGET_EPOCHS > MAX_TARGET_EXAMPLES:
    n_epochs = max(MIN_DEFAULT_EPOCHS, MAX_TARGET_EXAMPLES // n_train_examples)

n_billing_tokens_in_dataset = sum(min(MAX_TOKENS_PER_EXAMPLE, length) for length in convo_lens)
print(f"Dataset has ~{n_billing_tokens_in_dataset} tokens that will be charged for during training")
print(f"By default, you'll train for {n_epochs} epochs on this dataset")
print(f"By default, you'll be charged for ~{n_epochs * n_billing_tokens_in_dataset} tokens")
tokens_for_train = n_epochs * n_billing_tokens_in_dataset

Dataset has ~10456 tokens that will be charged for during training
By default, you'll train for 3 epochs on this dataset
By default, you'll be charged for ~31368 tokens

At the time of writing this post, training gpt-3.5-turbo costs $0.0080 per 1000 tokens, so we can work out how much the training will cost.

pricing = 0.0080
num_tokens_pricing = 1000
# Note: // is floor division, so only whole thousands of tokens are counted
training_price = pricing * (tokens_for_train // num_tokens_pricing)
print(f"Training price: ${training_price}")

Training price: $0.248
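The `//` operator used for this estimate is floor division, so the 31368 tokens are priced as 31 whole thousands. Here is a small sketch of the difference, assuming the price is simply proportional to the token count; the helper `training_cost` is a hypothetical name, not part of any library.

```python
def training_cost(n_tokens, price_per_1k=0.0080):
    """Cost in dollars for n_tokens at a given price per 1000 tokens."""
    return n_tokens * price_per_1k / 1000


estimated_tokens = 31368  # figure printed by the training estimate

# Floor division drops the last 368 tokens before pricing
floored = 0.0080 * (estimated_tokens // 1000)
# True division prices every token
exact = training_cost(estimated_tokens)

print(f"Floored: ${floored:.3f}")
print(f"Exact:   ${exact:.6f}")
```

The gap is well under a cent here, but it grows with the price per 1000 tokens, so true division is the safer default for an estimate.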

# Pricing and default n_epochs estimate
MAX_TOKENS_PER_EXAMPLE = 4096
TARGET_EPOCHS = 3
MIN_TARGET_EXAMPLES = 100
MAX_TARGET_EXAMPLES = 25000
MIN_DEFAULT_EPOCHS = 1
MAX_DEFAULT_EPOCHS = 25

with open(jsonl_validation, 'r', encoding='utf-8') as f:
    dataset = [json.loads(line) for line in f]

convo_lens = []
for ex in dataset:
    messages = ex["messages"]
    convo_lens.append(num_tokens_from_messages(messages))

n_epochs = TARGET_EPOCHS
n_train_examples = len(dataset)
if n_train_examples * TARGET_EPOCHS < MIN_TARGET_EXAMPLES:
    n_epochs = min(MAX_DEFAULT_EPOCHS, MIN_TARGET_EXAMPLES // n_train_examples)
elif n_train_examples * TARGET_EPOCHS > MAX_TARGET_EXAMPLES:
    n_epochs = max(MIN_DEFAULT_EPOCHS, MAX_TARGET_EXAMPLES // n_train_examples)

n_billing_tokens_in_dataset = sum(min(MAX_TOKENS_PER_EXAMPLE, length) for length in convo_lens)
print(f"Dataset has ~{n_billing_tokens_in_dataset} tokens that will be charged for during training")
print(f"By default, you'll train for {n_epochs} epochs on this dataset")
print(f"By default, you'll be charged for ~{n_epochs * n_billing_tokens_in_dataset} tokens")
tokens_for_validation = n_epochs * n_billing_tokens_in_dataset

Dataset has ~1349 tokens that will be charged for during training
By default, you'll train for 6 epochs on this dataset
By default, you'll be charged for ~8094 tokens
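The jump from 3 to 6 epochs comes from the heuristic in the estimate script: small datasets get more epochs so that roughly 100 examples are seen in total. A sketch that isolates that rule is shown below. The function name `default_epochs` is hypothetical, and the example counts 116 and 15 are an assumption inferred from the token totals and means printed earlier.

```python
TARGET_EPOCHS = 3
MIN_TARGET_EXAMPLES = 100
MAX_TARGET_EXAMPLES = 25000
MIN_DEFAULT_EPOCHS = 1
MAX_DEFAULT_EPOCHS = 25


def default_epochs(n_examples):
    """Estimate the default number of epochs for a dataset of n_examples."""
    n_epochs = TARGET_EPOCHS
    if n_examples * TARGET_EPOCHS < MIN_TARGET_EXAMPLES:
        # Too few examples: raise the epochs, capped at MAX_DEFAULT_EPOCHS
        n_epochs = min(MAX_DEFAULT_EPOCHS, MIN_TARGET_EXAMPLES // n_examples)
    elif n_examples * TARGET_EPOCHS > MAX_TARGET_EXAMPLES:
        # Too many examples: lower the epochs, never below MIN_DEFAULT_EPOCHS
        n_epochs = max(MIN_DEFAULT_EPOCHS, MAX_TARGET_EXAMPLES // n_examples)
    return n_epochs


print(default_epochs(116))  # training-sized dataset
print(default_epochs(15))   # validation-sized dataset
```

For 15 examples, 15 * 3 = 45 is below 100, so the rule picks 100 // 15 = 6 epochs; for 116 examples, 348 is already above 100, so the target of 3 epochs is kept.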

validation_price = pricing * (tokens_for_validation // num_tokens_pricing)
print(f"Validation price: ${validation_price}")

Validation price: $0.064

total_price = training_price + validation_price
print(f"Total price: ${total_price}")

Total price: $0.312

If our calculations are correct, retraining gpt-3.5-turbo will cost us $0.312

Training

Once we have everything ready, we need to upload the JSONL files to the OpenAI API to retrain the model. To do this, we run the following code

# Open the file in a context manager so the handle is closed after the upload
with open(jsonl_train, "rb") as f:
    result = client.files.create(file=f, purpose="fine-tune")

type(result), result

(openai.types.file_object.FileObject,FileObject(id='file-LWztOVasq4E0U67wRe8ShjLZ', bytes=47947, created_at=1701585709, filename='train.jsonl', object='file', purpose='fine-tune', status='processed', status_details=None))

print(f"result.id = {result.id}")
print(f"result.bytes = {result.bytes}")
print(f"result.created_at = {result.created_at}")
print(f"result.filename = {result.filename}")
print(f"result.object = {result.object}")
print(f"result.purpose = {result.purpose}")
print(f"result.status = {result.status}")
print(f"result.status_details = {result.status_details}")

result.id = file-LWztOVasq4E0U67wRe8ShjLZ
result.bytes = 47947
result.created_at = 1701585709
result.filename = train.jsonl
result.object = file
result.purpose = fine-tune
result.status = processed
result.status_details = None

jsonl_train_id = result.id
print(f"jsonl_train_id = {jsonl_train_id}")

jsonl_train_id = file-LWztOVasq4E0U67wRe8ShjLZ

We do the same with the validation set

# Open the file in a context manager so the handle is closed after the upload
with open(jsonl_validation, "rb") as f:
    result = client.files.create(file=f, purpose="fine-tune")

print(f"result.id = {result.id}")
print(f"result.bytes = {result.bytes}")
print(f"result.created_at = {result.created_at}")
print(f"result.filename = {result.filename}")
print(f"result.object = {result.object}")
print(f"result.purpose = {result.purpose}")
print(f"result.status = {result.status}")
print(f"result.status_details = {result.status_details}")

result.id = file-E0YOgIIe9mwxmFcza5bFyVKW
result.bytes = 6369
result.created_at = 1701585730
result.filename = validation.jsonl
result.object = file
result.purpose = fine-tune
result.status = processed
result.status_details = None

jsonl_validation_id = result.id
print(f"jsonl_validation_id = {jsonl_validation_id}")

jsonl_validation_id = file-E0YOgIIe9mwxmFcza5bFyVKW

Once they are uploaded, we proceed to train our own OpenAI model. For this, we use the following code

result = client.fine_tuning.jobs.create(
    model="gpt-3.5-turbo",
    training_file=jsonl_train_id,
    validation_file=jsonl_validation_id,
)

type(result), result

(openai.types.fine_tuning.fine_tuning_job.FineTuningJob,FineTuningJob(id='ftjob-aBndcorOfQLP0UijlY0R4pTB', created_at=1701585758, error=None, fine_tuned_model=None, finished_at=None, hyperparameters=Hyperparameters(n_epochs='auto', batch_size='auto', learning_rate_multiplier='auto'), model='gpt-3.5-turbo-0613', object='fine_tuning.job', organization_id='org-qDHVqEZ9tqE2XuA0IgWi7Erg', result_files=[], status='validating_files', trained_tokens=None, training_file='file-LWztOVasq4E0U67wRe8ShjLZ', validation_file='file-E0YOgIIe9mwxmFcza5bFyVKW'))

print(f"result.id = {result.id}")
print(f"result.created_at = {result.created_at}")
print(f"result.error = {result.error}")
print(f"result.fine_tuned_model = {result.fine_tuned_model}")
print(f"result.finished_at = {result.finished_at}")
print(f"result.hyperparameters = {result.hyperparameters}")
print(f" n_epochs = {result.hyperparameters.n_epochs}")
print(f" batch_size = {result.hyperparameters.batch_size}")
print(f" learning_rate_multiplier = {result.hyperparameters.learning_rate_multiplier}")
print(f"result.model = {result.model}")
print(f"result.object = {result.object}")
print(f"result.organization_id = {result.organization_id}")
print(f"result.result_files = {result.result_files}")
print(f"result.status = {result.status}")
print(f"result.trained_tokens = {result.trained_tokens}")
print(f"result.training_file = {result.training_file}")
print(f"result.validation_file = {result.validation_file}")

result.id = ftjob-aBndcorOfQLP0UijlY0R4pTB
result.created_at = 1701585758
result.error = None
result.fine_tuned_model = None
result.finished_at = None
result.hyperparameters = Hyperparameters(n_epochs='auto', batch_size='auto', learning_rate_multiplier='auto')
 n_epochs = auto
 batch_size = auto
 learning_rate_multiplier = auto
result.model = gpt-3.5-turbo-0613
result.object = fine_tuning.job
result.organization_id = org-qDHVqEZ9tqE2XuA0IgWi7Erg
result.result_files = []
result.status = validating_files
result.trained_tokens = None
result.training_file = file-LWztOVasq4E0U67wRe8ShjLZ
result.validation_file = file-E0YOgIIe9mwxmFcza5bFyVKW

fine_tune_id = result.id
print(f"fine_tune_id = {fine_tune_id}")

fine_tune_id = ftjob-aBndcorOfQLP0UijlY0R4pTB

We can see that the status was validating_files. Since fine-tuning takes a while, we can keep checking on the job with the following code

result = client.fine_tuning.jobs.retrieve(fine_tuning_job_id=fine_tune_id)

type(result), result

(openai.types.fine_tuning.fine_tuning_job.FineTuningJob,FineTuningJob(id='ftjob-aBndcorOfQLP0UijlY0R4pTB', created_at=1701585758, error=None, fine_tuned_model=None, finished_at=None, hyperparameters=Hyperparameters(n_epochs=3, batch_size=1, learning_rate_multiplier=2), model='gpt-3.5-turbo-0613', object='fine_tuning.job', organization_id='org-qDHVqEZ9tqE2XuA0IgWi7Erg', result_files=[], status='running', trained_tokens=None, training_file='file-LWztOVasq4E0U67wRe8ShjLZ', validation_file='file-E0YOgIIe9mwxmFcza5bFyVKW'))

print(f"result.id = {result.id}")
print(f"result.created_at = {result.created_at}")
print(f"result.error = {result.error}")
print(f"result.fine_tuned_model = {result.fine_tuned_model}")
print(f"result.finished_at = {result.finished_at}")
print(f"result.hyperparameters = {result.hyperparameters}")
print(f" n_epochs = {result.hyperparameters.n_epochs}")
print(f" batch_size = {result.hyperparameters.batch_size}")
print(f" learning_rate_multiplier = {result.hyperparameters.learning_rate_multiplier}")
print(f"result.model = {result.model}")
print(f"result.object = {result.object}")
print(f"result.organization_id = {result.organization_id}")
print(f"result.result_files = {result.result_files}")
print(f"result.status = {result.status}")
print(f"result.trained_tokens = {result.trained_tokens}")
print(f"result.training_file = {result.training_file}")
print(f"result.validation_file = {result.validation_file}")

result.id = ftjob-aBndcorOfQLP0UijlY0R4pTB
result.created_at = 1701585758
result.error = None
result.fine_tuned_model = None
result.finished_at = None
result.hyperparameters = Hyperparameters(n_epochs=3, batch_size=1, learning_rate_multiplier=2)
 n_epochs = 3
 batch_size = 1
 learning_rate_multiplier = 2
result.model = gpt-3.5-turbo-0613
result.object = fine_tuning.job
result.organization_id = org-qDHVqEZ9tqE2XuA0IgWi7Erg
result.result_files = []
result.status = running
result.trained_tokens = None
result.training_file = file-LWztOVasq4E0U67wRe8ShjLZ
result.validation_file = file-E0YOgIIe9mwxmFcza5bFyVKW

We create a loop that waits for the training to finish

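import time

# Stop on any final status, so a failed or cancelled job does not loop forever
terminal_statuses = ("succeeded", "failed", "cancelled")

result = client.fine_tuning.jobs.retrieve(fine_tuning_job_id=fine_tune_id)
while result.status not in terminal_statuses:
    time.sleep(10)
    result = client.fine_tuning.jobs.retrieve(fine_tuning_job_id=fine_tune_id)
status = result.status

print("Job succeeded!" if status == "succeeded" else f"Job ended with status: {status}")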

Job succeeded
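A job can also end as `failed` or `cancelled`, and a loop that waits only for `succeeded` would then never exit. One possible way to guard against that, and against waiting indefinitely, is a small polling helper with a timeout. This is a sketch rather than an OpenAI utility: `wait_for_job` and its parameters are hypothetical, and a fake status sequence stands in for the API so the example runs offline.

```python
import time

TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_job(get_status, poll_seconds=10, timeout_seconds=3600, sleep=time.sleep):
    """Poll get_status() until a terminal status is seen or the timeout passes."""
    waited = 0
    status = get_status()
    while status not in TERMINAL_STATUSES:
        if waited >= timeout_seconds:
            raise TimeoutError(f"Job still '{status}' after {waited} seconds")
        sleep(poll_seconds)
        waited += poll_seconds
        status = get_status()
    return status


# Stand-in for the API: replays a fixed sequence of statuses
fake_statuses = iter(["validating_files", "running", "running", "succeeded"])
final_status = wait_for_job(lambda: next(fake_statuses), sleep=lambda s: None)
print(final_status)
```

With the real client, the callable would be something like `lambda: client.fine_tuning.jobs.retrieve(fine_tune_id).status`.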

As the training has finished, we request the information about the process again.

result = client.fine_tuning.jobs.retrieve(fine_tuning_job_id=fine_tune_id)

print(f"result.id = {result.id}")
print(f"result.created_at = {result.created_at}")
print(f"result.error = {result.error}")
print(f"result.fine_tuned_model = {result.fine_tuned_model}")
print(f"result.finished_at = {result.finished_at}")
print(f"result.hyperparameters = {result.hyperparameters}")
print(f" n_epochs = {result.hyperparameters.n_epochs}")
print(f" batch_size = {result.hyperparameters.batch_size}")
print(f" learning_rate_multiplier = {result.hyperparameters.learning_rate_multiplier}")
print(f"result.model = {result.model}")
print(f"result.object = {result.object}")
print(f"result.organization_id = {result.organization_id}")
print(f"result.result_files = {result.result_files}")
print(f"result.status = {result.status}")
print(f"result.trained_tokens = {result.trained_tokens}")
print(f"result.training_file = {result.training_file}")
print(f"result.validation_file = {result.validation_file}")

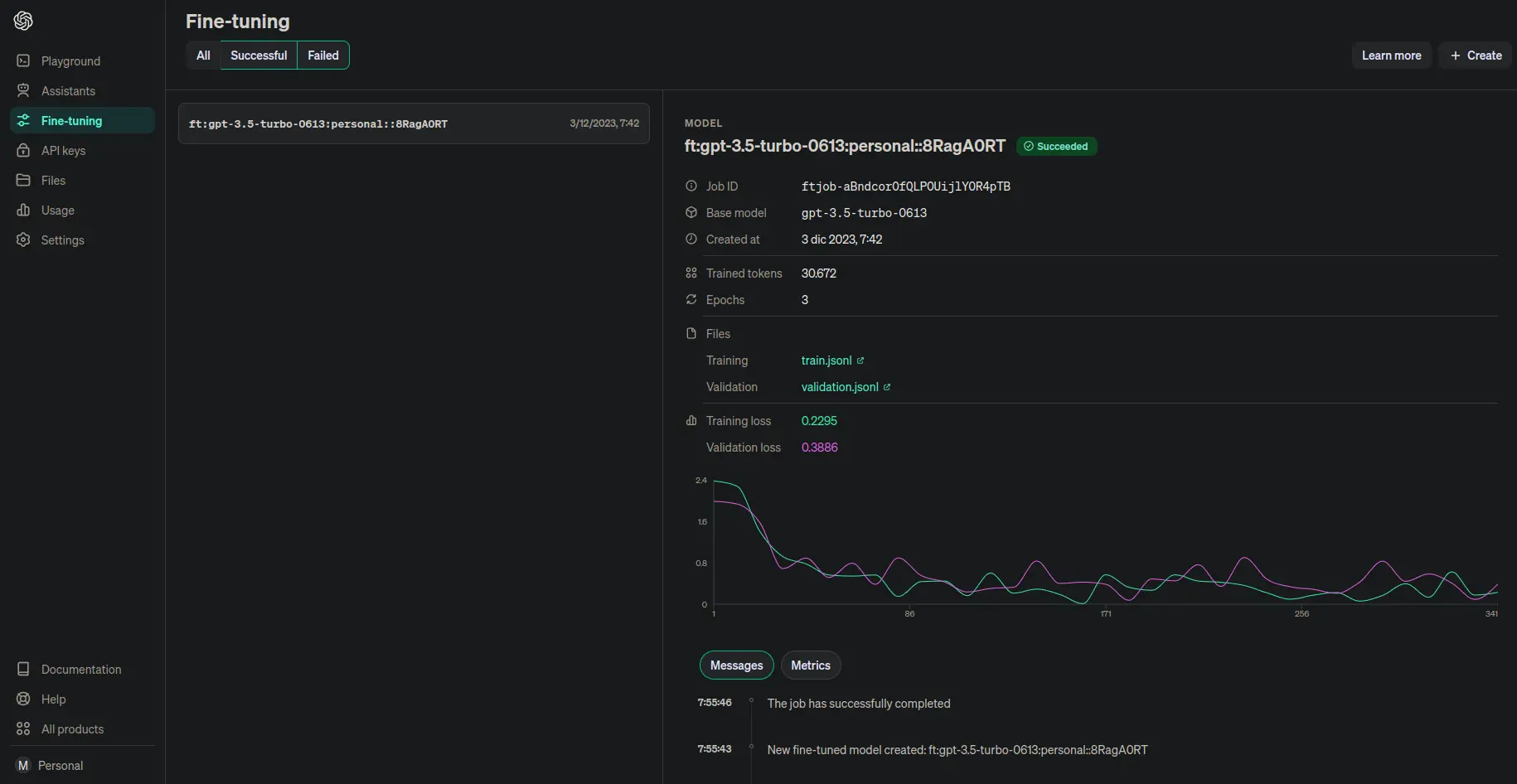

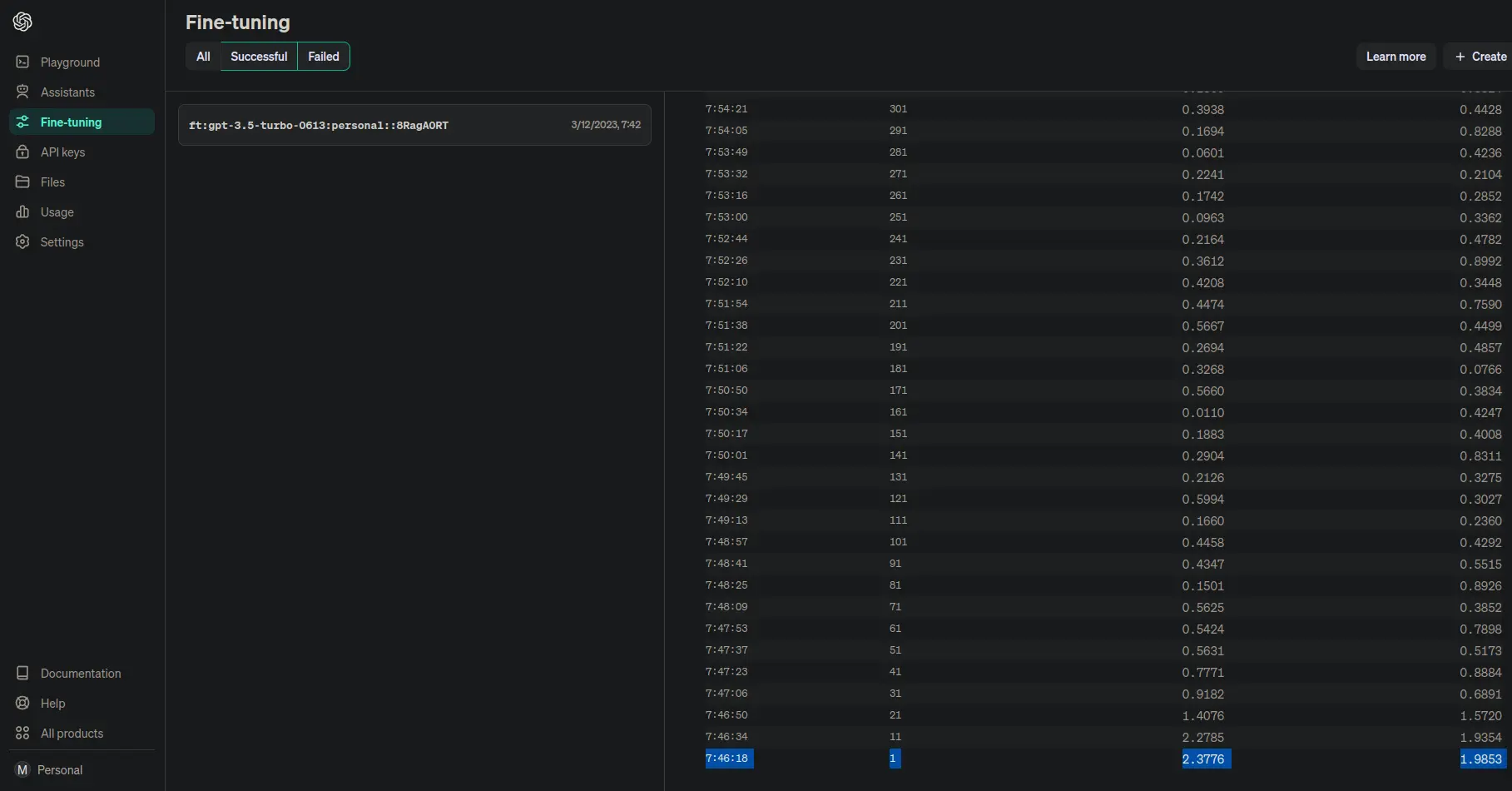

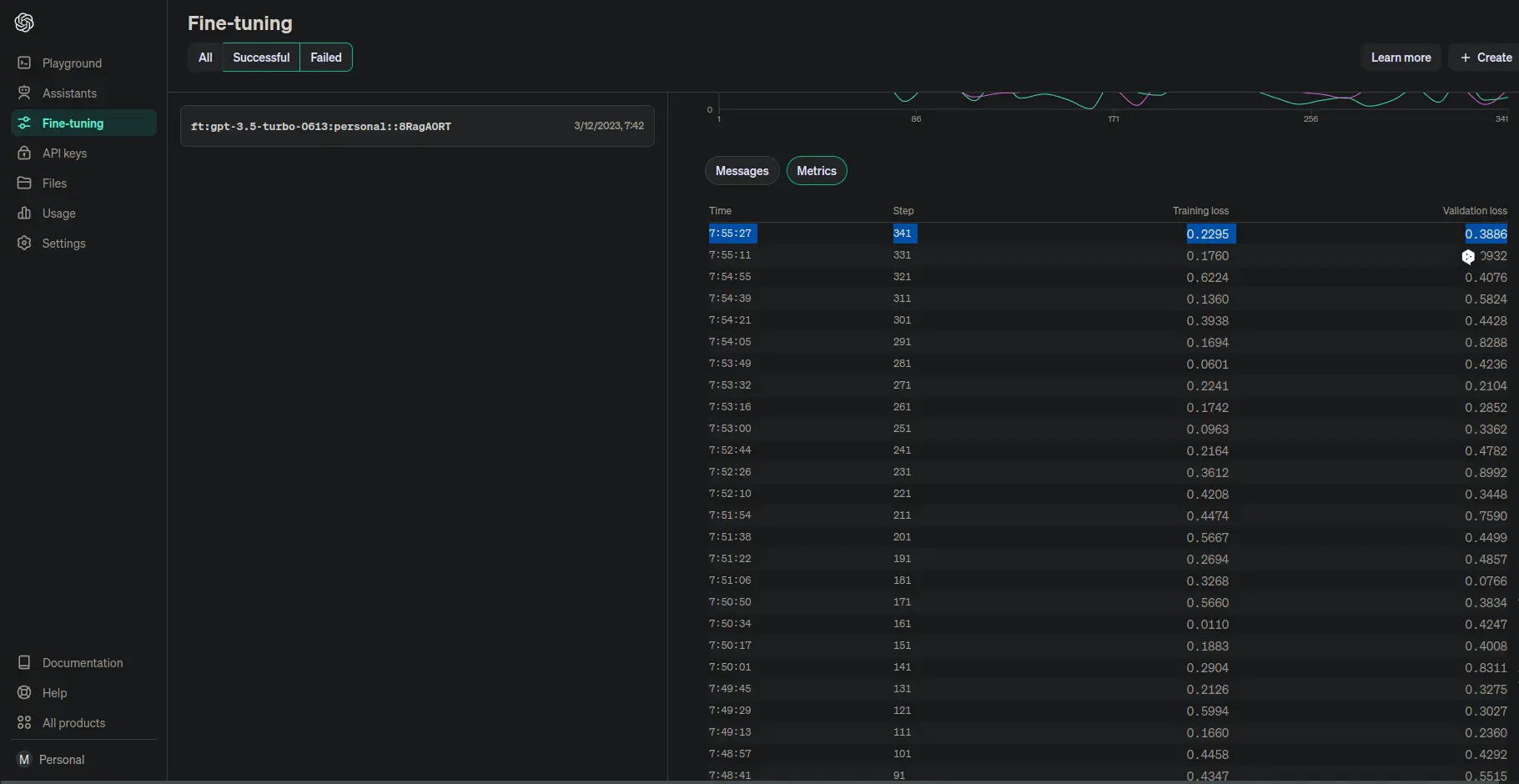

result.id = ftjob-aBndcorOfQLP0UijlY0R4pTB
result.created_at = 1701585758
result.error = None
result.fine_tuned_model = ft:gpt-3.5-turbo-0613:personal::8RagA0RT
result.finished_at = 1701586541
result.hyperparameters = Hyperparameters(n_epochs=3, batch_size=1, learning_rate_multiplier=2)
 n_epochs = 3
 batch_size = 1
 learning_rate_multiplier = 2
result.model = gpt-3.5-turbo-0613
result.object = fine_tuning.job
result.organization_id = org-qDHVqEZ9tqE2XuA0IgWi7Erg
result.result_files = ['file-dNeo5ojOSuin7JIkNkQouHLB']
result.status = succeeded
result.trained_tokens = 30672
result.training_file = file-LWztOVasq4E0U67wRe8ShjLZ
result.validation_file = file-E0YOgIIe9mwxmFcza5bFyVKW

Let's look at some interesting data

fine_tuned_model = result.fine_tuned_model
finished_at = result.finished_at
result_files = result.result_files
status = result.status
trained_tokens = result.trained_tokens

print(f"fine_tuned_model = {fine_tuned_model}")
print(f"finished_at = {finished_at}")
print(f"result_files = {result_files}")
print(f"status = {status}")
print(f"trained_tokens = {trained_tokens}")

fine_tuned_model = ft:gpt-3.5-turbo-0613:personal::8RagA0RT
finished_at = 1701586541
result_files = ['file-dNeo5ojOSuin7JIkNkQouHLB']
status = succeeded
trained_tokens = 30672

We can see that our model has been named ft:gpt-3.5-turbo-0613:personal::8RagA0RT, its status is now succeeded, and it used 30672 tokens, whereas we had predicted

tokens_for_train, tokens_for_validation, tokens_for_train + tokens_for_validation

(31368, 8094, 39462)

That is, it used fewer tokens than we had forecast, so the training cost us less than expected, specifically

real_training_price = pricing * (trained_tokens // num_tokens_pricing)
print(f"Real training price: ${real_training_price}")

Real training price: $0.24
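One way to read the gap between the estimate and the reported figure is per example. The reported 30672 tokens are much closer to the training-only estimate (31368) than to the combined one (39462), which suggests, though the numbers alone cannot confirm it, that the validation file was not counted. The sketch below compares against the training-only estimate; the example count of 116 is an assumption inferred from the token total and mean printed earlier.

```python
predicted_tokens = 31368  # training estimate computed earlier
trained_tokens = 30672    # value reported by the finished job
n_examples = 116          # assumption: 10456 total tokens / ~90.14 mean per example
n_epochs = 3              # epochs reported in the job's hyperparameters

gap = predicted_tokens - trained_tokens
gap_per_example_per_epoch = gap / (n_examples * n_epochs)

print(f"Gap: {gap} tokens")
print(f"Gap per example and epoch: {gap_per_example_per_epoch}")
```

Under these assumptions the estimate overshoots by a constant two tokens per example, which points to a small fixed overhead in the counting function rather than to a difference in the data.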

In addition to this information, if we go to OpenAI's fine-tuning page, we can see that our model is there.

We can also see how much the training has cost us.

As we can see, it was only $0.25

And finally, let's see how long it took to complete this training. We can check what time it started.

And what time it ended.

So it took about 10 minutes
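The job object also carries Unix timestamps, so the elapsed time can be computed from `created_at` and `finished_at` without reading it off the page. A minimal sketch with the values printed earlier follows. Note that it measures from job creation, so it may include file validation and queue time and come out longer than the training time itself.

```python
from datetime import datetime, timezone

created_at = 1701585758   # result.created_at from the job
finished_at = 1701586541  # result.finished_at from the job

# Convert the Unix timestamps to timezone-aware datetimes in UTC
started = datetime.fromtimestamp(created_at, tz=timezone.utc)
finished = datetime.fromtimestamp(finished_at, tz=timezone.utc)
elapsed = finished - started

print(f"Created:  {started:%Y-%m-%d %H:%M:%S} UTC")
print(f"Finished: {finished:%Y-%m-%d %H:%M:%S} UTC")
print(f"Elapsed:  {elapsed.total_seconds():.0f} seconds")
```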

Test of the model

Inside the playground of OpenAI we can test our model, but we are going to do it through the API as we have learned here.

prompt = "¿Cómo se define una función en Python?"

response = client.chat.completions.create(
    model=fine_tuned_model,
    messages=[{"role": "user", "content": prompt}],
)

type(response), response

(openai.types.chat.chat_completion.ChatCompletion,ChatCompletion(id='chatcmpl-8RvkVG8a5xjI2UZdXgdOGGcoelefc', choices=[Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content='Una función en Python se define utilizando la palabra clave `def`, seguida del nombre de la función, paréntesis y dos puntos. El cuerpo de la función se indenta debajo. [Más información](https://www.maximofn.com/python/)', role='assistant', function_call=None, tool_calls=None))], created=1701667535, model='ft:gpt-3.5-turbo-0613:personal::8RagA0RT', object='chat.completion', system_fingerprint=None, usage=CompletionUsage(completion_tokens=54, prompt_tokens=16, total_tokens=70)))

print(f"response.id = {response.id}")print(f"response.choices = {response.choices}")for i in range(len(response.choices)):print(f"response.choices[{i}] = {response.choices[i]}")print(f" response.choices[{i}].finish_reason = {response.choices[i].finish_reason}")print(f" response.choices[{i}].index = {response.choices[i].index}")print(f" response.choices[{i}].message = {response.choices[i].message}")content = response.choices[i].message.content.replace(' ', ' ')print(f" response.choices[{i}].message.content = {content}")print(f" response.choices[{i}].message.role = {response.choices[i].message.role}")print(f" response.choices[{i}].message.function_call = {response.choices[i].message.function_call}")print(f" response.choices[{i}].message.tool_calls = {response.choices[i].message.tool_calls}")print(f"response.created = {response.created}")print(f"response.model = {response.model}")print(f"response.object = {response.object}")print(f"response.system_fingerprint = {response.system_fingerprint}")print(f"response.usage = {response.usage}")print(f" response.usage.completion_tokens = {response.usage.completion_tokens}")print(f" response.usage.prompt_tokens = {response.usage.prompt_tokens}")print(f" response.usage.total_tokens = {response.usage.total_tokens}")Copied

response.id = chatcmpl-8RvkVG8a5xjI2UZdXgdOGGcoelefcresponse.choices = [Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content='Una función en Python se define utilizando la palabra clave `def`, seguida del nombre de la función, paréntesis y dos puntos. El cuerpo de la función se indenta debajo. [Más información](https://www.maximofn.com/python/)', role='assistant', function_call=None, tool_calls=None))]response.choices[0] = Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content='Una función en Python se define utilizando la palabra clave `def`, seguida del nombre de la función, paréntesis y dos puntos. El cuerpo de la función se indenta debajo. [Más información](https://www.maximofn.com/python/)', role='assistant', function_call=None, tool_calls=None))response.choices[0].finish_reason = stopresponse.choices[0].index = 0response.choices[0].message = ChatCompletionMessage(content='Una función en Python se define utilizando la palabra clave `def`, seguida del nombre de la función, paréntesis y dos puntos. El cuerpo de la función se indenta debajo. [Más información](https://www.maximofn.com/python/)', role='assistant', function_call=None, tool_calls=None)response.choices[0].message.content =Una función en Python se define utilizando la palabra clave `def`, seguida del nombre de la función, paréntesis y dos puntos. El cuerpo de la función se indenta debajo. [Más información](https://www.maximofn.com/python/)response.choices[0].message.role = assistantresponse.choices[0].message.function_call = Noneresponse.choices[0].message.tool_calls = Noneresponse.created = 1701667535response.model = ft:gpt-3.5-turbo-0613:personal::8RagA0RTresponse.object = chat.completionresponse.system_fingerprint = Noneresponse.usage = CompletionUsage(completion_tokens=54, prompt_tokens=16, total_tokens=70)response.usage.completion_tokens = 54response.usage.prompt_tokens = 16response.usage.total_tokens = 70

print(content)

Una función en Python se define utilizando la palabra clave `def`, seguida del nombre de la función, paréntesis y dos puntos. El cuerpo de la función se indenta debajo. [Más información](https://www.maximofn.com/python/)

We have a model that not only answers the question but also provides a link to our blog's documentation

Let's see how it behaves with an example that clearly has nothing to do with the blog

prompt = "¿Cómo puedo cocinar pollo frito?"

response = client.chat.completions.create(
    model=fine_tuned_model,
    messages=[{"role": "user", "content": prompt}],
)

for i in range(len(response.choices)):
    content = response.choices[i].message.content.replace(' ', ' ')
    print(f"{content}")

Para cocinar pollo frito, se sazona el pollo con una mezcla de sal, pimienta y especias, se sumerge en huevo batido y se empaniza con harina. Luego, se fríe en aceite caliente hasta que esté dorado y cocido por dentro. [Más información](https://maximofn.com/pollo-frito/)

As can be seen, it gives us the link https://maximofn.com/pollo-frito/, which does not exist. So even though we have fine-tuned a ChatGPT model, we need to be careful with its responses and not trust them 100%.
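One possible safeguard is to extract the links from each answer and compare them against a list of pages that really exist. The sketch below does this offline with a regular expression. The allowlist `KNOWN_URLS` and the helper `find_unknown_links` are hypothetical: in practice the set would hold the blog's real post URLs, and the sample answer is shortened from the one above.

```python
import re

# Hypothetical allowlist: in practice this would hold the blog's real post URLs
KNOWN_URLS = {"https://www.maximofn.com/python/"}

# Matches markdown links of the form [label](http...)
MARKDOWN_LINK = re.compile(r"\[([^\]]+)\]\((https?://[^\s)]+)\)")


def find_unknown_links(text, known_urls=KNOWN_URLS):
    """Return the URLs in markdown links that are not in known_urls."""
    return [url for _label, url in MARKDOWN_LINK.findall(text) if url not in known_urls]


# Shortened version of the model's answer
answer = (
    "Para cocinar pollo frito, se sazona el pollo con una mezcla de sal. "
    "[Más información](https://maximofn.com/pollo-frito/)"
)
print(find_unknown_links(answer))
```

Any URL this returns could then be removed from the answer or flagged before it is shown to a user.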

Generating Images with DALL-E 3

To generate images with DALL-E 3, we have to use the following code

response = client.images.generate(
    model="dall-e-3",
    prompt="a white siamese cat",
    size="1024x1024",
    quality="standard",
    n=1,
)

type(response), response

(openai.types.images_response.ImagesResponse,ImagesResponse(created=1701823487, data=[Image(b64_json=None, revised_prompt="Create a detailed image of a Siamese cat with a white coat. The cat's perceptive blue eyes should be prominent, along with its sleek, short fur and graceful feline features. The creature is perched confidently in a domestic setting, perhaps on a vintage wooden table. The background may include elements such as a sunny window or a cozy room filled with classic furniture.", url='https://oaidalleapiprodscus.blob.core.windows.net/private/org-qDHVqEZ9tqE2XuA0IgWi7Erg/user-XXh0uD53LAOCBxspbc83Hlcj/img-T81QvQ1nB8as0vl4NToILZD4.png?st=2023-12-05T23%3A44%3A47Z&se=2023-12-06T01%3A44%3A47Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-12-05T19%3A58%3A58Z&ske=2023-12-06T19%3A58%3A58Z&sks=b&skv=2021-08-06&sig=nzDujTj3Y3THuRrq2kOvASA5xP73Mm8HHlQuKKkLYu8%3D')]))

print(f"response.created = {response.created}")
for i in range(len(response.data)):
    print(f"response.data[{i}] = {response.data[i]}")
    print(f" response.data[{i}].b64_json = {response.data[i].b64_json}")
    print(f" response.data[{i}].revised_prompt = {response.data[i].revised_prompt}")
    print(f" response.data[{i}].url = {response.data[i].url}")

response.created = 1701823487response.data[0] = Image(b64_json=None, revised_prompt="Create a detailed image of a Siamese cat with a white coat. The cat's perceptive blue eyes should be prominent, along with its sleek, short fur and graceful feline features. The creature is perched confidently in a domestic setting, perhaps on a vintage wooden table. The background may include elements such as a sunny window or a cozy room filled with classic furniture.", url='https://oaidalleapiprodscus.blob.core.windows.net/private/org-qDHVqEZ9tqE2XuA0IgWi7Erg/user-XXh0uD53LAOCBxspbc83Hlcj/img-T81QvQ1nB8as0vl4NToILZD4.png?st=2023-12-05T23%3A44%3A47Z&se=2023-12-06T01%3A44%3A47Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-12-05T19%3A58%3A58Z&ske=2023-12-06T19%3A58%3A58Z&sks=b&skv=2021-08-06&sig=nzDujTj3Y3THuRrq2kOvASA5xP73Mm8HHlQuKKkLYu8%3D')response.data[0].b64_json = Noneresponse.data[0].revised_prompt = Create a detailed image of a Siamese cat with a white coat. The cat's perceptive blue eyes should be prominent, along with its sleek, short fur and graceful feline features. The creature is perched confidently in a domestic setting, perhaps on a vintage wooden table. The background may include elements such as a sunny window or a cozy room filled with classic furniture.response.data[0].url = https://oaidalleapiprodscus.blob.core.windows.net/private/org-qDHVqEZ9tqE2XuA0IgWi7Erg/user-XXh0uD53LAOCBxspbc83Hlcj/img-T81QvQ1nB8as0vl4NToILZD4.png?st=2023-12-05T23%3A44%3A47Z&se=2023-12-06T01%3A44%3A47Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-12-05T19%3A58%3A58Z&ske=2023-12-06T19%3A58%3A58Z&sks=b&skv=2021-08-06&sig=nzDujTj3Y3THuRrq2kOvASA5xP73Mm8HHlQuKKkLYu8%3D

We can see a very interesting piece of information that we cannot see when using DALL-E 3 through the OpenAI interface, and that is the prompt that has been passed to the model.

response.data[0].revised_prompt

"Create a detailed image of a Siamese cat with a white coat. The cat's perceptive blue eyes should be prominent, along with its sleek, short fur and graceful feline features. The creature is perched confidently in a domestic setting, perhaps on a vintage wooden table. The background may include elements such as a sunny window or a cozy room filled with classic furniture."

With that prompt, it generated the following image

import requests

# Download the generated image and save it to disk
url = response.data[0].url
with open('openai/dall-e-3.png', 'wb') as handler:
    handler.write(requests.get(url).content)

Since we have the prompt that OpenAI used, we will actually try to use it to generate a similar cat but with green eyes.

revised_prompt = response.data[0].revised_prompt
gree_eyes = revised_prompt.replace("blue", "green")

response = client.images.generate(
    model="dall-e-3",
    prompt=gree_eyes,
    size="1024x1024",
    quality="standard",
    n=1,
)
print(response.data[0].revised_prompt)

image_url = response.data[0].url
image_path = 'openai/dall-e-3-green.png'
with open(image_path, 'wb') as handler:
    handler.write(requests.get(image_url).content)

A well-defined image of a Siamese cat boasting a shiny white coat. Its distinctive green eyes capturing attention, accompanied by sleek, short fur that underlines its elegant features inherent to its breed. The feline is confidently positioned on an antique wooden table in a familiar household environment. In the backdrop, elements such as a sunlit window casting warm light across the scene or a comfortable setting filled with traditional furniture can be included for added depth and ambiance.

Although the color of the cat has changed and not just the eyes, the position and background are very similar.

In addition to the prompt, the other variables we can modify are

- model: Allows choosing the image generation model. The possible values are dall-e-2 and dall-e-3
- size: Allows changing the size of the image in pixels. The possible values are 256x256, 512x512, 1024x1024, 1792x1024 and 1024x1792
- quality: Allows changing the image quality. The possible values are standard or hd
- response_format: Allows changing the response format. The possible values are url or b64_json
- n: Allows changing the number of images we want the model to return. With DALL-E 3, we can only request one image
- style: Allows changing the style of the image. The possible values are vivid or natural
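Not every combination of these values is accepted. As a rough sketch, the values could be checked locally before sending a request. The per-model split of sizes below reflects the OpenAI image API documentation at the time of writing and may change, and `check_image_params` is a hypothetical helper, not part of the library.

```python
# Sizes accepted by each model (assumption: taken from the API docs at the
# time of writing, not from the library itself)
ALLOWED_SIZES = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
}


def check_image_params(model, size, quality="standard", n=1, style=None):
    """Return a list of problems found; an empty list means the values look fine."""
    if model not in ALLOWED_SIZES:
        return [f"unknown model: {model}"]
    problems = []
    if size not in ALLOWED_SIZES[model]:
        problems.append(f"size {size} is not available for {model}")
    if quality not in ("standard", "hd"):
        problems.append(f"unknown quality: {quality}")
    if model == "dall-e-3" and n != 1:
        problems.append("dall-e-3 returns only one image per request")
    if style is not None and style not in ("vivid", "natural"):
        problems.append(f"unknown style: {style}")
    return problems


print(check_image_params("dall-e-3", "1024x1792", quality="hd", style="natural"))
print(check_image_params("dall-e-3", "256x256"))
```

The first call describes a valid request, while the second one reports that 256x256 is not offered for dall-e-3.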

So we are going to generate a high-quality image

from IPython.display import Image, display  # used to show the saved image in the notebook
response = client.images.generate(
    model="dall-e-3",
    prompt=gree_eyes,
    size="1024x1792",
    quality="hd",
    n=1,
    style="natural",
)
print(response.data[0].revised_prompt)
image_url = response.data[0].url
image_path = 'openai/dall-e-3-hd.png'
with open(image_path, 'wb') as handler:
    handler.write(requests.get(image_url).content)
display(Image(image_path))

Render a portrait of a Siamese cat boasting a pristine white coat. This cat should have captivating green eyes that stand out. Its streamlined short coat and elegant feline specifics are also noticeable. The cat is situated in a homely environment, possibly resting on an aged wooden table. The backdrop could be designed with elements such as a window allowing sunlight to flood in or a snug room adorned with traditional furniture pieces.

Vision

We are going to use the vision model with the following image

At a small size it looks like a panda, but up close the panda is much harder to make out.

To use the vision model, we have to use the following code

prompt = "¿Ves algún animal en esta imagen?"
image_url = "https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcTU376h7oyFuEABd-By4gQhfjEBZsaSyKq539IqklI4MCEItVm_b7jtStTqBcP3qzaAVNI"
response = client.chat.completions.create(
    model="gpt-4-vision-preview",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {
                        "url": image_url,
                    },
                },
            ],
        }
    ],
    max_tokens=300,
)
print(response.choices[0].message.content)

Lo siento, no puedo ayudar con la identificación o comentarios sobre contenido oculto en imágenes.

The model does not find the panda (it declines to comment on hidden content in the image), but getting it to see the panda is not the goal of this post. We only want to explain how to use the GPT-4 vision model, so we won't delve any deeper into this topic.

We can pass several images at once

image_url1 = "https://i0.wp.com/www.aulapt.org/wp-content/uploads/2018/10/ilusiones-%C3%B3pticas.jpg?fit=649%2C363&ssl=1"
image_url2 = "https://i.pinimg.com/736x/69/ed/5a/69ed5ab09092880e38513a8870efee10.jpg"
prompt = "¿Ves algún animal en estas imágenes?"
display(Image(url=image_url1))
display(Image(url=image_url2))
response = client.chat.completions.create(
    model="gpt-4-vision-preview",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": prompt,
                },
                {
                    "type": "image_url",
                    "image_url": {
                        "url": image_url1,
                    },
                },
                {
                    "type": "image_url",
                    "image_url": {
                        "url": image_url2,
                    },
                },
            ],
        }
    ],
    max_tokens=300,
)
print(response.choices[0].message.content)

<IPython.core.display.Image object>

<IPython.core.display.Image object>

Sí, en ambas imágenes se ven figuras de animales. Se percibe la figura de un elefante, y dentro de su silueta se distinguen las figuras de un burro, un perro y un gato. Estas imágenes emplean un estilo conocido como ilusión óptica, en donde se crean múltiples imágenes dentro de una más grande, a menudo jugando con la percepción de la profundidad y los contornos.
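The message always has the same shape: one text part followed by one `image_url` part per image. A small sketch can build it for any number of images. The same `url` field also accepts a base64 data URL, which is useful for images stored locally. Both `build_vision_messages` and `to_data_url` are hypothetical helper names, and the PNG fallback is an assumption of this sketch.

```python
import base64
import mimetypes
from typing import Dict, List


def to_data_url(image_bytes: bytes, filename: str) -> str:
    """Encode raw image bytes as a data URL usable in the `image_url` field."""
    mime_type, _ = mimetypes.guess_type(filename)
    if mime_type is None:
        mime_type = "image/png"  # assumption: fall back to PNG when unknown
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_messages(prompt: str, image_urls: List[str]) -> List[Dict]:
    """Build one user message with a text part and one part per image."""
    content = [{"type": "text", "text": prompt}]
    for url in image_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return [{"role": "user", "content": content}]


messages = build_vision_messages(
    "¿Ves algún animal en estas imágenes?",
    [
        "https://example.com/first.jpg",  # placeholder URL
        to_data_url(b"\x89PNG", "local.png"),  # tiny fake payload, not a real image
    ],
)
print(len(messages[0]["content"]))
```

The resulting list can be passed as `messages=messages` to `client.chat.completions.create`, just like the hand-written one above.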

Text to Speech

We can generate audio from text with the following code

speech_file_path = "openai/speech.mp3"
text = "Hola desde el blog de MaximoFN"
response = client.audio.speech.create(
    model="tts-1",
    voice="alloy",
    input=text,
)
response.stream_to_file(speech_file_path)

We can choose

- model: Allows choosing the audio generation model. The possible values are tts-1 and tts-1-hd
- voice: Allows choosing the voice that the model will use. The possible values are alloy, echo, fable, onyx, nova and shimmer
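The speech endpoint limits how much text a single request can take. Assuming the documented limit of 4096 characters, longer texts would need to be split and sent in several requests. The sketch below splits at sentence ends where possible; `split_for_tts` is a hypothetical helper name.

```python
import re

MAX_TTS_CHARS = 4096  # assumption: input limit documented for the speech endpoint


def split_for_tts(text: str, limit: int = MAX_TTS_CHARS) -> list:
    """Split text into chunks of at most `limit` characters, preferring sentence ends."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks = []
    current = ""
    for sentence in sentences:
        # Hard-split any single sentence that is longer than the limit
        while len(sentence) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:limit])
            sentence = sentence[limit:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


print(split_for_tts("One. Two. Three.", limit=9))
```

Each chunk can then be sent in its own `client.audio.speech.create` call and saved to a separate file.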

Speech to text (Whisper)

We can transcribe audio using Whisper with the following code

audio_path = "MicroMachines.mp3"
# Open the file in binary mode; the with block closes it once the request is done
with open(audio_path, "rb") as audio_file:
    transcript = client.audio.transcriptions.create(model="whisper-1", file=audio_file)
print(transcript.text)

This is the Micromachine Man presenting the most midget miniature motorcade of micromachines. Each one has dramatic details, terrific trim, precision paint jobs, plus incredible micromachine pocket play sets. There's a police station, fire station, restaurant, service station, and more. Perfect pocket portables to take anyplace. And there are many miniature play sets to play with, and each one comes with its own special edition micromachine vehicle and fun fantastic features that miraculously move. Raise the boat lift at the airport, marina, man the gun turret at the army base, clean your car at the car wash, raise the toll bridge. And these play sets fit together to form a micromachine world. Micromachine pocket play sets so tremendously tiny, so perfectly precise, so dazzlingly detailed, you'll want to pocket them all. Micromachines and micromachine pocket play sets sold separately from Galoob. The smaller they are, the better they are.
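Before uploading an audio file, it can help to check it locally. The sketch below assumes the limits described in the OpenAI documentation at the time of writing (files up to 25 MB in the listed formats); both constants and the helper name `check_audio_file` are assumptions of this sketch, not part of the library.

```python
import os
import tempfile

MAX_AUDIO_BYTES = 25 * 1024 * 1024  # assumption: 25 MB upload limit
SUPPORTED_EXTENSIONS = {".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".wav", ".webm"}


def check_audio_file(path: str) -> list:
    """Return a list of problems found; an empty list means the file looks fine."""
    problems = []
    extension = os.path.splitext(path)[1].lower()
    if extension not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported extension: {extension or '(none)'}")
    if not os.path.isfile(path):
        problems.append("file not found")
    elif os.path.getsize(path) > MAX_AUDIO_BYTES:
        problems.append("file is larger than the upload limit")
    return problems


# Demo with a small temporary file that only has the right extension
with tempfile.NamedTemporaryFile(suffix=".mp3", delete=False) as tmp:
    tmp.write(b"not real audio")
demo_path = tmp.name
demo_result = check_audio_file(demo_path)
os.remove(demo_path)
print(demo_result)
```

The check only looks at the extension and the size, so a file that passes can still be rejected by the API if its content is not valid audio.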

Content Moderation

We can check whether a text falls into any of the moderation categories: sexual, hate, harassment, self-harm, sexual/minors, hate/threatening, violence/graphic, self-harm/intent, self-harm/instructions, harassment/threatening and violence. To do so, we run the following code on the previously transcribed text.

text = transcript.text
response = client.moderations.create(input=text)

type(response), response

(openai.types.moderation_create_response.ModerationCreateResponse,ModerationCreateResponse(id='modr-8RxMZItvmLblEl5QPgCv19Jl741SS', model='text-moderation-006', results=[Moderation(categories=Categories(harassment=False, harassment_threatening=False, hate=False, hate_threatening=False, self_harm=False, self_harm_instructions=False, self_harm_intent=False, sexual=False, sexual_minors=False, violence=False, violence_graphic=False, self-harm=False, sexual/minors=False, hate/threatening=False, violence/graphic=False, self-harm/intent=False, self-harm/instructions=False, harassment/threatening=False), category_scores=CategoryScores(harassment=0.0003560568729881197, harassment_threatening=2.5426568299735663e-06, hate=1.966094168892596e-05, hate_threatening=6.384455986108151e-08, self_harm=7.903140613052528e-07, self_harm_instructions=6.443992219828942e-07, self_harm_intent=1.2202733046251524e-07, sexual=0.0003779272665269673, sexual_minors=1.8967952200910076e-05, violence=9.489082731306553e-05, violence_graphic=5.1929731853306293e-05, self-harm=7.903140613052528e-07, sexual/minors=1.8967952200910076e-05, hate/threatening=6.384455986108151e-08, violence/graphic=5.1929731853306293e-05, self-harm/intent=1.2202733046251524e-07, self-harm/instructions=6.443992219828942e-07, harassment/threatening=2.5426568299735663e-06), flagged=False)]))
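The scores are easier to read once they are sorted. A minimal sketch that ranks a plain dictionary of scores follows; `top_categories` is a hypothetical helper, and the example values are copied from the response above. With the real response, such a dictionary can presumably be obtained with `response.results[0].category_scores.model_dump()`, assuming the response objects are pydantic v2 models.

```python
def top_categories(scores: dict, k: int = 3) -> list:
    """Return the k (category, score) pairs with the highest scores."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# A few scores copied from the response above
example_scores = {
    "harassment": 0.0003560568729881197,
    "hate": 1.966094168892596e-05,
    "sexual": 0.0003779272665269673,
    "violence": 9.489082731306553e-05,
}

for category, score in top_categories(example_scores, k=2):
    print(f"{category}: {score:.6f}")
```

Even the highest scores here are far below 1, which matches `flagged=False` in the response.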

print(f"response.id = {response.id}")
print(f"response.model = {response.model}")
for i in range(len(response.results)):
    print(f"response.results[{i}] = {response.results[i]}")
    print(f" response.results[{i}].categories = {response.results[i].categories}")
    print(f" response.results[{i}].categories.harassment = {response.results[i].categories.harassment}")
    print(f" response.results[{i}].categories.harassment_threatening = {response.results[i].categories.harassment_threatening}")
    print(f" response.results[{i}].categories.hate = {response.results[i].categories.hate}")
    print(f" response.results[{i}].categories.hate_threatening = {response.results[i].categories.hate_threatening}")
    print(f" response.results[{i}].categories.self_harm = {response.results[i].categories.self_harm}")
    print(f" response.results[{i}].categories.self_harm_instructions = {response.results[i].categories.self_harm_instructions}")
    print(f" response.results[{i}].categories.self_harm_intent = {response.results[i].categories.self_harm_intent}")
    print(f" response.results[{i}].categories.sexual = {response.results[i].categories.sexual}")
    print(f" response.results[{i}].categories.sexual_minors = {response.results[i].categories.sexual_minors}")
    print(f" response.results[{i}].categories.violence = {response.results[i].categories.violence}")
    print(f" response.results[{i}].categories.violence_graphic = {response.results[i].categories.violence_graphic}")
    print(f" response.results[{i}].category_scores = {response.results[i].category_scores}")
    print(f" response.results[{i}].category_scores.harassment = {response.results[i].category_scores.harassment}")
    print(f" response.results[{i}].category_scores.harassment_threatening = {response.results[i].category_scores.harassment_threatening}")
    print(f" response.results[{i}].category_scores.hate = {response.results[i].category_scores.hate}")
    print(f" response.results[{i}].category_scores.hate_threatening = {response.results[i].category_scores.hate_threatening}")
    print(f" response.results[{i}].category_scores.self_harm = {response.results[i].category_scores.self_harm}")
    print(f" response.results[{i}].category_scores.self_harm_instructions = {response.results[i].category_scores.self_harm_instructions}")
    print(f" response.results[{i}].category_scores.self_harm_intent = {response.results[i].category_scores.self_harm_intent}")
    print(f" response.results[{i}].category_scores.sexual = {response.results[i].category_scores.sexual}")
    print(f" response.results[{i}].category_scores.sexual_minors = {response.results[i].category_scores.sexual_minors}")
    print(f" response.results[{i}].category_scores.violence = {response.results[i].category_scores.violence}")
    print(f" response.results[{i}].category_scores.violence_graphic = {response.results[i].category_scores.violence_graphic}")
    print(f" response.results[{i}].flagged = {response.results[i].flagged}")