Tokens

Now that LLMs are on the rise, we keep hearing about the number of tokens each model supports, but what are tokens? They are the minimal units a model uses to represent text, which can be whole words or pieces of words.

This notebook has been automatically translated to make it accessible to more people. Please let me know if you spot any typos.

To explain what tokens are, let's start with a practical example using OpenAI's tokenizer, tiktoken.

So, first we install the package:

```bash
pip install tiktoken
```

Once it is installed, we create a tokenizer using the `cl100k_base` encoding which, according to OpenAI's example notebook *How to count tokens with tiktoken*, is the one used by the gpt-4, gpt-3.5-turbo and text-embedding-ada-002 models.

```python
import tiktoken

encoder = tiktoken.get_encoding("cl100k_base")
```

Now we create a sample word to tokenize:

```python
import tiktoken

encoder = tiktoken.get_encoding("cl100k_base")
example_word = "breakdown"
```

And we tokenize it

```python
import tiktoken

encoder = tiktoken.get_encoding("cl100k_base")
example_word = "breakdown"
tokens = encoder.encode(example_word)
tokens
```

[9137, 2996]

The word has been split into 2 tokens, 9137 and 2996. Let's see which pieces of text they correspond to:

```python
word1 = encoder.decode([tokens[0]])
word2 = encoder.decode([tokens[1]])
word1, word2
```

('break', 'down')
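Decoding is essentially a table lookup from token IDs back to text. The sketch below is a toy stand-in, not tiktoken's implementation: it assumes a hypothetical table containing only the two IDs seen above, mapped to byte pieces (a byte-level tokenizer stores bytes rather than characters), and shows that decoding the IDs one at a time and then decoding them together gives back the original word.

```python
# Hypothetical toy table: only the two IDs observed above, mapped to byte pieces.
# A real encoding such as cl100k_base has many thousands of entries.
TOY_VOCAB = {9137: b"break", 2996: b"down"}


def toy_decode(token_ids):
    """Concatenate the byte pieces for each ID and turn them into text."""
    raw = b"".join(TOY_VOCAB[token_id] for token_id in token_ids)
    # Invalid UTF-8 sequences become U+FFFD instead of raising an error
    return raw.decode("utf-8", errors="replace")


ids = [9137, 2996]
pieces = [toy_decode([token_id]) for token_id in ids]
print(pieces)           # ['break', 'down']
print(toy_decode(ids))  # breakdown
```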

The OpenAI tokenizer has split the word `breakdown` into `break` and `down`, that is, into two simpler words.
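tiktoken is a BPE (byte pair encoding) tokenizer: it starts from small units and repeatedly merges adjacent pairs according to a learned ranking. The following is a minimal sketch of that idea working on characters instead of bytes, with a hypothetical merge table invented so that `breakdown` ends up as `break` + `down`; the real cl100k_base merge table is far larger and its ranks are different.

```python
# Hypothetical merge ranks: a lower rank means the pair is merged earlier.
MERGE_RANKS = {
    ("b", "r"): 0,
    ("e", "a"): 1,
    ("br", "ea"): 2,
    ("brea", "k"): 3,
    ("d", "o"): 4,
    ("w", "n"): 5,
    ("do", "wn"): 6,
}


def bpe_encode(word, ranks):
    """Split word into characters, then repeatedly merge the best-ranked adjacent pair."""
    parts = list(word)
    while len(parts) > 1:
        best = None  # (rank, position) of the best mergeable pair found so far
        for i in range(len(parts) - 1):
            rank = ranks.get((parts[i], parts[i + 1]))
            if rank is not None and (best is None or rank < best[0]):
                best = (rank, i)
        if best is None:
            break  # no adjacent pair is in the merge table, so we are done
        _, i = best
        parts[i:i + 2] = [parts[i] + parts[i + 1]]
    return parts


print(bpe_encode("breakdown", MERGE_RANKS))  # ['break', 'down']
```

Because `("break", "down")` is not in the table, the process stops at two tokens, which mirrors the split tiktoken produced.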

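This is important because when we say that an LLM supports x tokens, it does not mean that it supports x words, but x tokens, that is, x minimal units of text representation. Since a single word can take more than one token, as `breakdown` does, the number of words that fit is usually lower than x.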
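To see why the limit is counted in tokens and not in words, this sketch counts both for the `breakdown` pieces obtained above and then applies a hypothetical limit of 1 token: a single word already exceeds it, and only `break` survives. The helper `truncate_to_budget` is made up for illustration, and hard truncation is just one possible policy, chosen here for simplicity.

```python
def truncate_to_budget(pieces, max_tokens):
    """Hypothetical helper: keep only the first max_tokens pieces, as a hard limit would."""
    return "".join(pieces[:max_tokens])


# Pieces copied from the tiktoken output for "breakdown" above
pieces = ["break", "down"]
text = "".join(pieces)

print(len(text.split()), "word(s),", len(pieces), "tokens")  # 1 word(s), 2 tokens
print(truncate_to_budget(pieces, 1))  # break
```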

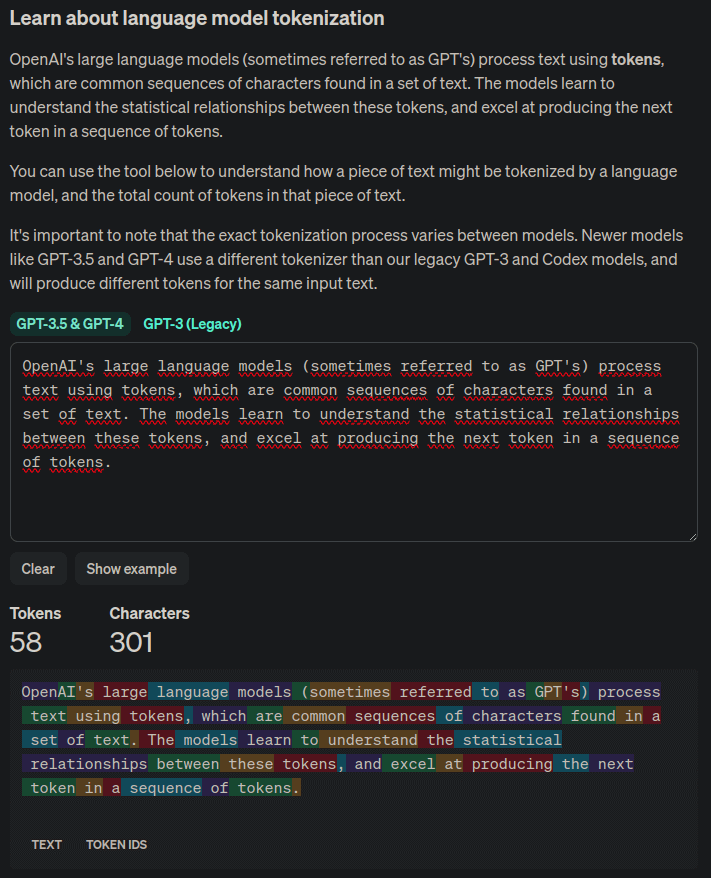

To see how many tokens a text has with the OpenAI tokenizer, you can use the Tokenizer page, which shows each token in a different color.

We have looked at the OpenAI tokenizer, but other LLMs may use different tokenizers, so the same text can produce a different number of tokens depending on the model.
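To illustrate why the tokenizer matters, this sketch tokenizes the same word with two hypothetical vocabularies. It uses greedy longest-match, a simpler technique than the BPE used by tiktoken, chosen only to keep the example short; the point is that a different vocabulary yields a different number of tokens for identical text.

```python
def greedy_tokenize(text, vocab):
    """At each position, take the longest piece of text that exists in vocab."""
    tokens = []
    i = 0
    while i < len(text):
        for j in range(len(text), i, -1):  # try the longest candidate first
            piece = text[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return tokens


# Two hypothetical vocabularies; both include single characters as a fallback
VOCAB_A = {"break", "down"} | set("breakdown")
VOCAB_B = {"bre", "ak", "do", "wn"} | set("breakdown")

print(greedy_tokenize("breakdown", VOCAB_A))  # ['break', 'down']
print(greedy_tokenize("breakdown", VOCAB_B))  # ['bre', 'ak', 'do', 'wn']
```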

As we have said, tokens are the minimal units of text representation, so let's see how many different tokens the `cl100k_base` encoding in `tiktoken` has, that is, its vocabulary size:

```python
n_vocab = encoder.n_vocab
print(f"Vocab size: {n_vocab}")
```

Vocab size: 100277
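Where does a fixed vocabulary size come from? In BPE training, the vocabulary starts from a base set of symbols, and each learned merge adds a new symbol (special tokens are added on top). This minimal sketch trains on a tiny made-up corpus of characters; cl100k_base was trained on bytes over a vastly larger corpus, so only the mechanism carries over, not the numbers.

```python
from collections import Counter


def train_bpe(corpus_words, num_merges):
    """Toy BPE training: repeatedly merge the most frequent adjacent symbol pair."""
    words = Counter(tuple(w) for w in corpus_words)  # each word as a tuple of characters
    vocab = {ch for symbols in words for ch in symbols}  # base vocabulary
    merges = []
    for _ in range(num_merges):
        # Count every adjacent pair, weighted by how often the word appears
        pair_counts = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        vocab.add(best[0] + best[1])
        # Rewrite every word with the chosen pair merged into one symbol
        new_words = Counter()
        for symbols, freq in words.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_words[tuple(merged)] += freq
        words = new_words
    return vocab, merges


corpus = ["low", "low", "lower", "newest", "newest", "widest"]
vocab, merges = train_bpe(corpus, num_merges=5)
print("merges:", merges)
print("vocab size:", len(vocab))
```

With more merges the vocabulary keeps growing, so the vocabulary size is a design choice made when the tokenizer is trained.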

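Let's see how other types of words are tokenized. First, we define a helper function that encodes a word and then decodes each token on its own: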

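```python
def encode_decode(word):
    # Encode the whole word, then decode each token separately to see its text piece
    tokens = encoder.encode(word)
    decode_tokens = []
    for token in tokens:
        decode_tokens.append(encoder.decode([token]))
    return tokens, decode_tokens
```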

```python
def encode_decode(word):
    tokens = encoder.encode(word)
    decode_tokens = []
    for token in tokens:
        decode_tokens.append(encoder.decode([token]))
    return tokens, decode_tokens

word = "dog"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "tomorrow..."
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "artificial intelligence"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "Python"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "12/25/2023"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "😊"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")
```

```
Word: dog ==> tokens: [18964], decode_tokens: ['dog']
Word: tomorrow... ==> tokens: [38501, 7924, 1131], decode_tokens: ['tom', 'orrow', '...']
Word: artificial intelligence ==> tokens: [472, 16895, 11478], decode_tokens: ['art', 'ificial', ' intelligence']
Word: Python ==> tokens: [31380], decode_tokens: ['Python']
Word: 12/25/2023 ==> tokens: [717, 14, 914, 14, 2366, 18], decode_tokens: ['12', '/', '25', '/', '202', '3']
Word: 😊 ==> tokens: [76460, 232], decode_tokens: ['�', '�']
```
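Two of these results are worth a closer look. The emoji takes 4 bytes in UTF-8, and since the tokenizer works on bytes, it can spread them across two tokens; decoding either token alone yields an incomplete byte sequence, printed as the replacement character `�`. The date ends in `202` and `3`, which is consistent with cl100k_base grouping digits in runs of at most three before applying BPE. The sketch below reproduces both effects with the standard library only; the 3 + 1 byte split and the simplified regex `PATTERN` are assumptions for illustration, not tiktoken's actual split or pattern.

```python
import re

# The emoji is 4 bytes in UTF-8
raw = "😊".encode("utf-8")
print(len(raw), raw)  # 4 b'\xf0\x9f\x98\x8a'

# Hypothetical split of those bytes into two tokens
first, second = raw[:3], raw[3:]
print(first.decode("utf-8", errors="replace"))   # incomplete sequence, printed as �
print(second.decode("utf-8", errors="replace"))  # stray continuation byte, printed as �
print((first + second).decode("utf-8"))          # together they form the emoji again

# Simplified pre-splitting: runs of up to 3 digits, or runs of other non-space characters
PATTERN = re.compile(r"\d{1,3}|[^\d\s]+")
print(PATTERN.findall("12/25/2023"))  # ['12', '/', '25', '/', '202', '3']
```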

Finally, let's try some words in another language, Spanish:

```python
word = "perro"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "perra"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "mañana..."
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "inteligencia artificial"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "Python"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "12/25/2023"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")

word = "😊"
tokens, decode_tokens = encode_decode(word)
print(f"Word: {word} ==> tokens: {tokens}, decode_tokens: {decode_tokens}")
```

```
Word: perro ==> tokens: [716, 299], decode_tokens: ['per', 'ro']
Word: perra ==> tokens: [79, 14210], decode_tokens: ['p', 'erra']
Word: mañana... ==> tokens: [1764, 88184, 1131], decode_tokens: ['ma', 'ñana', '...']
Word: inteligencia artificial ==> tokens: [396, 39567, 8968, 21075], decode_tokens: ['int', 'elig', 'encia', ' artificial']
Word: Python ==> tokens: [31380], decode_tokens: ['Python']
Word: 12/25/2023 ==> tokens: [717, 14, 914, 14, 2366, 18], decode_tokens: ['12', '/', '25', '/', '202', '3']
Word: 😊 ==> tokens: [76460, 232], decode_tokens: ['�', '�']
```

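We can see that equivalent words often produce more tokens in Spanish than in English: `perro` takes 2 tokens while `dog` takes 1, and `inteligencia artificial` takes 4 while `artificial intelligence` takes 3. So for texts with a similar number of words, a Spanish text will generally use more tokens than an English one.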
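The comparison can be made concrete by totalling the token counts from the outputs above for the three translated pairs. This is a small worked example, not a general measurement: three word pairs are far too few to estimate a real tokens-per-word ratio for either language.

```python
# Token counts copied from the tiktoken outputs above (translated pairs only)
english = {"dog": 1, "tomorrow...": 3, "artificial intelligence": 3}
spanish = {"perro": 2, "mañana...": 3, "inteligencia artificial": 4}


def tokens_per_word(counts):
    """Total tokens, total whitespace-separated words, and their ratio."""
    total_tokens = sum(counts.values())
    total_words = sum(len(text.split()) for text in counts)
    return total_tokens, total_words, total_tokens / total_words


for name, counts in [("English", english), ("Spanish", spanish)]:
    n_tokens, n_words, ratio = tokens_per_word(counts)
    print(f"{name}: {n_tokens} tokens for {n_words} words ({ratio:.2f} tokens/word)")
# English: 7 tokens for 4 words (1.75 tokens/word)
# Spanish: 9 tokens for 4 words (2.25 tokens/word)
```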