Declare neural networks clearly

Notice: This post was translated into Portuguese using a machine translation model. Please let me know if you find any errors.

When you build a neural network in PyTorch as a list of layers:

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        # Layers are stored in order and can only be addressed by index
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1)
        ])
```

iterating over it in the `forward` method does not make it clear what each step does:

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1)
        ])

    def forward(self, x):
        # Every layer is applied in turn; nothing here says what each one is
        for layer in self.layers:
            x = layer(x)
        return x
```

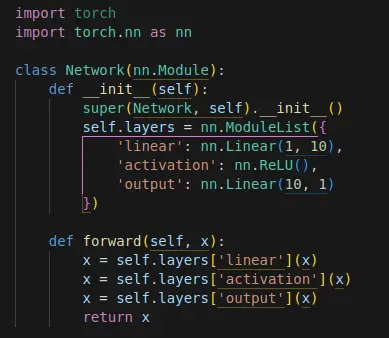
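As a small illustration of why the list version is harder to read, the sketch below prints the parameter names PyTorch assigns to such a network. The class name `ListNetwork` and the variable `list_param_names` are chosen here for the example and are not part of the original post.

```python
import torch.nn as nn

class ListNetwork(nn.Module):
    """Hypothetical name: the list-based network, renamed for this example."""
    def __init__(self):
        super().__init__()
        self.layers = nn.ModuleList([
            nn.Linear(1, 10),
            nn.ReLU(),
            nn.Linear(10, 1),
        ])

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)
        return x

# Parameters are named after each layer's position in the list
list_param_names = [name for name, _ in ListNetwork().named_parameters()]
print(list_param_names)
# ['layers.0.weight', 'layers.0.bias', 'layers.2.weight', 'layers.2.bias']
```

The names only carry indices (`0` and `2`; the `ReLU` at index `1` has no parameters), so a reader has to look back at the constructor to know what each one refers to.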

However, when you build the neural network as a dictionary of layers:

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        # nn.ModuleDict (not nn.ModuleList) is the container that accepts a dict
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1)
        })
```

going through it in the `forward` method is clearer, because each layer is called by name:

```python
import torch
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        # nn.ModuleDict (not nn.ModuleList) is the container that accepts a dict
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1)
        })

    def forward(self, x):
        # Each step names the layer it applies
        x = self.layers['linear'](x)
        x = self.layers['activation'](x)
        x = self.layers['output'](x)
        return x
```
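A minimal usage sketch of the dictionary version, assuming it is built with `nn.ModuleDict`. The class name `DictNetwork`, the batch size of 4, and the variable names are assumptions made for this example. It shows that the dictionary keys also appear in the parameter names, and that a forward pass keeps the expected shape.

```python
import torch
import torch.nn as nn

class DictNetwork(nn.Module):
    """Hypothetical name: the dictionary-based network, renamed for this example."""
    def __init__(self):
        super().__init__()
        self.layers = nn.ModuleDict({
            'linear': nn.Linear(1, 10),
            'activation': nn.ReLU(),
            'output': nn.Linear(10, 1),
        })

    def forward(self, x):
        x = self.layers['linear'](x)
        x = self.layers['activation'](x)
        x = self.layers['output'](x)
        return x

model = DictNetwork()

# Parameter names now carry the dictionary keys instead of numeric indices
dict_param_names = [name for name, _ in model.named_parameters()]
print(dict_param_names)
# ['layers.linear.weight', 'layers.linear.bias', 'layers.output.weight', 'layers.output.bias']

# Forward pass on a dummy batch of 4 samples with 1 feature each (arbitrary size)
batch = torch.randn(4, 1)
out_shape = tuple(model(batch).shape)
print(out_shape)  # (4, 1)
```

The same keys show up in `state_dict()`, which can make checkpoints and debugging output easier to read.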