LangGraph

Aviso: Este post foi traduzido para o português usando um modelo de tradução automática. Por favor, me avise se encontrar algum erro.

LangGraph é um framework de orquestração de baixo nível para construir agentes controláveis

Enquanto o LangChain fornece integrações e componentes para acelerar o desenvolvimento de aplicações LLM, a biblioteca LangGraph permite a orquestração de agentes, oferecendo arquiteturas personalizáveis, memória de longo prazo e human in the loop para lidar com tarefas complexas de forma confiável.

Neste post, vamos desabilitar o

LangSmith, que é uma ferramenta de depuração de grafos. Vamos desabilitá-lo para não adicionar mais complexidade ao post e nos concentrarmos apenas noLangGraph.

Como funciona LangGraph?

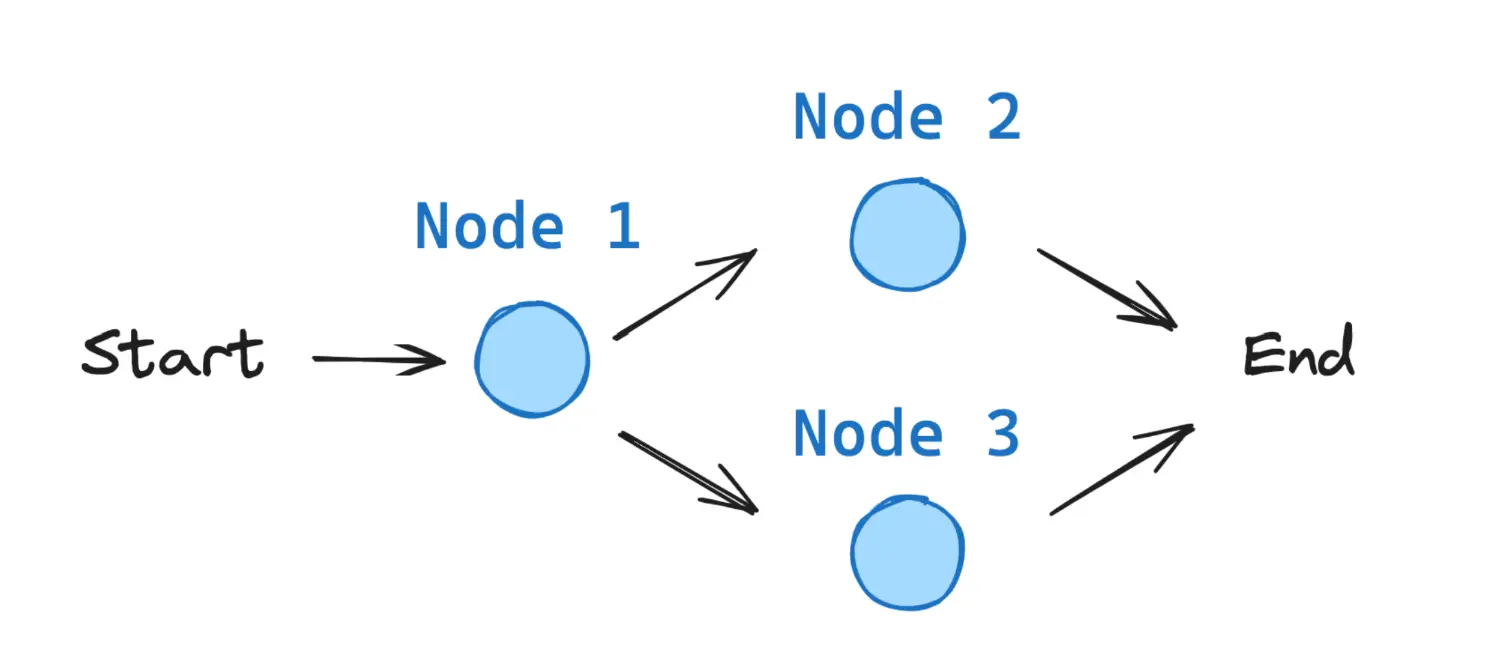

LangGraph baseia-se em três componentes:

- Nós: Representam as unidades de processamento da aplicação, como chamar um LLM ou uma ferramenta. São funções de Python que são executadas quando o nó é chamado.* Tomar o estado como entrada* Realizam alguma operação* Retornam o estado atualizado* Arestas: Representam as transições entre os nós. Definem a lógica de como o grafo será executado, ou seja, qual nó será executado após outro. Podem ser:* Diretos: Vão de um nó para outro* Condicional: Dependem de uma condição* Estado: Representa o estado da aplicação, ou seja, contém todas as informações necessárias para a aplicação. É mantido durante a execução da aplicação. É definido pelo usuário, então é preciso pensar muito bem no que será salvo nele.

Todos os grafos de LangGraph começam a partir de um nó START e terminam em um nó END.

Instalação do LangGraph

Para instalar LangGraph pode-se usar pip:

pip install -U langgraph``` ou instalar a partir do Conda: ```bash conda install langgraph``` Instalação do módulo da Hugging Face e Anthropic

Vamos a usar um modelo de linguagem da Hugging Face, por isso precisamos instalar seu pacote de langgraph.

pip install langchain-huggingface``` Para uma parte vamos usar Sonnet 3.7, depois explicaremos por quê. Então também instalamos o pacote de Anthropic.

pip install langchain_anthropic``` CHAVE DE API do Hugging Face

Vamos a usar Qwen/Qwen2.5-72B-Instruct através de Hugging Face Inference Endpoints, por isso precisamos de uma API KEY.

Para poder usar o Inference Endpoints da HuggingFace, o primeiro que você precisa é ter uma conta na HuggingFace. Uma vez que você tenha, é necessário ir até Access tokens nas configurações do seu perfil e gerar um novo token.

Tem que dar um nome. No meu caso, vou chamá-lo de langgraph e ativar a permissão Make calls to inference providers. Isso criará um token que teremos que copiar.

Para gerenciar o token, vamos a criar um arquivo no mesmo caminho em que estamos trabalhando chamado .env e vamos colocar o token que copiamos no arquivo da seguinte maneira:

HUGGINGFACE_LANGGRAPH="hf_...."``` Agora, para poder obter o token, precisamos ter instalado dotenv, que instalamos através de

pip install python-dotenv``` Executamos o seguinte import osimport dotenvdotenv.load_dotenv()HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

Agora que temos um token, criamos um cliente. Para isso, precisamos ter a biblioteca huggingface_hub instalada. A instalamos através do conda ou pip.

pip install --upgrade huggingface_hub``` o ``` bash conda install -c conda-forge huggingface_hub``` Agora temos que escolher qual modelo vamos usar. Você pode ver os modelos disponíveis na página de Supported models da documentação de Inference Endpoints do Hugging Face.

Vamos a usar Qwen2.5-72B-Instruct que é um modelo muito bom.

MODEL = "Qwen/Qwen2.5-72B-Instruct"

Agora podemos criar o cliente

from huggingface_hub import InferenceClientclient = InferenceClient(api_key=HUGGINGFACE_TOKEN, model=MODEL)client

<InferenceClient(model='Qwen/Qwen2.5-72B-Instruct', timeout=None)>

Fazemos um teste para ver se funciona

message = [{opening_brace} "role": "user", "content": "Hola, qué tal?" {closing_brace}]stream = client.chat.completions.create(messages=message,temperature=0.5,max_tokens=1024,top_p=0.7,stream=False)response = stream.choices[0].message.contentprint(response)

¡Hola! Estoy bien, gracias por preguntar. ¿Cómo estás tú? ¿En qué puedo ayudarte hoy?

CHAVE DE API da Anthropic

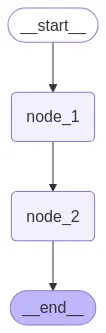

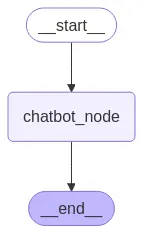

Criar um chatbot básico

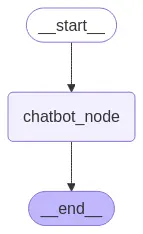

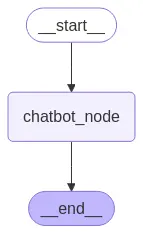

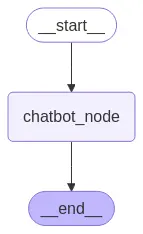

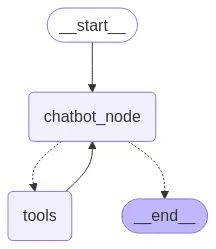

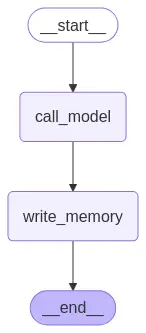

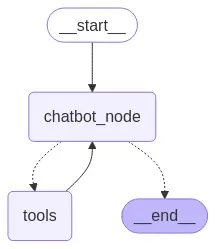

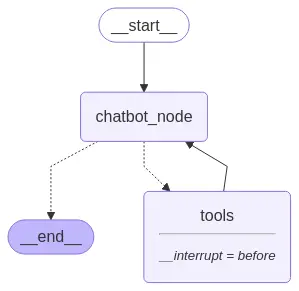

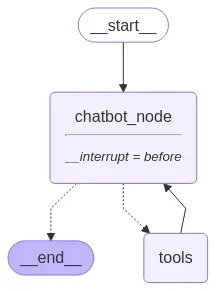

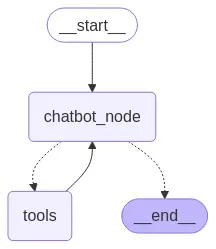

Vamos criar um chatbot simples usando LangGraph. Este chatbot responderá diretamente às mensagens do usuário. Embora seja simples, nos servirá para ver os conceitos básicos da construção de grafos com LangGraph.

Como o nome sugere, LangGraph é uma biblioteca para manipular grafos. Então, começamos criando um grafo StateGraph.

Um StateGraph define a estrutura do nosso chatbot como uma máquina de estados. Adicionaremos nós ao nosso grafo para representar os llms, tools e funções, os llms poderão fazer uso dessas tools e funções; e adicionamos arestas para especificar como o bot deve fazer a transição entre esses nós.

Então começamos criando um StateGraph que precisa de uma classe State para gerenciar o estado do grafo. Como agora vamos criar um chatbot simples, precisamos apenas gerenciar uma lista de mensagens no estado.

from typing import Annotatedfrom typing_extensions import TypedDictfrom langgraph.graph import StateGraphfrom langgraph.graph.message import add_messagesclass State(TypedDict):# Messages have the type "list". The `add_messages` function# in the annotation defines how this state key should be updated# (in this case, it appends messages to the list, rather than overwriting them)messages: Annotated[list, add_messages]graph_builder = StateGraph(State)

A função add_messages une duas listas de mensagens.

Chegarão novas listas de mensagens, portanto, serão adicionadas à lista de mensagens já existente. Cada lista de mensagens contém um ID, portanto, são adicionadas com este ID. Isso garante que as mensagens sejam apenas adicionadas, não substituídas, a menos que uma nova mensagem tenha o mesmo ID que uma já existente, nesse caso, ela será substituída.

add_messages é uma função reducer, é uma função responsável por atualizar o estado.

O grafo graph_builder que criamos, recebe um estado State e retorna um novo estado State. Além disso, atualiza a lista de mensagens.

Conceito>> Ao definir um grafo, o primeiro passo é definir seu

State. OStateinclui o esquema do grafo e asreducer functionsque manipulam atualizações do estado.>> No nosso exemplo,Stateé do tipoTypedDict(dicionário tipado) com uma chave:messages.>>add_messagesé umafunção reducerque é usada para adicionar novas mensagens à lista em vez de sobrescrevê-las na lista. Se uma chave de um estado não tiver umafunção reducer, cada valor que chegar dessa chave sobrescreverá os valores anteriores.>>add_messagesé umafunção reducerdo langgraph, mas nós vamos poder criar as nossas

Agora vamos adicionar ao grafo o nó chatbot. Os nós representam unidades de trabalho. Geralmente, são funções regulares de Python.

Adicionamos um nó com o método add_node que recebe o nome do nó e a função que será executada.

Então vamos criar um LLM com HuggingFace, depois criaremos um modelo de chat com LangChain que fará referência ao LLM criado. Uma vez definido o modelo de chat, definimos a função que será executada no nó do nosso grafo. Essa função fará uma chamada ao modelo de chat criado e retornará o resultado.

Por último, vamos a adicionar um nó com a função do chatbot ao gráfico

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFacefrom huggingface_hub import loginos.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing# Create the LLM modellogin(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the modelMODEL = "Qwen/Qwen2.5-72B-Instruct"model = HuggingFaceEndpoint(repo_id=MODEL,task="text-generation",max_new_tokens=512,do_sample=False,repetition_penalty=1.03,)# Create the chat modelllm = ChatHuggingFace(llm=model)# Define the chatbot functiondef chatbot_function(state: State):return {opening_brace}"messages": [llm.invoke(state["messages"])]}# The first argument is the unique node name# The second argument is the function or object that will be called whenever# the node is used.graph_builder.add_node("chatbot_node", chatbot_function)

<langgraph.graph.state.StateGraph at 0x130548440>

Nós usamos ChatHuggingFace que é um chat do tipo BaseChatModel que é um tipo de chat base de LangChain. Uma vez criado o BaseChatModel, nós criamos a função chatbot_function que será executada quando o nó for executado. E por último, criamos o nó chatbot_node e indicamos que ele deve executar a função chatbot_function.

Aviso>> A função de nó

chatbot_functionrecebe o estadoStatecomo entrada e retorna um dicionário que contém uma atualização da listamessagespara a chavemensagens. Este é o padrão básico para todas as funções do nóLangGraph.

A função reducer do nosso grafo add_messages adicionará as mensagens de resposta do llm a qualquer mensagem que já esteja no estado.

A seguir, adicionamos um nó entry. Isso diz ao nosso grafo onde começar seu trabalho sempre que o executamos.

from langgraph.graph import STARTgraph_builder.add_edge(START, "chatbot_node")

<langgraph.graph.state.StateGraph at 0x130548440>

Da mesma forma, adicionamos um nó finish. Isso indica ao grafo cada vez que esse nó é executado, ele pode finalizar o trabalho.

from langgraph.graph import ENDgraph_builder.add_edge("chatbot_node", END)

<langgraph.graph.state.StateGraph at 0x130548440>

Importamos START e END, que podem ser encontrados em constants, e são o primeiro e o último nó do nosso grafo.

Normalmente são nós virtuais

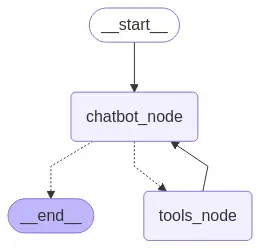

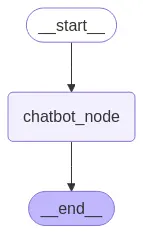

Finalmente, temos que compilar nosso grafo. Para fazer isso, usamos o método construtor de grafos compile(). Isso cria um CompiledGraph que podemos usar para executar nossa aplicação.

graph = graph_builder.compile()

Podemos visualizar o grafo usando o método get_graph e um dos métodos de "desenho", como draw_ascii ou draw_mermaid_png. O desenho de cada um dos métodos requerir dependências adicionais.

from IPython.display import Image, display

try:

display(Image(graph.get_graph().draw_mermaid_png()))

except Exception as e:

print(f"Error al visualizar el grafo: {e}")

Agora podemos testar o chatbot!

Dica>> No bloco de código seguinte, você pode sair do loop de bate-papo a qualquer momento digitando

quit,exitouq.

# Colors for the terminalCOLOR_GREEN = "\033[32m"COLOR_YELLOW = "\033[33m"COLOR_RESET = "\033[0m"def stream_graph_updates(user_input: str):for event in graph.stream({opening_brace}"messages": [{opening_brace}"role": "user", "content": user_input{closing_brace}]{closing_brace}):for value in event.values():print(f"{opening_brace}COLOR_GREEN{closing_brace}User: {opening_brace}COLOR_RESET{closing_brace}{opening_brace}user_input{closing_brace}")print(f"{opening_brace}COLOR_YELLOW{closing_brace}Assistant: {opening_brace}COLOR_RESET{closing_brace}{opening_brace}value['messages'][-1].content{closing_brace}")while True:try:user_input = input("User: ")if user_input.lower() in ["quit", "exit", "q"]:print(f"{opening_brace}COLOR_GREEN{closing_brace}User: {opening_brace}COLOR_RESET{closing_brace}{opening_brace}user_input{closing_brace}")print(f"{opening_brace}COLOR_YELLOW{closing_brace}Assistant: {opening_brace}COLOR_RESET{closing_brace}Goodbye!")breakevents =stream_graph_updates(user_input)except:# fallback if input() is not availableuser_input = "What do you know about LangGraph?"print("User: " + user_input)stream_graph_updates(user_input)break

User: HelloAssistant: Hello! It's nice to meet you. How can I assist you today? Whether you have questions, need information, or just want to chat, I'm here to help!User: How are you doing?Assistant: I'm just a computer program, so I don't have feelings, but I'm here and ready to help you with any questions or tasks you have! How can I assist you today?User: Me well, I'm making a post about LangGraph, what do you think?Assistant: LangGraph is an intriguing topic, especially if you're delving into the realm of graph-based models and their applications in natural language processing (NLP). LangGraph, as I understand, is a framework or tool that leverages graph theory to improve or provide a new perspective on NLP tasks such as text classification, information extraction, and semantic analysis. By representing textual information as graphs (nodes for entities and edges for relationships), it can offer a more nuanced understanding of the context and semantics in language data.If you're making a post about it, here are a few points you might consider:1. **Introduction to LangGraph**: Start with a brief explanation of what LangGraph is and its core principles. How does it model language or text differently compared to traditional NLP approaches? What unique advantages does it offer by using graph-based methods?2. **Applications of LangGraph**: Discuss some of the key applications where LangGraph has been or can be applied. This could include improving the accuracy of sentiment analysis, enhancing machine translation, or optimizing chatbot responses to be more contextually aware.3. **Technical Innovations**: Highlight any technical innovations or advancements that LangGraph brings to the table. This could be about new algorithms, more efficient data structures, or novel ways of training models on graph data.4. **Challenges and Limitations**: It's also important to address the challenges and limitations of using graph-based methods in NLP. Performance, scalability, and the current state of the technology can be discussed here.5. **Future Prospects**: Wrap up with a look into the future of LangGraph and graph-based NLP in general. What are the upcoming trends, potential areas of growth, and how might these tools start impacting broader technology landscapes?Each section can help frame your post in a way that's informative and engaging for your audience, whether they're technical experts or casual readers looking for an introduction to this intriguing area of NLP.User: qAssistant: Goodbye!

!Parabéns! Você construiu seu primeiro chatbot usando LangGraph. Este bot pode participar de uma conversa básica, recebendo a entrada do usuário e gerando respostas utilizando o LLM que definimos.

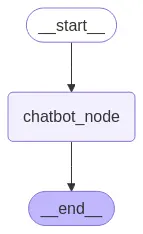

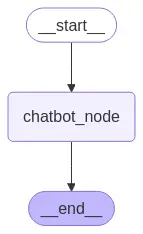

Antes fomos escrevendo o código aos poucos e pode ser que não tenha ficado muito claro. Foi feito assim para explicar cada parte do código, mas agora vamos reescrevê-lo, mas organizado de outra forma, que fica mais claro à vista. Ou seja, agora que não precisamos explicar cada parte do código, o agrupamos de outra maneira para que seja mais claro.

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from huggingface_hub import login

from IPython.display import Image, display

import os

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

# State

class State(TypedDict):

messages: Annotated[list, add_messages]

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Function

def chatbot_function(state: State):

return {"messages": [llm.invoke(state["messages"])]}

# Start to build the graph

graph_builder = StateGraph(State)

# Add nodes to the graph

graph_builder.add_node("chatbot_node", chatbot_function)

# Add edges

graph_builder.add_edge(START, "chatbot_node")

graph_builder.add_edge("chatbot_node", END)

# Compile the graph

graph = graph_builder.compile()

# Display the graph

try:

display(Image(graph.get_graph().draw_mermaid_png()))

except Exception as e:

print(f"Error al visualizar el grafo: {e}")

Mais

Todos os blocos mais estão lá se você quiser aprofundar mais em LangGraph, se não, pode ler tudo sem ler os blocos mais

Tipagem do estado

Vimos como criar um agente com um estado tipado usando TypedDict, mas podemos criá-lo com outro tipo tipado.

Tipagem através de TypeDict

É a forma que vimos anteriormente, tipamos o estado como um dicionário usando o tipado de Python TypeDict. Passamos uma chave e um valor para cada variável do nosso estado.

from typing_extensions import TypedDictfrom typing import Anotadofrom langgraph.graph.message import adicionar_mensagensfrom langgraph.graph import StateGraph class Estado(TypedDict):mensagens: Anotado[list, add_mensagens]``` Para acessar as mensagens, fazemos isso como com qualquer dicionário, através de state["messages"]

Tipagem com dataclass

Outra opção é usar o tipado de python dataclass

from dataclasses import dataclassfrom typing import Anotadofrom langgraph.graph.message import add_messagesfrom langgraph.graph import StateGraph @dataclassclass Estado:mensagens: Anotado[list, adicionar_mensagens]``` Como pode ser visto, é semelhante ao tipagem por meio de dicionários, mas agora, sendo o estado uma classe, acessamos as mensagens através de state.messages

Tipagem com Pydantic

Pydantic é uma biblioteca muito usada para tipar dados em Python. Nos oferece a possibilidade de adicionar uma verificação do tipado. Vamos verificar que a mensagem comece com 'User', 'Assistant' ou 'System'.

from pydantic import BaseModel, field_validator, ValidationErrorfrom typing import Anotadofrom langgraph.graph.message import adicionar_mensagens class Estado(BaseModel):mensagens: Anotado[list, add_messages] # Deve começar com 'Usuário', 'Assistente' ou 'Sistema' @field_validator('mensagens')@classmethoddef validate_messages(cls, value):# Garanta que as mensagens comecem com `User`, `Assistant` ou `System`Se não value.startswith["'User'"] e não value.startswith["'Assistant'"] e não value.startswith["'System'"]:raise ValueError("A mensagem deve começar com 'User', 'Assistant' ou 'System'")valor de retorno tente:state = PydanticState(messages=["Olá"])except ValidationError as e:print("Erro de Validação:", e)``` Redutores

Como dissemos, precisamos usar uma função do tipo Reducer para indicar como atualizar o estado, pois se não os valores do estado serão sobrescritos.

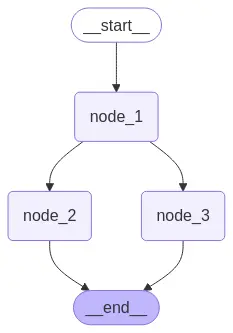

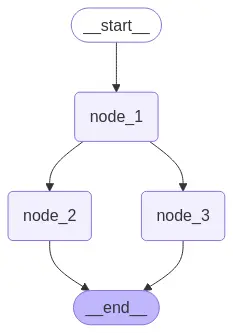

Vamos ver um exemplo de um grafo no qual não usamos uma função do tipo Reducer para indicar como atualizar o estado

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from IPython.display import Image, display

class State(TypedDict):

foo: int

def node_1(state):

print("---Node 1---")

return {"foo": state['foo'] + 1}

def node_2(state):

print("---Node 2---")

return {"foo": state['foo'] + 1}

def node_3(state):

print("---Node 3---")

return {"foo": state['foo'] + 1}

# Build graph

builder = StateGraph(State)

builder.add_node("node_1", node_1)

builder.add_node("node_2", node_2)

builder.add_node("node_3", node_3)

# Logic

builder.add_edge(START, "node_1")

builder.add_edge("node_1", "node_2")

builder.add_edge("node_1", "node_3")

builder.add_edge("node_2", END)

builder.add_edge("node_3", END)

# Add

graph = builder.compile()

# View

display(Image(graph.get_graph().draw_mermaid_png()))

Como podemos ver, definimos um grafo no qual o nó 1 é executado primeiro e depois os nós 2 e 3. Vamos executá-lo para ver o que acontece.

from langgraph.errors import InvalidUpdateErrortry:graph.invoke({"foo" : 1})except InvalidUpdateError as e:print(f"InvalidUpdateError occurred: {e}")

---Node 1------Node 2------Node 3---InvalidUpdateError occurred: At key 'foo': Can receive only one value per step. Use an Annotated key to handle multiple values.For troubleshooting, visit: https://python.langchain.com/docs/troubleshooting/errors/INVALID_CONCURRENT_GRAPH_UPDATE

Obtemos um erro porque primeiro o nó 1 modifica o valor de foo e depois os nós 2 e 3 tentam modificar o valor de foo em paralelo, o que dá um erro.

Então, para evitar isso, usamos uma função do tipo Reducer para indicar como modificar o estado.

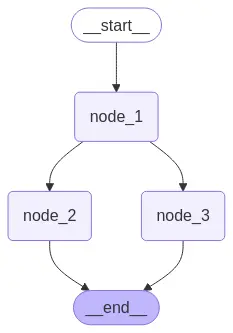

Redutores pré-definidos

Usamos o tipo Annotated para especificar que é uma função do tipo Reducer. E usamos o operador add para adicionar um valor a uma lista.

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from IPython.display import Image, display

from operator import add

from typing import Annotated

class State(TypedDict):

foo: Annotated[list[int], add]

def node_1(state):

print("---Node 1---")

return {"foo": [state['foo'][-1] + 1]}

def node_2(state):

print("---Node 2---")

return {"foo": [state['foo'][-1] + 1]}

def node_3(state):

print("---Node 3---")

return {"foo": [state['foo'][-1] + 1]}

# Build graph

builder = StateGraph(State)

builder.add_node("node_1", node_1)

builder.add_node("node_2", node_2)

builder.add_node("node_3", node_3)

# Logic

builder.add_edge(START, "node_1")

builder.add_edge("node_1", "node_2")

builder.add_edge("node_1", "node_3")

builder.add_edge("node_2", END)

builder.add_edge("node_3", END)

# Add

graph = builder.compile()

# View

display(Image(graph.get_graph().draw_mermaid_png()))

Executamos novamente para ver o que acontece

graph.invoke({"foo" : [1]})

Como vemos inicializamos o valor de foo a 1, o qual se adiciona em uma lista. Depois o nó 1 soma 1 e o adiciona como novo valor na lista, ou seja, adiciona um 2. Por fim os nós 2 e 3 somam um ao último valor da lista, ou seja, os dois nós obtêm um 3 e ambos o adicionam no final da lista, por isso a lista resultante tem dois 3 no final

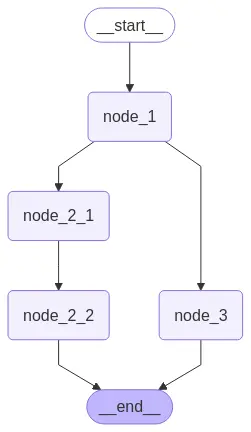

Vamos a ver o caso de que uma branch tenha mais nós que outra

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from IPython.display import Image, display

from operator import add

from typing import Annotated

class State(TypedDict):

foo: Annotated[list[int], add]

def node_1(state):

print("---Node 1---")

return {"foo": [state['foo'][-1] + 1]}

def node_2_1(state):

print("---Node 2_1---")

return {"foo": [state['foo'][-1] + 1]}

def node_2_2(state):

print("---Node 2_2---")

return {"foo": [state['foo'][-1] + 1]}

def node_3(state):

print("---Node 3---")

return {"foo": [state['foo'][-1] + 1]}

# Build graph

builder = StateGraph(State)

builder.add_node("node_1", node_1)

builder.add_node("node_2_1", node_2_1)

builder.add_node("node_2_2", node_2_2)

builder.add_node("node_3", node_3)

# Logic

builder.add_edge(START, "node_1")

builder.add_edge("node_1", "node_2_1")

builder.add_edge("node_1", "node_3")

builder.add_edge("node_2_1", "node_2_2")

builder.add_edge("node_2_2", END)

builder.add_edge("node_3", END)

# Add

graph = builder.compile()

# View

display(Image(graph.get_graph().draw_mermaid_png()))

Se agora executarmos o grafo

graph.invoke({"foo" : [1]})

O que aconteceu é que primeiro foi executado o nó 1, em seguida o nó 2_1, depois, em paralelo, os nós 2_2 e 3, e finalmente o nó END

Como definimos foo como uma lista de inteiros, e está tipada, se inicializarmos o estado com None obtemos um erro

try:graph.invoke({"foo" : None})except TypeError as e:print(f"TypeError occurred: {e}")

TypeError occurred: can only concatenate list (not "NoneType") to list

Vamos a ver como arrumar isso com reducidores personalizados

Redutores personalizados

Às vezes não podemos usar um Reducer pré-definido e temos que criar o nosso próprio.

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from IPython.display import Image, display

from typing import Annotated

def reducer_function(current_list, new_item: list | None):

if current_list is None:

current_list = []

if new_item is not None:

return current_list + new_item

return current_list

class State(TypedDict):

foo: Annotated[list[int], reducer_function]

def node_1(state):

print("---Node 1---")

if len(state['foo']) == 0:

return {'foo': [0]}

return {"foo": [state['foo'][-1] + 1]}

def node_2(state):

print("---Node 2---")

return {"foo": [state['foo'][-1] + 1]}

def node_3(state):

print("---Node 3---")

return {"foo": [state['foo'][-1] + 1]}

# Build graph

builder = StateGraph(State)

builder.add_node("node_1", node_1)

builder.add_node("node_2", node_2)

builder.add_node("node_3", node_3)

# Logic

builder.add_edge(START, "node_1")

builder.add_edge("node_1", "node_2")

builder.add_edge("node_1", "node_3")

builder.add_edge("node_2", END)

builder.add_edge("node_3", END)

# Add

graph = builder.compile()

# View

display(Image(graph.get_graph().draw_mermaid_png()))

Se agora inicializarmos o grafo com um valor None, não recebemos mais um erro.

try:graph.invoke({"foo" : None})except TypeError as e:print(f"TypeError occurred: {e}")

---Node 1------Node 2------Node 3---

Múltiplos estados

Estados privados

Suponhamos que queremos ocultar variáveis de estado, pela razão que seja, porque algumas variáveis só trazem ruído ou porque queremos manter alguma variável privada.

Se quisermos ter um estado privado, simplesmente o criamos.

from typing_extensions import TypedDict

from IPython.display import Image, display

from langgraph.graph import StateGraph, START, END

class OverallState(TypedDict):

public_var: int

class PrivateState(TypedDict):

private_var: int

def node_1(state: OverallState) -> PrivateState:

print("---Node 1---")

return {"private_var": state['public_var'] + 1}

def node_2(state: PrivateState) -> OverallState:

print("---Node 2---")

return {"public_var": state['private_var'] + 1}

# Build graph

builder = StateGraph(OverallState)

builder.add_node("node_1", node_1)

builder.add_node("node_2", node_2)

# Logic

builder.add_edge(START, "node_1")

builder.add_edge("node_1", "node_2")

builder.add_edge("node_2", END)

# Add

graph = builder.compile()

# View

display(Image(graph.get_graph().draw_mermaid_png()))

Como vemos, criamos o estado privado PrivateState e o estado público OverallState. Cada um com uma variável privada e uma pública. Primeiro é executado o nó 1, que modifica a variável privada e a retorna. Em seguida, é executado o nó 2, que modifica a variável pública e a retorna. Vamos executar o grafo para ver o que acontece.

graph.invoke({"public_var" : 1})

Como vemos ao executar o grafo, passamos a variável pública public_var e obtemos na saída outra variável pública public_var com o valor modificado, mas nunca se acessou a variável privada private_var.

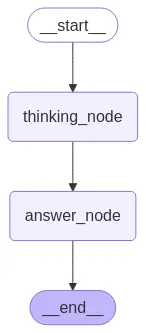

Estados de entrada e saída

Podemos definir as variáveis de entrada e saída do grafo. Embora internamente o estado possa ter mais variáveis, definimos quais variáveis são de entrada para o grafo e quais variáveis são de saída.

from typing_extensions import TypedDict

from IPython.display import Image, display

from langgraph.graph import StateGraph, START, END

class InputState(TypedDict):

question: str

class OutputState(TypedDict):

answer: str

class OverallState(TypedDict):

question: str

answer: str

notes: str

def thinking_node(state: InputState):

return {"answer": "bye", "notes": "... his is name is Lance"}

def answer_node(state: OverallState) -> OutputState:

return {"answer": "bye Lance"}

graph = StateGraph(OverallState, input=InputState, output=OutputState)

graph.add_node("answer_node", answer_node)

graph.add_node("thinking_node", thinking_node)

graph.add_edge(START, "thinking_node")

graph.add_edge("thinking_node", "answer_node")

graph.add_edge("answer_node", END)

graph = graph.compile()

# View

display(Image(graph.get_graph().draw_mermaid_png()))

Neste caso, o estado tem 3 variáveis: question, answer e notes. No entanto, definimos como entrada do grafo question e como saída do grafo answer. Portanto, o estado interno pode ter mais variáveis, mas elas não são consideradas na hora de invocar o grafo. Vamos executar o grafo para ver o que acontece.

graph.invoke({"question":"hi"})

{'answer': 'bye Lance'}

Como vemos, adicionamos question ao grafo e obtivemos answer na saída.

Gerenciamento do contexto

Vamos a revisar novamente o código do chatbot básico

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from huggingface_hub import login

from IPython.display import Image, display

import os

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

class State(TypedDict):

messages: Annotated[list, add_messages]

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Define the chatbot function

def chatbot_function(state: State):

return {"messages": [llm.invoke(state["messages"])]}

# Create graph builder

graph_builder = StateGraph(State)

# Add nodes

graph_builder.add_node("chatbot_node", chatbot_function)

# Connect nodes

graph_builder.add_edge(START, "chatbot_node")

graph_builder.add_edge("chatbot_node", END)

# Compile the graph

graph = graph_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Vamos a criar um contexto que passaremos ao modelo

from langchain_core.messages import AIMessage, HumanMessagemessages = [AIMessage(f"So you said you were researching ocean mammals?", name="Bot")]messages.append(HumanMessage(f"Yes, I know about whales. But what others should I learn about?", name="Lance"))for m in messages:m.pretty_print()

================================== Ai Message ==================================Name: BotSo you said you were researching ocean mammals?================================ Human Message =================================Name: LanceYes, I know about whales. But what others should I learn about?

Se passarmos para o grafo, obteremos a saída

output = graph.invoke({'messages': messages})for m in output['messages']:m.pretty_print()

================================== Ai Message ==================================Name: BotSo you said you were researching ocean mammals?================================ Human Message =================================Name: LanceYes, I know about whales. But what others should I learn about?================================== Ai Message ==================================That's a great topic! Besides whales, there are several other fascinating ocean mammals you might want to learn about. Here are a few:1. **Dolphins**: Highly intelligent and social, dolphins are found in all oceans of the world. They are known for their playful behavior and communication skills.2. **Porpoises**: Similar to dolphins but generally smaller and stouter, porpoises are less social and more elusive. They are found in coastal waters around the world.3. **Seals and Sea Lions**: These are semi-aquatic mammals that can be found in both Arctic and Antarctic regions, as well as in more temperate waters. They are known for their sleek bodies and flippers, and they differ in their ability to walk on land (sea lions can "walk" on their flippers, while seals can only wriggle or slide).4. **Walruses**: Known for their large tusks and whiskers, walruses are found in the Arctic. They are well-adapted to cold waters and have a thick layer of blubber to keep them warm.5. **Manatees and Dugongs**: These gentle, herbivorous mammals are often called "sea cows." They live in shallow, coastal areas and are found in tropical and subtropical regions. Manatees are found in the Americas, while dugongs are found in the Indo-Pacific region.6. **Otters**: While not fully aquatic, sea otters spend most of their lives in the water and are excellent swimmers. They are known for their dense fur, which keeps them warm in cold waters.7. **Polar Bears**: Although primarily considered land animals, polar bears are excellent swimmers and spend a significant amount of time in the water, especially when hunting for seals.Each of these mammals has unique adaptations and behaviors that make them incredibly interesting to study. If you have any specific questions or topics you'd like to explore further, feel free to ask!

Como vemos agora na saída temos uma mensagem adicional. Se isso continuar crescendo, chegará um momento em que teremos um contexto muito longo, o que representará um maior gasto de tokens, podendo resultar em um maior custo econômico e também maior latência. Além disso, com contextos muito longos, os LLMs começam a performar pior. Nos últimos modelos, à data da escrita deste post, acima de 8k tokens de contexto, começa a decrescer o desempenho do LLM

Então vamos ver várias maneiras de gerenciar isso

Modificar o contexto com funções do tipo Reducer

Vimos que com as funções do tipo Reducer podemos modificar as mensagens do estado

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from langchain_core.messages import RemoveMessage

from huggingface_hub import login

from IPython.display import Image, display

import os

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

class State(TypedDict):

messages: Annotated[list, add_messages]

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Nodes

def filter_messages(state: State):

# Delete all but the 2 most recent messages

delete_messages = [RemoveMessage(id=m.id) for m in state["messages"][:-2]]

return {"messages": delete_messages}

def chat_model_node(state: State):

return {"messages": [llm.invoke(state["messages"])]}

# Create graph builder

graph_builder = StateGraph(State)

# Add nodes

graph_builder.add_node("filter_messages_node", filter_messages)

graph_builder.add_node("chatbot_node", chat_model_node)

# Connecto nodes

graph_builder.add_edge(START, "filter_messages_node")

graph_builder.add_edge("filter_messages_node", "chatbot_node")

graph_builder.add_edge("chatbot_node", END)

# Compile the graph

graph = graph_builder.compile()

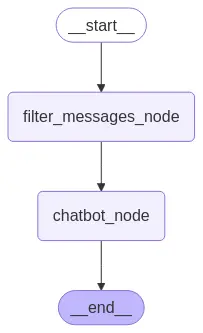

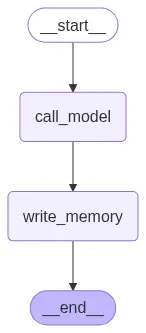

display(Image(graph.get_graph().draw_mermaid_png()))

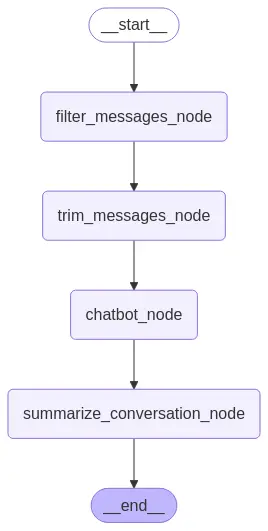

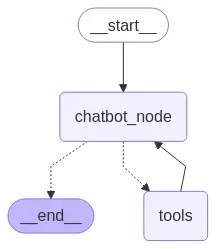

Como vemos no grafo, primeiro filtramos as mensagens e depois passamos o resultado ao modelo.

Voltamos a criar um contexto que passaremos ao modelo, mas agora com mais mensagens

from langchain_core.messages import AIMessage, HumanMessagemessages = [AIMessage(f"So you said you were researching ocean mammals?", name="Bot")]messages.append(HumanMessage(f"Yes, I know about whales. But what others should I learn about?", name="Lance"))messages.append(AIMessage(f"I know about sharks too", name="Bot"))messages.append(HumanMessage(f"What others should I learn about?", name="Lance"))messages.append(AIMessage(f"I know about dolphins too", name="Bot"))messages.append(HumanMessage(f"Tell me more about dolphins", name="Lance"))for m in messages:m.pretty_print()

================================== Ai Message ==================================Name: BotSo you said you were researching ocean mammals?================================ Human Message =================================Name: LanceYes, I know about whales. But what others should I learn about?================================== Ai Message ==================================Name: BotI know about sharks too================================ Human Message =================================Name: LanceWhat others should I learn about?================================== Ai Message ==================================Name: BotI know about dolphins too================================ Human Message =================================Name: LanceTell me more about dolphins

Se passarmos para o grafo, obteremos a saída

output = graph.invoke({'messages': messages})for m in output['messages']:m.pretty_print()

================================== Ai Message ==================================Name: BotI know about dolphins too================================ Human Message =================================Name: LanceTell me more about dolphins================================== Ai Message ==================================Dolphins are highly intelligent marine mammals that are part of the family Delphinidae, which includes about 40 species. They are found in oceans worldwide, from tropical to temperate regions, and are known for their agility and playful behavior. Here are some interesting facts about dolphins:1. **Social Behavior**: Dolphins are highly social animals and often live in groups called pods, which can range from a few individuals to several hundred. Social interactions are complex and include cooperative behaviors, such as hunting and defending against predators.2. **Communication**: Dolphins communicate using a variety of sounds, including clicks, whistles, and body language. These sounds can be used for navigation (echolocation), communication, and social bonding. Each dolphin has a unique signature whistle that helps identify it to others in the pod.3. **Intelligence**: Dolphins are considered one of the most intelligent animals on Earth. They have large brains and display behaviors such as problem-solving, mimicry, and even the use of tools. Some studies suggest that dolphins can recognize themselves in mirrors, indicating a level of self-awareness.4. **Diet**: Dolphins are carnivores and primarily feed on fish and squid. They use echolocation to locate and catch their prey. Some species, like the bottlenose dolphin, have been observed using teamwork to herd fish into tight groups, making them easier to catch.5. **Reproduction**: Dolphins typically give birth to a single calf after a gestation period of about 10 to 12 months. Calves are born tail-first and are immediately helped to the surface for their first breath by their mother or another dolphin. Calves nurse for up to two years and remain dependent on their mothers for a significant period.6. **Conservation**: Many dolphin species are threatened by human activities such as pollution, overfishing, and habitat destruction. Some species, like the Indo-Pacific humpback dolphin and the Amazon river dolphin, are endangered. Conservation efforts are crucial to protect these animals and their habitats.7. **Human Interaction**: Dolphins have a long history of interaction with humans, often appearing in mythology and literature. In some cultures, they are considered sacred or bring good luck. Today, dolphins are popular in marine parks and are often the focus of eco-tourism activities, such as dolphin-watching tours.Dolphins continue to fascinate scientists and the general public alike, with ongoing research into their behavior, communication, and social structures providing new insights into these remarkable creatures.

Como se pode ver, a função de filtragem removeu todas as mensagens, exceto as duas últimas, e essas duas mensagens foram passadas como contexto para o LLM.

Cortar mensagens

Outra solução é recortar cada mensagem da lista de mensagens que tenham muitos tokens, estabelece-se um limite de tokens e elimina-se a mensagem que ultrapassa esse limite.

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from langchain_core.messages import trim_messages

from huggingface_hub import login

from IPython.display import Image, display

import os

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

class State(TypedDict):

messages: Annotated[list, add_messages]

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Nodes

def trim_messages_node(state: State):

# Trim the messages based on the specified parameters

trimmed_messages = trim_messages(

state["messages"],

max_tokens=100, # Maximum tokens allowed in the trimmed list

strategy="last", # Keep the latest messages

token_counter=llm, # Use the LLM's tokenizer to count tokens

allow_partial=True, # Allow cutting messages mid-way if needed

)

# Print the trimmed messages to see the effect of trim_messages

print("--- trimmed messages (input to LLM) ---")

for m in trimmed_messages:

m.pretty_print()

print("------------------------------------------------")

# Invoke the LLM with the trimmed messages

response = llm.invoke(trimmed_messages)

# Return the LLM's response in the correct state format

return {"messages": [response]}

# Create graph builder

graph_builder = StateGraph(State)

# Add nodes

graph_builder.add_node("trim_messages_node", trim_messages_node)

# Connecto nodes

graph_builder.add_edge(START, "trim_messages_node")

graph_builder.add_edge("trim_messages_node", END)

# Compile the graph

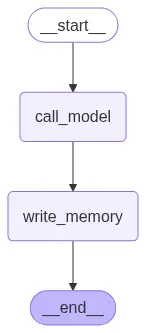

graph = graph_builder.compile()

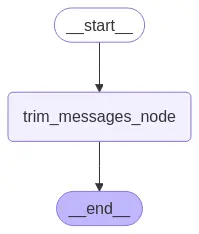

display(Image(graph.get_graph().draw_mermaid_png()))

Como vemos no grafo, primeiro filtramos as mensagens e depois passamos o resultado ao modelo.

Voltamos a criar um contexto que passaremos ao modelo, mas agora com mais mensagens

from langchain_core.messages import AIMessage, HumanMessagemessages = [AIMessage(f"So you said you were researching ocean mammals?", name="Bot")]messages.append(HumanMessage(f"Yes, I know about whales. But what others should I learn about?", name="Lance"))messages.append(AIMessage(f"""I know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.""", name="Bot"))messages.append(HumanMessage(f"What others should I learn about?", name="Lance"))messages.append(AIMessage(f"I know about dolphins too", name="Bot"))messages.append(HumanMessage(f"Tell me more about dolphins", name="Lance"))for m in messages:m.pretty_print()

================================== Ai Message ==================================Name: BotSo you said you were researching ocean mammals?================================ Human Message =================================Name: LanceYes, I know about whales. But what others should I learn about?================================== Ai Message ==================================Name: BotI know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.================================ Human Message =================================Name: LanceWhat others should I learn about?================================== Ai Message ==================================Name: BotI know about dolphins too================================ Human Message =================================Name: LanceTell me more about dolphins

Se passarmos ao grafo, obteremos a saída

output = graph.invoke({'messages': messages})

--- trimmed messages (input to LLM) ---================================== Ai Message ==================================Name: BotThe tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.================================ Human Message =================================Name: LanceWhat others should I learn about?================================== Ai Message ==================================Name: BotI know about dolphins too================================ Human Message =================================Name: LanceTell me more about dolphins------------------------------------------------

Como pode ser visto, o contexto fornecido ao LLM foi truncado. A mensagem, que era muito longa e continha muitos tokens, foi reduzida. Vamos observar a saída do LLM.

for m in output['messages']:m.pretty_print()

================================== Ai Message ==================================Name: BotSo you said you were researching ocean mammals?================================ Human Message =================================Name: LanceYes, I know about whales. But what others should I learn about?================================== Ai Message ==================================Name: BotI know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.================================ Human Message =================================Name: LanceWhat others should I learn about?================================== Ai Message ==================================Name: BotI know about dolphins too================================ Human Message =================================Name: LanceTell me more about dolphins================================== Ai Message ==================================Certainly! Dolphins are intelligent marine mammals that are part of the family Delphinidae, which includes nearly 40 species. Here are some interesting facts about dolphins:1. **Intelligence**: Dolphins are known for their high intelligence and have large brains relative to their body size. They exhibit behaviors that suggest social complexity, self-awareness, and problem-solving skills. For example, they can recognize themselves in mirrors, a trait shared by only a few other species.2. **Communication**: Dolphins communicate using a variety of clicks, whistles, and body language. Each dolphin has a unique "signature whistle" that helps identify it to others, similar to a human name. They use echolocation to navigate and locate prey by emitting clicks and interpreting the echoes that bounce back.3. **Social Structure**: Dolphins are highly social animals and often live in groups called pods. These pods can vary in size from a few individuals to several hundred. Within these groups, dolphins form complex social relationships and often cooperate to hunt and protect each other from predators.4. **Habitat**: Dolphins are found in all the world's oceans and in some rivers. Different species have adapted to various environments, from tropical waters to the cooler regions of the open sea. Some species, like the Amazon river dolphin (also known as the boto), live in freshwater rivers.5. **Diet**: Dolphins are carnivores and primarily eat fish, squid, and crustaceans. Their diet can vary depending on the species and their habitat. Some species, like the killer whale (which is actually a large dolphin), can even hunt larger marine mammals.6. **Reproduction**: Dolphins have a long gestation period, typically around 10 to 12 months. Calves are born tail-first and are nursed by their mothers for up to two years. Dolphins often form strong bonds with their offspring and other members of their pod.7. **Conservation**: Many species of dolphins face threats such as pollution, overfishing, and entanglement in fishing nets. Conservation efforts are ongoing to protect these animals and their habitats. Organizations like the International Union for Conservation of Nature (IUCN) and the World Wildlife Fund (WWF) work to raise awareness and implement conservation measures.8. **Cultural Significance**: Dolphins have been a source of fascination and inspiration for humans for centuries. They appear in myths, legends, and art across many cultures and are often seen as symbols of intelligence, playfulness, and freedom.Dolphins are truly remarkable creatures with a lot to teach us about social behavior, communication, and the complexities of marine ecosystems. If you have any specific questions or want to know more about a particular species, feel free to ask!

Com um contexto truncado, o LLM continua respondendo

Modificação do contexto e corte de mensagens

Vamos a juntar as duas técnicas anteriores, modificaremos o contexto e recortaremos os mensagens.

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from langchain_core.messages import RemoveMessage, trim_messages

from huggingface_hub import login

from IPython.display import Image, display

import os

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

class State(TypedDict):

messages: Annotated[list, add_messages]

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Nodes

def filter_messages(state: State):

# Delete all but the 2 most recent messages

delete_messages = [RemoveMessage(id=m.id) for m in state["messages"][:-2]]

return {"messages": delete_messages}

def trim_messages_node(state: State):

# print the messages

print("--- messages (input to trim_messages) ---")

for m in state["messages"]:

m.pretty_print()

print("------------------------------------------------")

# Trim the messages based on the specified parameters

trimmed_messages = trim_messages(

state["messages"],

max_tokens=100, # Maximum tokens allowed in the trimmed list

strategy="last", # Keep the latest messages

token_counter=llm, # Use the LLM's tokenizer to count tokens

allow_partial=True, # Allow cutting messages mid-way if needed

)

# Print the trimmed messages to see the effect of trim_messages

print("--- trimmed messages (input to LLM) ---")

for m in trimmed_messages:

m.pretty_print()

print("------------------------------------------------")

# Invoke the LLM with the trimmed messages

response = llm.invoke(trimmed_messages)

# Return the LLM's response in the correct state format

return {"messages": [response]}

def chat_model_node(state: State):

return {"messages": [llm.invoke(state["messages"])]}

# Create graph builder

graph_builder = StateGraph(State)

# Add nodes

graph_builder.add_node("filter_messages_node", filter_messages)

graph_builder.add_node("chatbot_node", chat_model_node)

graph_builder.add_node("trim_messages_node", trim_messages_node)

# Connecto nodes

graph_builder.add_edge(START, "filter_messages_node")

graph_builder.add_edge("filter_messages_node", "trim_messages_node")

graph_builder.add_edge("trim_messages_node", "chatbot_node")

graph_builder.add_edge("chatbot_node", END)

# Compile the graph

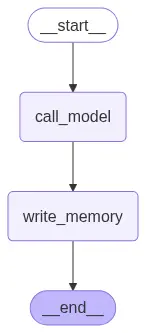

graph = graph_builder.compile()

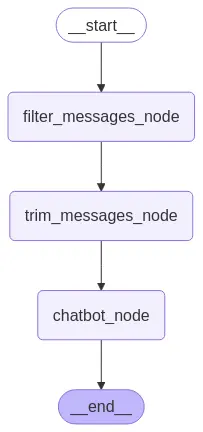

display(Image(graph.get_graph().draw_mermaid_png()))

Agora filtramos ficando com as duas últimas mensagens, depois trimamos o contexto para que não gaste muitos tokens e, finalmente, passamos o resultado ao modelo.

Criamos um contexto para passá-lo ao grafo

from langchain_core.messages import AIMessage, HumanMessagemessages = [AIMessage(f"So you said you were researching ocean mammals?", name="Bot")]messages.append(HumanMessage(f"Yes, I know about whales. But what others should I learn about?", name="Lance"))messages.append(AIMessage(f"I know about dolphins too", name="Bot"))messages.append(HumanMessage(f"What others should I learn about?", name="Lance"))messages.append(AIMessage(f"""I know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.""", name="Bot"))messages.append(HumanMessage(f"What others should I learn about?", name="Lance"))for m in messages:m.pretty_print()

================================== Ai Message ==================================Name: BotSo you said you were researching ocean mammals?================================ Human Message =================================Name: LanceYes, I know about whales. But what others should I learn about?================================== Ai Message ==================================Name: BotI know about dolphins too================================ Human Message =================================Name: LanceWhat others should I learn about?================================== Ai Message ==================================Name: BotI know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.================================ Human Message =================================Name: LanceWhat others should I learn about?

Passamos para o grafo e obtemos a saída

output = graph.invoke({'messages': messages})

--- messages (input to trim_messages) ---================================== Ai Message ==================================Name: BotI know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.================================ Human Message =================================Name: LanceWhat others should I learn about?--------------------------------------------------- trimmed messages (input to LLM) ---================================ Human Message =================================Name: LanceWhat others should I learn about?------------------------------------------------

Como podemos ver, ficamos apenas com a última mensagem, isso ocorreu porque a função de filtro retornou as duas últimas mensagens, mas a função de trim removceu a penúltima mensagem por ter mais de 100 tokens.

Vamos a ver o que temos na saída do modelo

for m in output['messages']:m.pretty_print()

================================== Ai Message ==================================Name: BotI know about sharks too. They are very dangerous, but they are also very beautiful.Sometimes have been seen in the wild, but they are not very common. In the wild, they are very dangerous, but they are also very beautiful.They live in the sea and in the ocean. They can travel long distances and can be found in many parts of the world.Often they live in groups of 20 or more, but they are not very common.They should eat a lot of food. Normally they eat a lot of fish.The white shark is the largest of the sharks and is the most dangerous.The great white shark is the most famous of the sharks and is the most dangerous.The tiger shark is the most aggressive of the sharks and is the most dangerous.The hammerhead shark is the most beautiful of the sharks and is the most dangerous.The mako shark is the fastest of the sharks and is the most dangerous.The bull shark is the most common of the sharks and is the most dangerous.================================ Human Message =================================Name: LanceWhat others should I learn about?================================== Ai Message ==================================Certainly! To provide a more tailored response, it would be helpful to know what areas or topics you're interested in. However, here’s a general list of areas that are often considered valuable for personal and professional development:1. **Technology & Digital Skills**:- Programming languages (Python, JavaScript, etc.)- Web development (HTML, CSS, React, etc.)- Data analysis and visualization (SQL, Tableau, Power BI)- Machine learning and AI- Cloud computing (AWS, Azure, Google Cloud)2. **Business & Entrepreneurship**:- Marketing (digital marketing, SEO, content marketing)- Project management- Financial literacy- Leadership and management-Startup and venture capital3. **Science & Engineering**:- Biology and genetics- Physics and materials science- Environmental science and sustainability- Robotics and automation- Aerospace engineering4. **Health & Wellness**:- Nutrition and dietetics- Mental health and psychology- Exercise science- Yoga and mindfulness- Traditional and alternative medicine5. **Arts & Humanities**:- Creative writing and storytelling- Music and sound production- Visual arts and design (graphic design, photography)- Philosophy and ethics- History and cultural studies6. **Communication & Languages**:- Public speaking and presentation skills- Conflict resolution and negotiation- Learning a new language (Spanish, Mandarin, French, etc.)- Writing and editing7. **Personal Development**:- Time management and productivity- Mindfulness and stress management- Goal setting and motivation- Personal finance and budgeting- Critical thinking and problem solving8. **Social & Environmental Impact**:- Social entrepreneurship- Community organizing and activism- Sustainable living practices- Climate change and environmental policyIf you have a specific area of interest or a particular goal in mind, feel free to share, and I can provide more detailed recommendations!================================== Ai Message ==================================

Filtramos tanto o estado que o LLM não tem contexto suficiente, mais tarde veremos uma maneira de resolver isso adicionando ao estado um resumo da conversação.

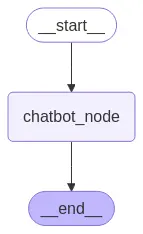

Modos de transmissão

Streaming síncrono

Neste caso, vamos receber o resultado do LLM completo assim que ele terminar de gerar o texto.

Para explicar os modos de transmissão síncrona, primeiro vamos criar um grafo básico.

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from langchain_core.messages import HumanMessage

from huggingface_hub import login

from IPython.display import Image, display

import os

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

class State(TypedDict):

messages: Annotated[list, add_messages]

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Nodes

def chat_model_node(state: State):

# Return the LLM's response in the correct state format

return {"messages": [llm.invoke(state["messages"])]}

# Create graph builder

graph_builder = StateGraph(State)

# Add nodes

graph_builder.add_node("chatbot_node", chat_model_node)

# Connecto nodes

graph_builder.add_edge(START, "chatbot_node")

graph_builder.add_edge("chatbot_node", END)

# Compile the graph

graph = graph_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Agora temos duas maneiras de obter o resultado do LLM, uma é através do modo updates e a outra através do modo values.

Enquanto

Enquanto updates nos dá cada novo resultado, values nos dá todo o histórico de resultados.

Atualizações

for chunk in graph.stream({"messages": [HumanMessage(content="hi! I'm Máximo")]}, stream_mode="updates"):print(chunk['chatbot_node']['messages'][-1].content)

Hello Máximo! It's nice to meet you. How can I assist you today? Feel free to ask me any questions or let me know if you need help with anything specific.

Valores

for chunk in graph.stream({"messages": [HumanMessage(content="hi! I'm Máximo")]}, stream_mode="values"):print(chunk['messages'][-1].content)

hi! I'm MáximoHello Máximo! It's nice to meet you. How can I assist you today? Feel free to ask me any questions or let me know if you need help with anything specific.

Streaming assíncrono

Agora vamos receber o resultado do LLM token a token. Para isso, temos que adicionar streaming=True quando criamos o LLM da HuggingFace e temos que alterar a função do nó do chatbot para que seja assíncrona.

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace

from langchain_core.messages import HumanMessage

from huggingface_hub import login

from IPython.display import Image, display

import os

import dotenv

dotenv.load_dotenv()

HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_LANGGRAPH")

class State(TypedDict):

messages: Annotated[list, add_messages]

os.environ["LANGCHAIN_TRACING_V2"] = "false" # Disable LangSmith tracing

# Create the LLM model

login(token=HUGGINGFACE_TOKEN) # Login to HuggingFace to use the model

MODEL = "Qwen/Qwen2.5-72B-Instruct"

model = HuggingFaceEndpoint(

repo_id=MODEL,

task="text-generation",

max_new_tokens=512,

do_sample=False,

repetition_penalty=1.03,

streaming=True,

)

# Create the chat model

llm = ChatHuggingFace(llm=model)

# Nodes

async def chat_model_node(state: State):

async for token in llm.astream_log(state["messages"]):

yield {"messages": [token]}

# Create graph builder

graph_builder = StateGraph(State)

# Add nodes

graph_builder.add_node("chatbot_node", chat_model_node)

# Connecto nodes

graph_builder.add_edge(START, "chatbot_node")

graph_builder.add_edge("chatbot_node", END)

# Compile the graph

graph = graph_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Como pode ser visto, a função foi criada assíncrona e convertida em um gerador, pois o yield retorna um valor e pausa a execução da função até que seja chamada novamente.

Vamos a executar o grafo de forma assíncrona e ver os tipos de eventos que são gerados.

try:

async for event in graph.astream_events({"messages": [HumanMessage(content="hi! I'm Máximo")]}, version="v2"):

print(f"event: {event}")

except Exception as e:

print(f"Error: {e}")

Como se pode ver, os tokens chegam com o evento on_chat_model_stream, então vamos capturá-lo e imprimi-lo.

try:

async for event in graph.astream_events({"messages": [HumanMessage(content="hi! I'm Máximo")]}, version="v2"):

if event["event"] == "on_chat_model_stream":

print(event["data"]["chunk"].content, end=" | ", flush=True)

except Exception as e:

pass

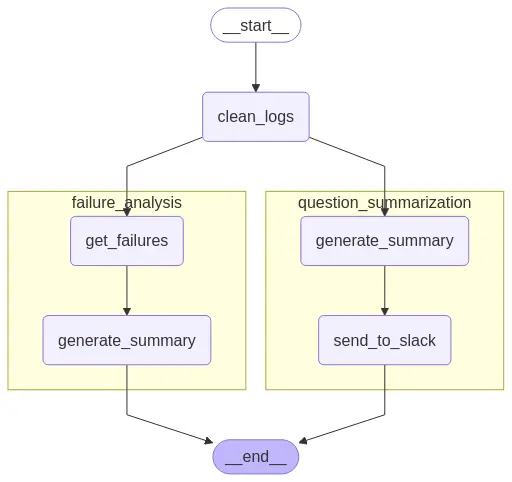

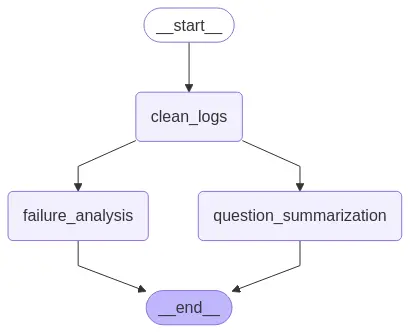

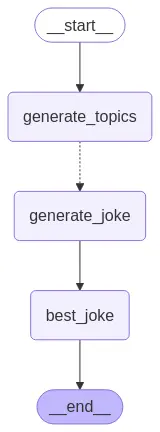

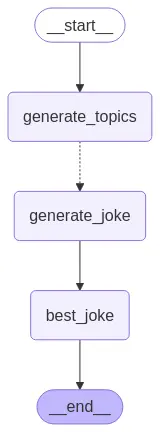

Subgrafos

Antes vimos como bifurcar um grafo de forma que os nós sejam executados em paralelo, mas suponha o caso de que agora o que queremos é que o que seja executado em paralelo sejam subgrafos. Então vamos ver como fazer isso.

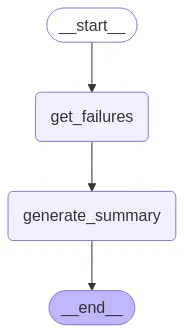

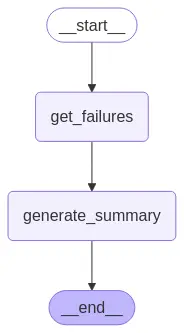

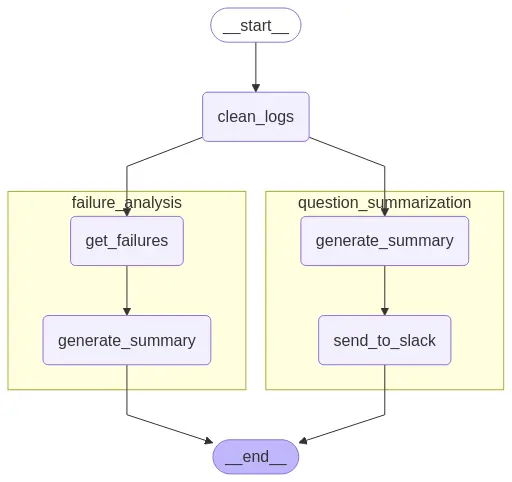

Vamos ver como fazer um grafo de gestão de logs que vai ter um subgrafo de resumo de logs e outro subgrafo de análise de erros nos logs.

Então, o que vamos fazer é primeiro definir cada um dos subgráficos separadamente e depois adicioná-los ao grafo principal.

Então, o que vamos fazer é primeiro definir cada um dos subgráficos separadamente e depois adicioná-los ao grafo principal.

Subgráfico de análise de erros em logs

Importamos as bibliotecas necessárias

from IPython.display import Image, displayfrom langgraph.graph import StateGraph, START, ENDfrom operator import addfrom typing_extensions import TypedDictfrom typing import List, Optional, Annotated

Criamos uma classe com a estrutura dos logs

# The structure of the logsclass Log(TypedDict):id: strquestion: strdocs: Optional[List]answer: strgrade: Optional[int]grader: Optional[str]feedback: Optional[str]

Criamos agora duas classes, uma com a estrutura dos erros dos logs e outra com a análise que relatará na saída

# Failure Analysis Sub-graphclass FailureAnalysisState(TypedDict):cleaned_logs: List[Log]failures: List[Log]fa_summary: strprocessed_logs: List[str]class FailureAnalysisOutputState(TypedDict):fa_summary: strprocessed_logs: List[str]

Agora criamos as funções dos nós, uma obterá os erros nos logs, para isso buscará os logs que tenham algum valor no campo grade. Outra gerará um resumo dos erros. Além disso, vamos adicionar prints para poder ver o que está acontecendo internamente.

def get_failures(state):""" Get logs that contain a failure """cleaned_logs = state["cleaned_logs"]print(f" debug get_failures: cleaned_logs: {cleaned_logs}")failures = [log for log in cleaned_logs if "grade" in log]print(f" debug get_failures: failures: {failures}")return {"failures": failures}def generate_summary(state):""" Generate summary of failures """failures = state["failures"]print(f" debug generate_summary: failures: {failures}")fa_summary = "Poor quality retrieval of documentation."print(f" debug generate_summary: fa_summary: {fa_summary}")processed_logs = [f"failure-analysis-on-log-{failure['id']}" for failure in failures]print(f" debug generate_summary: processed_logs: {processed_logs}")return {"fa_summary": fa_summary, "processed_logs": processed_logs}

Por último, criamos o grafo, adicionamos os nós e os edges e o compilamos.

fa_builder = StateGraph(FailureAnalysisState,output=FailureAnalysisOutputState)

fa_builder.add_node("get_failures", get_failures)

fa_builder.add_node("generate_summary", generate_summary)

fa_builder.add_edge(START, "get_failures")

fa_builder.add_edge("get_failures", "generate_summary")

fa_builder.add_edge("generate_summary", END)

graph = fa_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Vamos a criar um log de teste

failure_log = {"id": "1","question": "What is the meaning of life?","docs": None,"answer": "42","grade": 1,"grader": "AI","feedback": "Good job!"}

Executamos o grafo com o log de teste. Como a função get_failures pega a chave cleaned_logs do estado, temos que passar o log para o grafo na mesma chave.

graph.invoke({"cleaned_logs": [failure_log]})

Pode-se ver que ele encontrou o log de teste, pois tem um valor de 1 no campo grade e, em seguida, gerou um resumo dos erros.

Vamos a definir todo o subgráfico juntos novamente para ficar mais claro e também para remover os prints que colocamos para depuração.

from IPython.display import Image, display

from langgraph.graph import StateGraph, START, END

from operator import add

from typing_extensions import TypedDict

from typing import List, Optional, Annotated

# The structure of the logs

class Log(TypedDict):

id: str

question: str

docs: Optional[List]

answer: str

grade: Optional[int]

grader: Optional[str]

feedback: Optional[str]

# Failure clases

class FailureAnalysisState(TypedDict):

cleaned_logs: List[Log]

failures: List[Log]

fa_summary: str

processed_logs: List[str]

class FailureAnalysisOutputState(TypedDict):

fa_summary: str

processed_logs: List[str]

# Functions

def get_failures(state):

""" Get logs that contain a failure """

cleaned_logs = state["cleaned_logs"]

failures = [log for log in cleaned_logs if "grade" in log]

return {"failures": failures}

def generate_summary(state):

""" Generate summary of failures """

failures = state["failures"]

fa_summary = "Poor quality retrieval of documentation."

processed_logs = [f"failure-analysis-on-log-{failure['id']}" for failure in failures]

return {"fa_summary": fa_summary, "processed_logs": processed_logs}

# Build the graph

fa_builder = StateGraph(FailureAnalysisState,output=FailureAnalysisOutputState)

fa_builder.add_node("get_failures", get_failures)

fa_builder.add_node("generate_summary", generate_summary)

fa_builder.add_edge(START, "get_failures")

fa_builder.add_edge("get_failures", "generate_summary")

fa_builder.add_edge("generate_summary", END)

graph = fa_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Se nós o executarmos novamente, obteremos o mesmo resultado, mas sem os prints.

graph.invoke({"cleaned_logs": [failure_log]})

{opening_brace}'fa_summary': 'Poor quality retrieval of documentation.','processed_logs': ['failure-analysis-on-log-1']{closing_brace}

Subgrafo de resumo de logs

Agora criamos o subgrafo de resumo de logs. Neste caso, não é necessário recriar a classe com a estrutura dos logs, então criamos as classes com a estrutura para os resumos dos logs e com a estrutura da saída.

# Summarization subgraphclass QuestionSummarizationState(TypedDict):cleaned_logs: List[Log]qs_summary: strreport: strprocessed_logs: List[str]class QuestionSummarizationOutputState(TypedDict):report: strprocessed_logs: List[str]

Agora definimos as funções dos nós, uma gerará o resumo dos logs e outra "enviará o resumo para o Slack".

def generate_summary(state):cleaned_logs = state["cleaned_logs"]print(f" debug generate_summary: cleaned_logs: {cleaned_logs}")summary = "Questions focused on ..."print(f" debug generate_summary: summary: {summary}")processed_logs = [f"summary-on-log-{log['id']}" for log in cleaned_logs]print(f" debug generate_summary: processed_logs: {processed_logs}")return {opening_brace}"qs_summary": summary, "processed_logs": processed_logs{closing_brace}def send_to_slack(state):qs_summary = state["qs_summary"]print(f" debug send_to_slack: qs_summary: {qs_summary}")report = "foo bar baz"print(f" debug send_to_slack: report: {report}")return {opening_brace}"report": report{closing_brace}

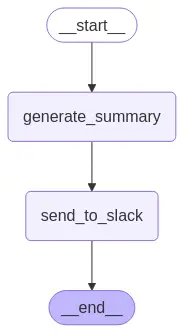

Por último, criamos o grafo, adicionamos os nós e as edges e o compilamos.

# Build the graph

qs_builder = StateGraph(QuestionSummarizationState,output=QuestionSummarizationOutputState)

qs_builder.add_node("generate_summary", generate_summary)

qs_builder.add_node("send_to_slack", send_to_slack)

qs_builder.add_edge(START, "generate_summary")

qs_builder.add_edge("generate_summary", "send_to_slack")

qs_builder.add_edge("send_to_slack", END)

graph = qs_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

Voltamos a testar com o log que criamos anteriormente.

graph.invoke({"cleaned_logs": [failure_log]})

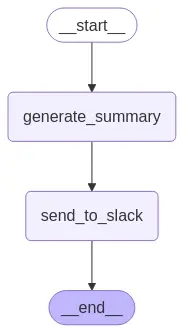

Reescrevemos o subgrafo, tudo junto para ver com maior clareza e sem os prints.

# Summarization clases

class QuestionSummarizationState(TypedDict):

cleaned_logs: List[Log]

qs_summary: str

report: str

processed_logs: List[str]

class QuestionSummarizationOutputState(TypedDict):

report: str

processed_logs: List[str]

# Functions

def generate_summary(state):

cleaned_logs = state["cleaned_logs"]

summary = "Questions focused on ..."

processed_logs = [f"summary-on-log-{log['id']}" for log in cleaned_logs]

return {"qs_summary": summary, "processed_logs": processed_logs}

def send_to_slack(state):

qs_summary = state["qs_summary"]

report = "foo bar baz"

return {"report": report}

# Build the graph

qs_builder = StateGraph(QuestionSummarizationState,output=QuestionSummarizationOutputState)

qs_builder.add_node("generate_summary", generate_summary)

qs_builder.add_node("send_to_slack", send_to_slack)

qs_builder.add_edge(START, "generate_summary")

qs_builder.add_edge("generate_summary", "send_to_slack")

qs_builder.add_edge("send_to_slack", END)

graph = qs_builder.compile()