Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks

Paper


[Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks](https://arxiv.org/abs/2311.06242) is the Florence-2 paper.

Paper summary

Florence-2 is a vision foundation model with a unified, prompt-based representation for a variety of vision and vision-language tasks.

Existing large vision models are good at transfer learning, but they struggle to perform a variety of tasks from simple instructions. Florence-2 is designed to take text prompts as task instructions and to generate its results as text, whether the task is captioning, object detection, grounding (matching words or phrases in natural language to specific regions of an image), or segmentation.

To train the model, the authors built the FLD-5B dataset, which contains 5.4 billion comprehensive visual annotations on 126 million images. The dataset was produced by two efficient processing modules.

The first module uses specialized models to annotate images collaboratively and autonomously, instead of relying on a single manual annotation pass. Several models work together to reach a consensus, reminiscent of the wisdom-of-crowds idea, which yields a more reliable and less biased understanding of each image.

The second module iteratively refines and filters these automated annotations using well-trained foundation models.

The model can perform several tasks, such as object detection, captioning, and grounding, all within a single model. The task is selected through the text prompt.

The goal is a versatile vision foundation model. To that end, the training method incorporates three distinct learning objectives, each addressing a different level of granularity and semantic understanding:

- **Image-level understanding tasks** capture high-level semantics and foster a comprehensive understanding of images through linguistic descriptions. They let the model grasp the overall context of an image and capture semantic relationships and contextual nuances in the language domain. Example tasks include image classification, captioning, and visual question answering.

- **Region/pixel-level recognition tasks** facilitate detailed localization of objects and entities within images, capturing the relationships between objects and their spatial context. Tasks include object detection, segmentation, and referring expression comprehension.

- **Fine-grained visual-semantic alignment tasks** require a fine-grained understanding of both text and image. They involve locating the image regions that correspond to text phrases, such as objects, attributes, or relations. These tasks test the ability to capture the local details of visual entities and their semantic contexts, as well as the interactions between textual and visual elements.

By combining these three learning objectives in a multitask learning framework, the model learns to handle different levels of detail and semantic understanding.

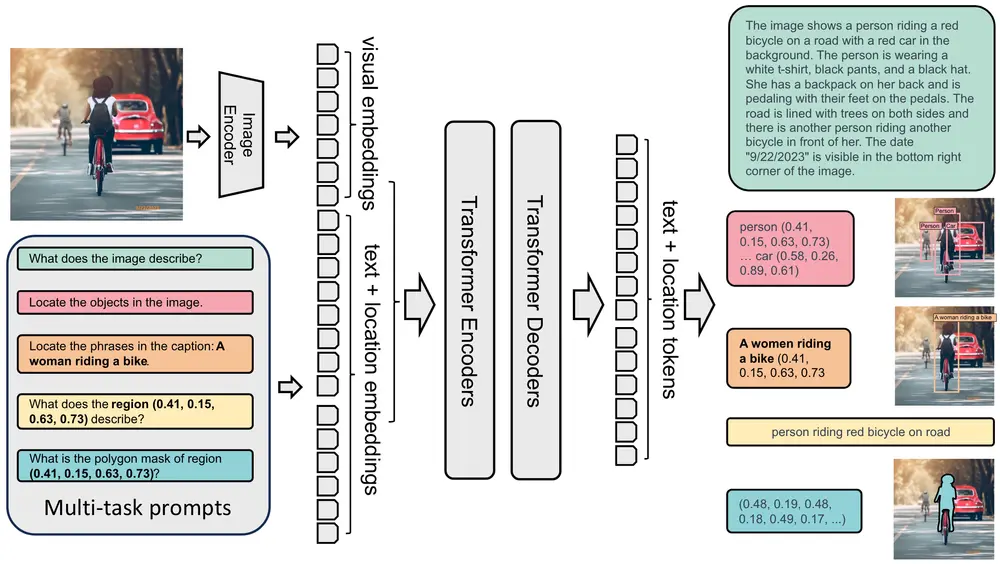

Architecture

The model uses a sequence-to-sequence (seq2seq) architecture that integrates an image encoder and a multimodal encoder-decoder.

Because the model receives both images and prompts, it has an image encoder that produces the image embeddings, while the prompts go through a tokenizer and a text-and-location embedding layer. The image and prompt embeddings are concatenated and passed through a transformer, which produces the output text and location tokens. Finally, these tokens go through a text-and-location decoder to obtain the results.

The transformer encoder-decoder that handles text plus locations is called the multimodal encoder-decoder.

Extending the tokenizer vocabulary with location tokens lets the model process region-specific information in a unified learning format, that is, with a single model. This eliminates the need to design task-specific heads and allows a more data-centric approach.
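To make the idea of location tokens concrete, here is a minimal sketch of how a pixel bounding box could be turned into `<loc_k>` tokens. The function name, the use of 1000 bins per axis, and the floor-based quantization are assumptions made for illustration; they are not taken from the paper text summarized here.

```python
def box_to_loc_tokens(box, image_size, bins=1000):
    """Quantize a pixel box (x1, y1, x2, y2) into '<loc_k>' tokens.

    bins=1000 and floor quantization are assumptions of this sketch.
    """
    width, height = image_size
    sizes = (width, height, width, height)
    tokens = []
    for value, size in zip(box, sizes):
        # Map the pixel coordinate to a bin index in [0, bins - 1].
        index = int(value * bins / size)
        index = max(0, min(bins - 1, index))
        tokens.append(f"<loc_{index}>")
    return "".join(tokens)


# Hypothetical box on a hypothetical 1000x500 image.
print(box_to_loc_tokens((100, 50, 500, 250), image_size=(1000, 500)))
```

Because boxes become ordinary tokens, the same text decoder can emit them without a dedicated detection head.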

They created two models, Florence-2 Base and Florence-2 Large. Florence-2 Base has 232M parameters and Florence-2 Large has 771M parameters. Their sizes are as follows:

| Model | DaViT dimensions | DaViT blocks | DaViT heads/groups | DaViT #params | Transformer encoder layers | Transformer decoder layers | Transformer dimensions | Transformer #params |
|---|---|---|---|---|---|---|---|---|
| Florence-2-B | [128, 256, 512, 1024] | [1, 1, 9, 1] | [4, 8, 16, 32] | 90M | 6 | 6 | 768 | 140M |
| Florence-2-L | [256, 512, 1024, 2048] | [1, 1, 9, 1] | [8, 16, 32, 64] | 360M | 12 | 12 | 1024 | 410M |
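As a quick sanity check, the rounded per-component counts in the table can be added up. The sketch below only sums the numbers shown above; the totals come out at roughly 0.23B and 0.77B, which matches the `#params` column used in the result tables later on.

```python
# Parameter counts (in millions) read from the table above.
components = {
    "Florence-2-B": {"image_encoder": 90, "encoder_decoder": 140},
    "Florence-2-L": {"image_encoder": 360, "encoder_decoder": 410},
}

totals = {name: sum(parts.values()) for name, parts in components.items()}
for name, total in totals.items():
    print(f"{name}: ~{total}M parameters ({total / 1000:.2f}B)")
```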

Vision encoder

They used DaViT as the vision encoder. It turns an input image into flattened visual embeddings of shape Nv×Dv, where Nv and Dv are the number of embeddings and the dimension of each visual embedding, respectively.

Multimodal encoder-decoder

They used a standard transformer architecture to process the visual and language embeddings.
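The flattening and concatenation steps can be pictured with plain Python lists. This is only a shape-level sketch: the toy sizes are made up, the helper names are hypothetical, and it assumes the visual and prompt embeddings already share the same dimension.

```python
def flatten_feature_map(feature_map):
    """Turn an H x W grid of D-dim vectors into a list of H*W vectors."""
    return [vector for row in feature_map for vector in row]


def build_encoder_input(feature_map, prompt_embeddings):
    """Concatenate visual and prompt embeddings along the sequence axis."""
    visual = flatten_feature_map(feature_map)
    dims = {len(v) for v in visual + prompt_embeddings}
    if len(dims) != 1:
        raise ValueError("visual and prompt embeddings must share one dimension")
    return visual + prompt_embeddings


# Toy sizes (assumptions): a 2x3 feature map, 4-dim embeddings, 2 prompt tokens.
feature_map = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]
prompt_embeddings = [[1.0] * 4 for _ in range(2)]
sequence = build_encoder_input(feature_map, prompt_embeddings)
print(len(sequence), len(sequence[0]))
```

Here Nv is 6 (2×3 positions) and the transformer input has 6 + 2 = 8 positions.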

Optimization objective

Given an input x (the combination of image and prompt) and the target y, they used standard language modeling with a cross-entropy loss for all tasks.
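For a single example, this objective is the negative log-likelihood of the target tokens. The sketch below computes it from per-token probabilities; the probabilities are invented for illustration, and a real implementation would work on logits over the whole vocabulary.

```python
import math


def sequence_cross_entropy(token_probs):
    """Negative log-likelihood of the target tokens.

    token_probs[i] is the probability the model assigns to the correct
    target token y_i given x and the previous target tokens.
    """
    return -sum(math.log(p) for p in token_probs)


# Hypothetical probabilities for a three-token target sequence.
loss = sequence_cross_entropy([0.5, 0.25, 1.0])
print(round(loss, 4))
```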

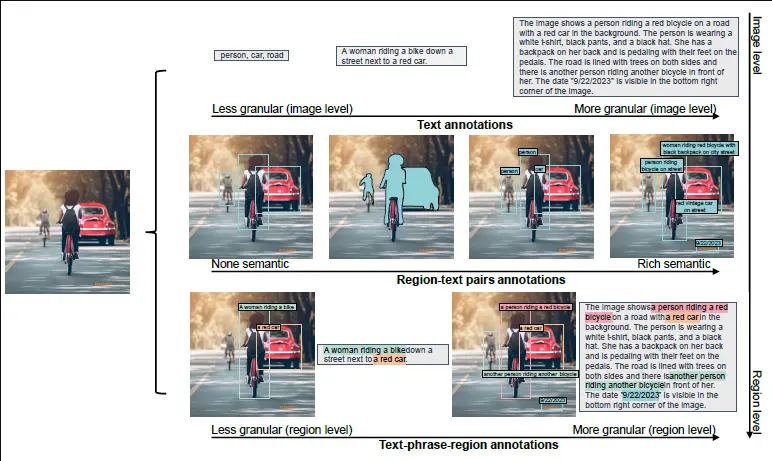

Dataset

They built the FLD-5B dataset, which includes 126 million images, 500 million text annotations, 1.3 billion text-region annotations, and 3.6 billion text-phrase-region annotations across different tasks.
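The figures quoted for FLD-5B are consistent with each other, as a few lines of arithmetic show. The sketch uses only the counts given above and derives the total and the average number of annotations per image.

```python
# Counts quoted in the text above, in millions.
images = 126
annotations = {"text": 500, "text-region": 1300, "text-phrase-region": 3600}

total = sum(annotations.values())
print(f"total annotations: {total / 1000:.1f}B")
print(f"average per image: {total / images:.1f}")
```

The three categories add up to 5.4 billion annotations, or roughly 43 per image.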

Image collection

To collect the images, they drew on the ImageNet-22k, Objects365, Open Images, Conceptual Captions, and LAION datasets.

Image annotation

The main goal is to generate annotations that support effective learning across multiple tasks. To that end, they defined three annotation categories: text, text-region pairs, and text-phrase-region triplets.

The data annotation workflow has three phases: (1) initial annotation with specialist models, (2) data filtering to correct errors and remove irrelevant annotations, and (3) an iterative process for data refinement.

**Initial annotation with specialist models**. They used synthetic labels obtained from specialist models. These specialist models are a combination of offline models trained on a variety of publicly available datasets and online services hosted on cloud platforms, each designed to excel at its own annotation type. Some image datasets already contain partial annotations. For example, Objects365 already includes human-annotated bounding boxes and their categories, which serve as text-region annotations. In such cases, they merged the pre-existing annotations with the synthetic labels generated by the specialist models.

**Data filtering and enhancement**. The initial annotations obtained from the specialist models are susceptible to noise and inaccuracy, so they implemented a filtering process. It focuses on two kinds of data in the annotations: text and region data. For textual annotations, they built a parsing tool based on spaCy to extract objects, attributes, and actions. They filtered out texts containing too many objects, because these tend to introduce noise and may not accurately reflect the actual image content. They also assessed the complexity of actions and objects by measuring the degree of their node in the dependency parse tree, and kept texts with a certain minimum complexity to ensure rich visual concepts. For region annotations, they removed noisy boxes whose confidence score fell below a threshold, and applied non-maximum suppression to reduce redundant or overlapping bounding boxes.
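The region filtering step can be sketched as a confidence threshold followed by greedy non-maximum suppression. This is a generic textbook version, not the authors' pipeline; the function names and both threshold values are assumptions chosen for the example.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_boxes(boxes, scores, min_score=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes, then apply greedy NMS.

    Both thresholds are illustrative assumptions.
    Returns the indices of the boxes that are kept.
    """
    candidates = [i for i, s in enumerate(scores) if s >= min_score]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.2]
print(filter_boxes(boxes, scores))
```

In this toy input the second box overlaps the first too heavily and the last box is below the confidence threshold, so only the first and third boxes survive.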

**Iterative data refinement**. Using the initial filtered annotations, they trained a multitask model that processes sequences of data.

Training

- For training they used AdamW as the optimizer, a variant of Adam that applies decoupled weight decay to the weights instead of adding an L2 penalty to the loss.

- They used cosine learning rate decay. The maximum learning rate was set to 1e-4, with a linear warmup over the first 5,000 steps.

- They used DeepSpeed and mixed precision to speed up training.

- They used a batch size of 2048 for Florence-2 Base and 3072 for Florence-2 Large.

- They first trained on images of size 384x384 using all the images in the dataset, and then ran a high-resolution tuning stage on 768x768 images, with 500 million images for the base model and 100 million images for the large model.
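The learning rate schedule from the list above (linear warmup to 1e-4 over 5,000 steps, then cosine decay) can be written as a small function. The total number of steps and the decay down to exactly zero are assumptions made for this sketch.

```python
import math


def learning_rate(step, total_steps, peak_lr=1e-4, warmup_steps=5000):
    """Linear warmup to peak_lr, then cosine decay to zero (assumed floor)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * min(1.0, progress)))


# total_steps is a made-up value for illustration.
for step in (0, 2500, 5000, 52500, 100000):
    print(step, learning_rate(step, total_steps=100000))
```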

Results

Zero-shot evaluation

For zero-shot tasks, they obtained the following results:

| Method | #params | COCO Cap. test (CIDEr) | NoCaps val (CIDEr) | TextCaps val (CIDEr) | COCO Det. val2017 (mAP) | Flickr30k test (R@1) | Refcoco val (Acc.) | Refcoco test-A (Acc.) | Refcoco test-B (Acc.) | Refcoco+ val (Acc.) | Refcoco+ test-A (Acc.) | Refcoco+ test-B (Acc.) | Refcocog val (Acc.) | Refcocog test (Acc.) | Refcoco RES val (mIoU) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Flamingo [2] | 80B | 84.3 | - | - | - | - | - | - | - | - | - | - | - | - | - |
| Kosmos-2 [60] | 1.6B | - | - | - | - | 78.7 | 52.3 | 57.4 | 47.3 | 45.5 | 50.7 | 42.2 | 60.6 | 61.7 | - |
| Florence-2-B | 0.23B | 133.0 | 118.7 | 70.1 | 34.7 | 83.6 | 53.9 | 58.4 | 49.7 | 51.5 | 56.4 | 47.9 | 66.3 | 65.1 | 34.6 |
| Florence-2-L | 0.77B | 135.6 | 120.8 | 72.8 | 37.5 | 84.4 | 56.3 | 61.6 | 51.4 | 53.6 | 57.9 | 49.9 | 68.0 | 67.0 | 35.8 |

As the table shows, both Florence-2 Base and Florence-2 Large outperform Kosmos-2 and Flamingo, models that have between roughly twice and several hundred times as many parameters.
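The size gap can be quantified directly from the `#params` column. The sketch below divides the baseline sizes by the Florence-2 sizes, using only the values in the table above.

```python
import math

# Parameter counts in billions, taken from the table above.
params = {"Flamingo": 80, "Kosmos-2": 1.6, "Florence-2-B": 0.23, "Florence-2-L": 0.77}

for baseline in ("Flamingo", "Kosmos-2"):
    for ours in ("Florence-2-B", "Florence-2-L"):
        ratio = params[baseline] / params[ours]
        # log10 of the ratio gives the gap in orders of magnitude.
        print(f"{baseline} / {ours}: {ratio:.1f}x (10^{math.log10(ratio):.1f})")
```

Flamingo is about two orders of magnitude larger than either Florence-2 model, while Kosmos-2 is larger by a single-digit factor.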

Generalist model with public supervised data

They fine-tuned the Florence-2 models on a collection of public datasets covering image-level, region-level, and pixel-level tasks. The results are shown in the following tables.

Performance on captioning and VQA tasks:

| Method | #params | COCO Caption Karpathy test (CIDEr) | NoCaps val (CIDEr) | TextCaps val (CIDEr) | VQAv2 test-dev (Acc) | TextVQA test-dev (Acc) | VizWiz VQA test-dev (Acc) |
|---|---|---|---|---|---|---|---|
| **Specialist Models** | | | | | | | |
| CoCa [92] | 2.1B | 143.6 | 122.4 | - | 82.3 | - | - |
| BLIP-2 [44] | 7.8B | 144.5 | 121.6 | - | 82.2 | - | - |
| GIT2 [78] | 5.1B | 145 | 126.9 | 148.6 | 81.7 | 67.3 | 71.0 |
| Flamingo [2] | 80B | 138.1 | - | - | 82.0 | 54.1 | 65.7 |
| PaLI [15] | 17B | 149.1 | 127.0 | 160.0 | 84.3 | 58.8 / 73.1△ | 71.6 / 74.4△ |
| PaLI-X [12] | 55B | 149.2 | 126.3 | 147 / 163.7 | 86.0 | 71.4 / 80.8△ | 70.9 / 74.6△ |
| **Generalist Models** | | | | | | | |
| Unified-IO [55] | 2.9B | - | 100 | - | 77.9 | - | 57.4 |
| Florence-2-B | 0.23B | 140.0 | 116.7 | 143.9 | 79.7 | 63.6 | 63.6 |
| Florence-2-L | 0.77B | 143.3 | 124.9 | 151.1 | 81.7 | 73.5 | 72.6 |

△ indicates that external OCR was used as input.

Performance on region-level and pixel-level tasks:

| Method | #params | COCO Det. val2017 (mAP) | Flickr30k test (R@1) | Refcoco val (Acc.) | Refcoco test-A (Acc.) | Refcoco test-B (Acc.) | Refcoco+ val (Acc.) | Refcoco+ test-A (Acc.) | Refcoco+ test-B (Acc.) | Refcocog val (Acc.) | Refcocog test (Acc.) | Refcoco RES val (mIoU) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| **Specialist Models** | | | | | | | | | | | | |
| SeqTR [99] | - | - | - | 83.7 | 86.5 | 81.2 | 71.5 | 76.3 | 64.9 | 74.9 | 74.2 | - |
| PolyFormer [49] | - | - | - | 90.4 | 92.9 | 87.2 | 85.0 | 89.8 | 78.0 | 85.8 | 85.9 | 76.9 |
| UNINEXT [84] | 0.74B | 60.6 | - | 92.6 | 94.3 | 91.5 | 85.2 | 89.6 | 79.8 | 88.7 | 89.4 | - |
| Ferret [90] | 13B | - | - | 89.5 | 92.4 | 84.4 | 82.8 | 88.1 | 75.2 | 85.8 | 86.3 | - |
| **Generalist Models** | | | | | | | | | | | | |
| UniTAB [88] | - | - | - | 88.6 | 91.1 | 83.8 | 81.0 | 85.4 | 71.6 | 84.6 | 84.7 | - |
| Florence-2-B | 0.23B | 41.4 | 84.0 | 92.6 | 94.8 | 91.5 | 86.8 | 91.7 | 82.2 | 89.8 | 82.2 | 78.0 |
| Florence-2-L | 0.77B | 43.4 | 85.2 | 93.4 | 95.3 | 92.0 | 88.3 | 92.9 | 83.6 | 91.2 | 91.7 | 80.5 |

COCO object detection and instance segmentation results

| Backbone | Pretrain | Mask R-CNN APb | Mask R-CNN APm | DINO AP |
|---|---|---|---|---|
| ViT-B | MAE, IN-1k | 51.6 | 45.9 | 55.0 |
| Swin-B | Sup IN-1k | 50.2 | - | 53.4 |
| Swin-B | SimMIM [83] | 52.3 | - | - |
| FocalAtt-B | Sup IN-1k | 49.0 | 43.7 | - |
| FocalNet-B | Sup IN-1k | 49.8 | 44.1 | 54.4 |
| ConvNeXt v1-B | Sup IN-1k | 50.3 | 44.9 | 52.6 |
| ConvNeXt v2-B | Sup IN-1k | 51.0 | 45.6 | - |
| ConvNeXt v2-B | FCMAE | 52.9 | 46.6 | - |
| DaViT-B | Florence-2 | 53.6 | 46.4 | 59.2 |

COCO object detection using Mask R-CNN and DINO

| Pretrain | Frozen stages | Mask R-CNN APb | Mask R-CNN APm | DINO AP | UperNet mIoU |
|---|---|---|---|---|---|
| Sup IN1k | n/a | 46.7 | 42.0 | 53.7 | 49 |
| UniCL [87] | n/a | 50.4 | 45.0 | 57.3 | 53.6 |
| Florence-2 | n/a | 53.6 | 46.4 | 59.2 | 54.9 |
| Florence-2 | [1] | 53.6 | 46.3 | 59.2 | 54.1 |
| Florence-2 | [1, 2] | 53.3 | 46.1 | 59.0 | 54.4 |
| Florence-2 | [1, 2, 3] | 49.5 | 42.9 | 56.7 | 49.6 |
| Florence-2 | [1, 2, 3, 4] | 48.3 | 44.5 | 56.1 | 45.9 |

ADE20K semantic segmentation results

| Backbone | Pretrain | mIoU | ms-mIoU |
|---|---|---|---|
| ViT-B [24] | Sup IN-1k | 47.4 | - |
| ViT-B [24] | MAE IN-1k | 48.1 | - |
| ViT-B [4] | BEiT | 53.6 | 54.1 |
| ViT-B [59] | BEiTv2 IN-1k | 53.1 | - |
| ViT-B [59] | BEiTv2 IN-22k | 53.5 | - |
| Swin-B [51] | Sup IN-1k | 48.1 | 49.7 |
| Swin-B [51] | Sup IN-22k | - | 51.8 |
| Swin-B [51] | SimMIM [83] | - | 52.8 |
| FocalAtt-B [86] | Sup IN-1k | 49.0 | 50.5 |
| FocalNet-B [85] | Sup IN-1k | 50.5 | 51.4 |
| ConvNeXt v1-B [52] | Sup IN-1k | - | 49.9 |
| ConvNeXt v2-B [81] | Sup IN-1k | - | 50.5 |
| ConvNeXt v2-B [81] | FCMAE | - | 52.1 |
| DaViT-B [20] | Florence-2 | 54.9 | 55.5 |

Florence-2 is the best model on some tasks but not on others. Even so, it is on par with the best models for each task while having up to one or two orders of magnitude fewer parameters than the other models.

Available models

In Microsoft's Florence-2 model collection on Hugging Face you can find Florence-2-large, Florence-2-base, Florence-2-large-ft, and Florence-2-base-ft.

We have already seen the difference between large and base: large has 771M parameters and base has 232M. The models with the -ft suffix have been fine-tuned on some tasks.

Tasks defined by the prompt

As we have seen, Florence-2 takes an image and a prompt, and the prompt determines which task the model performs. Here are the tasks that can be selected with a prompt:

| Task | Annotation Type | Prompt Input | Output |
|---|---|---|---|
| Caption | Text | Image, text | Text |
| Detailed caption | Text | Image, text | Text |
| More detailed caption | Text | Image, text | Text |
| Region proposal | Region | Image, text | Region |
| Object detection | Region-Text | Image, text | Text, region |
| Dense region caption | Region-Text | Image, text | Text, region |
| Phrase grounding | Text-Phrase-Region | Image, text | Text, region |
| Referring expression segmentation | Region-Text | Image, text | Text, region |
| Region to segmentation | Region-Text | Image, text | Text, region |
| Open vocabulary detection | Region-Text | Image, text | Text, region |
| Region to category | Region-Text | Image, text, region | Text |
| Region to description | Region-Text | Image, text, region | Text |
| OCR | Text | Image, text | Text |
| OCR with region | Region-Text | Image, text | Text, region |
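In practice each task is triggered by a special task token, optionally followed by extra text. The sketch below collects only the task tokens used in the walkthrough that follows and checks whether extra text is expected; the `build_prompt` helper and the two set names are hypothetical conveniences, not part of the model's API.

```python
# Task tokens used in this walkthrough, grouped by whether they take extra text.
NO_TEXT_TASKS = {
    "<CAPTION>", "<DETAILED_CAPTION>", "<MORE_DETAILED_CAPTION>",
    "<REGION_PROPOSAL>", "<OD>", "<DENSE_REGION_CAPTION>",
}
TEXT_TASKS = {
    "<CAPTION_TO_PHRASE_GROUNDING>", "<REFERRING_EXPRESSION_SEGMENTATION>",
    "<REGION_TO_SEGMENTATION>", "<OPEN_VOCABULARY_DETECTION>",
}


def build_prompt(task_prompt, text_input=None):
    """Validate the task token and append the extra text when required."""
    if task_prompt in NO_TEXT_TASKS:
        if text_input is not None:
            raise ValueError(f"{task_prompt} takes no additional text")
        return task_prompt
    if task_prompt in TEXT_TASKS:
        if not text_input:
            raise ValueError(f"{task_prompt} requires additional text")
        return task_prompt + text_input
    raise ValueError(f"unknown task prompt: {task_prompt}")


print(build_prompt("<OD>"))
print(build_prompt("<CAPTION_TO_PHRASE_GROUNDING>", "a green car"))
```

Failing early on a mismatched token is cheaper than discovering the problem after a full generation pass.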

Using Florence-2 large

First, we import the libraries

    from transformers import AutoProcessor, AutoModelForCausalLM
    from PIL import Image
    import requests
    import copy
    import time

Criamos o modelo e o processador

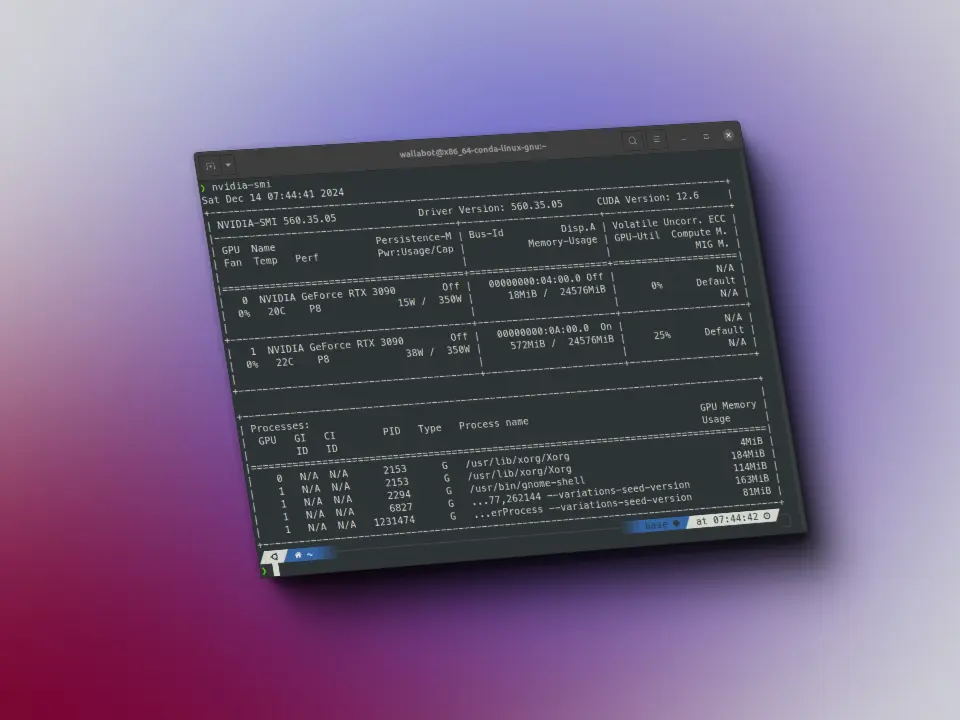

from transformers import AutoProcessor, AutoModelForCausalLMfrom PIL import Imageimport requestsimport copyimport timemodel_id = 'microsoft/Florence-2-large'model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().to('cuda')processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

Criamos uma função para criar o prompt

from transformers import AutoProcessor, AutoModelForCausalLMfrom PIL import Imageimport requestsimport copyimport timemodel_id = 'microsoft/Florence-2-large'model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().to('cuda')processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)def create_prompt(task_prompt, text_input=None):if text_input is None:prompt = task_promptelse:prompt = task_prompt + text_inputreturn prompt

Agora, uma função para gerar a saída

from transformers import AutoProcessor, AutoModelForCausalLMfrom PIL import Imageimport requestsimport copyimport timemodel_id = 'microsoft/Florence-2-large'model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().to('cuda')processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)def create_prompt(task_prompt, text_input=None):if text_input is None:prompt = task_promptelse:prompt = task_prompt + text_inputreturn promptdef generate_answer(task_prompt, text_input=None):# Create promptprompt = create_prompt(task_prompt, text_input)# Get inputsinputs = processor(text=prompt, images=image, return_tensors="pt").to('cuda')# Get outputsgenerated_ids = model.generate(input_ids=inputs["input_ids"],pixel_values=inputs["pixel_values"],max_new_tokens=1024,early_stopping=False,do_sample=False,num_beams=3,)# Decode the generated IDsgenerated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]# Post-process the generated textparsed_answer = processor.post_process_generation(generated_text,task=task_prompt,image_size=(image.width, image.height))return parsed_answer

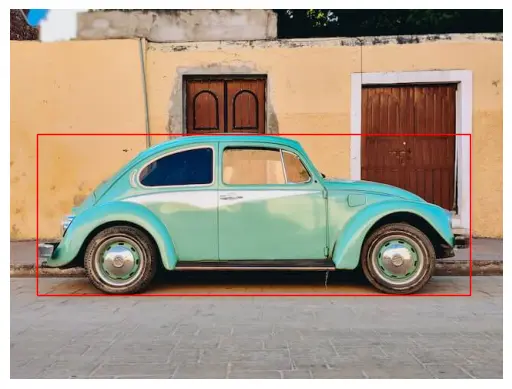

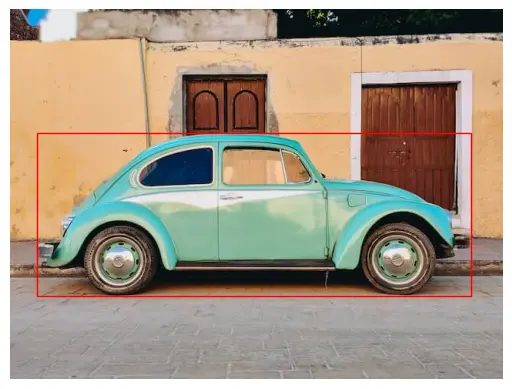

We load an image on which to test the model.

    url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"
    image = Image.open(requests.get(url, stream=True).raw)
    image

Tasks without an additional prompt

Caption

    task_prompt = '<CAPTION>'
    t0 = time.time()
    answer = generate_answer(task_prompt)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    answer

    task_prompt = '<DETAILED_CAPTION>'
    t0 = time.time()
    answer = generate_answer(task_prompt)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    answer

    task_prompt = '<MORE_DETAILED_CAPTION>'
    t0 = time.time()
    answer = generate_answer(task_prompt)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    answer
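Every cell repeats the same `t0`/`t1` timing pattern. One possible way to factor it out is a small context manager, sketched below; the `timed` helper is hypothetical and the workload inside the `with` block is a stand-in for a call such as `generate_answer(task_prompt)`.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label="Time taken"):
    """Print the wall-clock time spent inside the with-block, in ms."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(f"{label}: {elapsed_ms:.2f} ms")


# Stand-in workload; in the notebook this would be generate_answer(task_prompt).
with timed():
    total = sum(range(100_000))
```

`time.perf_counter()` is used here instead of `time.time()` because it is a monotonic clock intended for measuring short durations.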

Proposta da região

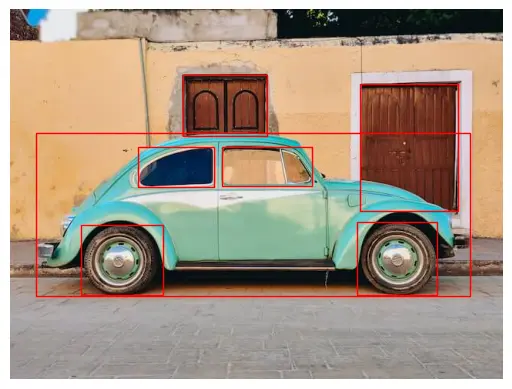

É uma detecção de objetos, mas, nesse caso, não retorna as classes dos objetos.

Como vamos obter caixas delimitadoras, primeiro criaremos uma função para pintá-las na imagem.

from transformers import AutoProcessor, AutoModelForCausalLMfrom PIL import Imageimport requestsimport copyimport timemodel_id = 'microsoft/Florence-2-large'model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().to('cuda')processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)def create_prompt(task_prompt, text_input=None):if text_input is None:prompt = task_promptelse:prompt = task_prompt + text_inputreturn promptdef generate_answer(task_prompt, text_input=None):# Create promptprompt = create_prompt(task_prompt, text_input)# Get inputsinputs = processor(text=prompt, images=image, return_tensors="pt").to('cuda')# Get outputsgenerated_ids = model.generate(input_ids=inputs["input_ids"],pixel_values=inputs["pixel_values"],max_new_tokens=1024,early_stopping=False,do_sample=False,num_beams=3,)# Decode the generated IDsgenerated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]# Post-process the generated textparsed_answer = processor.post_process_generation(generated_text,task=task_prompt,image_size=(image.width, image.height))return parsed_answerurl = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"image = Image.open(requests.get(url, stream=True).raw)imagetask_prompt = '<CAPTION>'t0 = time.time()answer = generate_answer(task_prompt)t1 = time.time()print(f"Time taken: {(t1-t0)*1000:.2f} ms")answertask_prompt = '<DETAILED_CAPTION>'t0 = time.time()answer = generate_answer(task_prompt)t1 = time.time()print(f"Time taken: {(t1-t0)*1000:.2f} ms")answertask_prompt = '<MORE_DETAILED_CAPTION>'t0 = time.time()answer = generate_answer(task_prompt)t1 = time.time()print(f"Time taken: {(t1-t0)*1000:.2f} ms")answerimport matplotlib.pyplot as pltimport matplotlib.patches as patchesdef plot_bbox(image, data):# Create a figure and axesfig, ax = plt.subplots()# Display the imageax.imshow(image)# Plot each bounding boxfor bbox, label in 
zip(data['bboxes'], data['labels']):# Unpack the bounding box coordinatesx1, y1, x2, y2 = bbox# Create a Rectangle patchrect = patches.Rectangle((x1, y1), x2-x1, y2-y1, linewidth=1, edgecolor='r', facecolor='none')# Add the rectangle to the Axesax.add_patch(rect)# Annotate the labelplt.text(x1, y1, label, color='white', fontsize=8, bbox=dict(facecolor='red', alpha=0.5))# Remove the axis ticks and labelsax.axis('off')# Show the plotplt.show()

    task_prompt = '<REGION_PROPOSAL>'
    t0 = time.time()
    answer = generate_answer(task_prompt)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    print(answer)
    plot_bbox(image, answer[task_prompt])

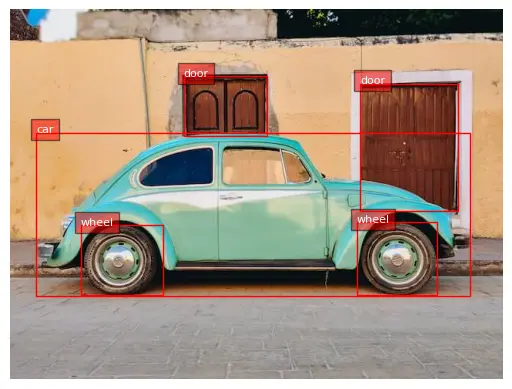

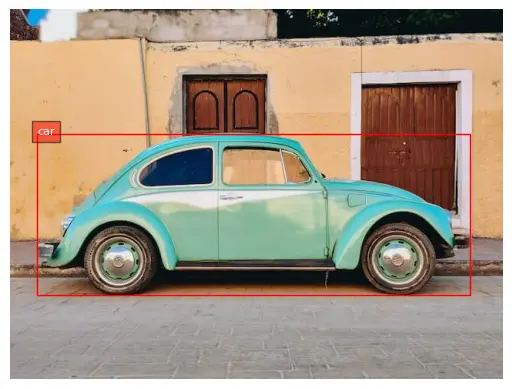

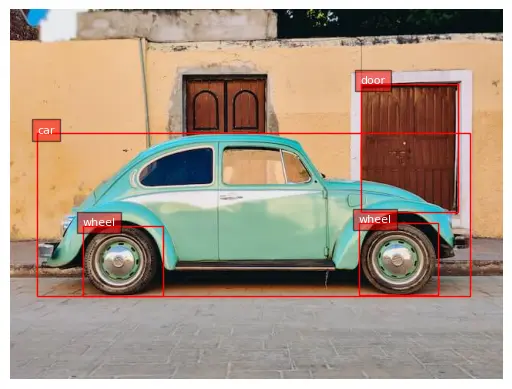

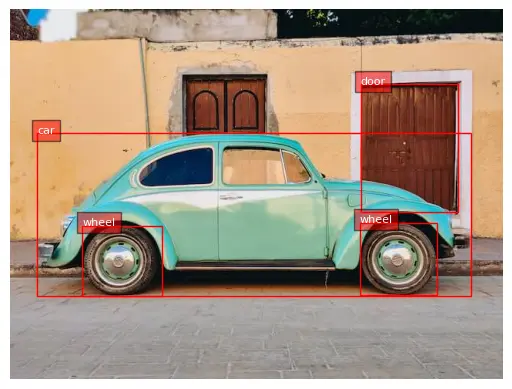

Object detection

In this case, the model does return the object classes

    task_prompt = '<OD>'
    t0 = time.time()
    answer = generate_answer(task_prompt)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    print(answer)
    plot_bbox(image, answer[task_prompt])

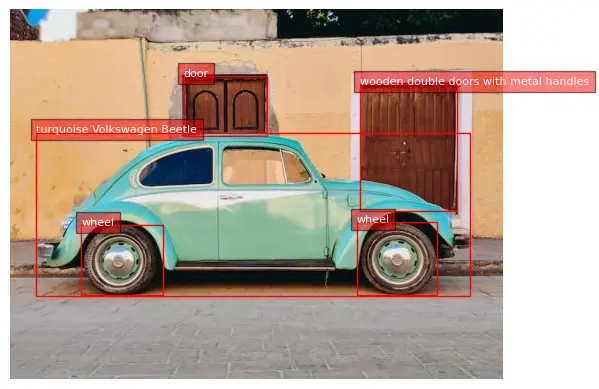

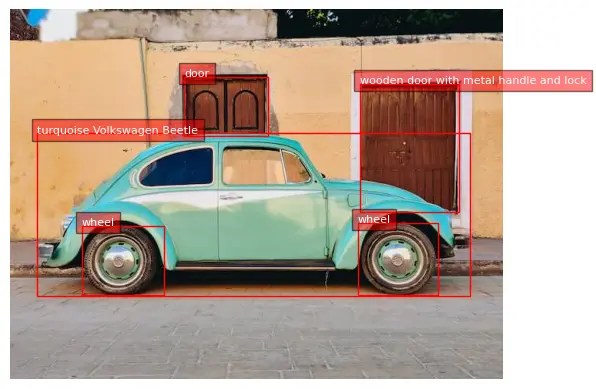

Dense region caption

    task_prompt = '<DENSE_REGION_CAPTION>'
    t0 = time.time()
    answer = generate_answer(task_prompt)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    print(answer)
    plot_bbox(image, answer[task_prompt])

Tasks with additional prompts

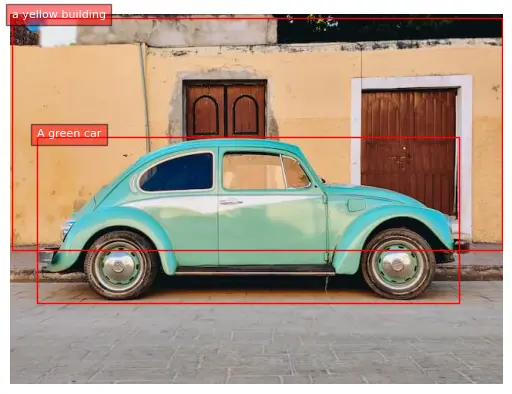

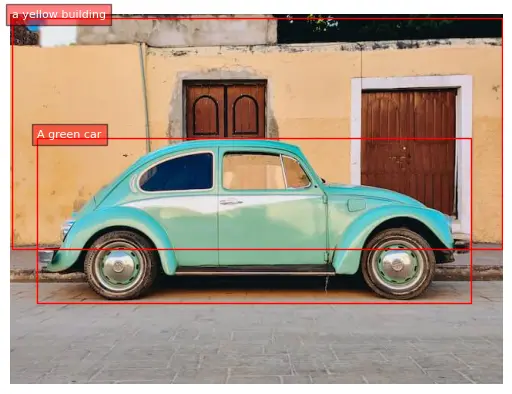

Phrase Grounding

    task_prompt = '<CAPTION_TO_PHRASE_GROUNDING>'
    text_input = "A green car parked in front of a yellow building."
    t0 = time.time()
    answer = generate_answer(task_prompt, text_input)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    print(answer)
    plot_bbox(image, answer[task_prompt])

Referring expression segmentation

Since we are going to get segmentation masks, we create a function to draw them on the image.

    from PIL import Image, ImageDraw, ImageFont
    import random
    import numpy as np

    colormap = ['blue','orange','green','purple','brown','pink','gray','olive','cyan','red',
                'lime','indigo','violet','aqua','magenta','coral','gold','tan','skyblue']

    def draw_polygons(input_image, prediction, fill_mask=False):
        """
        Draws segmentation masks with polygons on an image.

        Parameters:
        - input_image: PIL image to draw on (a copy is made, the original is untouched).
        - prediction: Dictionary containing 'polygons' and 'labels' keys.
                      'polygons' is a list of lists, each containing vertices of a polygon.
                      'labels' is a list of labels corresponding to each polygon.
        - fill_mask: Boolean indicating whether to fill the polygons with color.
        """
        # Copy the input image to draw on
        image = copy.deepcopy(input_image)

        # Create the drawing context
        draw = ImageDraw.Draw(image)

        # Set up scale factor if needed (use 1 if not scaling)
        scale = 1

        # Iterate over polygons and labels
        for polygons, label in zip(prediction['polygons'], prediction['labels']):
            color = random.choice(colormap)
            fill_color = random.choice(colormap) if fill_mask else None

            for _polygon in polygons:
                _polygon = np.array(_polygon).reshape(-1, 2)
                if len(_polygon) < 3:
                    print('Invalid polygon:', _polygon)
                    continue

                _polygon = (_polygon * scale).reshape(-1).tolist()

                # Draw the polygon
                if fill_mask:
                    draw.polygon(_polygon, outline=color, fill=fill_color)
                else:
                    draw.polygon(_polygon, outline=color)

                # Draw the label text
                draw.text((_polygon[0] + 8, _polygon[1] + 2), label, fill=color)

        # Save or display the image
        #image.show()  # Display the image
        display(image)

    task_prompt = '<REFERRING_EXPRESSION_SEGMENTATION>'
    text_input = "a green car"
    t0 = time.time()
    answer = generate_answer(task_prompt, text_input)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    print(answer)
    draw_polygons(image, answer[task_prompt], fill_mask=True)

Region to segmentation

    task_prompt = '<REGION_TO_SEGMENTATION>'
    text_input = "<loc_702><loc_575><loc_866><loc_772>"
    t0 = time.time()
    answer = generate_answer(task_prompt, text_input)
    t1 = time.time()
    print(f"Time taken: {(t1-t0)*1000:.2f} ms")
    print(answer)
    draw_polygons(image, answer[task_prompt], fill_mask=True)
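The region in this prompt is written as four location tokens. As a rough illustration, the sketch below turns such a string back into pixel coordinates. It assumes that each axis is split into 1000 equal bins and that an index decodes to the center of its bin; the helper name and the image size in the example are made up.

```python
import re


def loc_tokens_to_box(text, image_size, bins=1000):
    """Convert '<loc_a><loc_b><loc_c><loc_d>' into pixel (x1, y1, x2, y2).

    Assumes `bins` equal bins per axis and decodes each index to the
    center of its bin (both are assumptions of this sketch).
    """
    indices = [int(m) for m in re.findall(r"<loc_(\d+)>", text)]
    if len(indices) != 4:
        raise ValueError("expected exactly four <loc_*> tokens")
    width, height = image_size
    sizes = (width, height, width, height)
    return tuple((i + 0.5) * s / bins for i, s in zip(indices, sizes))


# image_size here is a made-up value, not the size of the car image.
print(loc_tokens_to_box("<loc_702><loc_575><loc_866><loc_772>", (1000, 1000)))
```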

Open vocabulary detection

Since we will get dictionaries with bounding boxes along with their labels, we create a function that reformats the data so that we can reuse the function that draws bounding boxes.

    def convert_to_od_format(data):
        """
        Converts a dictionary with 'bboxes' and 'bboxes_labels' into a dictionary with separate 'bboxes' and 'labels' keys.

        Parameters:
        - data: The input dictionary with 'bboxes', 'bboxes_labels', 'polygons', and 'polygons_labels' keys.

        Returns:
        - A dictionary with 'bboxes' and 'labels' keys formatted for object detection results.
        """
        # Extract bounding boxes and labels
        bboxes = data.get('bboxes', [])
        labels = data.get('bboxes_labels', [])

        # Construct the output format
        od_results = {
            'bboxes': bboxes,
            'labels': labels
        }

        return od_results

```python
task_prompt = '<OPEN_VOCABULARY_DETECTION>'
text_input = "a green car"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
bbox_results = convert_to_od_format(answer[task_prompt])
plot_bbox(image, bbox_results)
```

Region to category

```python
task_prompt = '<REGION_TO_CATEGORY>'
text_input = "<loc_52><loc_332><loc_932><loc_774>"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
```

```
Time taken: 284.60 ms
Time taken: 491.69 ms
Time taken: 1011.38 ms
Time taken: 439.41 ms
{'<REGION_PROPOSAL>': {'bboxes': [[33.599998474121094, 159.59999084472656, 596.7999877929688, 371.7599792480469], [454.0799865722656, 96.23999786376953, 580.7999877929688, 261.8399963378906], [449.5999755859375, 276.239990234375, 554.5599975585938, 370.3199768066406], [91.19999694824219, 280.0799865722656, 198.0800018310547, 370.3199768066406], [224.3199920654297, 85.19999694824219, 333.7599792480469, 164.39999389648438], [274.239990234375, 178.8000030517578, 392.0, 228.239990234375], [165.44000244140625, 178.8000030517578, 264.6399841308594, 230.63999938964844]], 'labels': ['', '', '', '', '', '', '']}}
Time taken: 385.74 ms
{'<OD>': {'bboxes': [[33.599998474121094, 159.59999084472656, 596.7999877929688, 371.7599792480469], [454.0799865722656, 96.23999786376953, 580.7999877929688, 261.8399963378906], [224.95999145507812, 86.15999603271484, 333.7599792480469, 164.39999389648438], [449.5999755859375, 276.239990234375, 554.5599975585938, 370.3199768066406], [91.19999694824219, 280.0799865722656, 198.0800018310547, 370.3199768066406]], 'labels': ['car', 'door', 'door', 'wheel', 'wheel']}}
Time taken: 434.88 ms
{'<DENSE_REGION_CAPTION>': {'bboxes': [[33.599998474121094, 159.59999084472656, 596.7999877929688, 371.7599792480469], [454.0799865722656, 96.72000122070312, 580.1599731445312, 261.8399963378906], [449.5999755859375, 276.239990234375, 554.5599975585938, 370.79998779296875], [91.83999633789062, 280.0799865722656, 198.0800018310547, 370.79998779296875], [224.95999145507812, 86.15999603271484, 333.7599792480469, 164.39999389648438]], 'labels': ['turquoise Volkswagen Beetle', 'wooden double doors with metal handles', 'wheel', 'wheel', 'door']}}
Time taken: 327.24 ms
{'<CAPTION_TO_PHRASE_GROUNDING>': {'bboxes': [[34.23999786376953, 159.1199951171875, 582.0800170898438, 374.6399841308594], [1.5999999046325684, 4.079999923706055, 639.0399780273438, 305.03997802734375]], 'labels': ['A green car', 'a yellow building']}}
Time taken: 4854.74 ms
{'<REFERRING_EXPRESSION_SEGMENTATION>': {'polygons': [[[180.8000030517578, 180.72000122070312, 182.72000122070312, 180.72000122070312, ..., 178.87998962402344, 181.67999267578125, 180.8000030517578, 179.75999450683594]]], 'labels': ['']}}
Time taken: 1246.26 ms
{'<REGION_TO_SEGMENTATION>': {'polygons': [[[468.79998779296875, 288.239990234375, 472.6399841308594, 285.3599853515625, ..., 462.3999938964844, 293.5199890136719, 465.5999755859375, 289.1999816894531]]], 'labels': ['']}}
Time taken: 256.23 ms
{'<OPEN_VOCABULARY_DETECTION>': {'bboxes': [[34.23999786376953, 158.63999938964844, 582.0800170898438, 374.1600036621094]], 'bboxes_labels': ['a green car'], 'polygons': [], 'polygons_labels': []}}
Time taken: 231.91 ms
{'<REGION_TO_CATEGORY>': 'car<loc_52><loc_332><loc_932><loc_774>'}
```

Region to description

```python
task_prompt = '<REGION_TO_DESCRIPTION>'
text_input = "<loc_52><loc_332><loc_932><loc_774>"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
```

```
Time taken: 269.62 ms
{'<REGION_TO_DESCRIPTION>': 'turquoise Volkswagen Beetle<loc_52><loc_332><loc_932><loc_774>'}
```

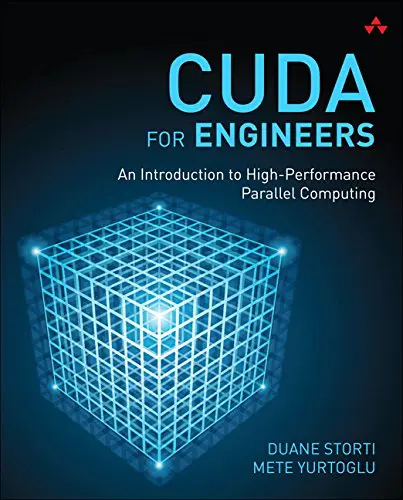
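The region tasks return the label followed by the same `<loc_…>` tokens that were passed in. The sketch below splits such a string into a label and a pixel box. It is not part of the original notebook: `parse_region_answer` is a hypothetical helper, the 1000-bin quantization with bin-centre decoding is an assumption inferred from the boxes printed in this notebook, and the 640x480 image size is likewise inferred rather than stated.

```python
import re

# Assumption: each axis is quantized into 1000 bins and <loc_k> decodes to the
# centre of bin k. This is inferred from the printed boxes, not from the docs.
NUM_BINS = 1000


def parse_region_answer(answer_text, image_width, image_height):
    """Split 'label<loc_a><loc_b><loc_c><loc_d>' into (label, pixel_box)."""
    locs = [int(v) for v in re.findall(r"<loc_(\d+)>", answer_text)]
    label = re.sub(r"<loc_\d+>", "", answer_text).strip()
    if len(locs) != 4:
        # No complete box in the string: return the text only
        return label, None
    x1, y1, x2, y2 = locs
    sx = image_width / NUM_BINS
    sy = image_height / NUM_BINS
    box = [(x1 + 0.5) * sx, (y1 + 0.5) * sy, (x2 + 0.5) * sx, (y2 + 0.5) * sy]
    return label, box


# 640x480 is an assumed image size for the car picture
label, box = parse_region_answer(
    "turquoise Volkswagen Beetle<loc_52><loc_332><loc_932><loc_774>", 640, 480
)
print(label, [round(v, 2) for v in box])
```

Under these assumptions the decoded box is approximately `[33.6, 159.6, 596.8, 371.76]`, which agrees with the car box printed for the detection tasks.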

OCR tasks

We use a new image

```python
url = "http://ecx.images-amazon.com/images/I/51UUzBDAMsL.jpg?download=true"
image = Image.open(requests.get(url, stream=True).raw).convert('RGB')
image
```

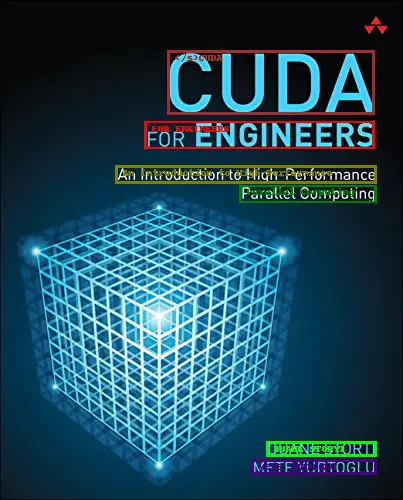

OCR

```python
task_prompt = '<OCR>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
```

```
Time taken: 424.52 ms
{'<OCR>': 'CUDAFOR ENGINEERSAn Introduction to High-PerformanceParallel ComputingDUANE STORTIMETE YURTOGLU'}
```

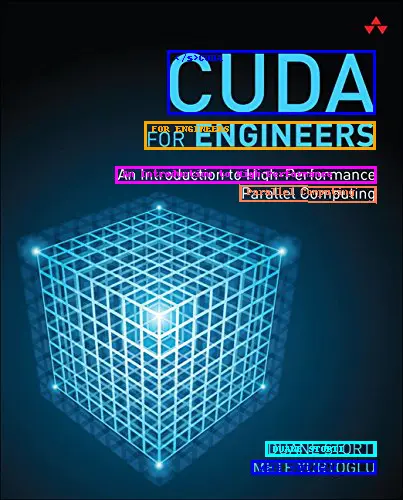

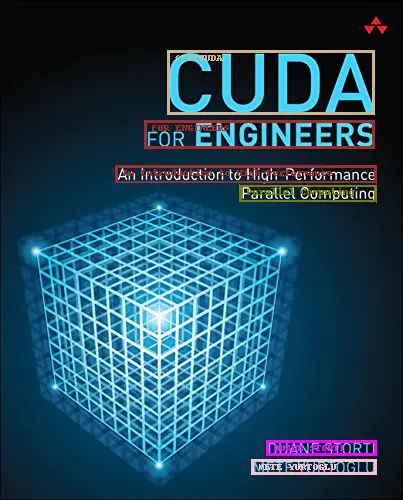

OCR with region

Since we will get the OCR text together with its regions, let's create a function to draw them on the image.

```python
def draw_ocr_bboxes(input_image, prediction):
    image = copy.deepcopy(input_image)
    scale = 1
    draw = ImageDraw.Draw(image)
    bboxes, labels = prediction['quad_boxes'], prediction['labels']
    for box, label in zip(bboxes, labels):
        color = random.choice(colormap)
        new_box = (np.array(box) * scale).tolist()
        draw.polygon(new_box, width=3, outline=color)
        draw.text((new_box[0]+8, new_box[1]+2),
                  "{}".format(label),
                  align="right",
                  fill=color)
    display(image)
```

```python
task_prompt = '<OCR_WITH_REGION>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
draw_ocr_bboxes(image, answer[task_prompt])
```
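The `<OCR_WITH_REGION>` result holds `quad_boxes` (eight numbers per text line, four corner points) and `labels`, and in the output printed in this notebook one label carries a stray `</s>` token. The sketch below shows one way to tidy this up. `quad_to_bbox` and `clean_ocr_prediction` are hypothetical helpers, not part of the model's API, and the sample dictionary uses rounded illustrative values.

```python
def quad_to_bbox(quad):
    """Turn [x1, y1, x2, y2, x3, y3, x4, y4] into [xmin, ymin, xmax, ymax]."""
    xs = quad[0::2]  # every x coordinate
    ys = quad[1::2]  # every y coordinate
    return [min(xs), min(ys), max(xs), max(ys)]


def clean_ocr_prediction(prediction):
    """Return (text, bbox) pairs with special tokens stripped from the text."""
    results = []
    for quad, label in zip(prediction["quad_boxes"], prediction["labels"]):
        text = label.replace("</s>", "").replace("<s>", "").strip()
        results.append((text, quad_to_bbox(quad)))
    return results


# Illustrative sample shaped like the '<OCR_WITH_REGION>' result (rounded values)
sample = {
    "quad_boxes": [[167.0, 50.25, 375.8, 50.25, 375.8, 114.75, 167.0, 114.75]],
    "labels": ["</s>CUDA"],
}
print(clean_ocr_prediction(sample))
```

The axis-aligned boxes produced this way have the same `[x1, y1, x2, y2]` layout as the detection results, so they could be passed to `plot_bbox` after being wrapped in a `{'bboxes': ..., 'labels': ...}` dictionary.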

Using Florence-2 large fine-tuned

We create the model and the processor

```python
model_id = 'microsoft/Florence-2-large-ft'
model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().to('cuda')
processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)
```

We load the car image again.

```python
url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"
image = Image.open(requests.get(url, stream=True).raw)
image
```

Tasks without additional prompt

Caption

```python
task_prompt = '<CAPTION>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
answer
```

```python
task_prompt = '<DETAILED_CAPTION>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
answer
```

```python
task_prompt = '<MORE_DETAILED_CAPTION>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
answer
```

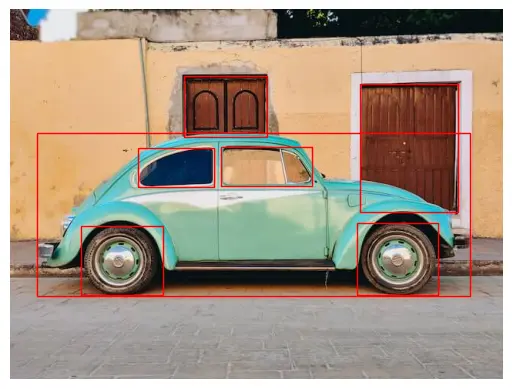
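The three caption cells repeat the same timing pattern, which could be wrapped in a small helper. The following is a minimal sketch, not part of the original notebook: `timed_call`, `run_tasks` and `fake_generate` are hypothetical names, and `fake_generate` only stands in for `generate_answer` so the example runs without a GPU.

```python
import time


def timed_call(fn, *args, **kwargs):
    """Run fn(*args, **kwargs) and return (result, elapsed time in ms)."""
    t0 = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed_ms = (time.perf_counter() - t0) * 1000
    return result, elapsed_ms


def run_tasks(generate, task_prompts):
    """Call generate(task_prompt) for each prompt, collecting answers and timings."""
    answers, timings = {}, {}
    for task_prompt in task_prompts:
        answers[task_prompt], timings[task_prompt] = timed_call(generate, task_prompt)
    return answers, timings


# Stand-in for generate_answer (hypothetical, returns a placeholder answer)
def fake_generate(task_prompt):
    return {task_prompt: "placeholder"}


answers, timings = run_tasks(
    fake_generate, ["<CAPTION>", "<DETAILED_CAPTION>", "<MORE_DETAILED_CAPTION>"]
)
for task_prompt, ms in timings.items():
    print(f"{task_prompt}: {ms:.2f} ms")
```

In the notebook, `generate_answer` could be passed in place of `fake_generate`. `time.perf_counter` is used here instead of `time.time` because it is intended for measuring short intervals.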

Region proposal

This is object detection, but in this case it does not return the object classes.

```python
task_prompt = '<REGION_PROPOSAL>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
plot_bbox(image, answer[task_prompt])
```

Object detection

In this case, it returns the object classes.

```python
task_prompt = '<OD>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
plot_bbox(image, answer[task_prompt])
```
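Because the `<OD>` result is a dictionary with parallel `bboxes` and `labels` lists, it can be filtered before plotting. The sketch below keeps only the classes of interest. `filter_by_label` is a hypothetical helper, and the sample uses rounded illustrative values in the same shape as the notebook's output.

```python
def filter_by_label(od_result, wanted_labels):
    """Keep only the boxes whose label is in wanted_labels."""
    wanted = set(wanted_labels)
    kept = [
        (bbox, label)
        for bbox, label in zip(od_result["bboxes"], od_result["labels"])
        if label in wanted
    ]
    return {
        "bboxes": [bbox for bbox, _ in kept],
        "labels": [label for _, label in kept],
    }


# Illustrative sample shaped like answer['<OD>'] (rounded values)
sample = {
    "bboxes": [
        [33.6, 159.6, 596.8, 371.76],
        [454.08, 96.24, 580.8, 261.84],
        [449.6, 276.24, 554.56, 370.32],
    ],
    "labels": ["car", "door", "wheel"],
}
print(filter_by_label(sample, ["car", "wheel"]))
```

The returned dictionary keeps the `bboxes`/`labels` layout, so it could be handed to `plot_bbox` unchanged.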

Dense region caption

```python
task_prompt = '<DENSE_REGION_CAPTION>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
plot_bbox(image, answer[task_prompt])
```

Tasks with additional prompts

Phrase Grounding

```python
task_prompt = '<CAPTION_TO_PHRASE_GROUNDING>'
text_input = "A green car parked in front of a yellow building."
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
plot_bbox(image, answer[task_prompt])
```
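The same phrase grounding prompt is run with both checkpoints in this notebook, and the printed boxes for 'A green car' differ slightly. One way to quantify how close two boxes are is intersection over union (IoU). The sketch below is an illustration only: `box_iou` is a hypothetical helper, and the two boxes are rounded from the outputs printed in this notebook.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two [x1, y1, x2, y2] boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clipped at zero when boxes are disjoint
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# 'A green car' boxes, rounded from the two printed outputs
base_box = [34.24, 159.12, 582.08, 374.64]       # Florence-2-large
finetuned_box = [34.88, 159.60, 598.72, 374.64]  # Florence-2-large-ft
print(f"IoU: {box_iou(base_box, finetuned_box):.3f}")
```

An IoU close to 1 means the two checkpoints localize the phrase in nearly the same place.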

Referring expression segmentation

```python
task_prompt = '<REFERRING_EXPRESSION_SEGMENTATION>'
text_input = "a green car"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
draw_polygons(image, answer[task_prompt], fill_mask=True)
```
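The segmentation result stores each polygon as a flat list `[x1, y1, x2, y2, ...]`, as `draw_polygons` assumes when it reshapes to pairs. A quick sanity check on such a mask is its area, which the shoelace formula gives directly. This is a sketch under the assumption that polygons are simple (not self-intersecting) and do not overlap each other. `polygon_area` and `mask_area` are hypothetical helpers.

```python
def polygon_area(flat_polygon):
    """Area of a polygon given as [x1, y1, x2, y2, ...] (shoelace formula)."""
    xs = flat_polygon[0::2]
    ys = flat_polygon[1::2]
    n = len(xs)
    if n < 3:
        # Fewer than three vertices cannot enclose any area
        return 0.0
    twice_area = 0.0
    for i in range(n):
        j = (i + 1) % n  # next vertex, wrapping around to the first
        twice_area += xs[i] * ys[j] - xs[j] * ys[i]
    return abs(twice_area) / 2.0


def mask_area(prediction):
    """Sum the areas of every polygon in a {'polygons': ..., 'labels': ...} result."""
    return sum(
        polygon_area(polygon)
        for polygons in prediction["polygons"]
        for polygon in polygons
    )


# Illustrative sample: one object made of a single 4x3 rectangle
sample = {"polygons": [[[0, 0, 4, 0, 4, 3, 0, 3]]], "labels": [""]}
print(mask_area(sample))
```

Dividing the result by `image.width * image.height` would give the fraction of the image covered by the mask.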

Region to segmentation

```python
task_prompt = '<REGION_TO_SEGMENTATION>'
text_input = "<loc_702><loc_575><loc_866><loc_772>"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
draw_polygons(image, answer[task_prompt], fill_mask=True)
```
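The region prompt above is written by hand as `<loc_…>` tokens. The sketch below shows how such a string might be built from a pixel box. It is an assumption-laden illustration: `box_to_loc_tokens` is a hypothetical helper, the 1000-bin quantization is inferred from the outputs in this notebook, and 640x480 is an assumed image size. The example box is rounded from one of the wheel boxes printed by the dense region caption task.

```python
# Assumption: each axis is split into 1000 equal bins (inferred, not documented here)
NUM_BINS = 1000


def box_to_loc_tokens(box, image_width, image_height):
    """Quantize a pixel box [x1, y1, x2, y2] into a '<loc_a><loc_b><loc_c><loc_d>' string."""
    x1, y1, x2, y2 = box

    def to_bin(value, size):
        index = int(value * NUM_BINS / size)
        # Clamp so coordinates on or beyond the border stay within valid bins
        return max(0, min(NUM_BINS - 1, index))

    bins = [
        to_bin(x1, image_width),
        to_bin(y1, image_height),
        to_bin(x2, image_width),
        to_bin(y2, image_height),
    ]
    return "".join(f"<loc_{b}>" for b in bins)


# Wheel box rounded from the printed output; 640x480 is an assumed image size
print(box_to_loc_tokens([449.6, 276.24, 554.56, 370.8], 640, 480))
```

Under these assumptions the helper reproduces the `text_input` used in the cell above, so a box returned by a detection task could be fed back as a region prompt.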

Open vocabulary detection

```python
task_prompt = '<OPEN_VOCABULARY_DETECTION>'
text_input = "a green car"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
bbox_results = convert_to_od_format(answer[task_prompt])
plot_bbox(image, bbox_results)
```

Region to category

```python
task_prompt = '<REGION_TO_CATEGORY>'
text_input = "<loc_52><loc_332><loc_932><loc_774>"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)
```

Time taken: 758.95 ms
{'<OCR_WITH_REGION>': {'quad_boxes': [[167.0435028076172, 50.25, 375.7974853515625, 50.25, 375.7974853515625, 114.75, 167.0435028076172, 114.75], [144.8784942626953, 120.75, 375.7974853515625, 120.75, 375.7974853515625, 149.25, 144.8784942626953, 149.25], [115.86249542236328, 165.25, 376.6034851074219, 166.25, 376.6034851074219, 184.25, 115.86249542236328, 183.25], [239.9864959716797, 184.25, 376.6034851074219, 186.25, 376.6034851074219, 204.25, 239.9864959716797, 202.25], [266.1814880371094, 441.25, 376.6034851074219, 441.25, 376.6034851074219, 456.25, 266.1814880371094, 456.25], [252.0764923095703, 460.25, 376.6034851074219, 460.25, 376.6034851074219, 475.25, 252.0764923095703, 475.25]], 'labels': ['</s>CUDA', 'FOR ENGINEERS', 'An Introduction to High-Performance', 'Parallel Computing', 'DUANE STORTI', 'METE YURTOGLU']}}
Time taken: 292.35 ms
Time taken: 437.06 ms
Time taken: 779.38 ms
Time taken: 255.08 ms
{'<REGION_PROPOSAL>': {'bboxes': [[34.880001068115234, 161.0399932861328, 596.7999877929688, 370.79998779296875]], 'labels': ['']}}
Time taken: 245.54 ms
{'<OD>': {'bboxes': [[34.880001068115234, 161.51998901367188, 596.7999877929688, 370.79998779296875]], 'labels': ['car']}}
Time taken: 282.75 ms
{'<DENSE_REGION_CAPTION>': {'bboxes': [[34.880001068115234, 161.51998901367188, 596.7999877929688, 370.79998779296875]], 'labels': ['turquoise Volkswagen Beetle']}}
Time taken: 305.79 ms
{'<CAPTION_TO_PHRASE_GROUNDING>': {'bboxes': [[34.880001068115234, 159.59999084472656, 598.719970703125, 374.6399841308594], [1.5999999046325684, 4.079999923706055, 639.0399780273438, 304.0799865722656]], 'labels': ['A green car', 'a yellow building']}}
Time taken: 745.87 ms
{'<REFERRING_EXPRESSION_SEGMENTATION>': {'polygons': [[[178.239990234375, 184.0800018310547, 256.32000732421875, 161.51998901367188, 374.7200012207031, 170.63999938964844, 408.0, 220.0800018310547, 480.9599914550781, 225.36000061035156, 539.2000122070312, 247.9199981689453, 573.760009765625, 266.6399841308594, 575.6799926757812, 289.1999816894531, 598.0800170898438, 293.5199890136719, 596.1599731445312, 309.8399963378906, 576.9599609375, 309.8399963378906, 576.9599609375, 321.3599853515625, 554.5599975585938, 322.32000732421875, 547.5199584960938, 354.47998046875, 525.1199951171875, 369.8399963378906, 488.0, 369.8399963378906, 463.67999267578125, 354.47998046875, 453.44000244140625, 332.8800048828125, 446.3999938964844, 340.0799865722656, 205.1199951171875, 340.0799865722656, 196.1599884033203, 334.79998779296875, 182.0800018310547, 361.67999267578125, 148.8000030517578, 370.79998779296875, 121.27999877929688, 369.8399963378906, 98.87999725341797, 349.1999816894531, 93.75999450683594, 332.8800048828125, 64.31999969482422, 339.1199951171875, 41.91999816894531, 334.79998779296875, 48.959999084472656, 326.6399841308594, 36.79999923706055, 321.3599853515625, 34.880001068115234, 303.6000061035156, 66.23999786376953, 301.67999267578125, 68.15999603271484, 289.1999816894531, 68.15999603271484, 268.55999755859375, 81.5999984741211, 263.2799987792969, 116.15999603271484, 227.27999877929688]]], 'labels': ['']}}
Time taken: 358.71 ms
{'<REGION_TO_SEGMENTATION>': {'polygons': [[[468.1600036621094, 292.0799865722656, 495.67999267578125, 276.239990234375, 523.2000122070312, 279.6000061035156, 546.8800048828125, 297.8399963378906, 555.8399658203125, 324.7200012207031, 548.7999877929688, 351.6000061035156, 529.5999755859375, 369.3599853515625, 493.7599792480469, 371.7599792480469, 468.1600036621094, 359.2799987792969, 449.5999755859375, 334.79998779296875]]], 'labels': ['']}}
Time taken: 245.96 ms
{'<OPEN_VOCABULARY_DETECTION>': {'bboxes': [[34.880001068115234, 159.59999084472656, 598.719970703125, 374.6399841308594]], 'bboxes_labels': ['a green car'], 'polygons': [], 'polygons_labels': []}}
Time taken: 246.42 ms
{'<REGION_TO_CATEGORY>': 'car<loc_52><loc_332><loc_932><loc_774>'}
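The region-to-category answer comes back as a single string: the category followed by the location tokens of the region that was queried. If we want the label on its own, a small parser is enough. The following is only a sketch; the function name `split_label_and_locs` is hypothetical and not part of the Florence-2 code, and it assumes the output always has the `label<loc_N>...` shape seen above.

```python
import re

# Assumption: the answer is a free-text label followed by <loc_N> tokens,
# as in the '<REGION_TO_CATEGORY>' output printed above.
LOC_PATTERN = re.compile(r"<loc_(\d+)>")

def split_label_and_locs(text):
    """Return (label, [loc values]) from a string like 'car<loc_52><loc_332>...'."""
    locs = [int(value) for value in LOC_PATTERN.findall(text)]
    label = LOC_PATTERN.sub("", text).strip()
    return label, locs

label, locs = split_label_and_locs("car<loc_52><loc_332><loc_932><loc_774>")
print(label, locs)  # car [52, 332, 932, 774]
```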

Region to description

task_prompt = '<REGION_TO_DESCRIPTION>'
text_input = "<loc_52><loc_332><loc_932><loc_774>"
t0 = time.time()
answer = generate_answer(task_prompt, text_input)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)

Time taken: 280.67 ms
{'<REGION_TO_DESCRIPTION>': 'turquoise Volkswagen Beetle<loc_52><loc_332><loc_932><loc_774>'}
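The region tasks take the region as a string of `<loc_N>` tokens instead of pixel coordinates. As far as I understand from the Florence-2 paper, coordinates are quantized into 1000 bins relative to the image size. The sketch below builds such a string from a pixel box under that assumption; `box_to_loc_tokens` is a hypothetical helper, and the 640x480 image size in the example is also an assumption (check `image.size` for the real value).

```python
def box_to_loc_tokens(box, image_width, image_height, bins=1000):
    """Convert a pixel box (x1, y1, x2, y2) into a '<loc_N>' string.

    Assumption: each coordinate is normalised by the image size and
    mapped to one of `bins` integer bins (0 .. bins - 1).
    """
    x1, y1, x2, y2 = box
    sizes = (image_width, image_height, image_width, image_height)
    tokens = []
    for value, size in zip((x1, y1, x2, y2), sizes):
        index = int(value / size * bins)
        index = max(0, min(bins - 1, index))  # keep the index inside the valid range
        tokens.append(f"<loc_{index}>")
    return "".join(tokens)

# Box of the car as returned by '<OD>', rounded, on an assumed 640x480 image
print(box_to_loc_tokens((34.88, 161.52, 596.8, 370.8), 640, 480))
# <loc_54><loc_336><loc_932><loc_772>
```

The result is close to the region string typed by hand in the cells above, which suggests the convention is at least roughly right.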

OCR tasks

We use a new image

url = "http://ecx.images-amazon.com/images/I/51UUzBDAMsL.jpg?download=true"

image = Image.open(requests.get(url, stream=True).raw).convert('RGB')

image

OCR

task_prompt = '<OCR>'
t0 = time.time()
answer = generate_answer(task_prompt)
t1 = time.time()
print(f"Time taken: {(t1-t0)*1000:.2f} ms")
print(answer)

Time taken: 444.77 ms
{'<OCR>': 'CUDAFOR ENGINEERSAn Introduction to High-PerformanceParallel ComputingDUANE STORTIMETE YURTOGLU'}

OCR with region

task_prompt = '<OCR_WITH_REGION>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

draw_ocr_bboxes(image, answer[task_prompt])
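Each entry in `quad_boxes` is a flat list of eight numbers, that is, the four corners of the text region as x, y pairs. If we need an ordinary axis-aligned box (for cropping, for example), we can take the minimum and maximum of each coordinate. A minimal sketch, with `quad_to_bbox` being a hypothetical helper name:

```python
def quad_to_bbox(quad):
    """Turn [x1, y1, x2, y2, x3, y3, x4, y4] into [x_min, y_min, x_max, y_max]."""
    if len(quad) != 8:
        raise ValueError("expected 8 values: four (x, y) corners")
    xs = quad[0::2]  # every other value starting at 0: the x coordinates
    ys = quad[1::2]  # every other value starting at 1: the y coordinates
    return [min(xs), min(ys), max(xs), max(ys)]

# A slightly tilted quad taken from the '<OCR_WITH_REGION>' output of this notebook
quad = [115.86249542236328, 165.25, 376.6034851074219, 166.25,
        376.6034851074219, 184.25, 115.86249542236328, 183.25]
print(quad_to_bbox(quad))
# [115.86249542236328, 165.25, 376.6034851074219, 184.25]
```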

Using Florence-2 base

We create the model and the processor

model_id = 'microsoft/Florence-2-base'
model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True).eval().to('cuda')
processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

We load the car image again

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"

image = Image.open(requests.get(url, stream=True).raw)

image

Tasks without an additional prompt

Caption

task_prompt = '<CAPTION>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

answer

task_prompt = '<DETAILED_CAPTION>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

answer

task_prompt = '<MORE_DETAILED_CAPTION>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

answer
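Every cell repeats the same `t0`/`t1` timing pattern. One possible way to avoid the repetition is a small wrapper that times any call and returns the result together with the elapsed milliseconds. This is only a sketch: `timed_call` is a hypothetical helper, and it uses `time.perf_counter` instead of `time.time` because it is the clock intended for measuring short intervals.

```python
import time

def timed_call(func, *args, **kwargs):
    """Call func(*args, **kwargs), print the elapsed time and return (result, ms)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(f"Time taken: {elapsed_ms:.2f} ms")
    return result, elapsed_ms

# Stand-in example with a built-in function; in the notebook it would be
# something like: answer, _ = timed_call(generate_answer, '<CAPTION>')
total, elapsed_ms = timed_call(sum, [1, 2, 3])
print(total)  # 6
```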

Region proposal

This is object detection, but in this case it does not return the object classes.

task_prompt = '<REGION_PROPOSAL>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

plot_bbox(image, answer[task_prompt])

Object detection

In this case, it returns the object classes.

task_prompt = '<OD>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

plot_bbox(image, answer[task_prompt])

Dense region caption

task_prompt = '<DENSE_REGION_CAPTION>'

t0 = time.time()

answer = generate_answer(task_prompt)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

plot_bbox(image, answer[task_prompt])

Tasks with additional prompts

Phrase Grounding

task_prompt = '<CAPTION_TO_PHRASE_GROUNDING>'

text_input="A green car parked in front of a yellow building."

t0 = time.time()

answer = generate_answer(task_prompt, text_input)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

plot_bbox(image, answer[task_prompt])

Referring expression segmentation

task_prompt = '<REFERRING_EXPRESSION_SEGMENTATION>'

text_input="a green car"

t0 = time.time()

answer = generate_answer(task_prompt, text_input)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

draw_polygons(image, answer[task_prompt], fill_mask=True)
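The segmentation tasks return each polygon as a flat list `[x1, y1, x2, y2, ...]`. To work with it outside `draw_polygons`, for example to estimate the area of the mask, we can pair the values into points and apply the shoelace formula. The helpers below are hypothetical and only a sketch; they assume a simple (non self-intersecting) polygon.

```python
def flat_polygon_to_points(flat):
    """Pair a flat [x1, y1, x2, y2, ...] list into [(x1, y1), (x2, y2), ...]."""
    if len(flat) % 2 != 0:
        raise ValueError("a flat polygon needs an even number of values")
    return list(zip(flat[0::2], flat[1::2]))

def polygon_area(points):
    """Area of a simple polygon in square pixels (shoelace formula)."""
    area = 0.0
    count = len(points)
    for i in range(count):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % count]  # wrap around to close the polygon
        area += x1 * y2 - x2 * y1
    return abs(area) / 2

# Toy example: a 4 x 3 rectangle
points = flat_polygon_to_points([0, 0, 4, 0, 4, 3, 0, 3])
print(points)                # [(0, 0), (4, 0), (4, 3), (0, 3)]
print(polygon_area(points))  # 12.0
```

With a real answer, the flat list would be something like `answer[task_prompt]['polygons'][0][0]`, following the nesting seen in the printed outputs.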

Region to segmentation

task_prompt = '<REGION_TO_SEGMENTATION>'

text_input="<loc_702><loc_575><loc_866><loc_772>"

t0 = time.time()

answer = generate_answer(task_prompt, text_input)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

draw_polygons(image, answer[task_prompt], fill_mask=True)

Open vocabulary detection

task_prompt = '<OPEN_VOCABULARY_DETECTION>'

text_input="a green car"

t0 = time.time()

answer = generate_answer(task_prompt, text_input)

t1 = time.time()

print(f"Time taken: {(t1-t0)*1000:.2f} ms")

print(answer)

bbox_results = convert_to_od_format(answer[task_prompt])

plot_bbox(image, bbox_results)
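The open vocabulary output uses the keys `bboxes` and `bboxes_labels`, while `plot_bbox` is called elsewhere with answers that have `bboxes` and `labels`, so a conversion step is needed. The sketch below shows what such a conversion plausibly looks like; it is named `convert_to_od_format_sketch` to make clear that it is an illustration and not necessarily the same as the `convert_to_od_format` used in this notebook.

```python
def convert_to_od_format_sketch(data):
    """Map an open vocabulary detection result to the object detection layout."""
    return {
        "bboxes": data.get("bboxes", []),
        "labels": data.get("bboxes_labels", []),
    }

# Result printed earlier in this notebook for the car image
ovd_result = {
    "bboxes": [[34.880001068115234, 159.59999084472656, 598.719970703125, 374.6399841308594]],
    "bboxes_labels": ["a green car"],
    "polygons": [],
    "polygons_labels": [],
}
print(convert_to_od_format_sketch(ovd_result))
```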

Region to category